Method and device for measuring pixel pitch of image sensor based on point-target image splicing technology

An image sensor pixel pitch measurement technology, applied in measurement devices, optical devices, instruments, etc. It addresses problems such as defocusing and achieves improved repeatability and reduced error by amortizing errors.

Embodiment Construction

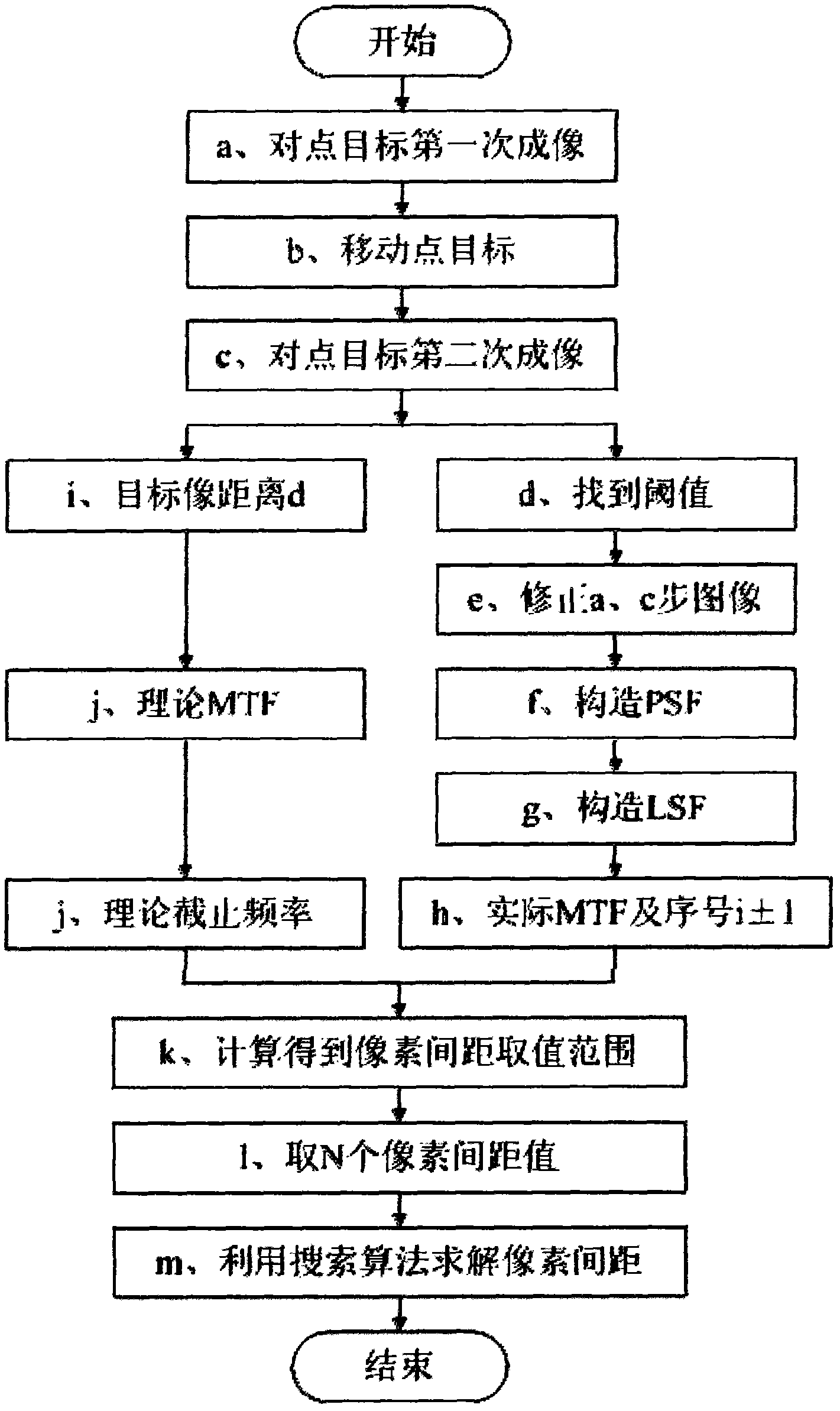

[0067] The specific embodiments of the present invention will be further described in detail below in conjunction with the accompanying drawings.

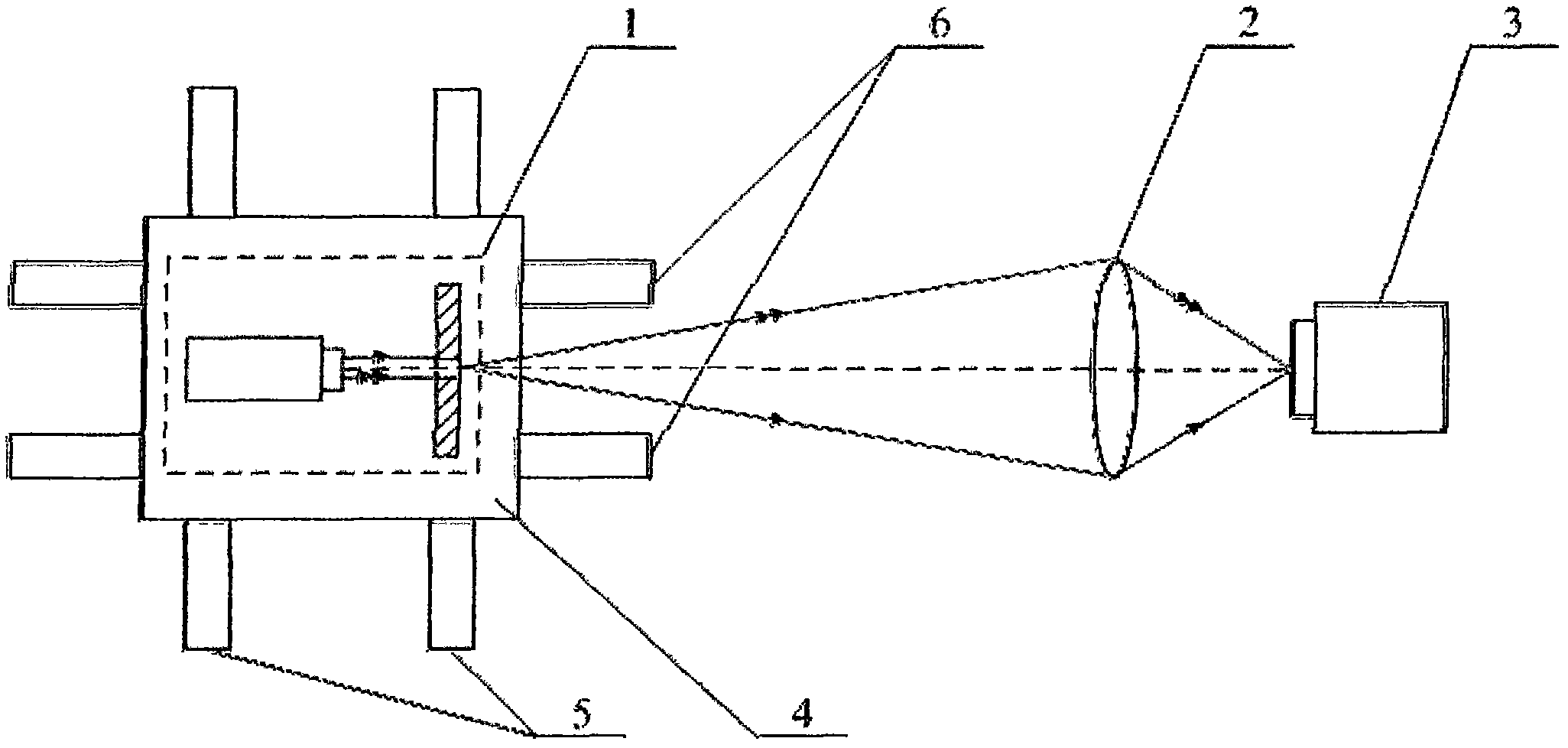

[0068] Figure 1 is a structural schematic diagram of an image sensor pixel pitch measuring device based on point-target image stitching. The device includes a point target 1, an optical system 2, an image sensor 3, a slider 4, and a first guide rail 5 perpendicular to the optical axis direction; the point target 1 is imaged onto the surface of the image sensor 3 through the optical system 2. The device also includes a second guide rail 6 along the optical axis direction. The slider 4 carrying the point target 1 is mounted on the first guide rail 5 and the second guide rail 6, and the movement of the slider 4 on the first guide rail 5 is coordinated with its movement on the second guide rail 6, so that the point target 1 is imaged in focus on the surface of the image sensor 3 at any posit...
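The excerpt above does not give the computation itself, so the following is only a minimal sketch of the general principle such a device could rely on, not the patented procedure. It assumes a known lateral displacement of the point target along the first guide rail, a known optical magnification, and an in-focus point image whose position is taken as the intensity-weighted centroid. The function names, the synthetic frames, and all numeric values are hypothetical, and the image stitching step named in the title is not modeled.

```python
import math


def centroid(img):
    """Return the intensity-weighted centroid (row, col) of a 2D frame, in pixels."""
    total = r_sum = c_sum = 0.0
    for r, row in enumerate(img):
        for c, value in enumerate(row):
            total += value
            r_sum += r * value
            c_sum += c * value
    if total == 0:
        raise ValueError("frame contains no signal")
    return r_sum / total, c_sum / total


def estimate_pixel_pitch(target_shift_mm, magnification, centroid_a, centroid_b):
    """Assumed relation: pitch = |magnification| * target shift / centroid shift in pixels."""
    shift_px = math.hypot(centroid_b[0] - centroid_a[0],
                          centroid_b[1] - centroid_a[1])
    if shift_px == 0:
        raise ValueError("point image did not move between frames")
    return abs(magnification) * target_shift_mm / shift_px


def make_frame(rows, cols, r, c):
    """Hypothetical synthetic frame: one bright pixel standing in for the point image."""
    frame = [[0.0] * cols for _ in range(rows)]
    frame[r][c] = 1.0
    return frame


# Hypothetical example: the target moves 1.0 mm, the optics magnify by 0.5,
# and the point image moves 100 pixels along a row.
frame_a = make_frame(16, 128, 5, 10)
frame_b = make_frame(16, 128, 5, 110)
pitch_mm = estimate_pixel_pitch(1.0, 0.5, centroid(frame_a), centroid(frame_b))
print(f"estimated pixel pitch: {pitch_mm * 1000:.3f} um")
```

Under these assumptions, a longer travel of the point image spreads a fixed centroid-location error over more pixels, which is one plausible reading of the "amortizing errors" effect mentioned in the summary.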
