Aggregated facial tracking in video

A video face-tracking technology in the field of image processing, which addresses problems such as the difficulty of tracking faces, face detector algorithms failing to detect some faces, and inaccuracy.

Examples

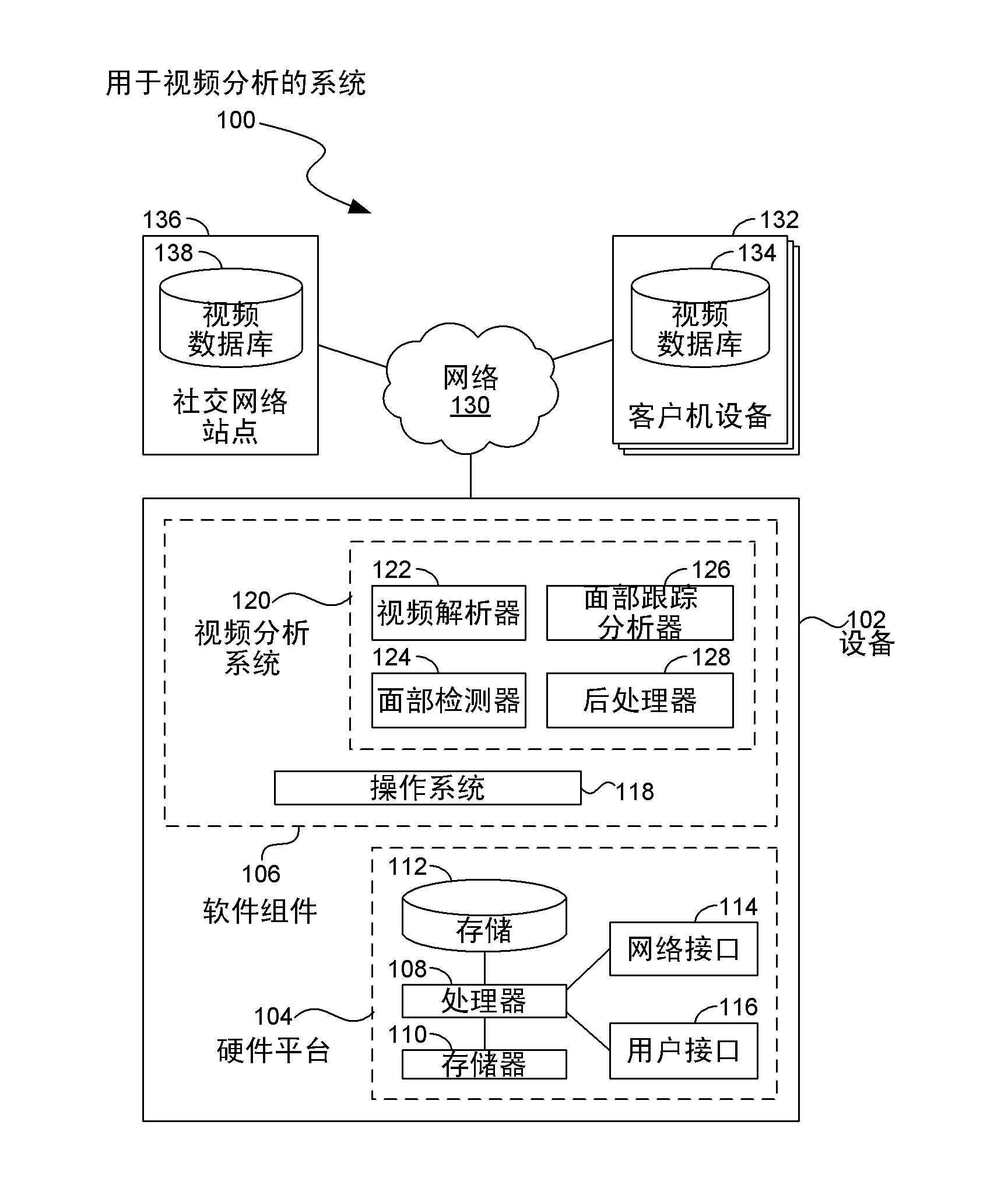

Embodiment 100

[0037] The system of embodiment 100 is shown contained within a single device 102. In many embodiments, various software components can be implemented on many different devices. In some cases, a single software component may be implemented on a cluster of computers. Certain embodiments may operate using cloud computing technology for one or more of these components.

[0038] The system of embodiment 100 is accessible by various client devices 132. Client devices 132 may access the system through a web browser or other application. In one such embodiment, the device 102 may be implemented as a web service that processes video in a cloud-based system. Such an embodiment may receive video images from various clients, process the video images in a large data center, and return the analyzed results to the client computer.

[0039] In another embodiment, the operations of device 102 may be performed by a personal computer, server computer, or other computing platfor...

Embodiment 200

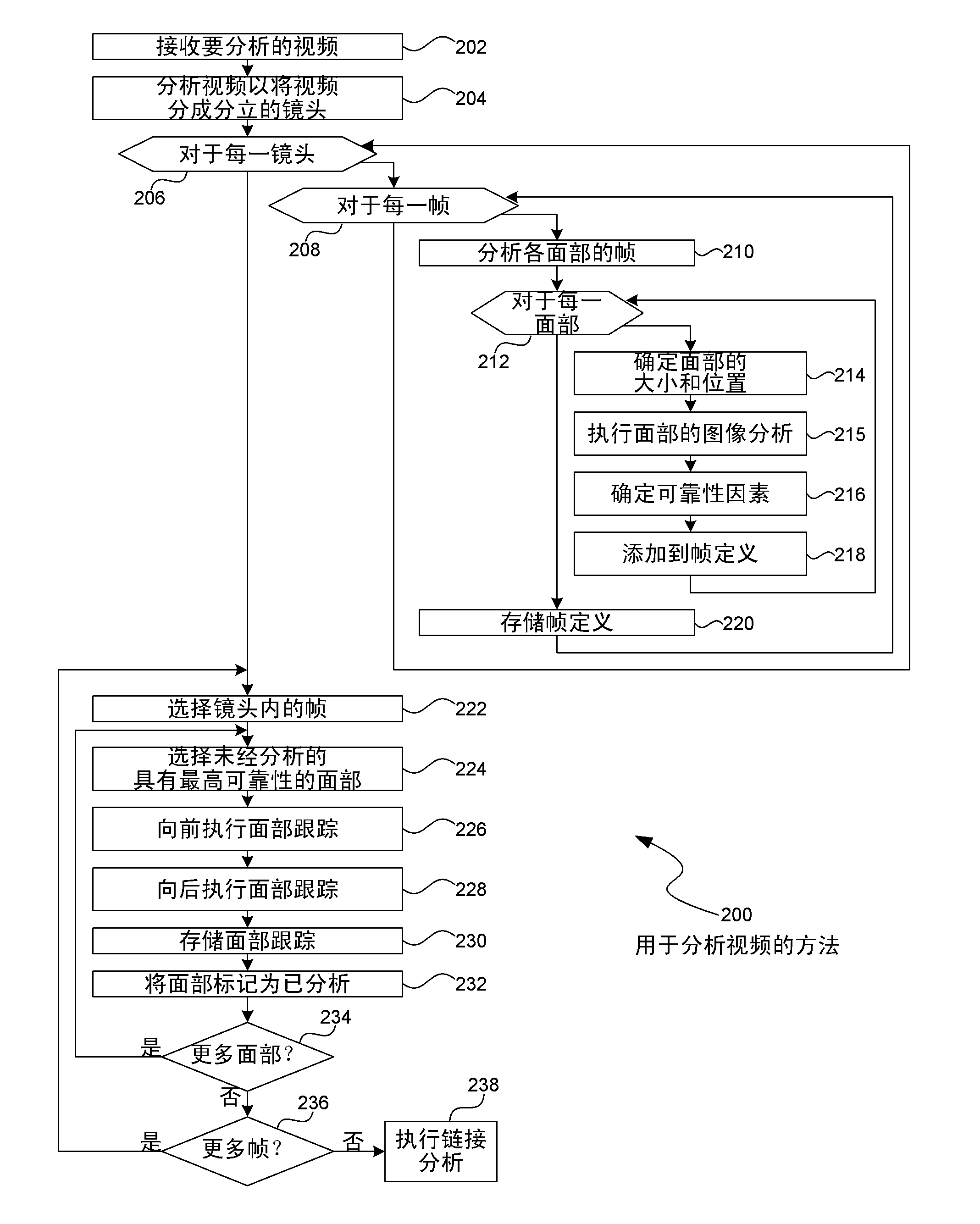

[0062] Embodiment 200 illustrates one method by which a video may be analyzed to create a track of faces in the video. After the video is split into shots, each shot can be analyzed on a frame-by-frame basis for static face detection. The frame-by-frame analysis results can then be used to link multiple frames together in order to show the movement or progression of a single face in the video.
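The linking step described in [0062] can be illustrated with a minimal sketch. The specification, as excerpted here, does not state how detections in adjacent frames are matched, so this example assumes a simple greedy match on bounding-box overlap (intersection-over-union). The names `iou`, `Track`, `link_detections`, and the `min_iou` threshold are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# A bounding box as (x, y, width, height) in pixels (assumed format).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    ix = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    iy = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = ix * iy
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    """One face followed across frames: a list of (frame_index, box) pairs."""
    detections: List[Tuple[int, Box]] = field(default_factory=list)


def link_detections(frames: List[List[Box]], min_iou: float = 0.3) -> List[Track]:
    """Greedily link per-frame face boxes into tracks (hypothetical criterion).

    Each detection in frame t joins the track whose box in frame t-1 overlaps
    it most, if that overlap is at least min_iou; otherwise it starts a new track.
    """
    tracks: List[Track] = []
    for t, boxes in enumerate(frames):
        # Only tracks that were extended on the previous frame can continue.
        open_tracks = [tr for tr in tracks if tr.detections[-1][0] == t - 1]
        for box in boxes:
            best, best_score = None, min_iou
            for tr in open_tracks:
                score = iou(tr.detections[-1][1], box)
                if score >= best_score:
                    best, best_score = tr, score
            if best is None:
                tracks.append(Track([(t, box)]))
            else:
                best.detections.append((t, box))
                open_tracks.remove(best)  # at most one detection per track per frame
    return tracks
```

With frames `[[(0, 0, 10, 10)], [(1, 1, 10, 10), (50, 50, 10, 10)], [(2, 2, 10, 10)]]`, the drifting box forms one three-frame track and the distant box forms a separate one-frame track. Practical trackers usually combine overlap with appearance or motion cues; greedy overlap matching is used here only because it is short and easy to check.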

[0063] At block 202, the video to be analyzed may be received. A video may be any type of video image consisting of a series or sequence of individual frames. At block 204, the video may be divided into discrete shots. Each shot may represent a single scene or a group of related frames. An example of a process that may be performed in block 204 is presented as embodiment 300 later in this specification.

[0064] At block 206, each shot may be analyzed. For each shot in block 206 and for each frame of each shot in block 208, the frame may be analyzed for each face in block 210. The analy...
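The nested iteration of blocks 206, 208, and 210 can be sketched as follows, assuming the video has already been split into shots and that a face detector is supplied as a callable. The `detect_faces` callback and the `analyze_shots` function are hypothetical stand-ins; the excerpt does not say what analysis block 210 performs beyond visiting each face, so this sketch only records where each face was found.

```python
from typing import Any, Callable, List, Sequence, Tuple

# A face bounding box as (x, y, width, height); the format is an assumption.
Box = Tuple[int, int, int, int]


def analyze_shots(
    shots: Sequence[Sequence[Any]],
    detect_faces: Callable[[Any], List[Box]],  # hypothetical static face detector
) -> List[List[Tuple[int, Box]]]:
    """For each shot, collect (frame_index, box) for every detected face."""
    results: List[List[Tuple[int, Box]]] = []
    for shot in shots:  # block 206: each shot
        detections: List[Tuple[int, Box]] = []
        for frame_index, frame in enumerate(shot):  # block 208: each frame of the shot
            for box in detect_faces(frame):  # block 210: each face in the frame
                detections.append((frame_index, box))
        results.append(detections)
    return results
```

Keeping results grouped per shot means that later linking never has to consider faces from different shots, which matches the shot-by-shot structure of embodiment 200.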

Embodiment 300

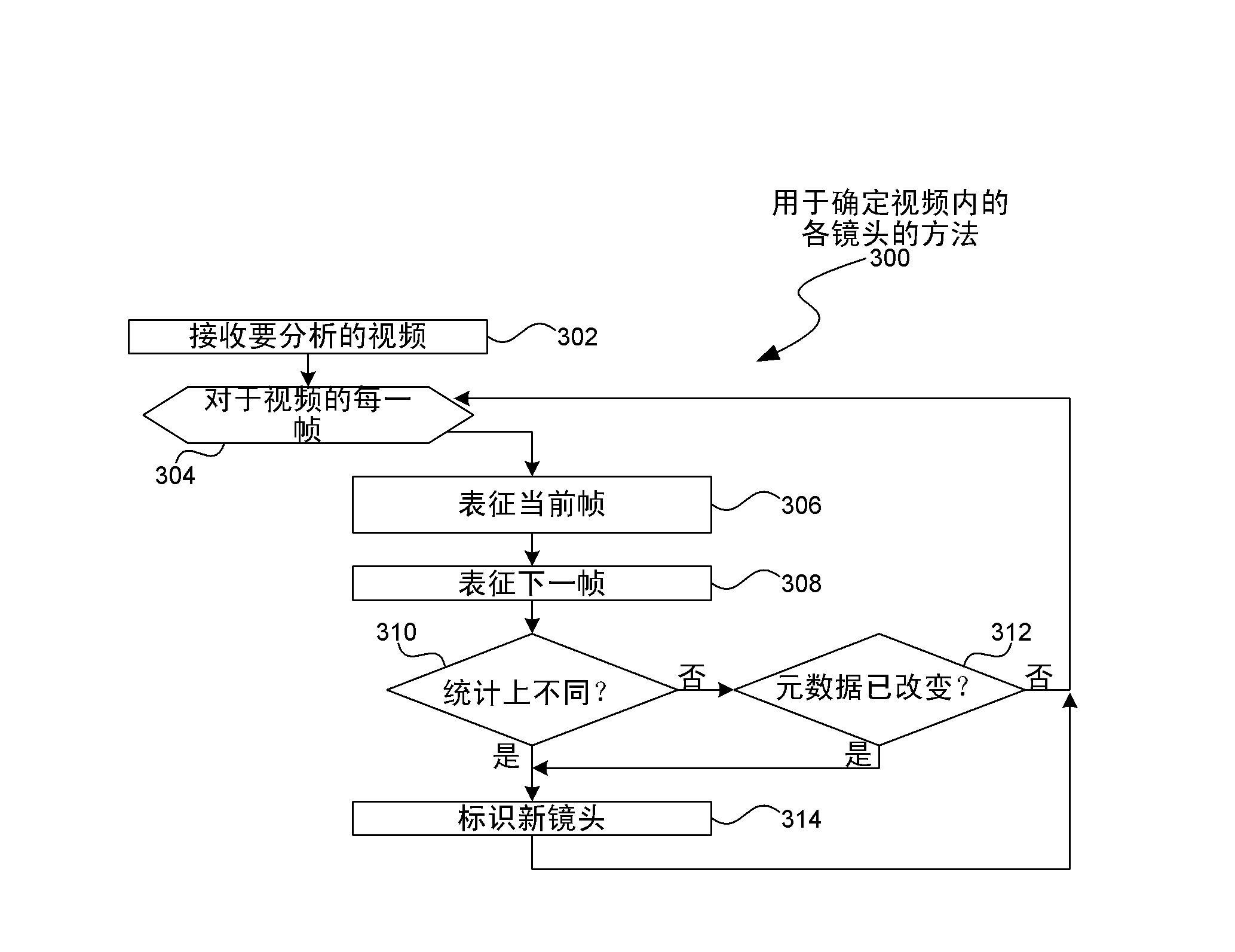

[0075] The method of embodiment 300 shows one example of how a video sequence may be divided into discrete shots. Each shot may be a sequence of similar frames which, for the purposes of face tracking, may contain the same face images.

[0076] Video to be analyzed may be received in block 302. For each frame in the video in block 304, the current frame may be characterized in block 306 and the next frame may be characterized in block 308. The representations of the frames may be compared in block 310 to determine whether the frames are statistically different. If the frames are not significantly different in block 310, the metadata associated with the frames may be compared in block 312 to determine whether the shot has changed. If not, the process returns to block 304 to process the next frame.
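A minimal sketch of the two-stage shot-change test of blocks 304 through 314, under stated assumptions: each frame is a flat list of 8-bit grayscale values, the characterization of blocks 306 and 308 is a normalized intensity histogram, the statistical comparison of block 310 is a total variation distance against a fixed threshold, and the metadata comparison of block 312 checks a hypothetical `clip_id` field. None of these choices come from the specification; they only illustrate the structure of a statistical test followed by a metadata test.

```python
from typing import Dict, List, Sequence


def characterize(frame: Sequence[int], bins: int = 16) -> List[float]:
    """Blocks 306/308 (assumed): normalized histogram of 8-bit grayscale pixels."""
    counts = [0] * bins
    for p in frame:
        counts[p * bins // 256] += 1
    total = len(frame) or 1  # avoid division by zero on an empty frame
    return [c / total for c in counts]


def histogram_distance(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Total variation distance in [0, 1]; 0 means identical histograms."""
    return 0.5 * sum(abs(a - b) for a, b in zip(h1, h2))


def is_shot_change(cur, nxt, cur_meta: Dict, nxt_meta: Dict, threshold: float = 0.5) -> bool:
    # Block 310: statistical comparison of the two frame characterizations.
    if histogram_distance(characterize(cur), characterize(nxt)) > threshold:
        return True
    # Block 312: metadata comparison; "clip_id" is a hypothetical field.
    return cur_meta.get("clip_id") != nxt_meta.get("clip_id")


def find_shot_boundaries(frames, metadata, threshold: float = 0.5) -> List[int]:
    """Return the index of the first frame of every new shot (block 314)."""
    boundaries = []
    for i in range(len(frames) - 1):  # block 304: each frame and its successor
        if is_shot_change(frames[i], frames[i + 1], metadata[i], metadata[i + 1], threshold):
            boundaries.append(i + 1)
    return boundaries
```

With two dark frames followed by two bright frames, all sharing the same `clip_id`, `find_shot_boundaries` reports `[2]`. The threshold of 0.5 is arbitrary and would need tuning on real footage.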

[0077] If the statistical analysis or metadata analysis indicates that the shot has changed, either in block 310 or in block 312, then a new shot may be identified in block 314. The process may return t...
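Once shot changes have been identified in block 314, the frame indices at which new shots begin can be turned into contiguous shot ranges. This helper is a hypothetical illustration; it assumes each boundary is given as the index of the first frame of a new shot and returns half-open `(start, end)` frame ranges.

```python
from typing import Iterable, List, Tuple


def split_into_shots(num_frames: int, boundaries: Iterable[int]) -> List[Tuple[int, int]]:
    """Turn new-shot start indices into half-open (start, end) frame ranges."""
    if num_frames <= 0:
        return []
    # The first shot always starts at frame 0; drop duplicate or out-of-range boundaries.
    starts = [0] + sorted({b for b in boundaries if 0 < b < num_frames})
    ends = starts[1:] + [num_frames]
    return list(zip(starts, ends))
```

For example, a 4-frame video with a new shot starting at frame 2 yields `[(0, 2), (2, 4)]`.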

