Cache management method and system based on SSD (Solid State Disk)

A cache management and caching technology applied in the fields of memory systems, electrical digital data processing, and memory address allocation/relocation. It addresses problems such as excessive small-granularity random writes to the SSD and cache pollution.

Embodiment Construction

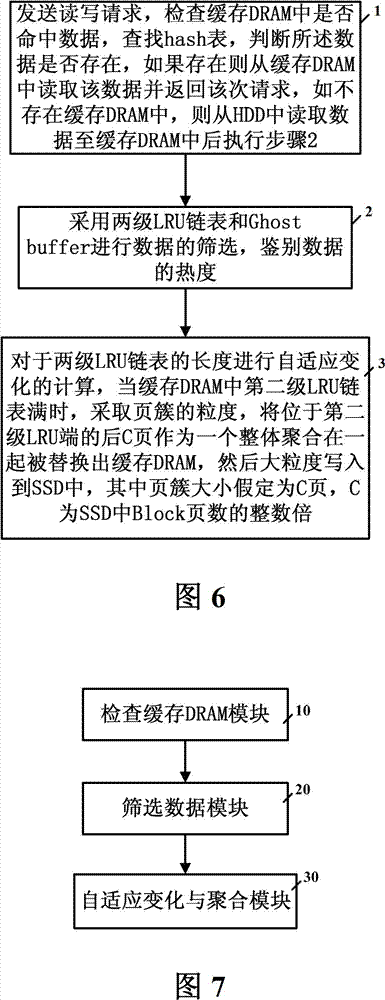

[0081] Specific embodiments of the present invention are given below, and the present invention is described in detail in conjunction with the accompanying drawings.

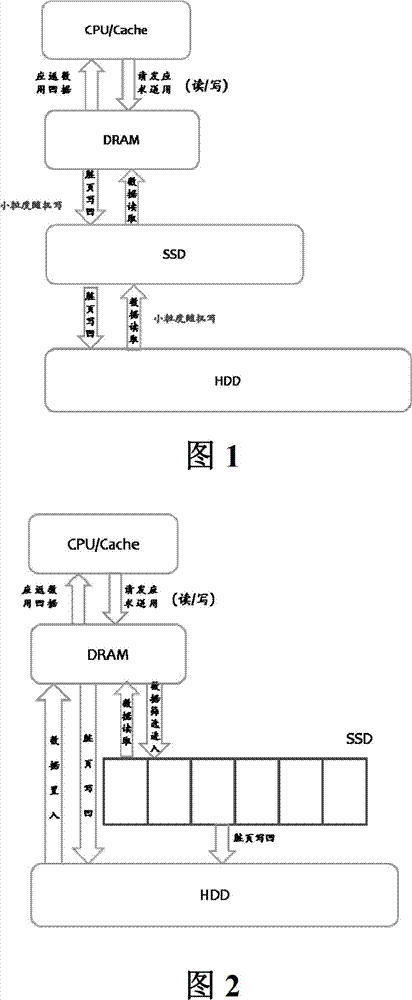

[0082] New caching system

[0083] The new cache system is composed of DRAM, SSD, and HDD (see attached Figure 2). The SSD is located between the DRAM and the HDD and acts as a cache for the HDD. Data is persistently stored on the HDD; DRAM serves as the first-level cache and the SSD as the second-level cache, so that DRAM and SSD together form a two-level cache for the HDD. The DRAM must record which content is held in the DRAM cache, and to quickly determine whether a page is present in the DRAM cache, the cached pages are managed in a hash table. The content of the SSD must be recorded as well: information about the data in the SSD cache is also kept in DRAM, so the hash table must record it too. Therefore, the following information needs to be...
