Method for selecting camera combination in visual perception network

A visual perception and camera technology, applicable to color television components, television system components, television, and similar fields, that addresses a problem on which little research has been done.

Examples

Embodiment 1

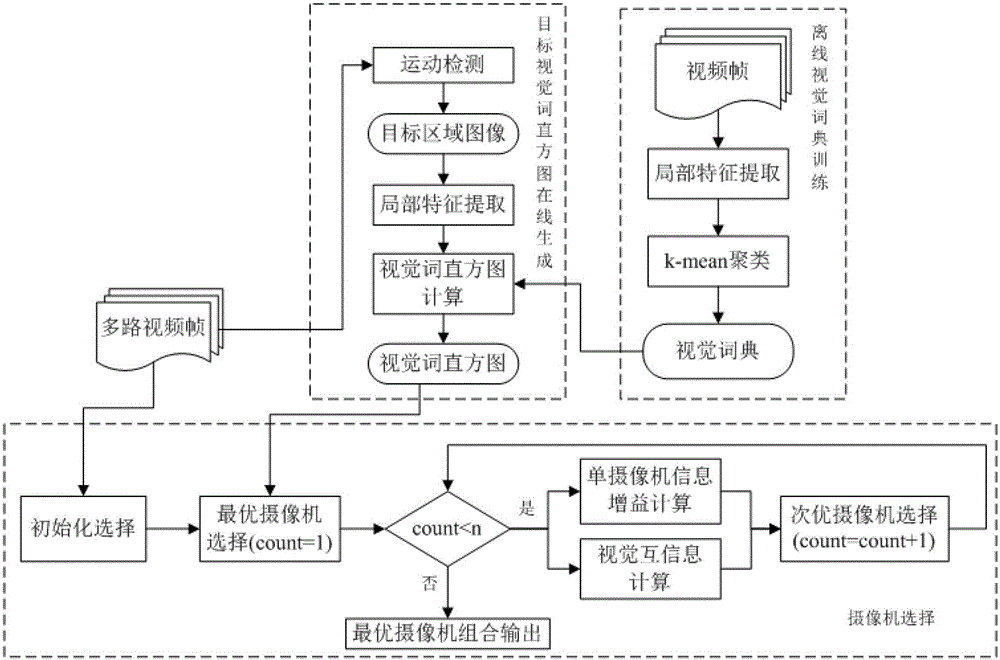

[0071] This embodiment comprises offline training to generate a visual dictionary, online generation of the visual histogram of the target image, and sequential forward camera selection. Its processing flow is shown in Figure 1. The online part of the method is divided into two main steps, generation of the target-image visual histogram and camera selection; the main processes of each part of the embodiment are introduced below.
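As a rough illustration of the sequential forward selection step named above, the following Python sketch greedily adds one camera at a time as long as a scoring function improves. The source does not give the scoring criterion at this point, so `toy_score`, the `camera_words` data, and the `max_cameras` parameter are all hypothetical stand-ins; this is a generic sketch of the technique, not the patent's actual criterion.

```python
from typing import Callable, Dict, List, Set


def sequential_forward_select(
    cameras: List[str],
    score: Callable[[List[str]], float],
    max_cameras: int,
) -> List[str]:
    """Greedy forward selection: repeatedly add the camera that raises
    the score the most; stop when no camera improves it or the budget
    (max_cameras) is reached."""
    selected: List[str] = []
    remaining = list(cameras)
    best_score = float("-inf")
    while remaining and len(selected) < max_cameras:
        cand, cand_score = None, float("-inf")
        for c in remaining:
            s = score(selected + [c])
            if s > cand_score:
                cand, cand_score = c, s
        if cand is None or cand_score <= best_score:
            break  # adding any further camera does not help
        selected.append(cand)
        remaining.remove(cand)
        best_score = cand_score
    return selected


# Hypothetical data: the visual words each camera observes.
camera_words: Dict[str, Set[int]] = {
    "C0": {1, 2, 3},
    "C1": {3, 4},
    "C2": {1, 2},
    "C3": {5, 6, 7, 8},
}


def toy_score(subset: List[str]) -> float:
    """Assumed criterion: distinct words covered, minus a per-camera cost."""
    covered: Set[int] = set()
    for c in subset:
        covered |= camera_words[c]
    return len(covered) - 0.5 * len(subset)


chosen = sequential_forward_select(list(camera_words), toy_score, max_cameras=4)
```

With this toy criterion the selection stops once an additional camera contributes less new information than its assumed cost, which is why a redundant view such as `C2` is never added.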

[0072] 1. Online generation of target image visual histogram

[0073] To establish the information association between cameras, this embodiment first selects multiple channels of video data from the same scene, extracts the local feature information from the video frames, clusters the local feature vectors, and takes the cluster centers as the visual dictionary generated by offline training. An online video can then generate the corresponding visual histogram according to the visual dictionary, and the associated information can be compared. ...
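The dictionary-and-histogram idea described above can be sketched in a few lines of Python. This is a minimal sketch under several assumptions: descriptors are plain numeric tuples (the source does not name the local feature at this point), clustering is a basic k-means with a fixed seed, and histogram intersection is used as one plausible way to compare two views. The function names are illustrative and do not come from the patent.

```python
import random
from typing import List, Sequence

Vector = Sequence[float]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(centers: List[List[float]], v: Vector) -> int:
    """Index of the visual word (cluster center) closest to v."""
    return min(range(len(centers)), key=lambda i: sq_dist(centers[i], v))


def train_dictionary(descriptors: List[Vector], k: int,
                     iters: int = 20, seed: int = 0) -> List[List[float]]:
    """Offline step: cluster local descriptors with basic k-means and
    return the k cluster centers as the visual dictionary."""
    rng = random.Random(seed)
    centers = [list(v) for v in rng.sample(list(descriptors), k)]
    for _ in range(iters):
        groups: List[List[Vector]] = [[] for _ in range(k)]
        for v in descriptors:
            groups[nearest(centers, v)].append(v)
        for i, g in enumerate(groups):
            if g:  # keep the old center if a cluster ends up empty
                centers[i] = [sum(col) / len(g) for col in zip(*g)]
    return centers


def visual_histogram(centers: List[List[float]],
                     descriptors: List[Vector]) -> List[float]:
    """Online step: count how often each visual word occurs in one
    target image and normalize the counts to sum to 1."""
    hist = [0.0] * len(centers)
    for v in descriptors:
        hist[nearest(centers, v)] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total else hist


def histogram_intersection(h1: List[float], h2: List[float]) -> float:
    """Assumed similarity measure between two normalized histograms."""
    return sum(min(a, b) for a, b in zip(h1, h2))
```

Because every camera quantizes its descriptors against the same dictionary, the resulting histograms share one set of bins and can be compared directly across views.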

Embodiment 2

[0119] The camera selection system implemented by this scheme is applied to the POM data set, which consists mainly of outdoor scenes (Fleuret F, Berclaz J, Lengagne R, Fua P. Multi-camera people tracking with a probabilistic occupancy map[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2008, 30(2): 267-282). The Terrace video sequences, which are recorded with 4 cameras, are selected: the Terrace2 video sequence is used as scene training data to train and generate the visual dictionary for this scene, and the online selection test is performed on the Terrace1 video sequence. The original images of the 180th frame are shown in Figure 2, where Figures 2a~2d denote the target images captured by cameras C0, C1, C2, and C3, respectively. Figures 3a~3d correspond to Figures 2a~2d: for the target images acquired by cameras C0, C1, C2, and C3, a Gaussian mixture model is used for background modeling and foreground extraction, combined with texture information for shadow removal, to detect fore...

Embodiment 3

[0121] The camera selection system implemented by this scheme is applied to the i3DPost data set (N. Gkalelis, H. Kim, A. Hilton, N. Nikolaidis, and I. Pitas. The i3DPost multi-view and 3D human action/interaction database. In CVMP, 2009.). The video set is recorded with 8 cameras. The Walk video sequences D1-002 and D1-015 are selected as training data to generate a visual dictionary for this scene, and the online selection test is performed on the Run video sequence D1-016. The original images of the 62nd frame are shown in Figures 6a~6h, which represent the images captured by cameras C0, C1, C2, C3, C4, C5, C6, and C7, respectively. Figures 7a~7h show the target area under each viewing angle after motion detection and shadow elimination. For the target area, the face detection value of C5 and C6 in the optimal camera selection process of this scheme is 1, and the value of the other cameras is 0. Comprehensive inf...
