Sparse disparity acquisition method based on target edge features and gray similarity

A sparse disparity acquisition method based on target edge features and grayscale similarity, applied in the field of stereo vision. It addresses the problems of information loss, ambiguity in recovering 3D scene information, and a low correct-matching rate.

Embodiment Construction

[0054] To better illustrate the purpose and advantages of the present invention, it is described below in conjunction with specific embodiments.

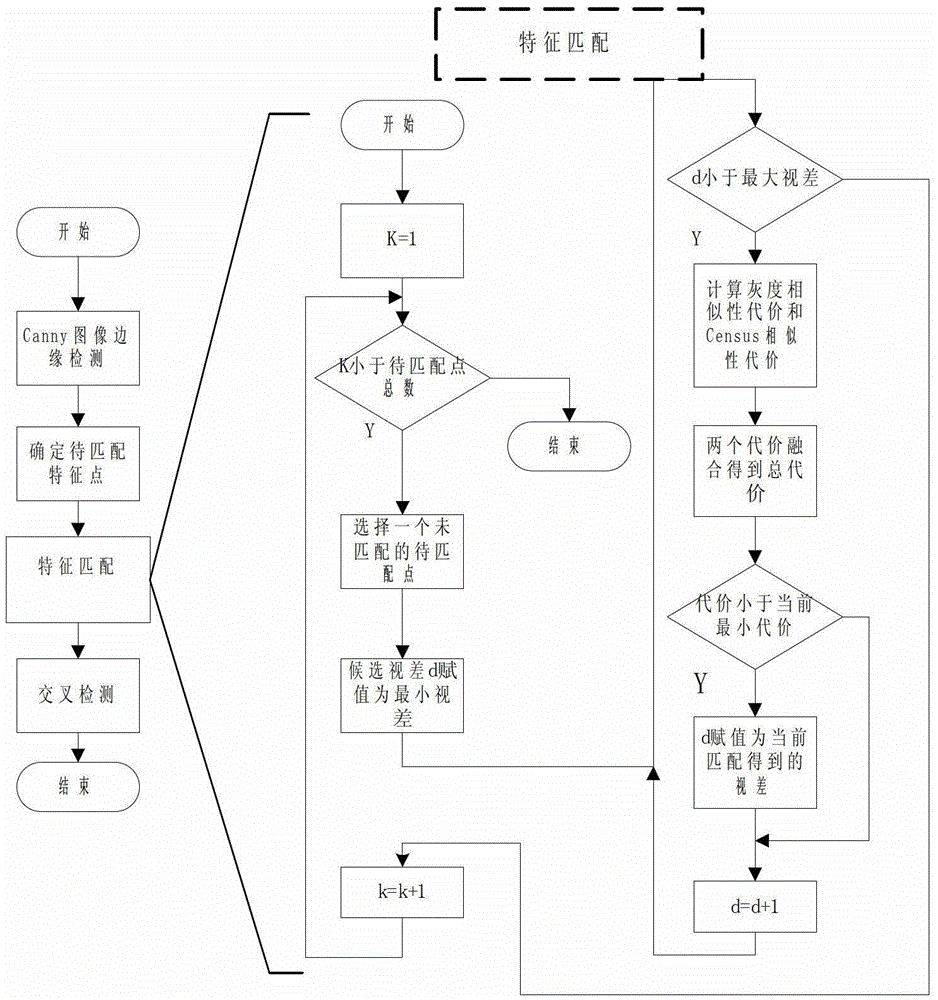

[0055] The method of the present invention first takes the left image as the reference and the right image as the matching search image, and uses an edge feature similarity constraint to eliminate non-corresponding points as early as possible. It then uses a similarity measure based on the grayscale values and the Census transform of the grayscale image to find the best matching point. Finally, the roles are swapped: the right image is used as the reference and the left image as the matching search image, and the matching results are cross-checked to eliminate false matches and further improve matching accuracy. The specific process is shown in Figure 2.

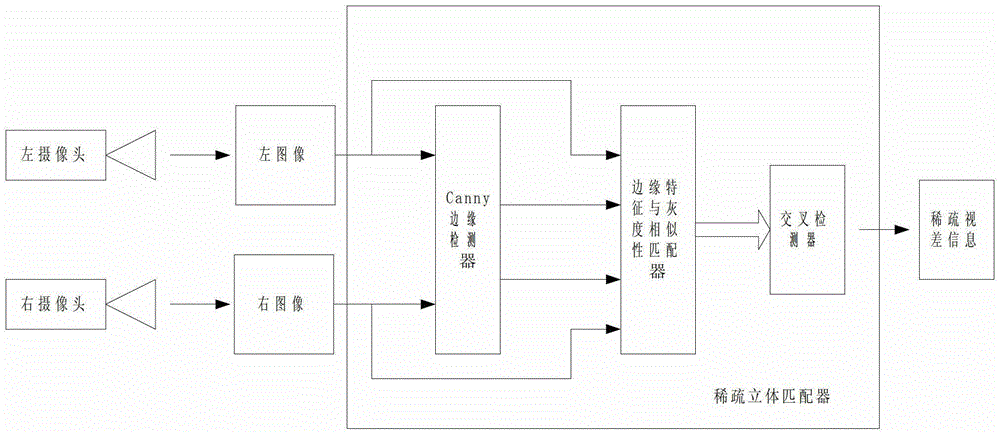
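The three stages described in [0055] can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the excerpt does not give the actual edge similarity constraint or the similarity formula, so the sketch assumes a boolean edge mask per image, rectified images (matches lie on the same row), a 3x3 Census window, and a cost equal to the absolute gray difference plus a weight `lam` times the Census Hamming distance. The names `census`, `hamming`, `cost`, `best_match`, `sparse_disparity`, `lam`, and `max_disp` are all hypothetical.

```python
def census(img, y, x, r=1):
    """Census code of the (2r+1)x(2r+1) window centred at (y, x).

    Each neighbour contributes one bit: 1 if it is darker than the centre.
    """
    code = 0
    centre = img[y][x]
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            if dy == 0 and dx == 0:
                continue
            code = (code << 1) | (1 if img[y + dy][x + dx] < centre else 0)
    return code


def hamming(a, b):
    """Number of differing bits between two Census codes."""
    return bin(a ^ b).count("1")


def cost(ref, tgt, y, x_ref, x_tgt, lam=1.0):
    """Assumed dissimilarity: gray difference plus weighted Census distance."""
    gray = abs(ref[y][x_ref] - tgt[y][x_tgt])
    return gray + lam * hamming(census(ref, y, x_ref), census(tgt, y, x_tgt))


def best_match(ref, tgt, ref_edges, tgt_edges, y, x, max_disp, direction):
    """Disparity of the lowest-cost candidate on row y, or None.

    direction = -1 searches leftwards in tgt (left image as reference),
    direction = +1 searches rightwards (right image as reference).
    Candidates that are not edge points are skipped (assumed edge constraint).
    """
    if not ref_edges[y][x]:
        return None
    width = len(ref[0])
    best = None
    for d in range(max_disp + 1):
        x_tgt = x + direction * d
        if x_tgt < 1 or x_tgt > width - 2:  # keep the 3x3 window inside the image
            break
        if not tgt_edges[y][x_tgt]:
            continue
        c = cost(ref, tgt, y, x, x_tgt)
        if best is None or c < best[0]:
            best = (c, d)
    return None if best is None else best[1]


def sparse_disparity(left, right, left_edges, right_edges, max_disp):
    """Return {(y, x): disparity} for left-image edge points that pass
    the left-right cross-check."""
    height, width = len(left), len(left[0])
    result = {}
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            d = best_match(left, right, left_edges, right_edges,
                           y, x, max_disp, -1)
            if d is None:
                continue
            # Cross-check: match back from the right image to the left image.
            back = best_match(right, left, right_edges, left_edges,
                              y, x - d, max_disp, +1)
            if back == d:
                result[(y, x)] = d
    return result
```

Restricting candidates to edge points keeps the search sparse and cheap, while the cross-check discards any match for which the two search directions disagree.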

[0056] The structure of the sparse stereo matching device designed according to the method of the present invention is shown in Figure 1. As s...
