Foreground segmentation method based on saliency detection

A foreground segmentation and saliency detection technology in the field of computer vision that facilitates subsequent processing and analysis, suppresses interference, and offers good real-time performance.

Examples

Embodiment 1

[0044] (1) Apply a 3×3 median filter to the original image to suppress impulse noise that would otherwise affect saliency detection.

[0045] (2) Extract the color, brightness, and direction features of the original image. Assuming the original image is in RGB format, the features are extracted as follows:

[0046] a. Let r, g, and b be the red, green, and blue components of the image, respectively; the brightness feature is then I = (r + g + b) / 3;
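The brightness formula can be written as a short NumPy sketch. It assumes the image arrives as an H × W × 3 array in R, G, B channel order; the function name `brightness_feature` is hypothetical.

```python
import numpy as np

def brightness_feature(rgb: np.ndarray) -> np.ndarray:
    """Brightness map I = (r + g + b) / 3 for an H x W x 3 RGB image (hypothetical helper)."""
    rgb = rgb.astype(np.float64)  # avoid uint8 overflow when summing the three channels
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return (r + g + b) / 3.0
```

For example, a white pixel (255, 255, 255) maps to 255 and a pixel (30, 60, 90) maps to 60.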

[0047] b. Convert the image from the RGB color space to the CIELAB color space and take its three components, L, a, and b, as color features;
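The patent does not say which RGB-to-CIELAB formulas it uses. A minimal sketch, assuming 8-bit sRGB input and the D65 reference white, follows the standard sRGB → XYZ → L*a*b* chain; `rgb_to_lab` is a hypothetical name, and libraries such as scikit-image (`skimage.color.rgb2lab`) provide an equivalent conversion.

```python
import numpy as np

# Assumptions (not stated in the patent): 8-bit sRGB input, D65 reference white.
_RGB_TO_XYZ = np.array([
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
])
_WHITE_D65 = np.array([0.95047, 1.0, 1.08883])

def rgb_to_lab(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 uint8 sRGB image to float CIELAB channels (L, a, b)."""
    c = rgb.astype(np.float64) / 255.0
    # Undo the sRGB gamma curve to obtain linear RGB.
    linear = np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)
    xyz = linear @ _RGB_TO_XYZ.T          # per-pixel 3x3 matrix product
    t = xyz / _WHITE_D65                   # normalise by the reference white
    delta = 6.0 / 29.0
    f = np.where(t > delta ** 3, np.cbrt(t), t / (3 * delta ** 2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)
```

Under these assumptions, white maps to approximately (100, 0, 0), black to (0, 0, 0), and pure red to an L value near 53 with positive a and b.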

[0048] c. Filter the brightness map I with Gabor filters oriented at 0°, 45°, 90°, and 135° to obtain four direction features.
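The Gabor parameters (kernel size, σ, wavelength, aspect ratio) are not given in this excerpt, so the values below are illustrative assumptions, as is taking the absolute filter response as the direction feature. The sketch builds a real (cosine) Gabor kernel for each of the four angles and filters the brightness map with plain NumPy; all function names are hypothetical.

```python
import numpy as np

def gabor_kernel(theta_deg, ksize=9, sigma=2.0, lambd=4.0, gamma=0.5):
    """Real (cosine) Gabor kernel; the parameter defaults are illustrative assumptions."""
    half = ksize // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(np.float64)
    theta = np.deg2rad(theta_deg)
    x_r = x * np.cos(theta) + y * np.sin(theta)    # coordinate along the filter direction
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_r ** 2 + (gamma * y_r) ** 2) / (2 * sigma ** 2))
    kernel = envelope * np.cos(2 * np.pi * x_r / lambd)
    return kernel - kernel.mean()  # zero-mean, so flat regions give no response

def filter2d(image, kernel):
    """Same-size 2-D filtering with edge replication, using plain NumPy."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros(image.shape, dtype=np.float64)
    for dy in range(kh):
        for dx in range(kw):
            out += kernel[dy, dx] * padded[dy:dy + image.shape[0], dx:dx + image.shape[1]]
    return out

def orientation_features(brightness, angles=(0, 45, 90, 135)):
    """One direction map per angle: magnitude of the Gabor response (an assumption)."""
    return [np.abs(filter2d(brightness, gabor_kernel(a))) for a in angles]
```

Because the cosine Gabor kernel is unchanged by a 180° rotation, the correlation computed in `filter2d` equals convolution. On a vertical step edge, the 0° map responds much more strongly than the 90° map.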

[0049] This yields 8 feature maps (1 brightness map, 3 color maps, and 4 direction maps), denoted by the feature map set {F_i}, 1 ≤ i ≤ 8.
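Assembling the eight maps into one array makes the 1 + 3 + 4 = 8 count explicit. The channel order below is an assumption, and `build_feature_set` is a hypothetical helper; note that NumPy index 0 corresponds to F_1.

```python
import numpy as np

def build_feature_set(brightness, lab, orientation_maps):
    """Stack 1 brightness + 3 Lab + 4 direction maps into an 8 x H x W array.

    The order (I, L, a, b, 0°, 45°, 90°, 135°) is an assumption; the patent
    only states that the set {F_i} has 8 members.
    """
    maps = [brightness] + [lab[..., k] for k in range(3)] + list(orientation_maps)
    if len(maps) != 8:
        raise ValueError(f"expected 8 feature maps, got {len(maps)}")
    return np.stack([np.asarray(m, dtype=np.float64) for m in maps], axis=0)
```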

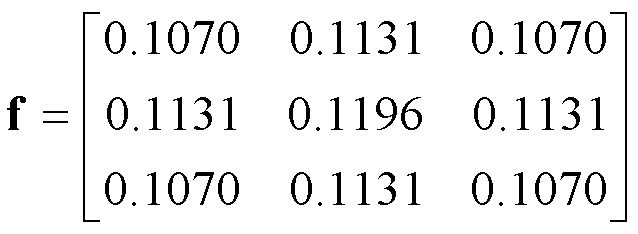

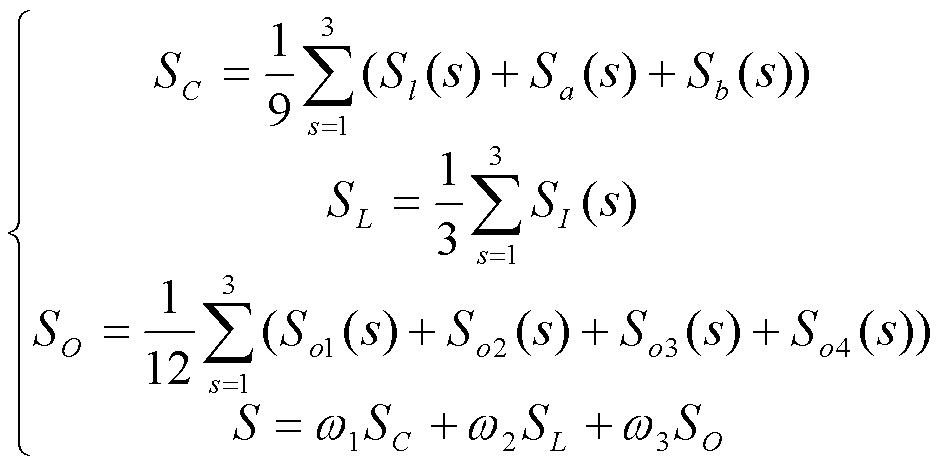

[0050] (3) The feature map set is down-sampled at two scales, 1/2 and 1/4 of the original image size, and...
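The excerpt is cut off before it says how down-sampling is done. Assuming simple 2 × 2 block averaging (a Gaussian pyramid would be a common alternative), the three scales 1, 1/2, and 1/4 can be produced as follows; the function names are hypothetical.

```python
import numpy as np

def downsample2(fmap):
    """Halve resolution by averaging non-overlapping 2x2 blocks (odd last row/col dropped)."""
    h, w = fmap.shape[0] // 2 * 2, fmap.shape[1] // 2 * 2
    f = np.asarray(fmap, dtype=np.float64)[:h, :w]
    return (f[0::2, 0::2] + f[1::2, 0::2] + f[0::2, 1::2] + f[1::2, 1::2]) / 4.0

def feature_pyramid(fmap):
    """Return one feature map at scales 1, 1/2 and 1/4 of its original size."""
    half = downsample2(fmap)
    return [np.asarray(fmap, dtype=np.float64), half, downsample2(half)]
```

Applied to each of the 8 maps, an 8 × 8 map yields 4 × 4 and 2 × 2 versions; odd sizes are truncated, so a 9 × 7 map yields 4 × 3 and 2 × 1.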

Embodiment 2

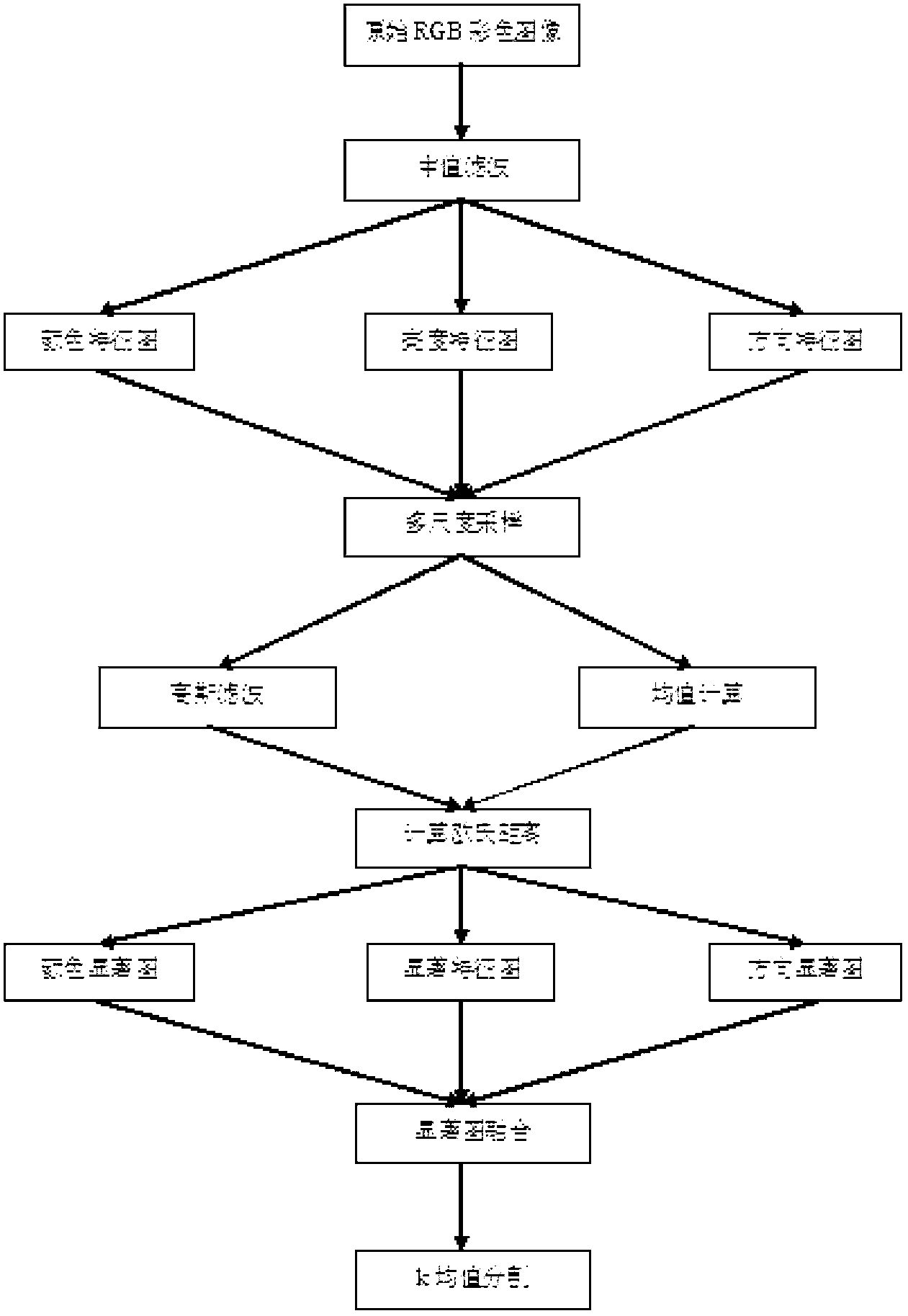

[0059] Figure 1 shows the flow chart of the foreground segmentation method based on saliency detection according to the present invention. Its main steps are as follows:

[0060] (1) Input a color image I(x,y) in RGB format and apply 3×3 median filtering to it. The filtered image I'(x,y) is

[0061] $$I'(x,y) = \operatorname{median}\{\, I(x+i,\, y+j) \,\},\quad -1 \le i \le 1,\ -1 \le j \le 1$$
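The median-filter formula translates directly into NumPy by stacking the nine shifted copies of the image and taking their per-pixel median. Border handling is not specified in the patent; edge replication is assumed here, and `median3x3` is a hypothetical name.

```python
import numpy as np

def median3x3(image):
    """I'(x,y) = median of I(x+i, y+j) for -1 <= i, j <= 1, applied per channel.

    Border pixels use edge replication, which is an assumption.
    """
    img = np.asarray(image)
    pad = ((1, 1), (1, 1)) + ((0, 0),) * (img.ndim - 2)  # never pad the channel axis
    padded = np.pad(img, pad, mode="edge")
    h, w = img.shape[:2]
    # One shifted copy of the image per (i, j) offset in the 3x3 window.
    neighbours = [padded[1 + i:1 + i + h, 1 + j:1 + j + w]
                  for i in (-1, 0, 1) for j in (-1, 0, 1)]
    return np.median(np.stack(neighbours, axis=0), axis=0).astype(img.dtype)
```

A single impulse (one pixel set to 255 in a flat region of value 100) is replaced by 100, while a straight step edge passes through unchanged, which is why a median filter suits impulse-noise removal before saliency detection.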

[0062] (2) Extract the color, brightness, and direction features according to the following rules:

[0063] a. Let r, g, and b be the red, green, and blue components of the image, respectively; the brightness feature is then I = (r + g + b) / 3;

[0064] b. Convert the image from the RGB color space to the CIELAB color space and take its three components, L, a, and b, as color features;

[0065] c. Filter the brightness map I with Gabor filters oriented at 0°, 45°, 90°, and 135° to obtain four direction features.

[0066] This yields 8 feature maps, denoted by the feature map set {F_i...
