Generation method of three-dimensional image

A three-dimensional image processing technology, applied in image data processing, animation production, instruments and related fields, that addresses the problems of low integration, a complicated shooting and production process, and the inability to extract a spatial stereoscopic effect.

Examples

Embodiment 1

[0037] A three-dimensional animation (image) processing method, comprising the following steps:

[0038] Step 1: Obtain an original two-dimensional image as the first view, extract multiple target areas from the original two-dimensional image, and divide these target areas into target areas of interest and non-interest target areas.
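Step 1 can be pictured as a simple data structure plus a partition. This is a minimal illustration under stated assumptions, not the patented method: the `TargetArea` class, its fields (a set of pixel coordinates, a scalar depth-of-field value, an interest flag) and the `split_by_interest` helper are hypothetical names, and how the target areas are actually extracted is not given in this excerpt.

```python
from dataclasses import dataclass, field


@dataclass
class TargetArea:
    """One target area extracted from the first view (hypothetical structure)."""
    name: str                                  # label such as "M1"
    pixels: set = field(default_factory=set)   # (row, col) coordinates of the area
    depth: float = 1.0                         # assumed depth-of-field value; larger means farther
    of_interest: bool = False                  # True for a target area of interest


def split_by_interest(areas):
    """Divide target areas into areas of interest and non-interest areas,
    each list ordered from near to far by the assumed depth value."""
    ordered = sorted(areas, key=lambda a: a.depth)
    interest = [a for a in ordered if a.of_interest]
    non_interest = [a for a in ordered if not a.of_interest]
    return interest, non_interest


if __name__ == "__main__":
    areas = [
        TargetArea("M3", {(5, 5)}, depth=6.0),
        TargetArea("M1", {(2, 3), (2, 4)}, depth=1.5, of_interest=True),
        TargetArea("M2", {(4, 1)}, depth=3.0),
    ]
    interest, non_interest = split_by_interest(areas)
    print([a.name for a in interest], [a.name for a in non_interest])
```

Ordering by depth is a convenience for the later steps, which treat areas from near to far.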

[0039] As shown in Figure 1, the original two-dimensional image P above is used as the left-eye image. To make a three-dimensional image, a three-dimensional conversion is performed on the left-eye image to obtain a gray-scale shifted right-eye image P'. During viewing, the audience's left eye sees the left-eye image and the right eye sees the synchronized right-eye image, and the parallax between the two images produces the three-dimensional effect.
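The left-to-right conversion can be illustrated with a per-pixel horizontal shift driven by a depth map. This sketch rests on assumptions the excerpt does not state: parallax is taken as inversely proportional to depth (`baseline_px / depth`, rounded), pixels move left in the right-eye view, the nearer pixel wins when two land on the same position, and holes uncovered by the shift are filled from their left neighbor. `disparity` and `render_right_view` are hypothetical names.

```python
def disparity(depth, baseline_px=8.0):
    """Hypothetical parallax model: shift in pixels inversely proportional to depth."""
    return round(baseline_px / depth)


def render_right_view(left, depth_map, baseline_px=8.0, fill=0):
    """Build a right-eye image from a left-eye image (list of rows) and a depth map."""
    h, w = len(left), len(left[0])
    right = [[None] * w for _ in range(h)]
    zbuf = [[float("inf")] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            z = depth_map[y][x]
            nx = x - disparity(z, baseline_px)      # assumed convention: shift left
            if 0 <= nx < w and z < zbuf[y][nx]:     # nearer pixel occludes farther one
                right[y][nx] = left[y][x]
                zbuf[y][nx] = z
        for x in range(w):                          # fill disoccluded holes
            if right[y][x] is None:
                right[y][x] = right[y][x - 1] if x > 0 else fill
    return right


if __name__ == "__main__":
    print(render_right_view([[1, 2, 3, 4]], [[8.0, 8.0, 4.0, 8.0]]))
```

In the usage line the pixel with depth 4.0 shifts twice as far as the others and covers the farther pixel that lands on the same column.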

[0040] As shown in Figure 1 above, the original two-dimensional image is a top-view image that includes at least three target areas, from near to far: the character M1, the float...

Embodiment 2

[0057] A three-dimensional animation (image) processing method, comprising the following steps:

[0058] Step 1: Obtain the original two-dimensional image P and the embedded image Q; extract one or more target areas from the original two-dimensional image P as non-interest target areas and one or more target areas from the embedded image Q as target areas of interest; then embed the target areas of interest from the embedded image Q into the original two-dimensional image P to form the first view.
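Embedding the region of interest from Q into P amounts to a masked paste. In this minimal sketch the binary mask marking the target region of interest in Q and the paste position `(top, left)` are assumed inputs; the excerpt does not say how either is obtained, and `embed_region` is a hypothetical helper name.

```python
def embed_region(base, insert, mask, top=0, left=0):
    """Copy the pixels of `insert` where `mask` is True into a copy of `base`,
    placed with its top-left corner at (top, left). Out-of-bounds pixels are dropped."""
    out = [row[:] for row in base]          # leave the original image P untouched
    for y, row in enumerate(insert):
        for x, value in enumerate(row):
            ty, tx = y + top, x + left
            if mask[y][x] and 0 <= ty < len(out) and 0 <= tx < len(out[0]):
                out[ty][tx] = value
    return out


if __name__ == "__main__":
    p = [[0] * 3 for _ in range(3)]
    q = [[7, 8], [9, 10]]
    m = [[True, False], [True, True]]
    print(embed_region(p, q, m, top=1, left=1))
```

Only masked pixels are copied, so the background of Q never overwrites P.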

[0059] As shown in Figure 4, the embedded image Q includes a target region M1, which is taken as the target region of interest. As shown in Figure 5, the original two-dimensional image P includes at least three target areas, from near to far: the soldier M2, the guardrail M3 and the vase-column (baluster) fence M4; M2, M3 and M4 are treated as non-interest target areas.

[0060] Step 2: Apply an overall offset to each non-interest target area according to the depth of field of the non-interest targ...
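One reading of Step 2 is that every pixel of a non-interest target area receives the same horizontal offset, derived from that area's depth of field, so each area moves as a rigid whole. The sketch below assumes an offset of `round(k / depth)` pixels and draws areas from far to near so nearer areas cover farther ones; `offset_for_depth` and `shift_areas` are hypothetical names, and the actual offset rule is cut off in this excerpt.

```python
def offset_for_depth(depth, k=12.0):
    """Hypothetical rule: one offset (in pixels) per area, inversely proportional to depth."""
    return round(k / depth)


def shift_areas(width, height, areas, background=0, k=12.0):
    """areas: list of (pixels, depth), where pixels maps (row, col) -> value.
    Each area is shifted as a whole; drawing far to near lets nearer areas occlude."""
    canvas = [[background] * width for _ in range(height)]
    for pixels, depth in sorted(areas, key=lambda a: a[1], reverse=True):
        dx = offset_for_depth(depth, k)
        for (y, x), value in pixels.items():
            nx = x - dx
            if 0 <= nx < width:
                canvas[y][nx] = value
    return canvas


if __name__ == "__main__":
    far_area = ({(0, 4): 5}, 12.0)   # shifted by 1 pixel
    near_area = ({(0, 5): 9}, 6.0)   # shifted by 2 pixels, lands on the same column
    print(shift_areas(6, 1, [far_area, near_area]))
```

Shifting whole areas rather than individual pixels keeps each area's internal layout intact and needs only one offset computation per area.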

Embodiment 3

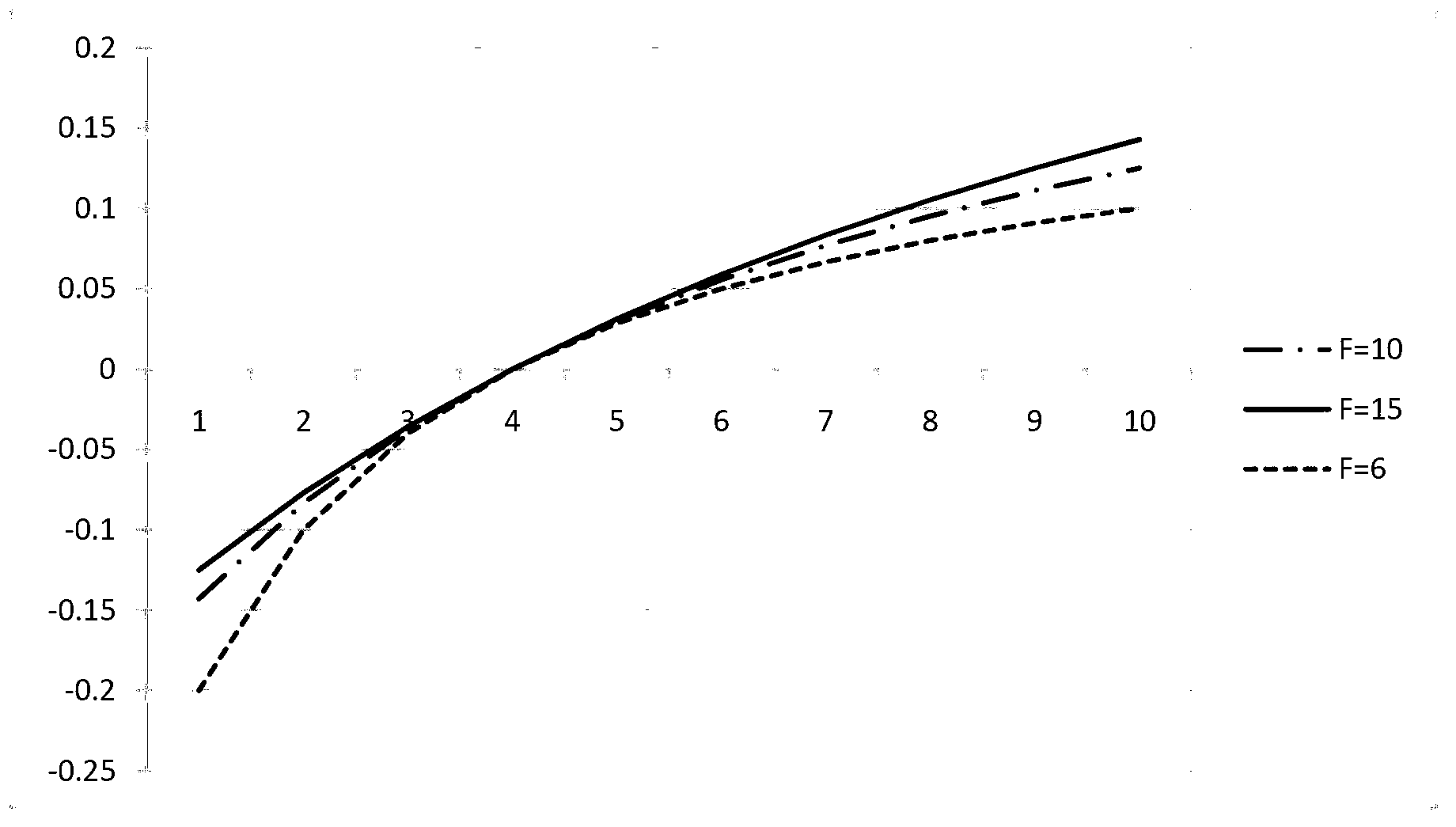

[0071] Film and television animation usually contains consecutive frames of the same scene group. For example, in Embodiment 2, when the embedded target of interest moves from near to far, the left-eye and right-eye views of the other non-interest targets can be kept unchanged to reduce computation, and only the target region of interest is processed. Because the distance of the target of interest, that is, its depth of field, changes, the size and offset of the region containing the target change accordingly. Region scaling and offset scaling can therefore be applied to the target area of interest alone. As shown in Figures 7 and 8, Figure 7 is the previous frame image when the depth of field of the target area of interest changes, and Figure 8 is the current frame image. Sampling point 1 is located in the non-interest target area, and sampling points 2 and 3 are located in the target area of interest...
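The per-frame update for the target of interest can be sketched under a pinhole-camera assumption: apparent size and parallax offset both scale as the inverse of depth, so one factor, previous depth divided by current depth, rescales the region and its offset while the cached non-interest views are reused. The inverse-depth model, the `InterestState` record, `update_interest` and `next_frame` are assumptions for illustration, not the patent's formulas, which are truncated here.

```python
from dataclasses import dataclass


@dataclass
class InterestState:
    """State of the target region of interest in one frame (hypothetical record)."""
    width: int      # region width in pixels
    height: int     # region height in pixels
    offset: float   # horizontal parallax offset in pixels
    depth: float    # depth of field of the target


def update_interest(prev: InterestState, curr_depth: float) -> InterestState:
    """Rescale region size and offset when only the target's depth changes.
    Assumes both are inversely proportional to depth."""
    scale = prev.depth / curr_depth          # < 1 when the target moves away
    return InterestState(
        width=max(1, round(prev.width * scale)),
        height=max(1, round(prev.height * scale)),
        offset=prev.offset * scale,
        depth=curr_depth,
    )


def next_frame(non_interest_views, prev_interest, curr_depth):
    """Reuse cached non-interest left/right views; recompute only the interest region."""
    return non_interest_views, update_interest(prev_interest, curr_depth)


if __name__ == "__main__":
    prev = InterestState(width=40, height=80, offset=6.0, depth=2.0)
    print(update_interest(prev, 4.0))
```

Doubling the depth halves both the region and its offset, which matches the near-to-far motion described above.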
