Joint calibration method of vision and satellite positioning sensor for robot navigation

Visual sensor and satellite positioning technology, applied in the field of integrated navigation

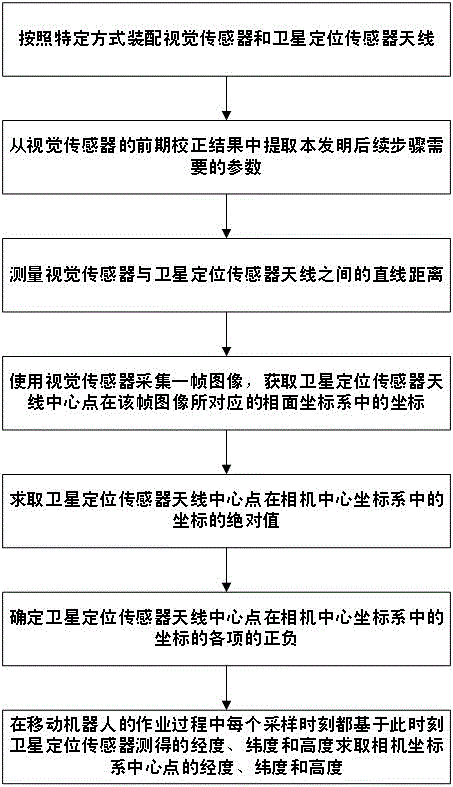

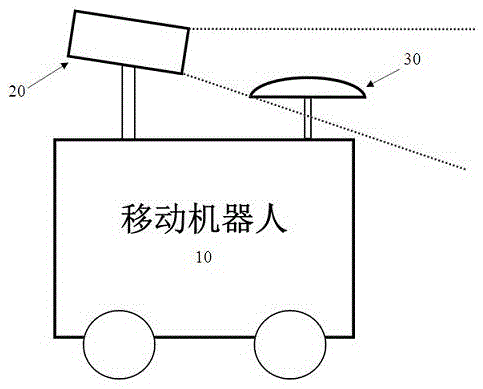

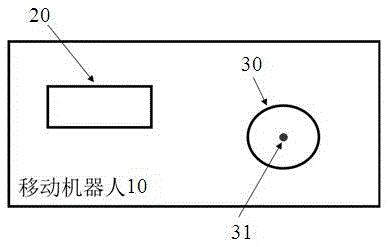

Examples

Embodiment Construction

[0023] To explain the problem clearly, the coordinate systems used in the present invention are first defined here:

[0024] The earth-centered earth-fixed coordinate system (denoted e in the present invention) is a right-handed coordinate system. Its origin is the center of the earth, the x-axis points to the intersection of the prime meridian and the equator, the y-axis points to 90 degrees east longitude on the equator, and the z-axis coincides with the earth's rotation axis and points to the North Pole. Coordinates in e have two equivalent representations: Cartesian coordinates, written (x_e, y_e, z_e); and geodetic coordinates, given by longitude λ, latitude, and height h.
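The equivalence between the two representations in [0024] can be illustrated with a minimal sketch of the standard geodetic-to-Cartesian conversion. The source does not name a reference ellipsoid, so the WGS84 constants below are an assumption, and the function name `geodetic_to_ecef` and its argument names are hypothetical, chosen only for illustration.

```python
import math

# Assumption: WGS84 ellipsoid. The source text does not state which ellipsoid is used.
WGS84_A = 6378137.0                    # semi-major axis in metres
WGS84_F = 1.0 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)   # first eccentricity squared


def geodetic_to_ecef(lon_deg, lat_deg, h):
    """Convert longitude and latitude (degrees) and height h (metres)
    to Cartesian coordinates (x_e, y_e, z_e) in the e frame, in metres."""
    lon = math.radians(lon_deg)
    lat = math.radians(lat_deg)
    # Prime-vertical radius of curvature at this latitude.
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + h) * math.sin(lat)
    return x, y, z
```

Under these assumptions, a point at zero longitude, zero latitude, and zero height lies on the positive x-axis, and a point at 90 degrees east longitude on the equator lies on the positive y-axis, which matches the axis definitions given above.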

[0025] The local geographic coordinate system (represented by g in the present invention) is a right-handed coordinate system. The origin of the coordinate system is arbitrary, so whenever the local geographic coordinate system is expressed in the subsequent discussions of the present invent...
