State estimation device

A state estimation technology applied to measurement devices, instruments, complex mathematical operations, and similar fields, which addresses the problem of estimating the state of an observation target with high accuracy.

Examples

First Embodiment

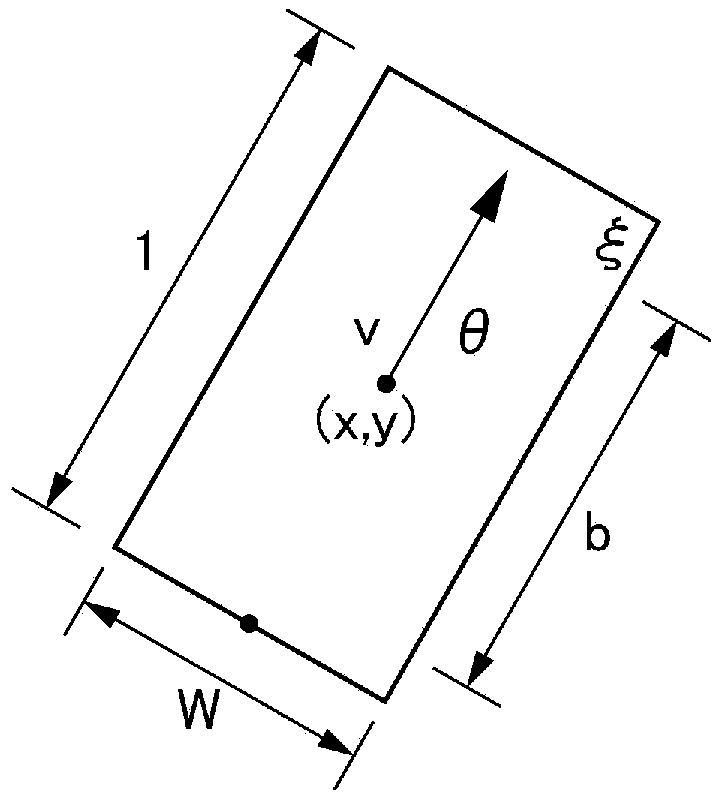

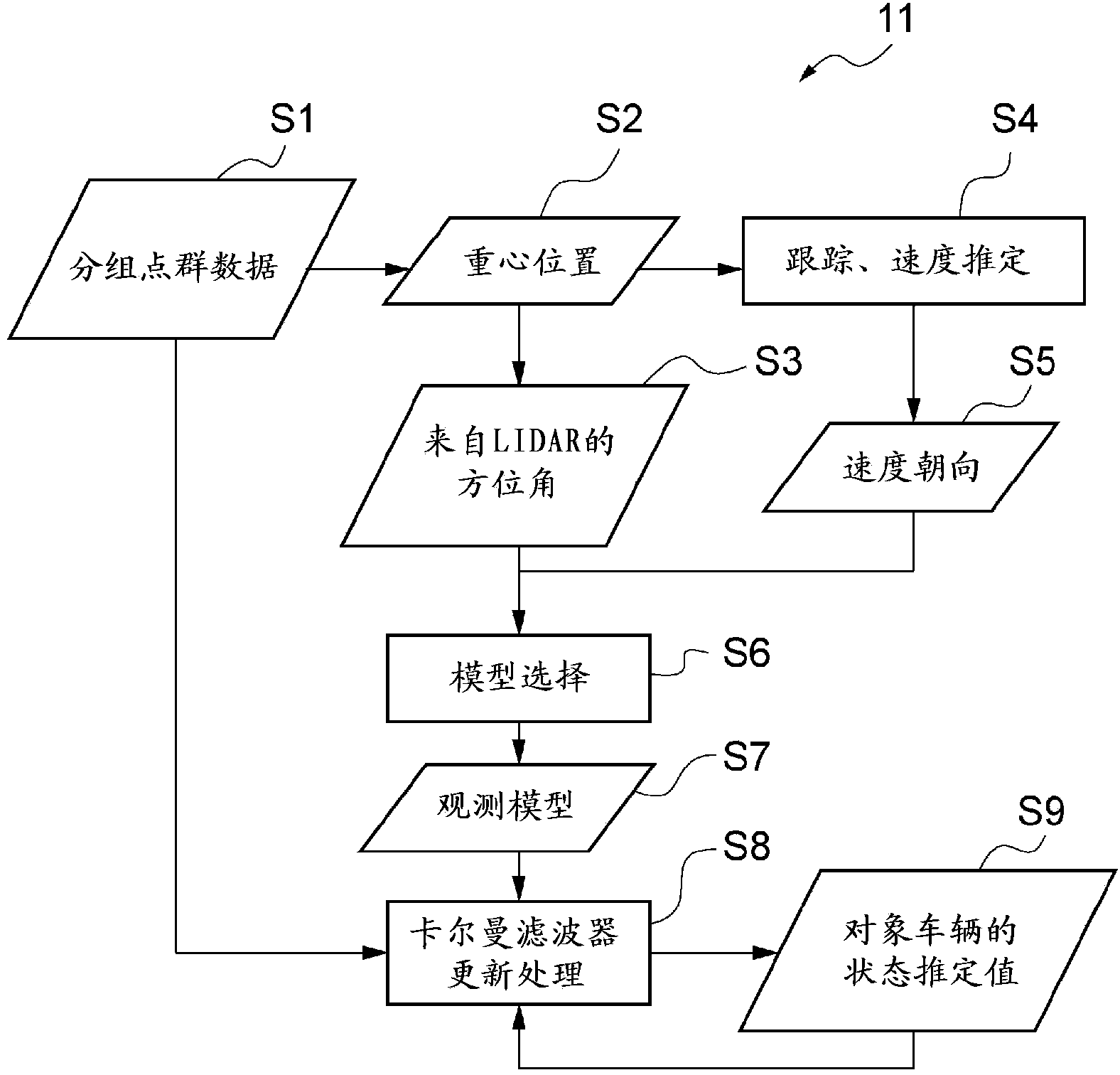

[0075] The estimation processing of the state estimation device 11 according to the first embodiment will be described. Figure 3 is a diagram showing the estimation process of the state estimation device according to the first embodiment.

[0076] As shown in Figure 3, the state estimation device 11 according to the first embodiment changes the observation model used in the Kalman filter update process based on the direction of the center position of the target vehicle with respect to the LIDAR 2 and the orientation of the target vehicle. There are eight observation models: a rear observation model targeting the rear of the target vehicle, a left oblique rear observation model targeting the rear and left side of the target vehicle, a left observation model targeting the left side of the target vehicle, a left oblique front observation model targeting the front and left side of the target vehicle, a front observation model targeting the front of the target vehicle, a...
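The selection described in [0076] can be illustrated with a minimal Python sketch. Everything here is an assumption for illustration rather than the patent's actual procedure: the function names `wrap` and `select_model` are hypothetical, the eight models are assigned to equal 45° sectors, the LIDAR is placed at the origin by default, and the heading is measured counterclockwise with the vehicle's left at +90°. Only the five model names that appear in the text are used; the three that are cut off in this excerpt are left as neutral placeholders.

```python
import math

# Sector labels, counterclockwise starting from the target's front.
# The first five names come from the text; the remaining three are elided
# in the excerpt, so they stay as placeholders instead of being guessed.
MODELS = [
    "front", "left oblique front", "left", "left oblique rear", "rear",
    "sector_5", "sector_6", "sector_7",
]


def wrap(angle):
    """Wrap an angle in radians into the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def select_model(center_xy, heading, lidar_xy=(0.0, 0.0)):
    """Pick an observation model from the target's center and heading.

    ASSUMPTION: equal 45-degree sectors; the source does not give thresholds.
    """
    # Direction of the target center as seen from the LIDAR.
    dir_to_center = math.atan2(center_xy[1] - lidar_xy[1],
                               center_xy[0] - lidar_xy[0])
    # Direction of the LIDAR as seen from the target, in the target's frame.
    view = wrap(dir_to_center + math.pi - heading)
    sector = int(round(view / (math.pi / 4))) % 8
    return MODELS[sector]


# A target 10 m ahead and driving away exposes its rear to the sensor.
print(select_model((10.0, 0.0), heading=0.0))
```

With the target directly ahead, a heading of 0 (driving away) selects the rear model, a heading of π (driving toward the sensor) selects the front model, and a heading of π/2 selects the left model.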

Second Embodiment

[0129] Next, the estimation processing of the state estimation device 12 according to the second embodiment will be described. The second embodiment is basically the same as the first embodiment, but differs from the first embodiment in the method of selecting an observation model. Therefore, only the parts different from the first embodiment will be described below, and the description of the same parts as the first embodiment will be omitted.

[0130] Figure 8 is a diagram showing the estimation process of the state estimation device according to the second embodiment. As shown in Figure 8, the state estimation device 12 according to the second embodiment selects the observation model used in the current estimation process based on the observation model used in the previous estimation process.

[0131] Usually, the behavior change of the vehicle is continuous. Therefore, even if the positional relationship with the target vehicle or the state of the target vehic...
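One way to picture a history-based selection is the sketch below. It is an assumption, not the rule stated in the patent, whose details are cut off in this excerpt: the eight models are indexed 0 to 7 around the vehicle, and the current choice is limited to the previous model or one of its two neighbors, on the grounds that vehicle behavior changes continuously. The name `select_with_history` is hypothetical.

```python
N_SECTORS = 8  # ASSUMPTION: eight models indexed 0..7 around the vehicle


def select_with_history(candidate, previous):
    """Limit the model index to at most one step away from the previous one.

    candidate: index suggested by the current measurement.
    previous:  index used in the previous estimation, or None on the first run.
    """
    if previous is None:
        return candidate
    # Signed circular difference, in the range [-4, 3].
    half = N_SECTORS // 2
    diff = (candidate - previous + half) % N_SECTORS - half
    # Clamp the change to a single neighboring sector.
    step = max(-1, min(1, diff))
    return (previous + step) % N_SECTORS


# A jump from model 0 to model 2 is reduced to the neighboring model 1.
print(select_with_history(2, 0))
```

Clamping to neighbors is only one possible design; a real system might instead keep the previous model until the new candidate persists for several cycles.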

Third Embodiment

[0138] Next, the estimation processing of the state estimation device 13 according to the third embodiment will be described. The third embodiment is basically the same as the first embodiment, but differs from the first embodiment in the method of selecting an observation model. Therefore, only the parts different from the first embodiment will be described below, and the description of the same parts as the first embodiment will be omitted.

[0139] Figure 9 is a diagram showing the estimation process of the state estimation device according to the third embodiment. As described above, in the first embodiment, the direction of the center position of the target vehicle with respect to the LIDAR 2 and the orientation of the target vehicle are obtained based on the grouped point cloud data generated in S1. In contrast, as shown in Figure 9, in the third embodiment, the direction of the center position of the target vehicle with respect to the LIDAR 2 and the orientation of the target vehic...
