Disk caching method and device in a parallel computing system

A disk caching technology for parallel computing, applied in computing, memory systems, instruments, and related fields. It addresses the problem that data exceeding memory capacity has no cache in external storage and therefore cannot be processed. It achieves fast computation with small fan-in and fan-out costs, and keeps the system operable and extensible.

Embodiment Construction

[0042] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be described in detail below with reference to the accompanying drawings and specific embodiments.

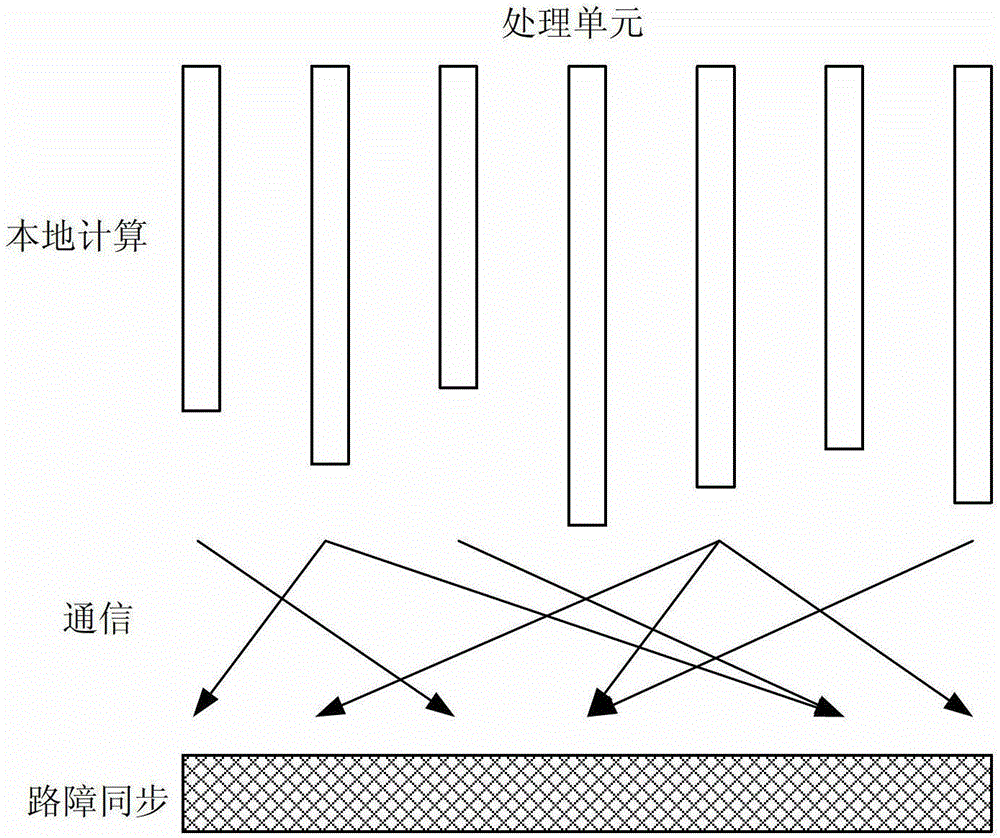

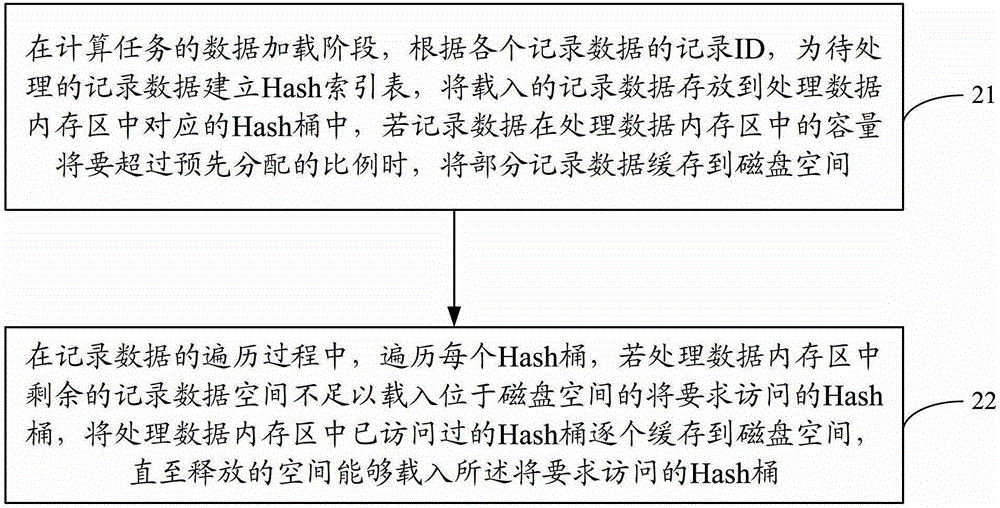

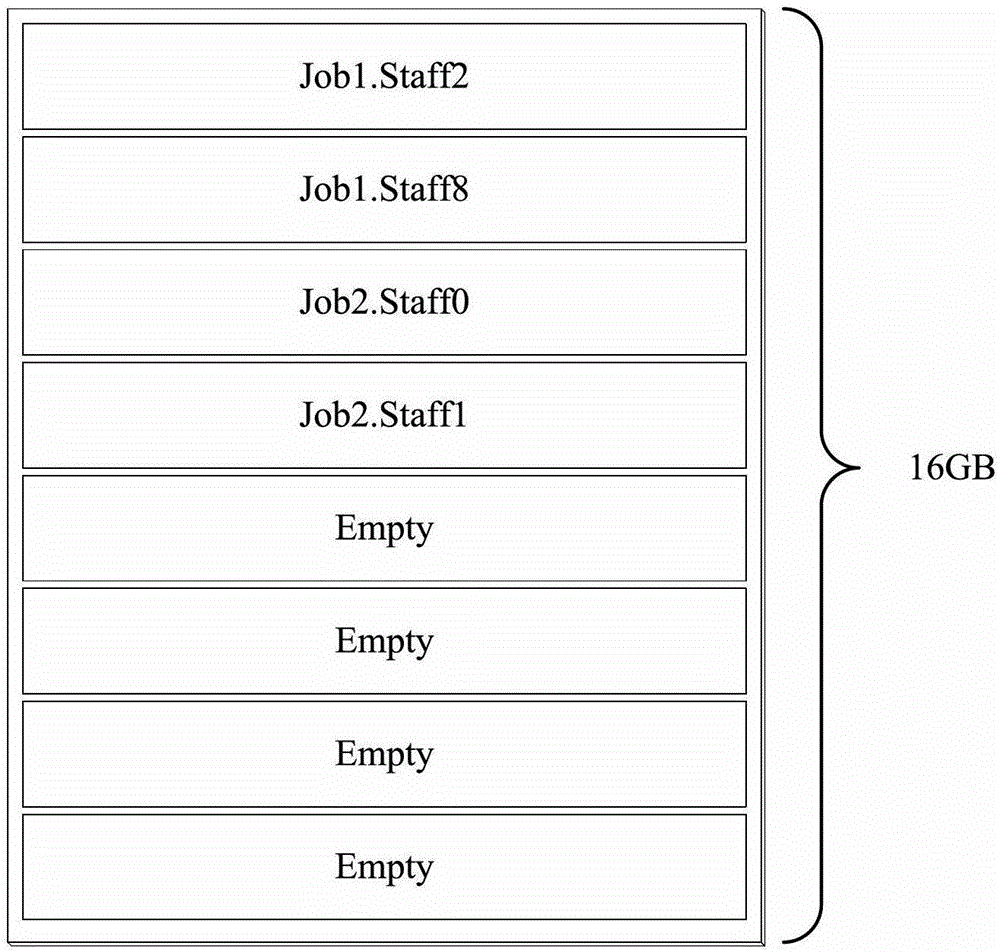

[0043] The problem addressed by the embodiment of the present invention is how to support automatic caching of data to disk in a parallel iterative computing system based on the BSP model. That is, when memory can hold the computation data and message data, the system continues to run entirely in memory; when the volume of data and messages exceeds the memory capacity, the overflowed part is automatically cached to a disk file. Furthermore, the embodiment ensures that when a subsequent computation step needs this data, it can be read quickly from disk back into memory with a small fan-in and fan-out cost, thereby providing the system with the ability to run large-scale...
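The overflow behavior described in [0043] can be illustrated with a minimal Python sketch. This is not the patented method, whose details are not given in this excerpt. The class name `SpillBuffer`, the `max_in_memory` parameter, the use of an item count in place of a byte-based memory limit, and the choice of `pickle` with a temporary file are all assumptions made for illustration.

```python
import os
import pickle
import tempfile


class SpillBuffer:
    """Hypothetical buffer: keeps items in memory up to a limit, spills the rest to disk."""

    def __init__(self, max_in_memory):
        # Assumption: capacity is counted in items, not bytes.
        self.max_in_memory = max_in_memory
        self.in_memory = []
        self.spill_path = None
        self.spilled_count = 0

    def append(self, item):
        # While the item fits within the memory limit, stay in memory.
        if len(self.in_memory) < self.max_in_memory:
            self.in_memory.append(item)
            return
        # Otherwise create the spill file lazily and append the item to it.
        if self.spill_path is None:
            fd, self.spill_path = tempfile.mkstemp(suffix=".spill")
            os.close(fd)
        with open(self.spill_path, "ab") as f:
            pickle.dump(item, f)
        self.spilled_count += 1

    def __iter__(self):
        # Yield in-memory items first, then stream spilled items back from disk.
        yield from self.in_memory
        if self.spill_path is None:
            return
        with open(self.spill_path, "rb") as f:
            for _ in range(self.spilled_count):
                yield pickle.load(f)

    def close(self):
        # Remove the spill file once the data is no longer needed.
        if self.spill_path is not None:
            os.remove(self.spill_path)
            self.spill_path = None
            self.spilled_count = 0


if __name__ == "__main__":
    buf = SpillBuffer(max_in_memory=2)
    for i in range(5):
        buf.append(("message", i))
    print(len(buf.in_memory), buf.spilled_count)
    print(list(buf))
    buf.close()
```

In this sketch a consumer iterates over the buffer the same way whether or not anything was spilled, which mirrors the stated goal of running in memory when possible and falling back to disk only for the overflow. A real system would likely track memory in bytes and batch its disk writes.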
