Message preprocessing method and device

A message preprocessing method and device, applied in the field of data processing, which solve the problems of CPU resource occupation, poor availability, and low overall performance in network equipment, thereby avoiding CPU resource occupation and achieving high overall performance.

Examples

Embodiment 1

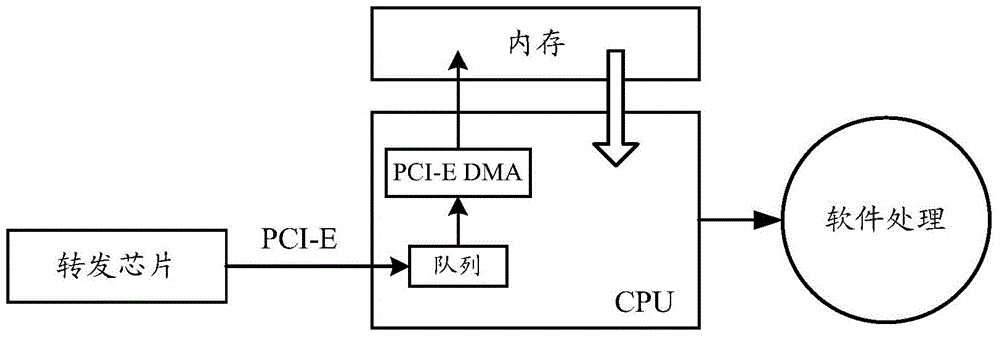

[0037] As an exemplary embodiment, the PCI-E DMA channel corresponding to the PCI-E port can be bridged with the network port DMA channel corresponding to the NA engine, so that messages from the forwarding chip are transmitted to the NA engine and preprocessed by it.
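One way to picture the bridged path in [0037] is a single buffer ring that the PCI-E DMA channel fills and the network port DMA channel drains, so packets reach the NA engine without the CPU copying them, which is presumably how the scheme avoids occupying CPU resources. The Python sketch below is a toy software model under that assumption, not the patent's implementation: `DmaBuffer`, `BridgedDmaRing`, `na_engine_preprocess`, the addresses and the buffer size are all hypothetical, and parsing the Ethernet header merely stands in for whatever preprocessing the NA engine actually performs.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class DmaBuffer:
    phys_addr: int  # hypothetical physical address of the buffer
    data: bytes = b""


class BridgedDmaRing:
    """One buffer ring shared by the PCI-E DMA channel (producer) and the
    network port DMA channel of the NA engine (consumer)."""

    def __init__(self, buffers):
        self.free = deque(buffers)  # empty buffers the PCI-E DMA may fill
        self.ready = deque()        # filled buffers awaiting the network port DMA

    def pcie_dma_write(self, packet: bytes) -> bool:
        """PCI-E DMA side: place a packet from the forwarding chip into a free buffer."""
        if not self.free:
            return False  # ring exhausted; real hardware would stall or drop
        buf = self.free.popleft()
        buf.data = packet
        self.ready.append(buf)
        return True

    def netport_dma_read(self):
        """Network port DMA side: take the next filled buffer for the NA engine."""
        return self.ready.popleft() if self.ready else None

    def release(self, buf: DmaBuffer) -> None:
        """Recycle a buffer once the NA engine has finished with it."""
        buf.data = b""
        self.free.append(buf)


def na_engine_preprocess(buf: DmaBuffer) -> dict:
    """Hypothetical preprocessing stand-in: parse the Ethernet II header."""
    frame = buf.data
    return {
        "dst_mac": frame[0:6].hex(":"),
        "src_mac": frame[6:12].hex(":"),
        "ethertype": int.from_bytes(frame[12:14], "big"),
        "phys_addr": buf.phys_addr,
    }


ring = BridgedDmaRing([DmaBuffer(0x1000_0000 + i * 2048) for i in range(4)])
frame = bytes.fromhex("ffffffffffff" "001122334455" "0800") + b"payload"
ring.pcie_dma_write(frame)
buf = ring.netport_dma_read()
info = na_engine_preprocess(buf)
ring.release(buf)
print(hex(info["ethertype"]))  # 0x800
```

The free/ready split mirrors the usual producer/consumer descriptor ring: the consumer hands buffers back with `release`, so a full ring exerts back-pressure on the PCI-E side instead of overwriting unprocessed packets.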

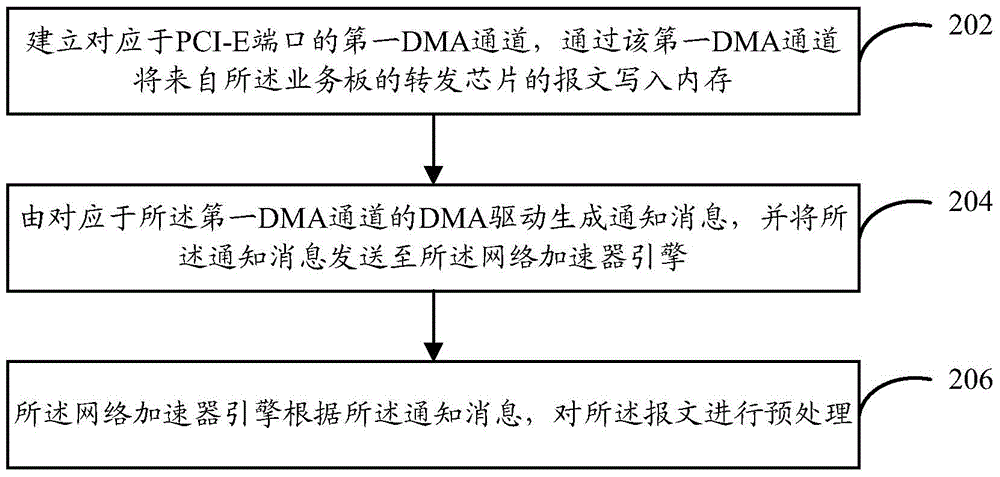

[0038] Specifically, Figure 4 shows a schematic diagram of preprocessing packets entering through the PCI-E port with the network accelerator (NA) engine, according to an embodiment of the present invention.

[0039] As shown in Figure 4, the process of preprocessing messages entering through the PCI-E port with the network accelerator engine, according to an embodiment of the present invention, includes:

[0040] (1) Chip configuration during initialization, which specifically includes: the DMA driver configures a corresponding physical memory address for the PCI-E DMA, and the network port driver configures a corresponding physical memory address for the network port DMA...
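Paragraph [0040] breaks off after the two address assignments, so the sketch below models only those two steps. It assumes, as one plausible reading of "bridging", that both drivers program the same physical buffers in the same order, so the PCI-E DMA's destination buffers are exactly the network port DMA's source buffers. `DmaChannel`, `allocate_region`, the driver-init functions, `is_bridged`, the base address and the 2048-byte buffer size are hypothetical illustrations, not taken from the patent.

```python
from dataclasses import dataclass, field


@dataclass
class DmaChannel:
    name: str
    buffer_addrs: list = field(default_factory=list)  # physical addresses programmed by a driver


def allocate_region(base: int, count: int, buf_size: int) -> list:
    """Hypothetical allocator: carve `count` equal buffers out of a contiguous region."""
    return [base + i * buf_size for i in range(count)]


def dma_driver_init(pcie_dma: DmaChannel, addrs: list) -> None:
    """DMA driver: configure the physical memory addresses used by the PCI-E DMA."""
    pcie_dma.buffer_addrs = list(addrs)


def netport_driver_init(netport_dma: DmaChannel, addrs: list) -> None:
    """Network port driver: configure the physical memory addresses used by the network port DMA."""
    netport_dma.buffer_addrs = list(addrs)


def is_bridged(a: DmaChannel, b: DmaChannel) -> bool:
    """Assumed bridging condition: both channels walk the same buffers in the same order."""
    return bool(a.buffer_addrs) and a.buffer_addrs == b.buffer_addrs


region = allocate_region(base=0x2000_0000, count=8, buf_size=2048)
pcie_dma = DmaChannel("pcie_dma")
netport_dma = DmaChannel("netport_dma")
dma_driver_init(pcie_dma, region)
netport_driver_init(netport_dma, region)
print(is_bridged(pcie_dma, netport_dma))  # True
```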

Embodiment 2

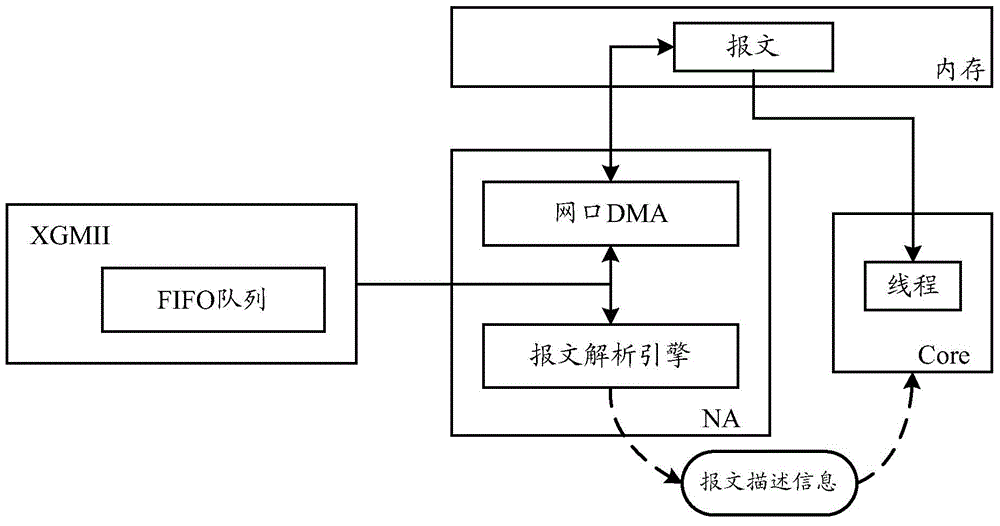

[0073] As another exemplary embodiment, after a message is transferred to memory through the PCI-E DMA channel, it can be added to a queue of the network port corresponding to the NA engine, such as the GMAC FIFO queue shown in Figure 3, so that when the message is resent through the network port, the NA engine preprocesses it.
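In this embodiment the packet first lands in memory and is then queued on the network port's GMAC FIFO; the NA engine sees it only when the port resends it. The toy Python model below makes that ordering explicit. `GmacFifo`, the FIFO depth, `run_pipeline`, the drain-one-packet-when-full policy and `na_engine_preprocess` (which just reads the EtherType) are hypothetical choices for illustration; the patent text here does not say how a full GMAC FIFO is handled.

```python
from collections import deque


class GmacFifo:
    """Toy model of the GMAC FIFO of the network port that feeds the NA engine."""

    def __init__(self, depth: int):
        if depth < 1:
            raise ValueError("FIFO depth must be at least 1")
        self.depth = depth  # hypothetical FIFO depth, counted in packets
        self.q = deque()

    def enqueue(self, packet: bytes) -> bool:
        """Queue a packet for resending; returns False when the FIFO is full."""
        if len(self.q) >= self.depth:
            return False
        self.q.append(packet)
        return True

    def transmit(self):
        """Resend the oldest queued packet through the network port (None if empty)."""
        return self.q.popleft() if self.q else None


def na_engine_preprocess(packet: bytes) -> int:
    """Hypothetical preprocessing stand-in: extract the EtherType field."""
    return int.from_bytes(packet[12:14], "big")


def run_pipeline(packets, fifo_depth: int = 4) -> list:
    """PCI-E DMA -> memory -> GMAC FIFO -> network port -> NA engine."""
    memory = []  # stands in for the memory the PCI-E DMA writes into
    fifo = GmacFifo(fifo_depth)
    results = []
    for pkt in packets:
        memory.append(pkt)  # PCI-E DMA transfer into memory
        while not fifo.enqueue(memory[-1]):
            # FIFO full (assumed policy): let the port resend one packet first
            results.append(na_engine_preprocess(fifo.transmit()))
    while (sent := fifo.transmit()) is not None:
        results.append(na_engine_preprocess(sent))
    return results


frames = [bytes(12) + t.to_bytes(2, "big") for t in (0x0800, 0x86DD, 0x0806)]
print([hex(t) for t in run_pipeline(frames, fifo_depth=2)])  # ['0x800', '0x86dd', '0x806']
```

Because the GMAC FIFO is first-in, first-out, the NA engine processes packets in the order the PCI-E DMA delivered them, regardless of the FIFO depth.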

[0074] Specifically, Figure 6 shows a schematic diagram of preprocessing packets entering through the PCI-E port with the network accelerator engine, according to another embodiment of the present invention.

[0075] As shown in Figure 6, according to another embodiment of the present invention, the process of preprocessing messages entering through the PCI-E port with the network accelerator engine includes:

[0076] (1) Chip configuration during initialization, which specifically includes: 1) the DMA driver configures the corresponding physical memory address for the PCI-E DMA, and the network port driver conf...
