84 results about "Web accelerator" patented technology

A web accelerator is a proxy server that reduces website access time. It can be a self-contained hardware appliance or installable software. Web accelerators may be installed on the client computer or mobile device, on ISP servers, on the server computer or network, or on a combination of these. Accelerating delivery through compression requires some type of host-based server to collect, compress and then deliver content to a client computer.
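As a rough illustration of the compression step described above, the following Python sketch shows a proxy-side helper that compresses a response body when the client advertises gzip support. The function name `accelerate` and the sample page are assumptions made for this example, not part of any listed patent.

```python
import gzip

def accelerate(body: bytes, accept_encoding: str):
    """Return (body, extra_headers); compress only if the client accepts gzip."""
    if "gzip" in accept_encoding.lower():
        return gzip.compress(body), {"Content-Encoding": "gzip"}
    # Client did not advertise gzip: deliver the content unchanged.
    return body, {}

# Hypothetical page with a lot of repeated markup, which compresses well.
page = b"<html>" + b"<p>hello web accelerator</p>" * 200 + b"</html>"

compressed, headers = accelerate(page, "gzip, deflate")
restored = gzip.decompress(compressed)  # what the client would do on receipt
```

The saving depends on how repetitive the content is; already-compressed formats such as JPEG gain little from this step.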

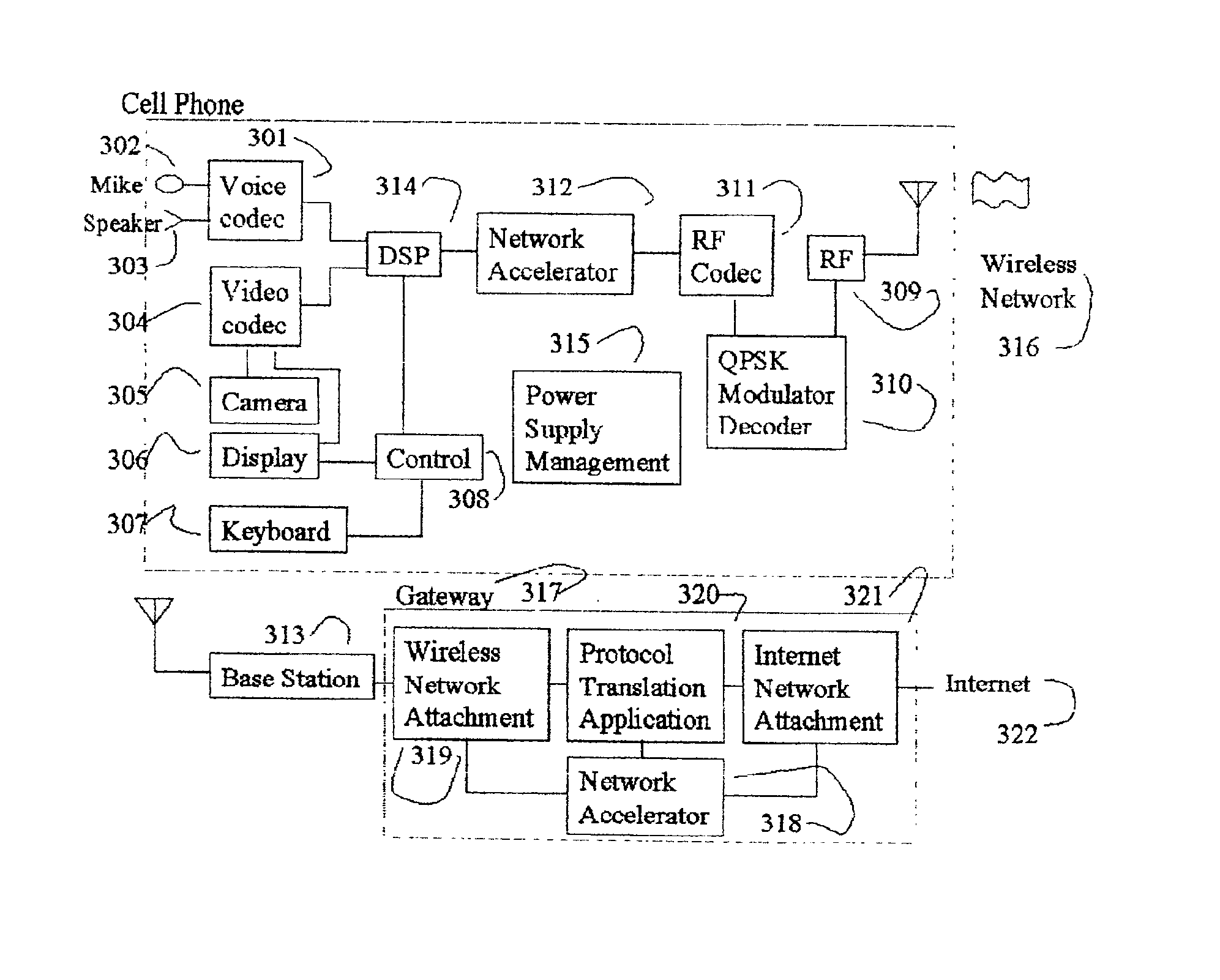

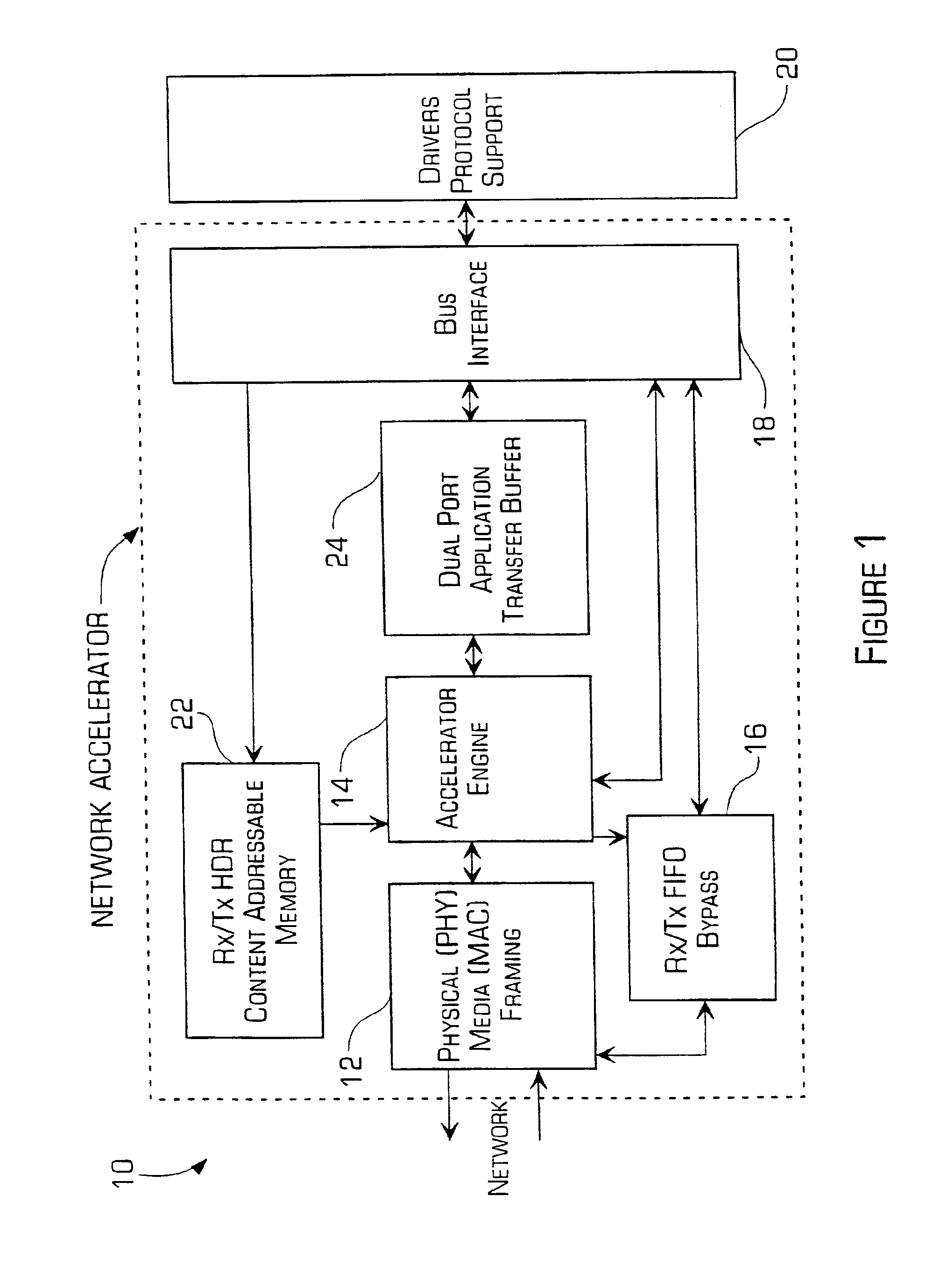

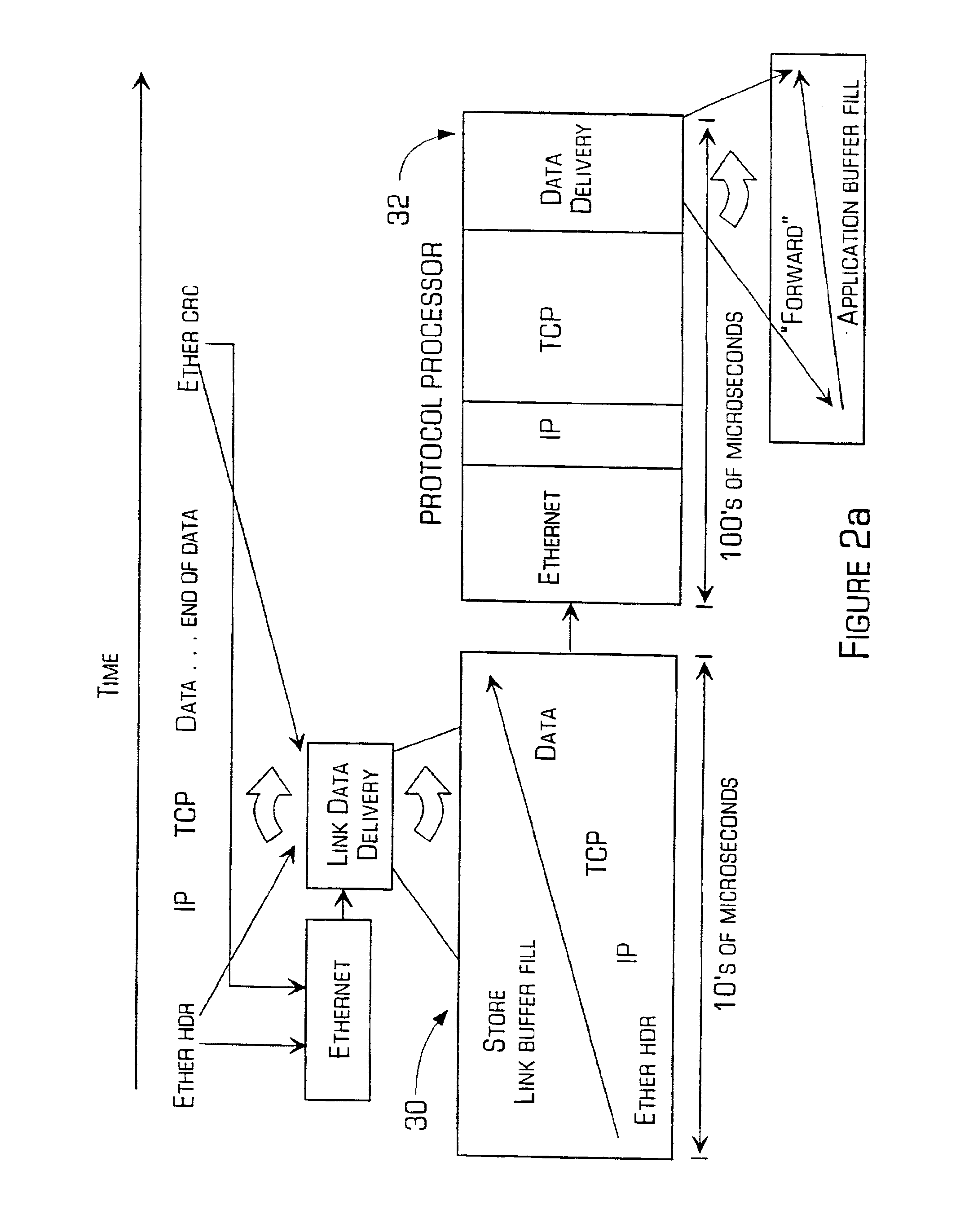

Accelerator system and method

Inactive · US20010004354A1 · Improve throughput · Faster and less-expensive to construct · Time-division multiplex · Radio/inductive link selection arrangements · Transmission protocol · Computer hardware

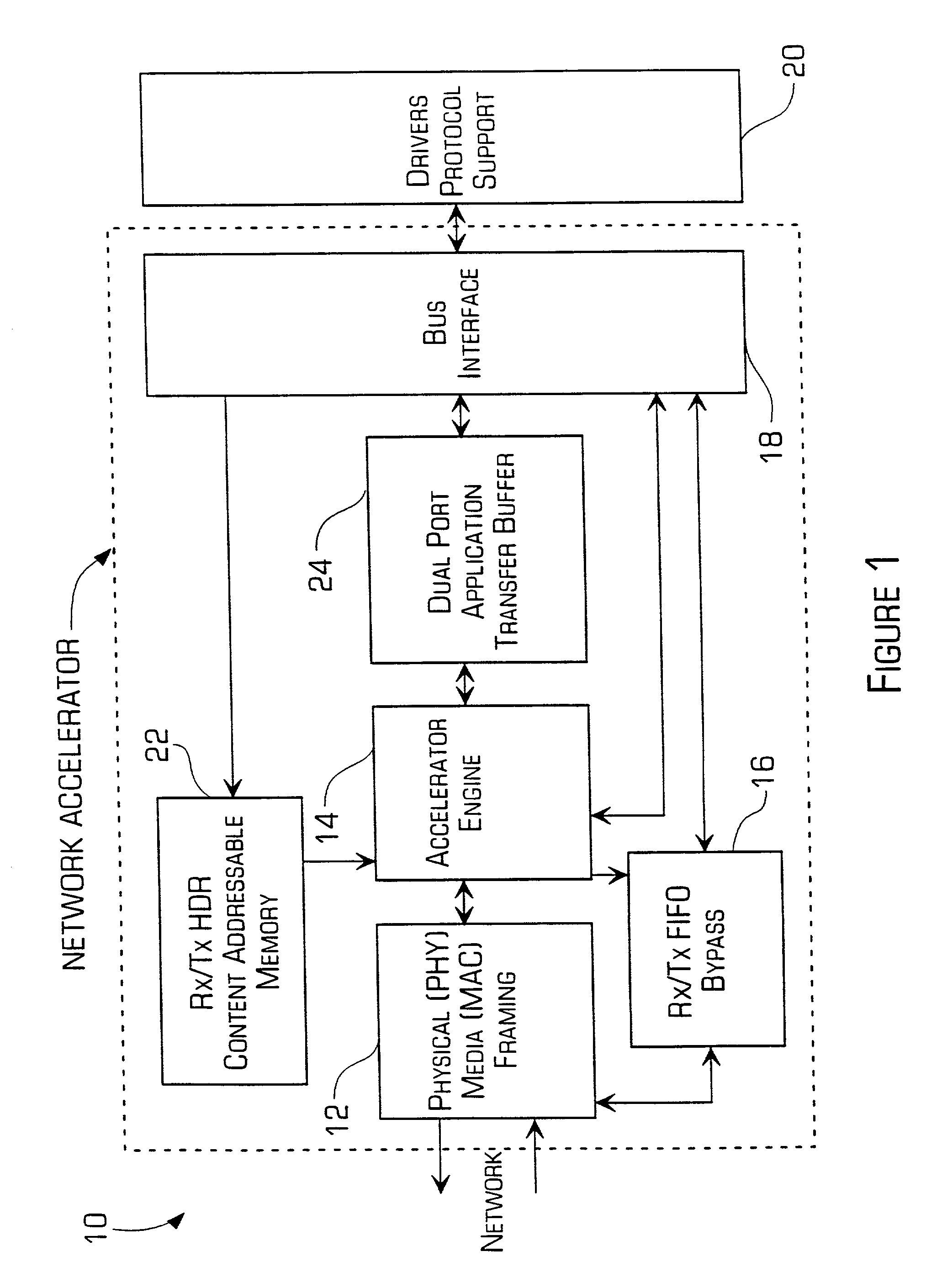

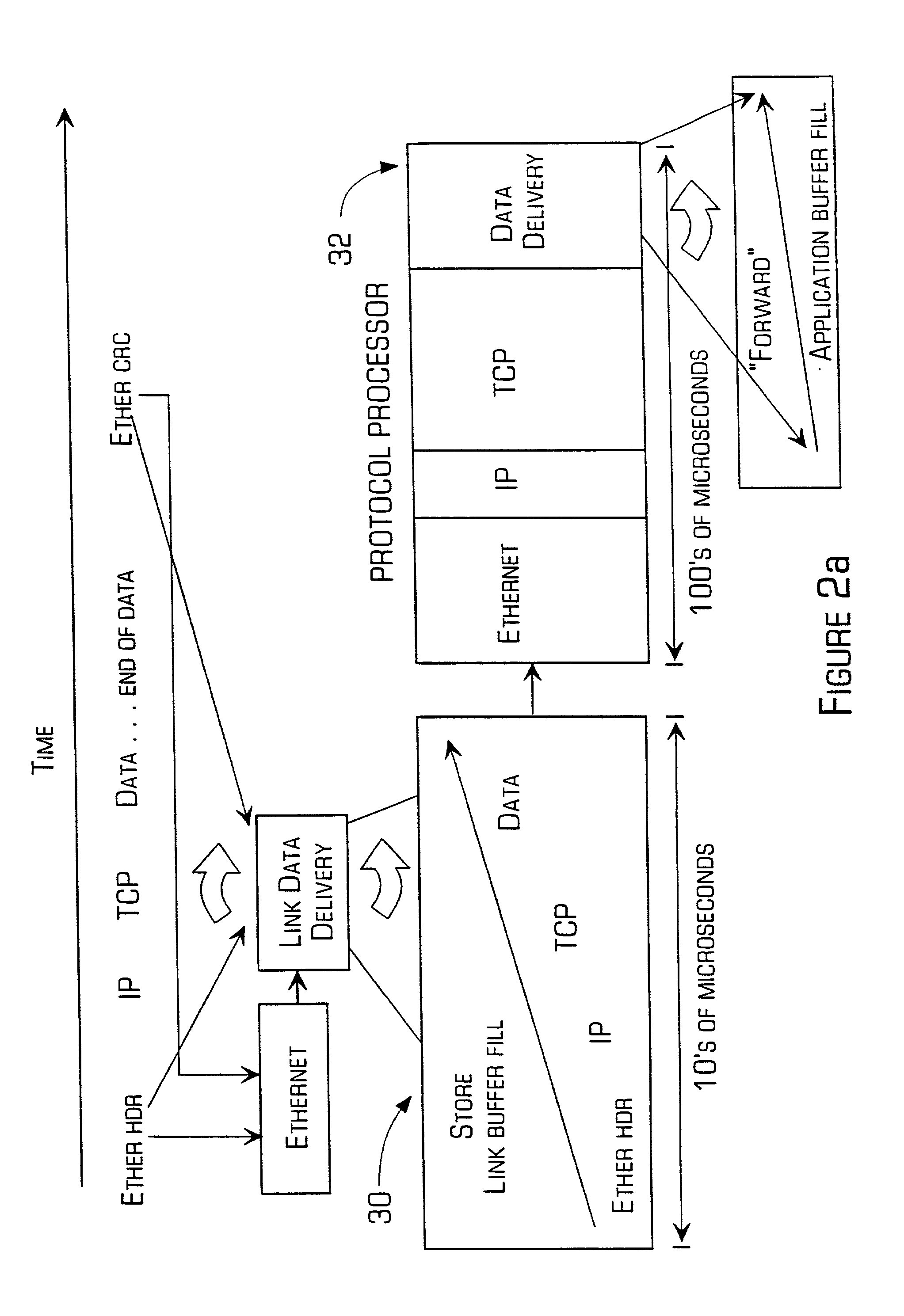

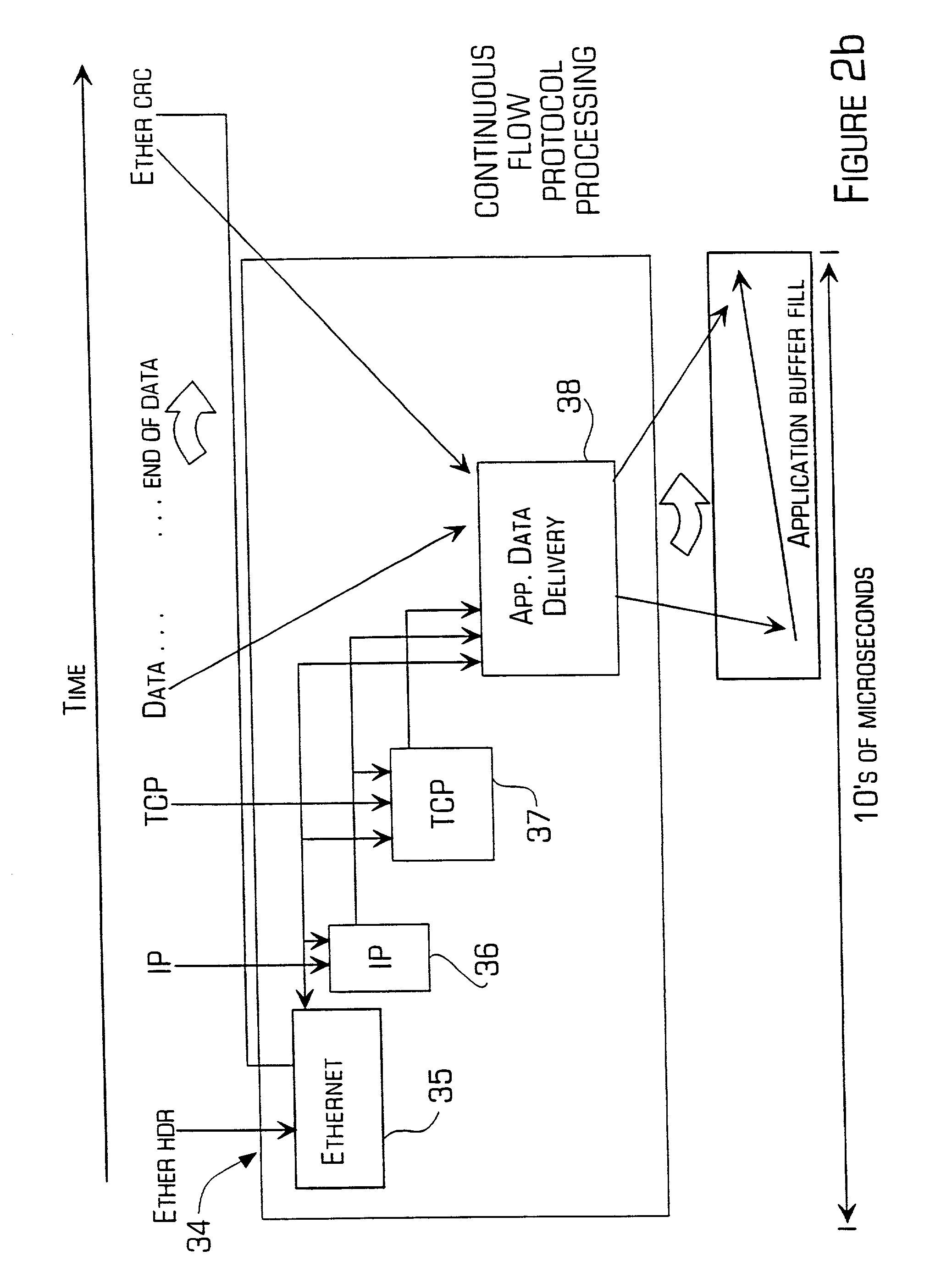

A network accelerator for TCP / IP includes programmable logic for performing transparent protocol translation of streamed protocols for audio / video at network signaling rates. The programmable logic is configured in a parallel pipelined architecture controlled by state machines and implements processing for predictable patterns of the majority of transmissions which are stored in a content addressable memory, and are simultaneously stored in a dual port, dual bank application memory. The invention allows raw Internet protocol communications by cell phones, and cell phone to Internet gateway high capacity transfer that scales independent of a translation application, by processing packet headers in parallel and during memory transfers without the necessity of conventional store and forward techniques.

Owner:JOLITZ LYNNE G

Accelerating network performance by striping and parallelization of TCP connections

Inactive · US20050025150A1 · Improve performance · Reduce need · Error prevention · Transmission systems · Transmission protocol · Network packet

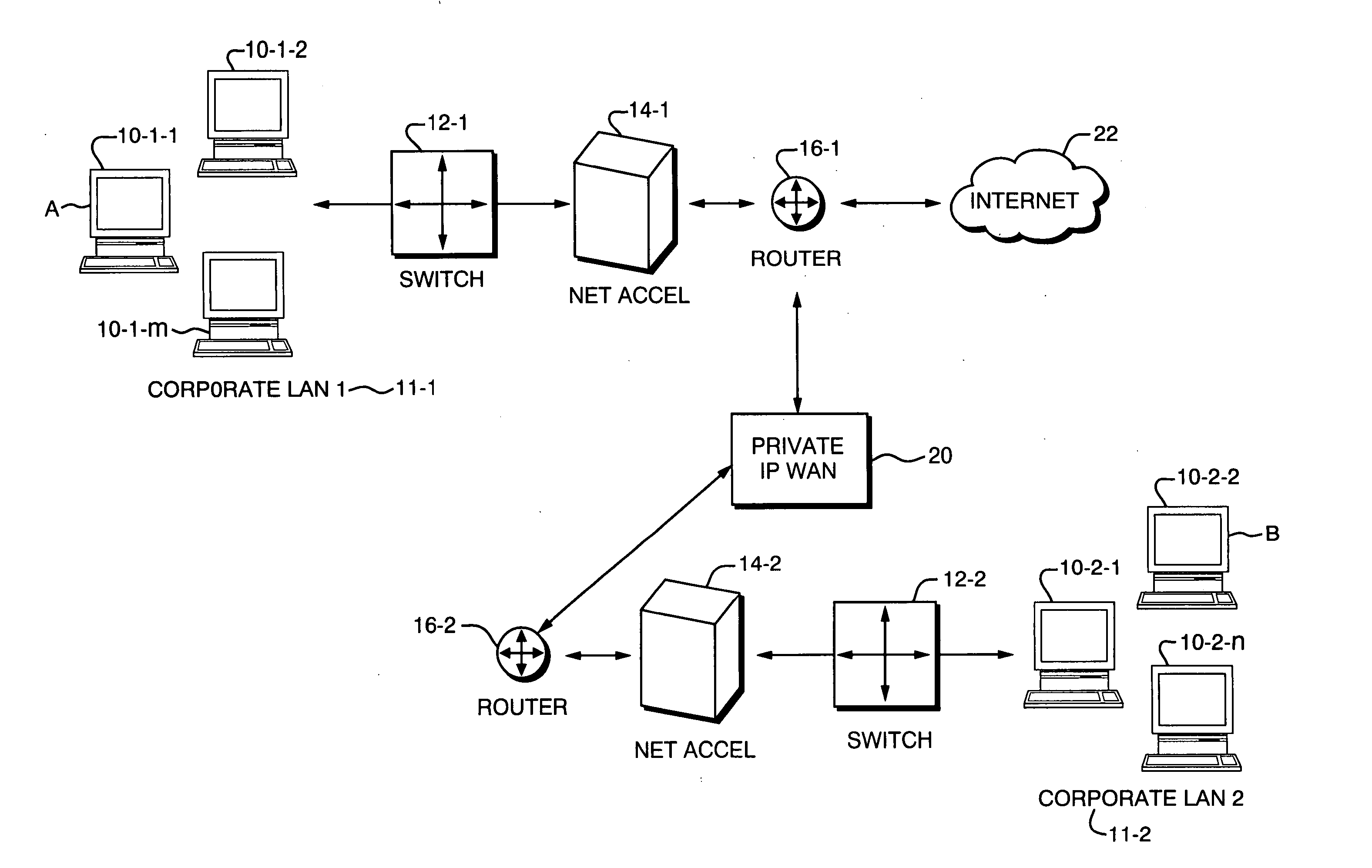

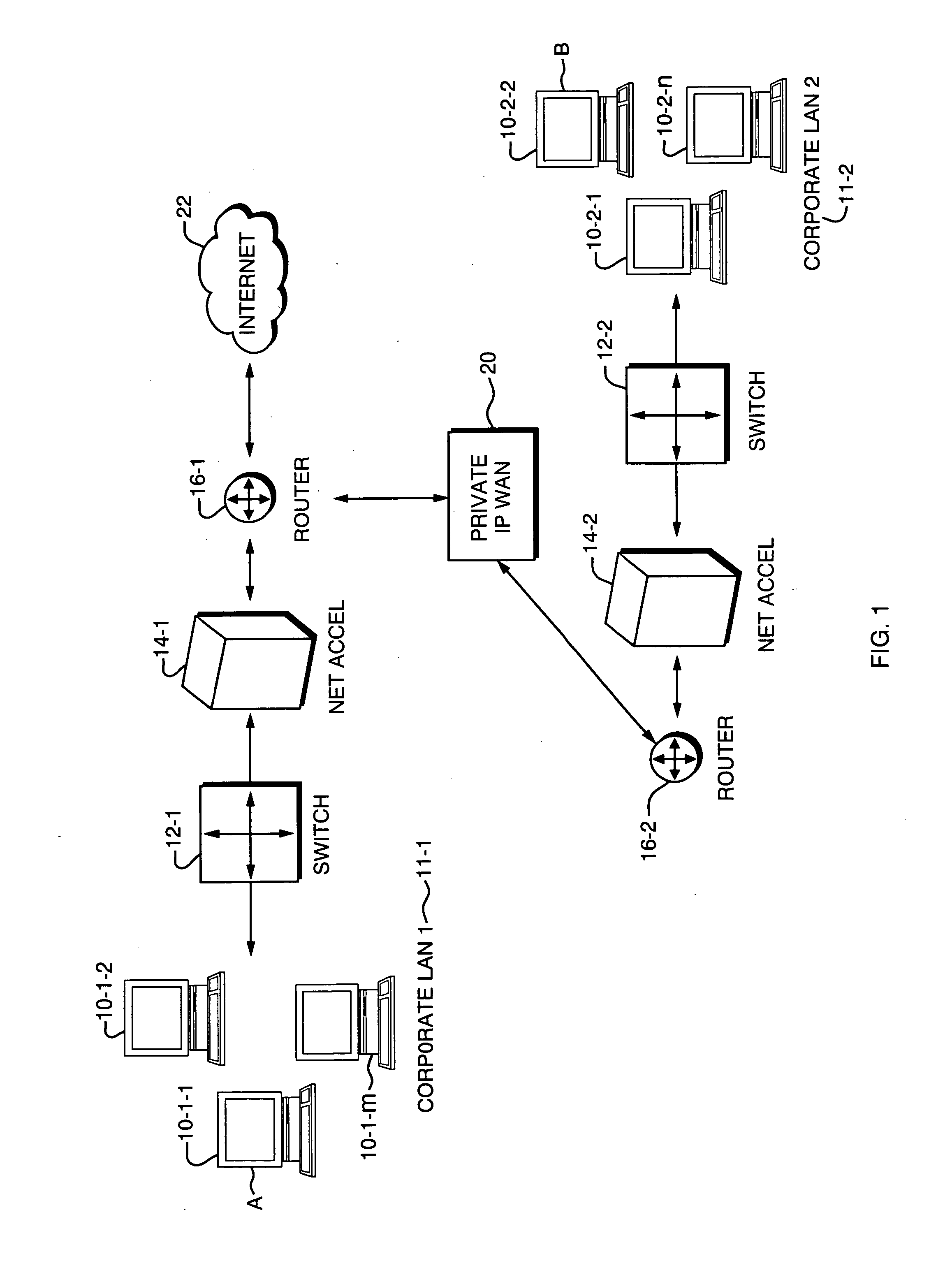

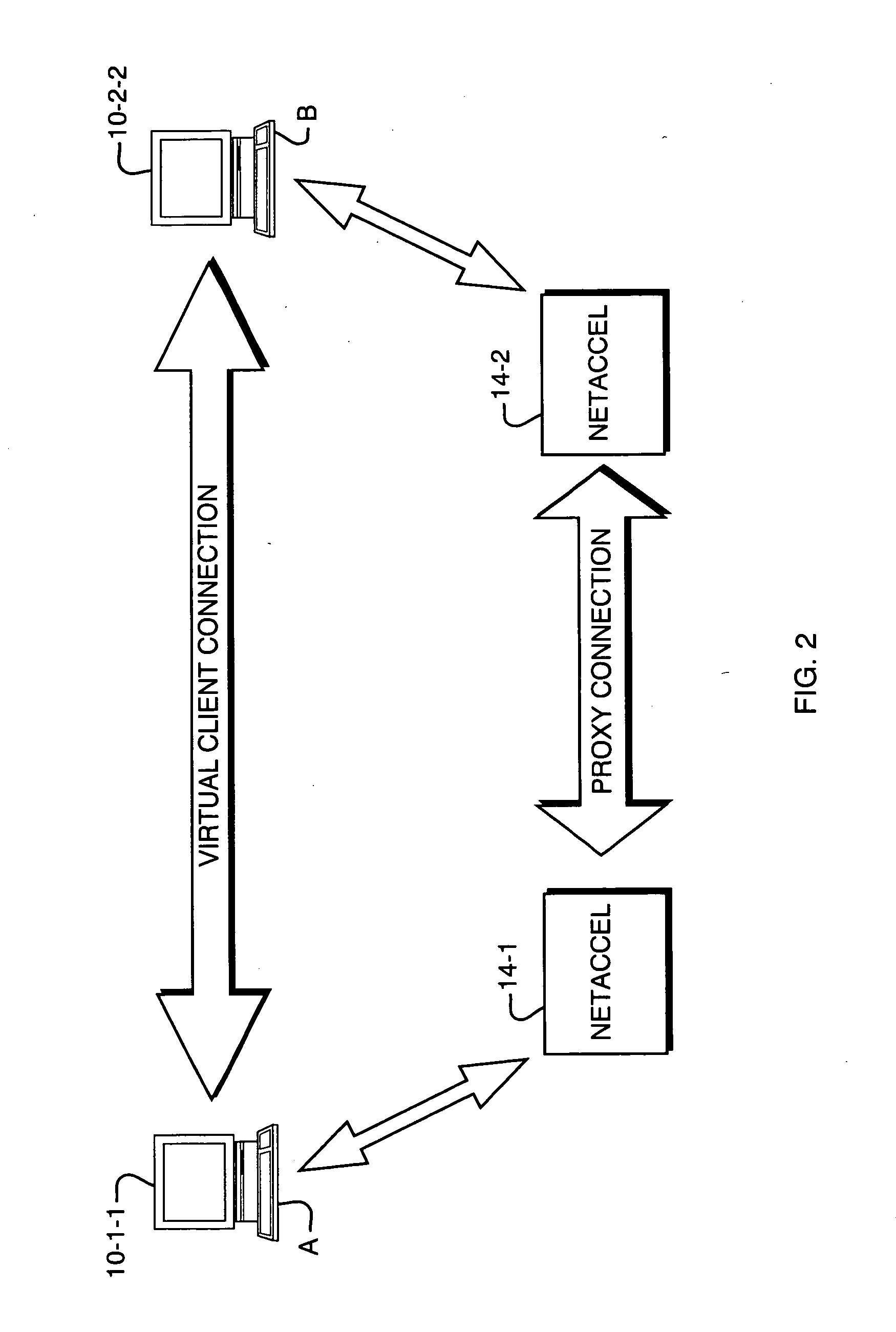

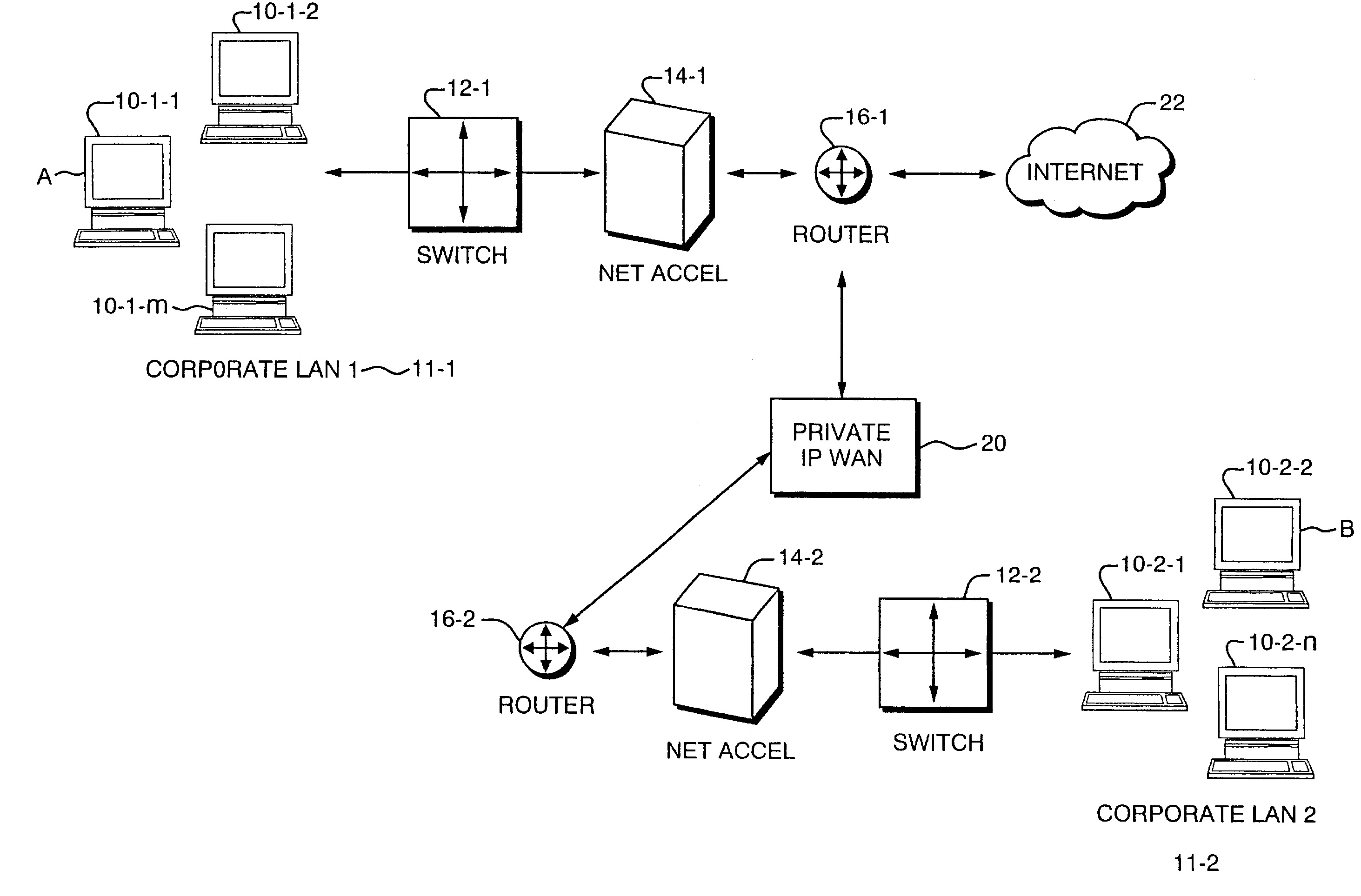

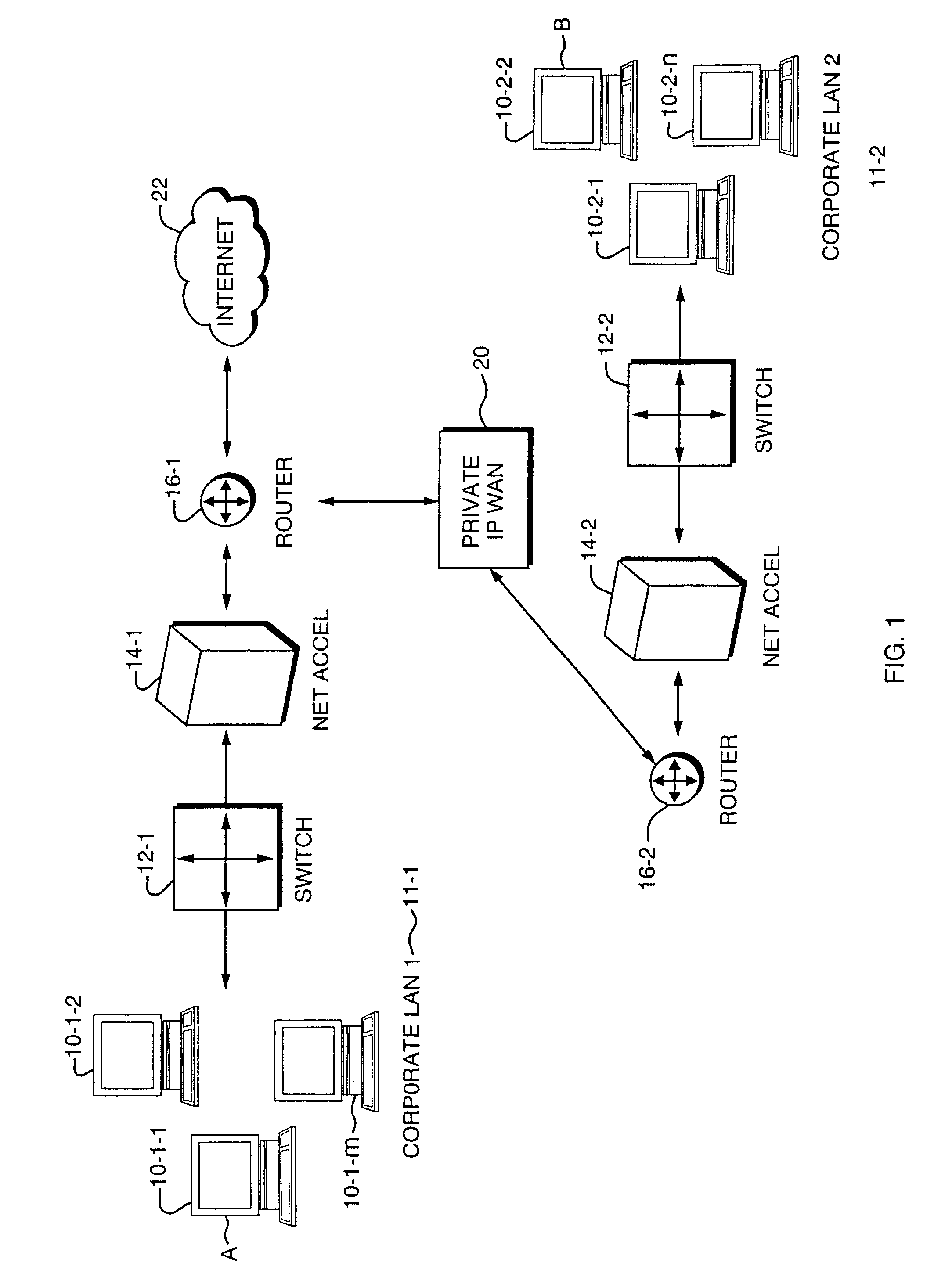

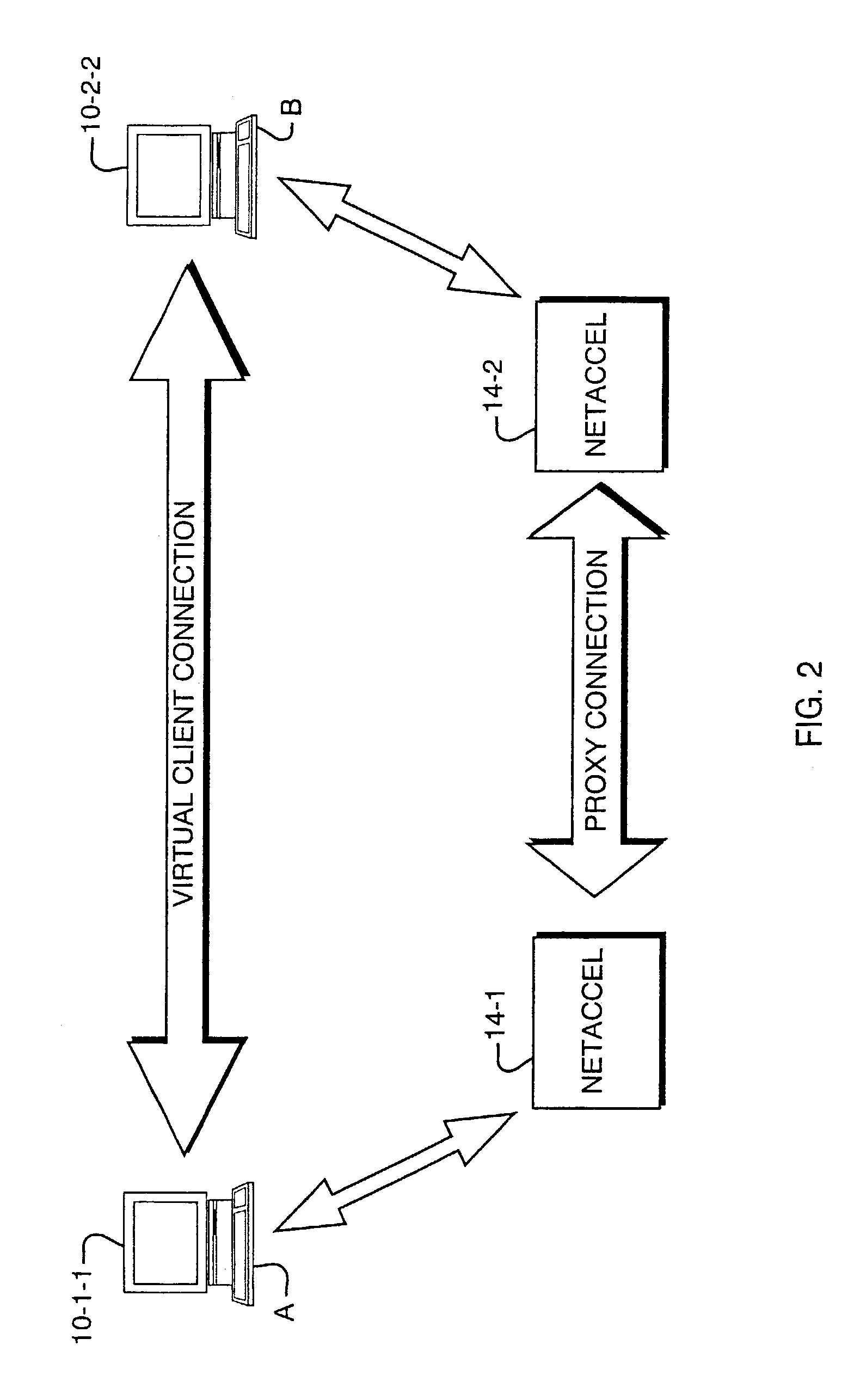

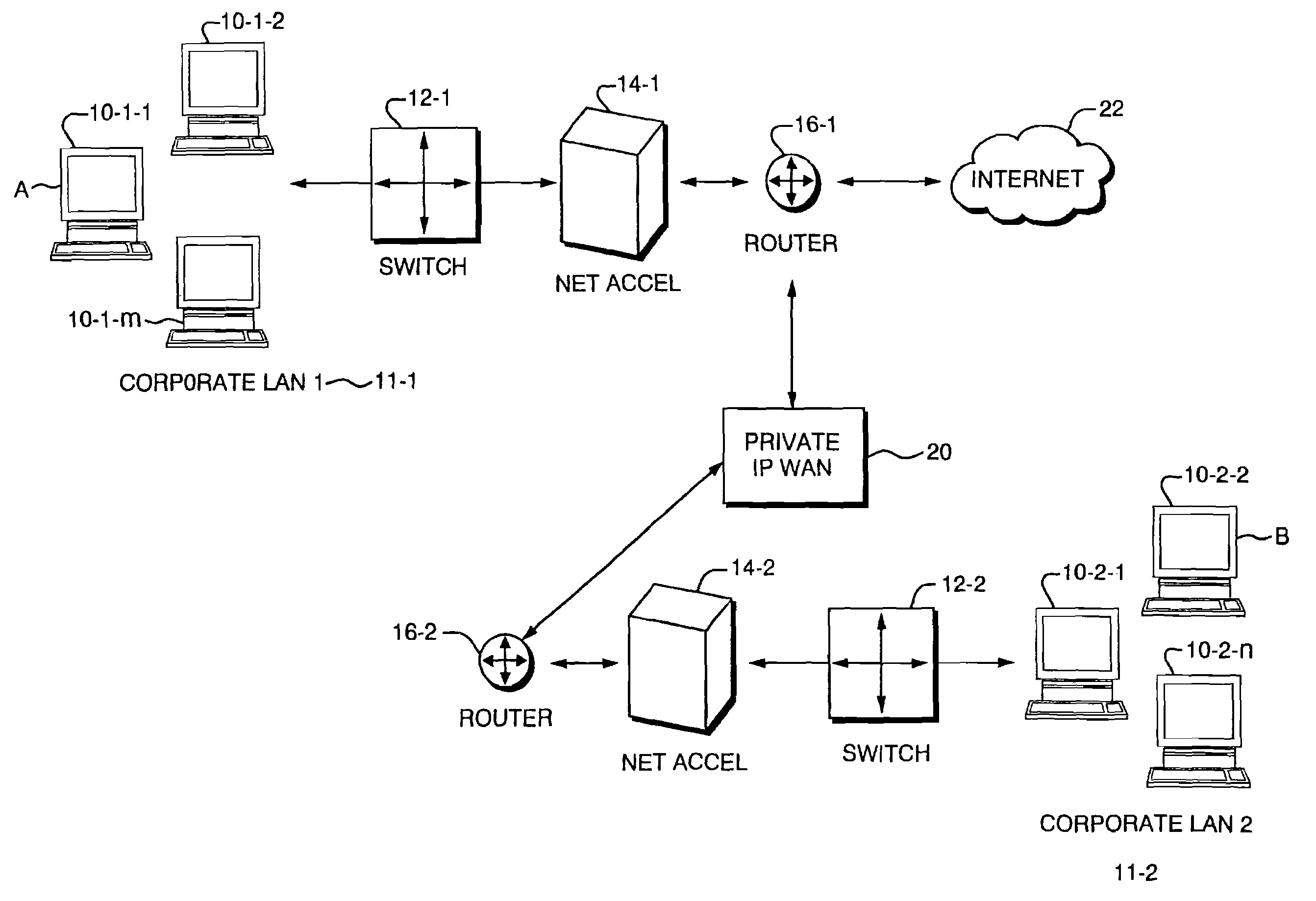

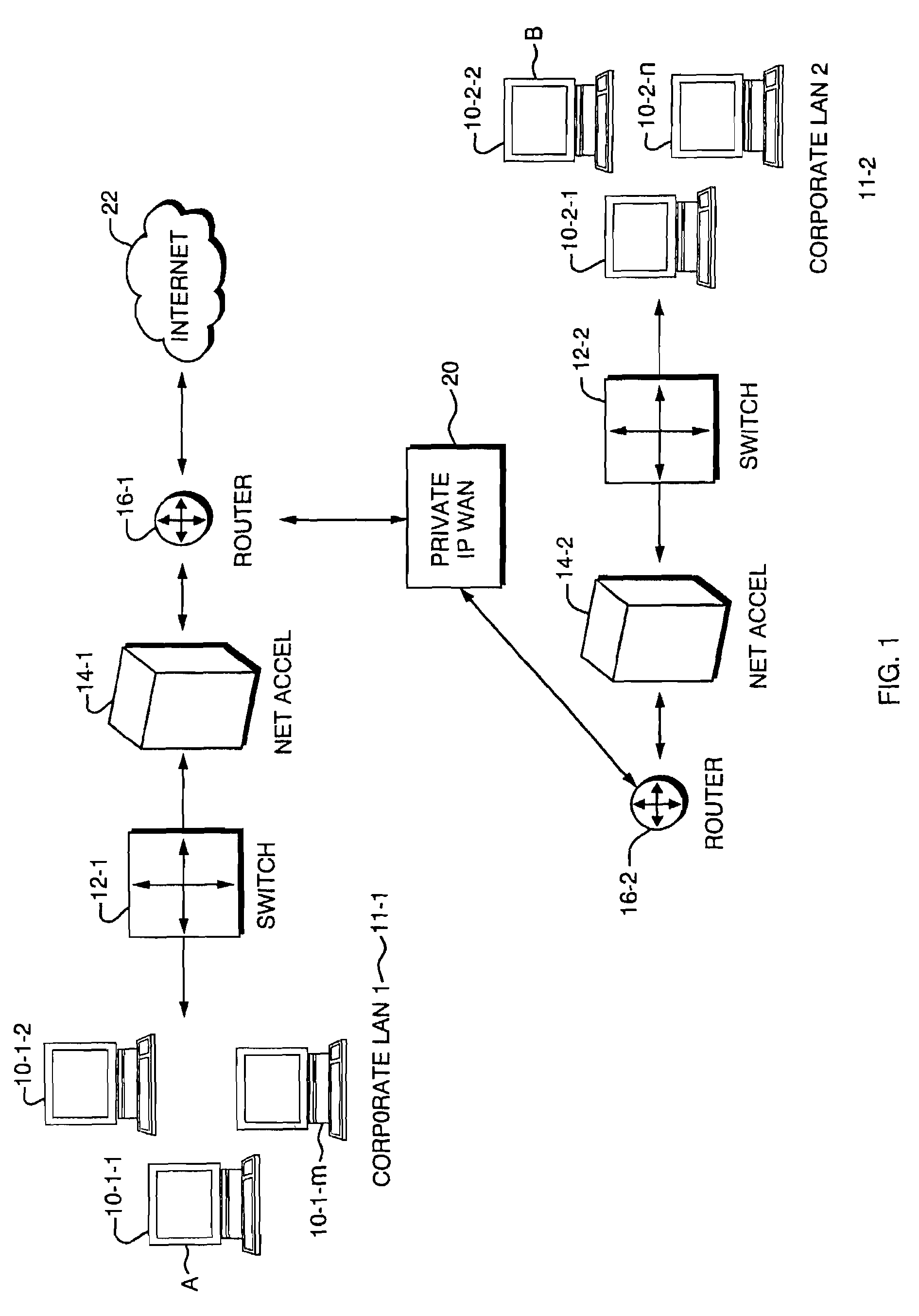

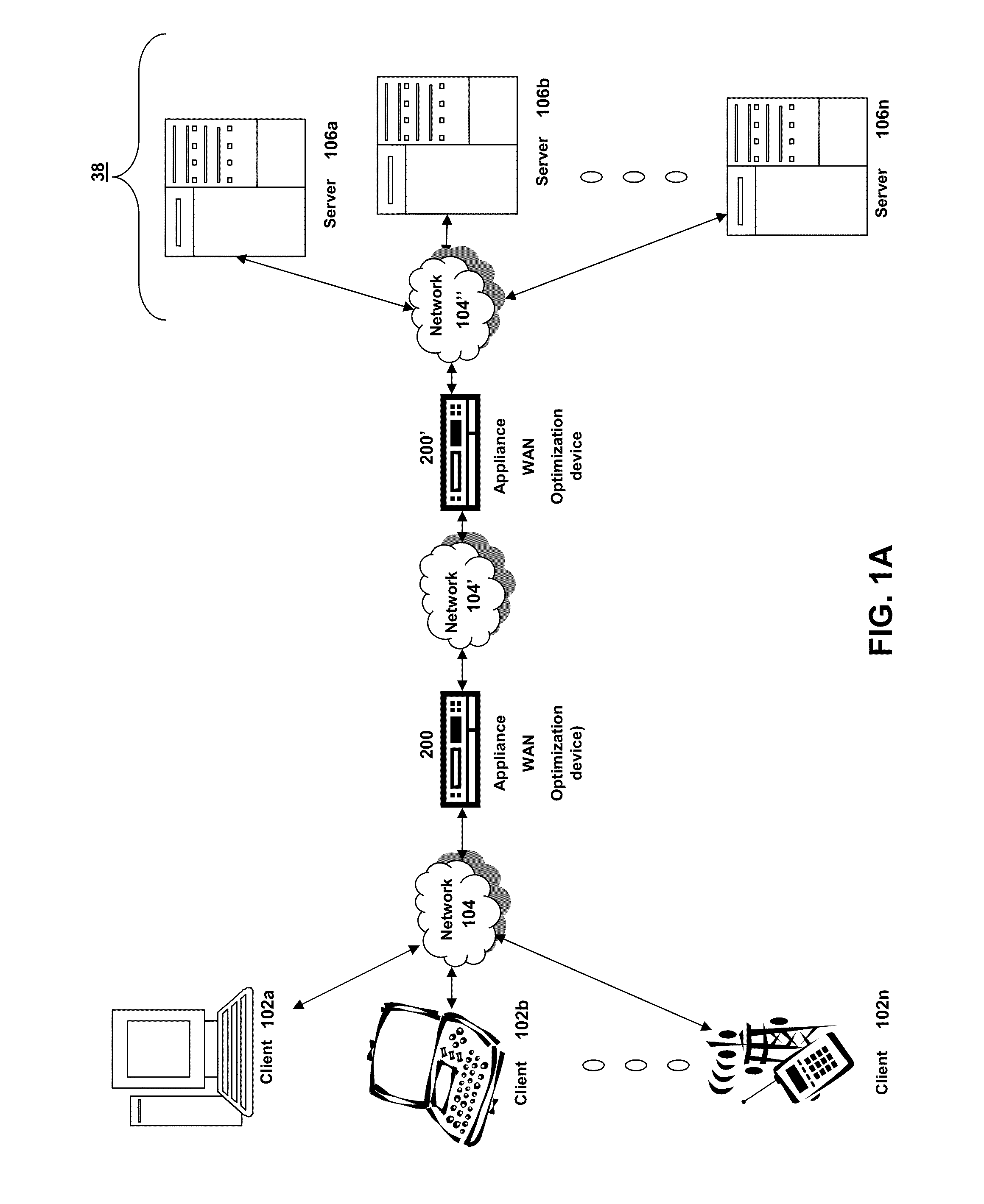

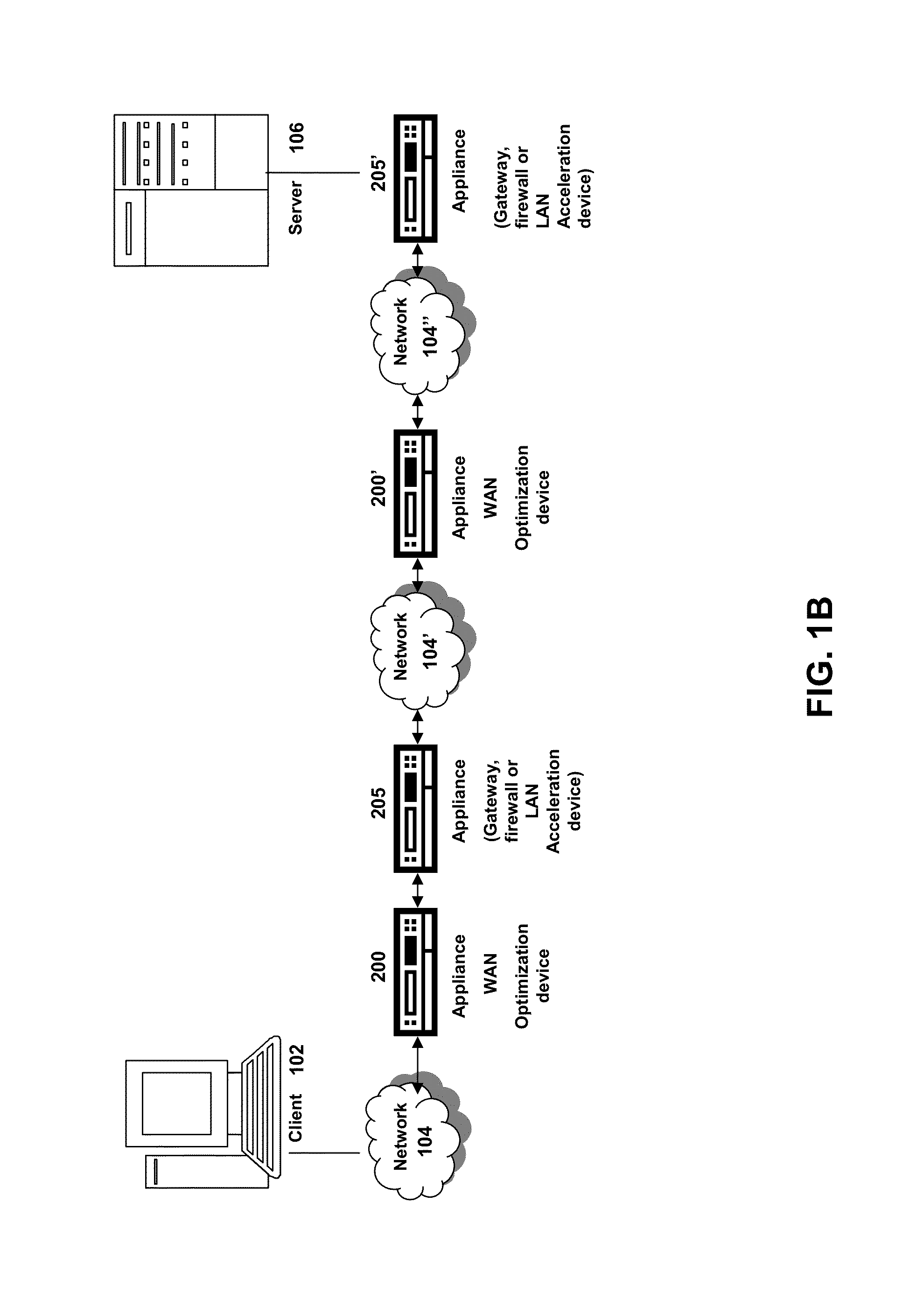

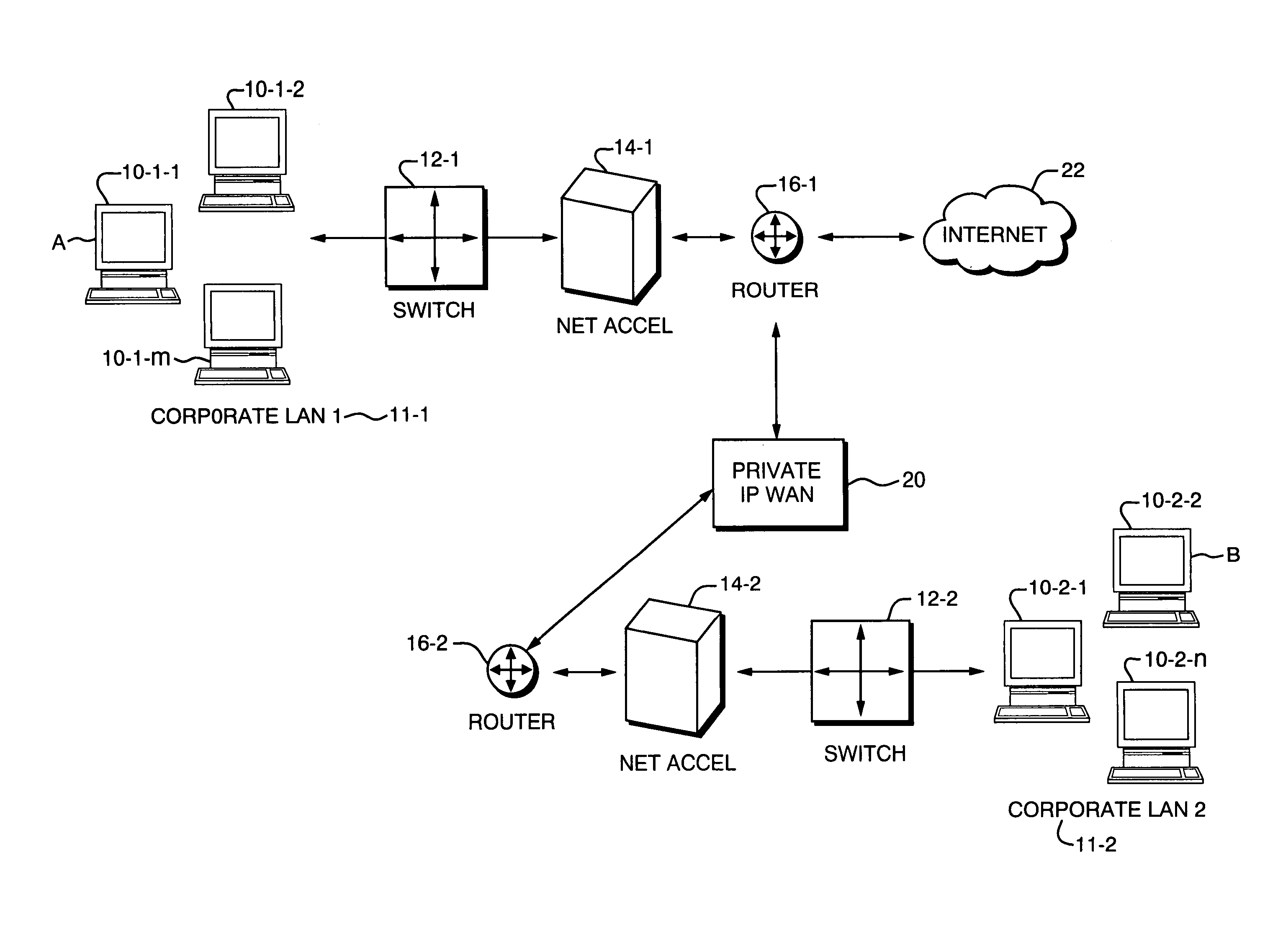

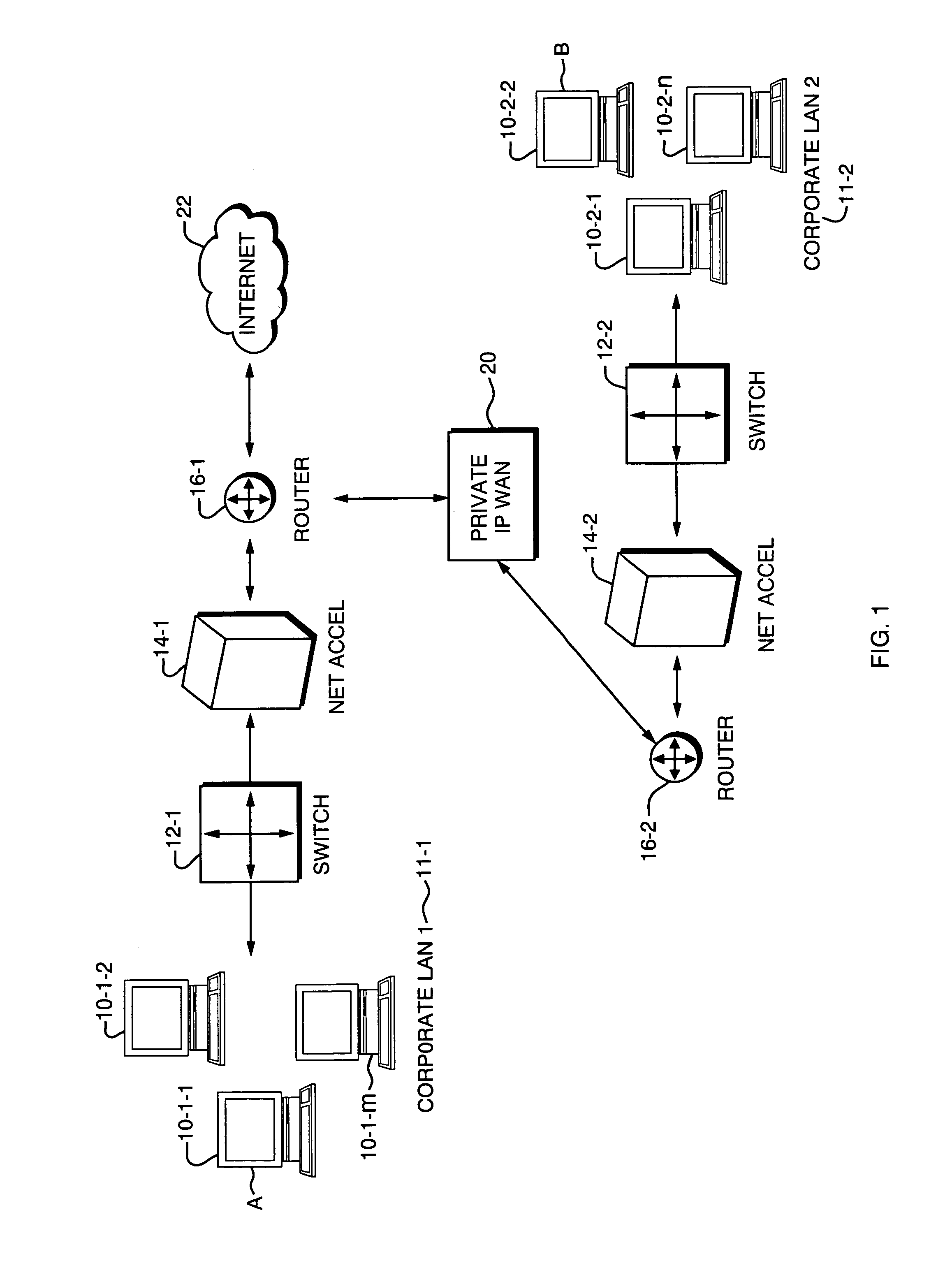

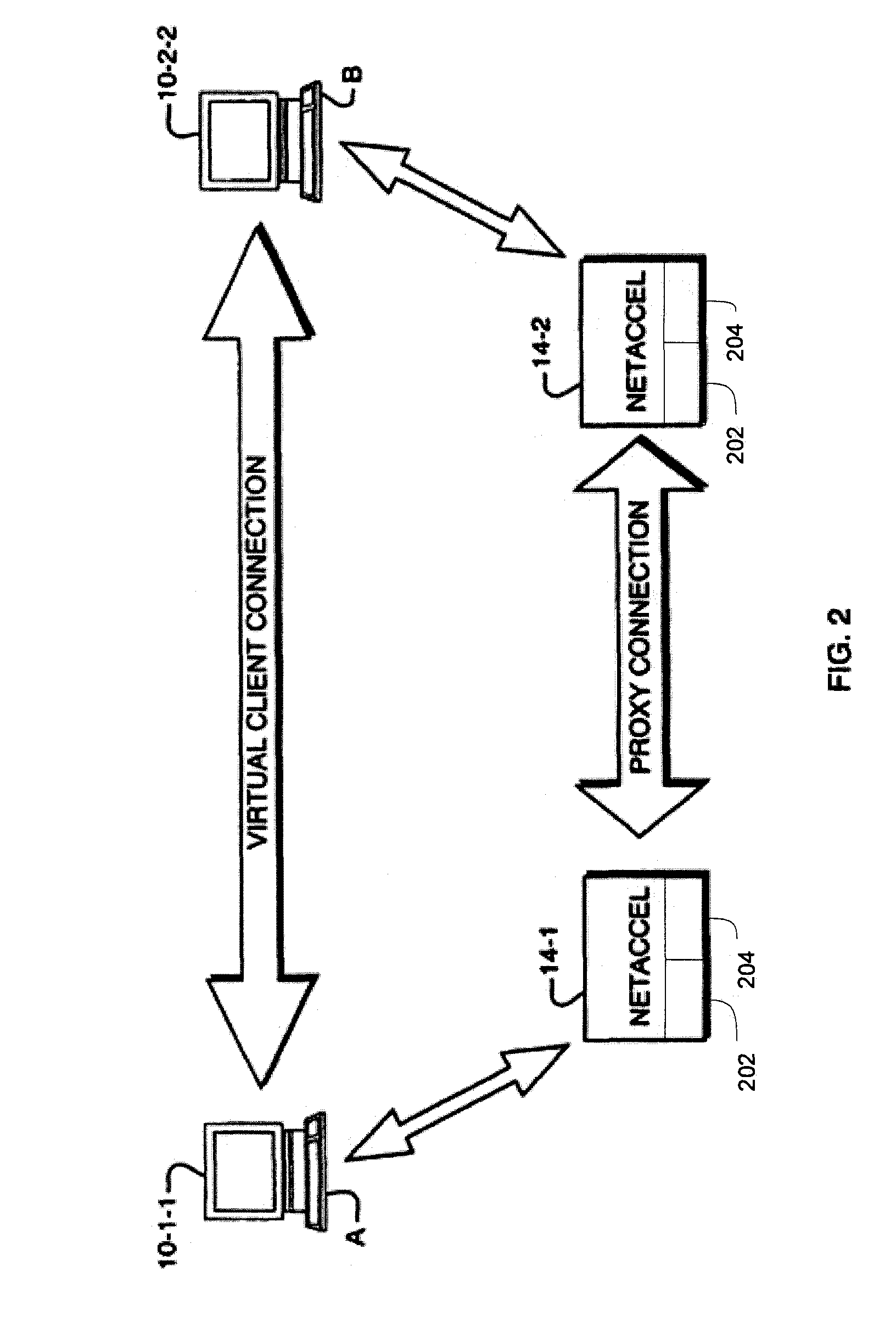

An architecture for optimizing network communications that utilizes a device positioned at two edges of a constrained Wide Area Network (WAN) link. The device intercepts outgoing network packets and reroutes them to a proxy application. The proxy application uses multiple, preferably persistent connections with a network accelerator device at the other end of the persistent connection. The proxy applications transmit the intercepted data. Packet mangling may involve spoofing the connection request at each end node; a proxy-to-proxy communication protocol specifies a way to forward an original address, port, and original transport protocol information end to end. The packet mangling and proxy-to-proxy communication protocol assure network layer transparency.

Owner:ITWORX EGYPT +1
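The idea of striping one data stream across multiple proxy-to-proxy connections can be sketched in Python as follows. This is a minimal in-memory simulation under stated assumptions: the round-robin policy, the `(sequence number, payload)` segment format, and the function names are hypothetical and are not taken from the patent.

```python
from typing import List, Tuple

Segment = Tuple[int, bytes]  # (sequence number, payload), an assumed wire format

def stripe(data: bytes, n_connections: int, chunk_size: int) -> List[List[Segment]]:
    """Split data into chunks and spread them round-robin over the connections."""
    queues: List[List[Segment]] = [[] for _ in range(n_connections)]
    for seq, start in enumerate(range(0, len(data), chunk_size)):
        queues[seq % n_connections].append((seq, data[start:start + chunk_size]))
    return queues

def reassemble(queues: List[List[Segment]]) -> bytes:
    """Merge segments from all connections back into the original byte order."""
    segments = [seg for queue in queues for seg in queue]
    return b"".join(payload for _, payload in sorted(segments))

data = bytes(range(256)) * 4            # 1024 bytes of sample traffic
queues = stripe(data, n_connections=4, chunk_size=100)
```

The sequence numbers let the far-end proxy restore ordering even when the parallel connections deliver their segments at different rates.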

Architecture for efficient utilization and optimum performance of a network

Inactive · US7126955B2 · Save bandwidth · Improve performance · Time-division multiplex · Data switching by path configuration · Network packet · Network communication

An architecture for optimizing network communications that utilizes a device positioned at two edges of a constrained Wide Area Network (WAN) link. The device intercepts outgoing network packets and reroutes them to a proxy application. The proxy application uses persistent connections with a network accelerator device at the other end of the persistent connection. The proxy applications transmit the intercepted data after compressing it using a dictionary-based compression algorithm. Packet mangling may involve spoofing the connection request at each end node; a proxy-to-proxy communication protocol specifies a way to forward an original address, port, and original transport protocol information end to end. The packet mangling and proxy-to-proxy communication protocol assure network transparency.

Owner:ITWORX EGYPT +1
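Dictionary-based compression between two cooperating proxies can be illustrated with the preset-dictionary feature of Python's `zlib` module. The patent does not name a specific algorithm, so DEFLATE with a shared dictionary is used here only as a stand-in; `SHARED_DICT` and the helper names are assumptions.

```python
import zlib

# Assumed dictionary of byte strings both proxies expect to see often.
SHARED_DICT = b"GET / HTTP/1.1\r\nHost: \r\nUser-Agent: \r\nAccept: text/html\r\n"

def compress_with_dict(payload: bytes, zdict: bytes = SHARED_DICT) -> bytes:
    """Compress on the sending proxy using the shared dictionary."""
    compressor = zlib.compressobj(zdict=zdict)
    return compressor.compress(payload) + compressor.flush()

def decompress_with_dict(blob: bytes, zdict: bytes = SHARED_DICT) -> bytes:
    """Decompress on the receiving proxy; it must hold the same dictionary."""
    decompressor = zlib.decompressobj(zdict=zdict)
    return decompressor.decompress(blob) + decompressor.flush()

request = (b"GET / HTTP/1.1\r\nHost: example.org\r\n"
           b"User-Agent: demo\r\nAccept: text/html\r\n\r\n")
blob = compress_with_dict(request)
```

A shared dictionary mainly helps short messages, where a compressor without one has too little history to find repeated strings.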

Architecture for efficient utilization and optimum performance of a network

Inactive · US7639700B1 · Save bandwidth · Assure transparency · Time-division multiplex · Data switching by path configuration · Network packet · Network communication

Owner:F5 NETWORKS INC

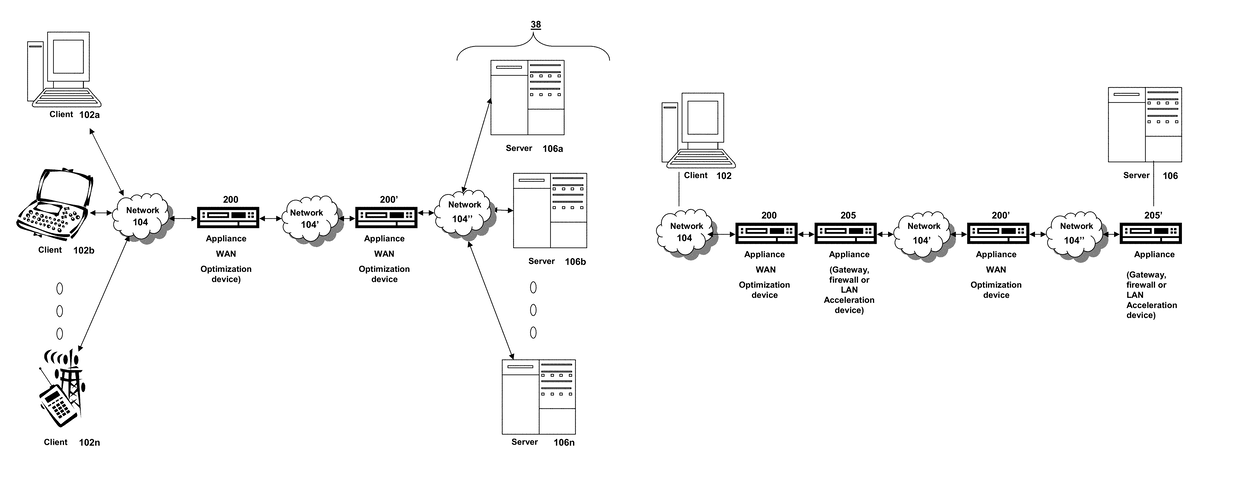

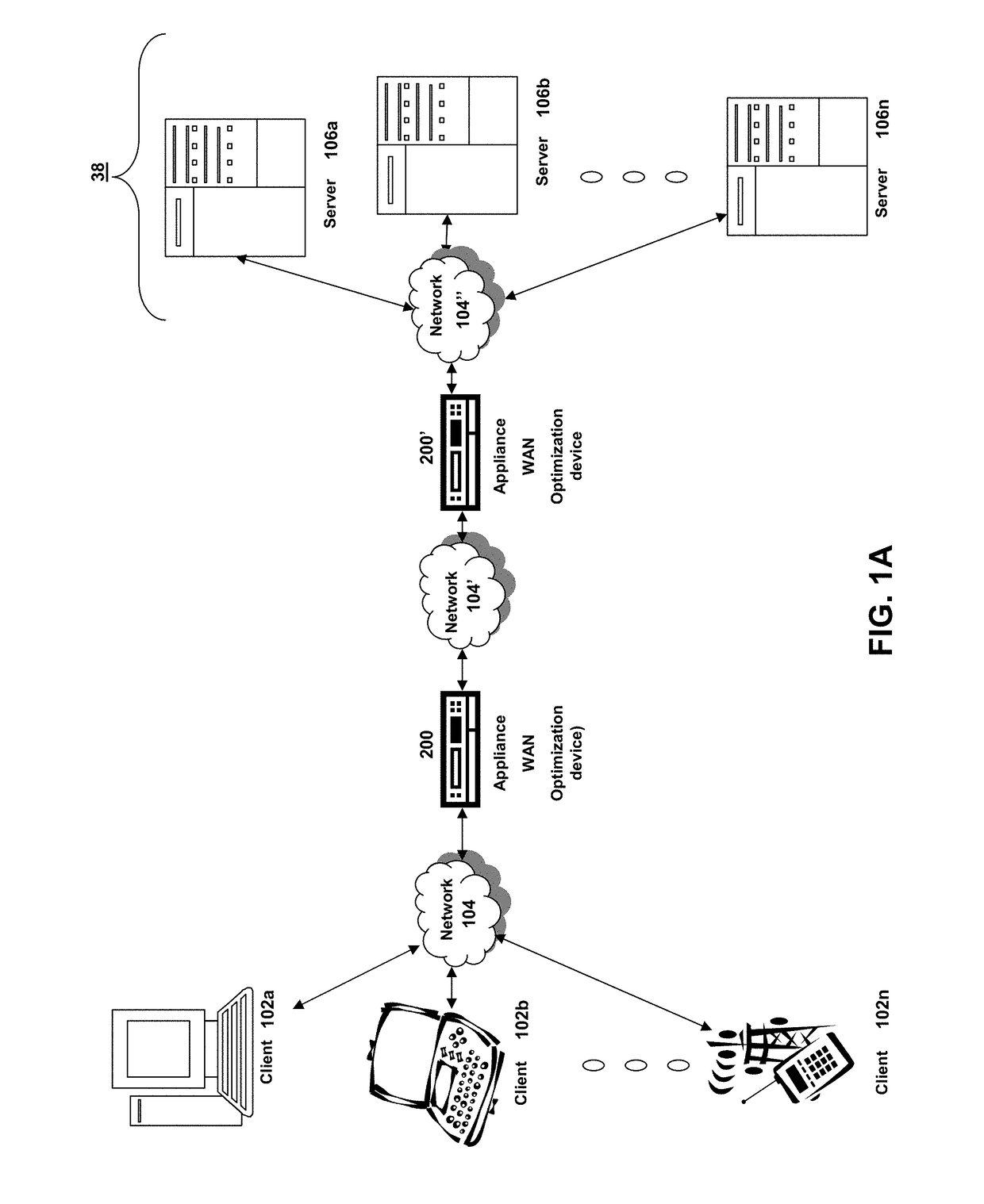

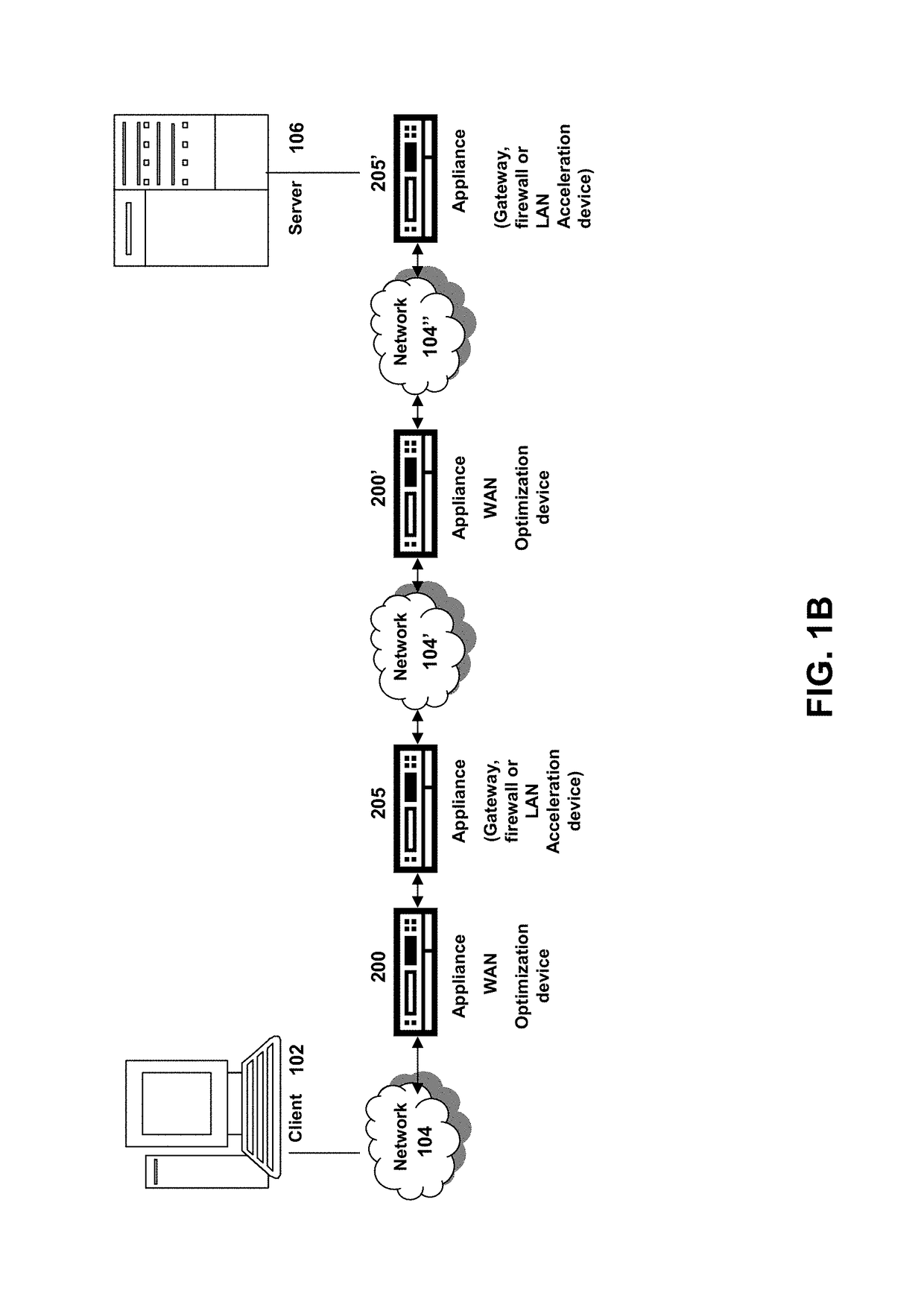

Systems and methods for dynamic adaptation of network accelerators

Active · US20140101306A1 · Effectiveness will vary · Digital computer details · Transmission · WAN optimization · Resource based

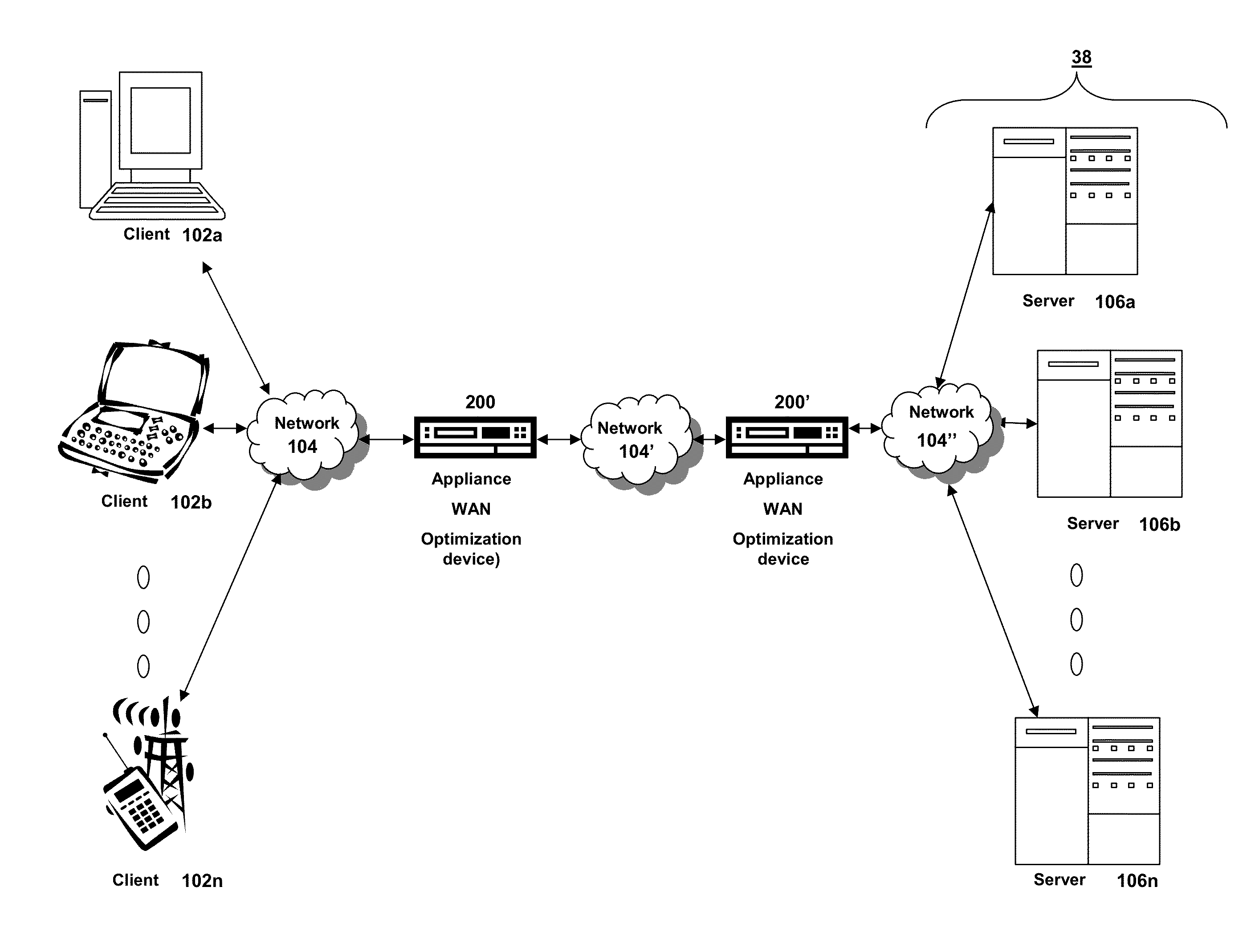

Systems and methods of the present solution provide a more optimal solution by dynamically and automatically reacting to changing network workload. A system that starts slowly, either by just examining traffic passively or by doing sub-optimal acceleration, can learn over time how many peer WAN optimizers are being serviced by an appliance, how much traffic is coming from each peer WAN optimizer, and the type of traffic being seen. Knowledge from this learning can serve to provide a better or improved baseline for the configuration of an appliance. In some embodiments, based on resources (e.g., CPU, Memory, Disk), the system from this knowledge may determine how many WAN optimization instances should be used and of what size, and how the load should be distributed across the instances of the WAN optimizer.

Owner:CITRIX SYST INC
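One way to picture the sizing decision described in this abstract is the Python sketch below. It is a hypothetical heuristic, not the patented method: the instance count is derived from observed traffic and capped by CPU cores, and peers are placed greedily on the least-loaded instance. The function name, the per-instance capacity figure, and the peer names are all assumptions.

```python
import math
from typing import Dict, List

def plan_instances(peer_traffic_mbps: Dict[str, float],
                   cpu_cores: int,
                   capacity_mbps: float) -> List[List[str]]:
    """Choose an instance count from learned traffic, then spread peers over it."""
    total = sum(peer_traffic_mbps.values())
    # Enough instances to carry the observed load, but never more than the cores.
    n = max(1, min(cpu_cores, math.ceil(total / capacity_mbps)))
    loads = [0.0] * n
    groups: List[List[str]] = [[] for _ in range(n)]
    # Greedy placement: heaviest peer first, onto the least-loaded instance.
    for peer, mbps in sorted(peer_traffic_mbps.items(),
                             key=lambda kv: kv[1], reverse=True):
        i = loads.index(min(loads))
        groups[i].append(peer)
        loads[i] += mbps
    return groups

# Traffic learned during a passive observation phase (illustrative values).
observed = {"branch-a": 80.0, "branch-b": 50.0, "branch-c": 40.0, "branch-d": 10.0}
plan = plan_instances(observed, cpu_cores=8, capacity_mbps=100.0)
```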

Accelerating network performance by striping and parallelization of TCP connections

Inactive · US7286476B2 · Improve performance · Reduce need · Error prevention · Transmission systems · Network packet · Network communication

An architecture for optimizing network communications that utilizes a device positioned at two edges of a constrained Wide Area Network (WAN) link. The device intercepts outgoing network packets and reroutes them to a proxy application. The proxy application uses multiple, preferably persistent connections with a network accelerator device at the other end of the persistent connection. The proxy applications transmit the intercepted data. Packet mangling may involve spoofing the connection request at each end node; a proxy-to-proxy communication protocol specifies a way to forward an original address, port, and original transport protocol information end to end. The packet mangling and proxy-to-proxy communication protocol assure network layer transparency.

Owner:ITWORX EGYPT +1

Media Data Processing Using Distinct Elements for Streaming and Control Processes

Inactive · US20080285571A1 · Improve handling · Increase data rate · Data switching by path configuration · Television systems · Time Protocol · Data store

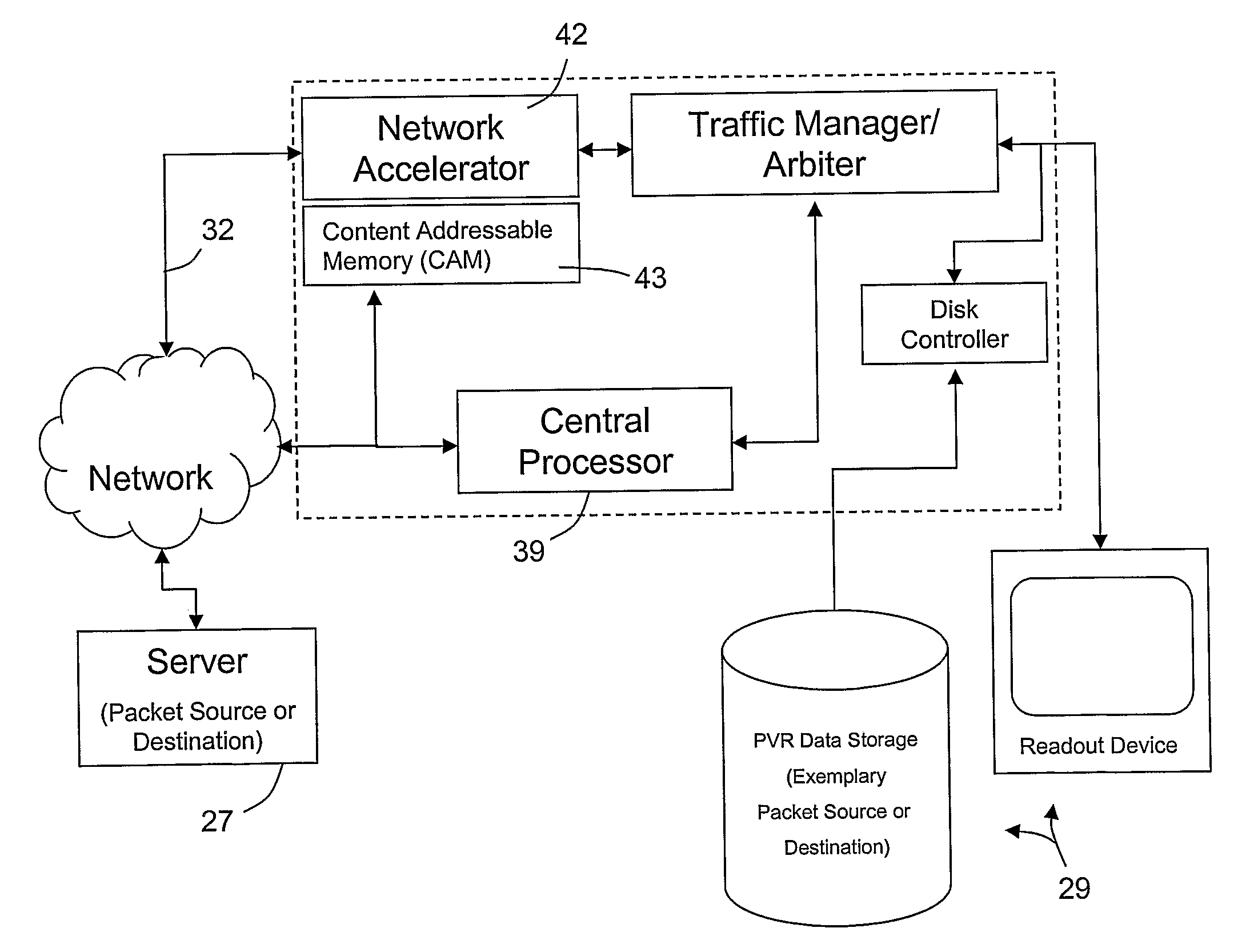

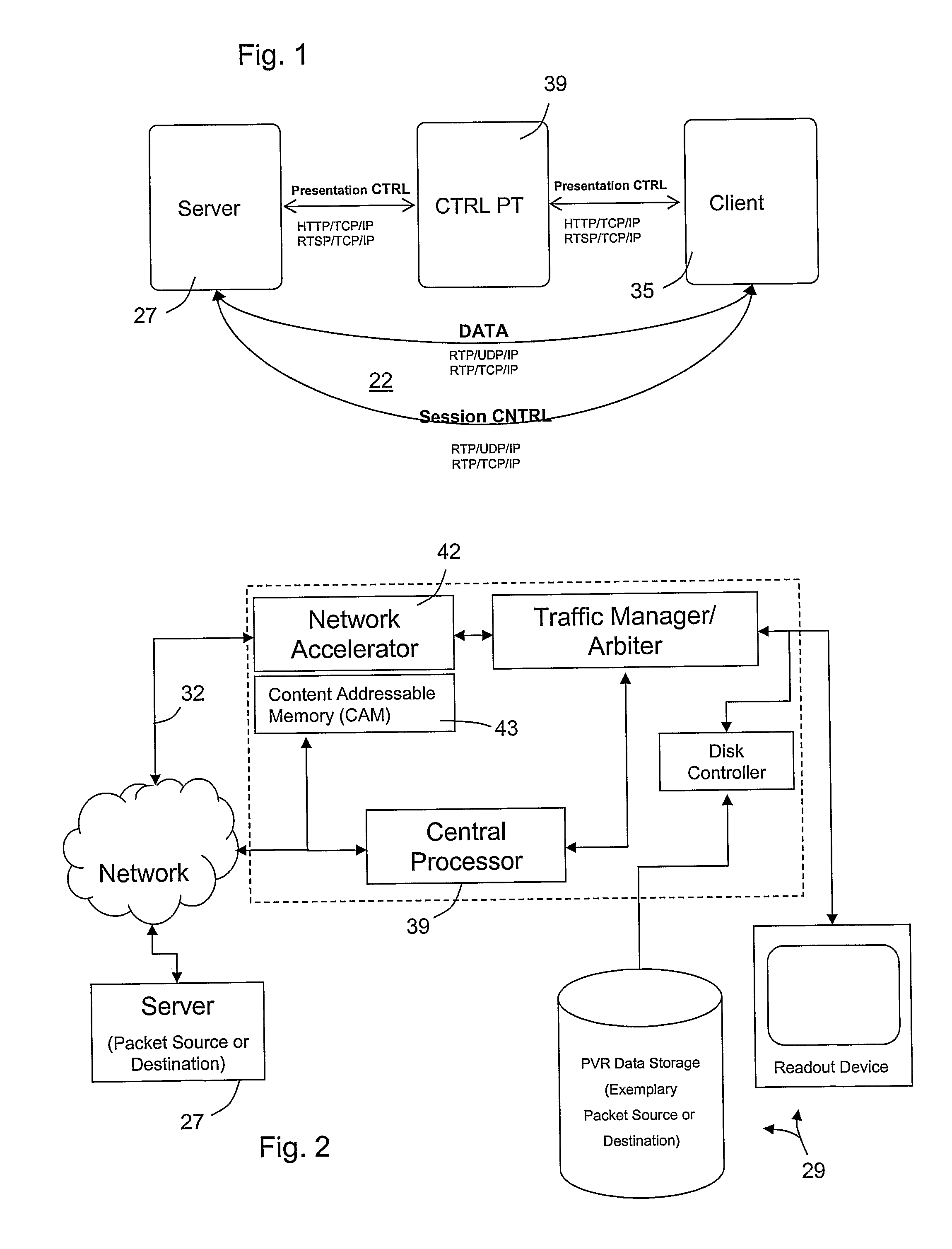

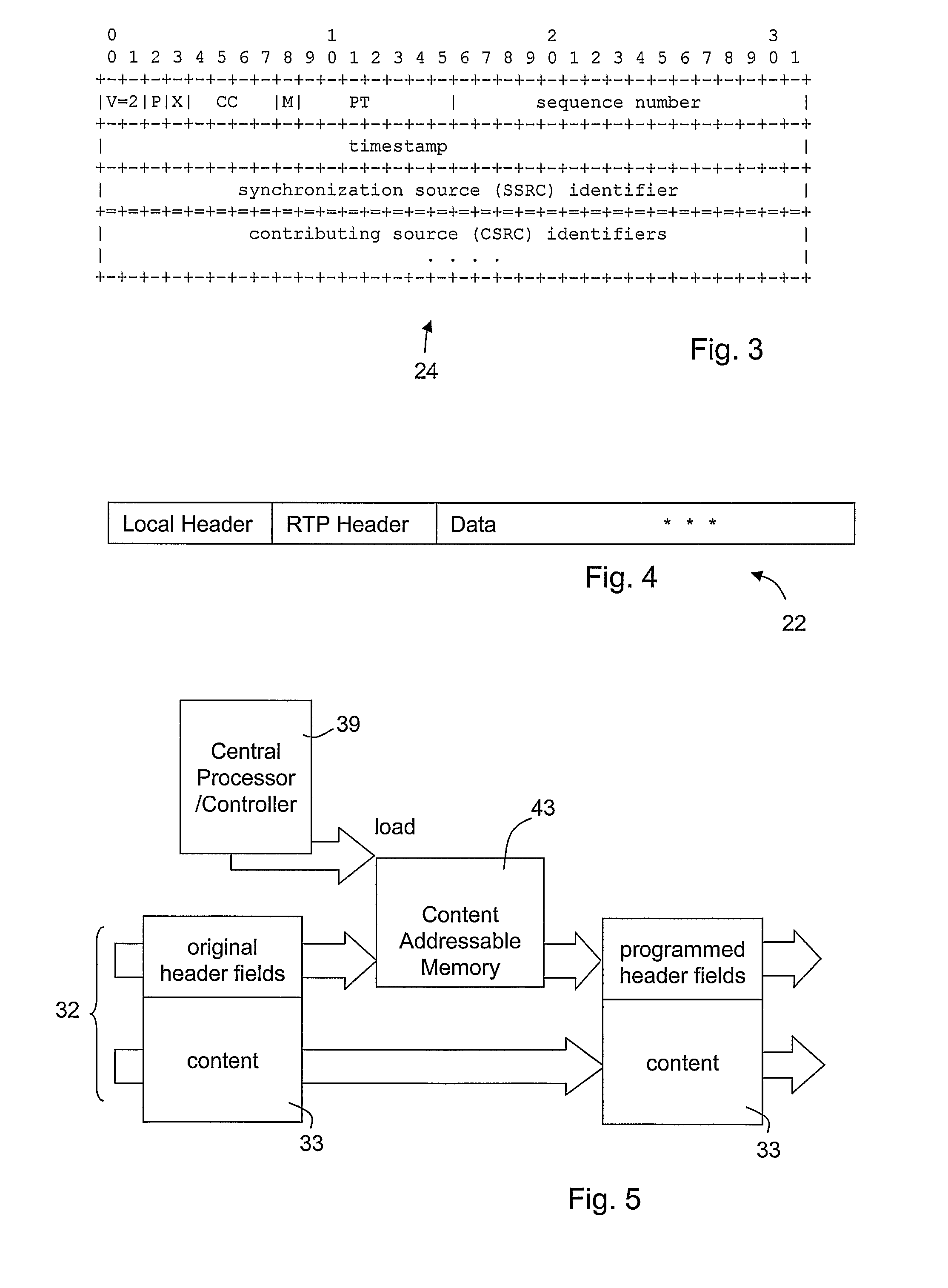

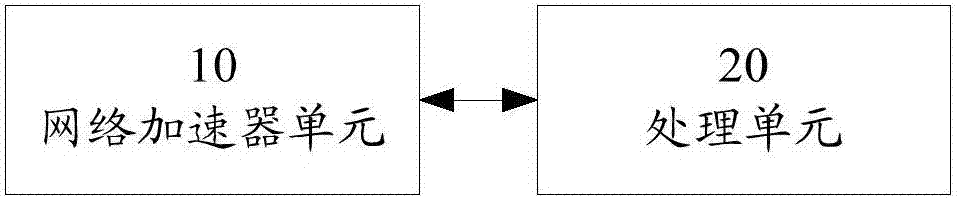

A hardware accelerated streaming arrangement, especially for RTP real time protocol streaming, directs data packets for one or more streams between sources and destinations, using addressing and handling criteria that are determined in part from control packets and are used to alter or supplement headers associated with the stream content packets. A programmed control processor responds to control packets in RTCP or RTSP format, whereby the handling or direction of RTP packets can be changed. The control processor stores data for the new addressing and handling criteria in a memory accessible to a hardware accelerator, arranged to store the criteria for multiple ongoing streams at the same time. When a content packet is received, its addressing and handling criteria are found in the memory and applied, by action of the network accelerator, without the need for computation by the control processor. The network accelerator operates repetitively to continue to apply the criteria to the packets for a given stream as the stream continues, and can operate as a high data rate pipeline. The processor can be programmed to revise the criteria in a versatile manner, including using extensive computation if necessary, because the processor is relieved of repetitive processing duties accomplished by the network accelerator.

Owner:AGERE SYST INC
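The division of labour between the control processor and the accelerator can be modelled in software as a shared lookup table. In the Python sketch below, the class `StreamTable`, its method names, and the use of an integer stream identifier as the key are assumptions made for illustration; the abstract does not specify the table layout.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

@dataclass
class Criteria:
    dest: Tuple[str, int]  # (address, port) that the stream's packets go to

class StreamTable:
    """Stands in for the memory shared by the control processor and accelerator."""

    def __init__(self) -> None:
        self._rows: Dict[int, Criteria] = {}

    def on_control(self, stream_id: int, dest: Tuple[str, int]) -> None:
        """Slow path: the control processor records new handling criteria."""
        self._rows[stream_id] = Criteria(dest)

    def on_content(self, stream_id: int,
                   payload: bytes) -> Optional[Tuple[Tuple[str, int], bytes]]:
        """Fast path: a table lookup only, with no control-processor work."""
        row = self._rows.get(stream_id)
        if row is None:
            return None  # unknown stream: nothing to apply yet
        return row.dest, payload

table = StreamTable()
table.on_control(stream_id=0x1234, dest=("192.0.2.10", 5004))
first = table.on_content(0x1234, b"frame-1")
table.on_control(stream_id=0x1234, dest=("192.0.2.20", 5004))  # redirect
second = table.on_content(0x1234, b"frame-2")
```

Content packets that arrive after the second control message follow the new destination without any per-packet decision by the control side.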

Accelerator system and method

Inactive · US6952409B2 · Improve throughput · Faster and less-expensive to construct · Time-division multiplex · Radio/inductive link selection arrangements · Transmission protocol · Computer hardware

A network accelerator for TCP / IP includes programmable logic for performing transparent protocol translation of streamed protocols for audio / video at network signaling rates. The programmable logic is configured in a parallel pipelined architecture controlled by state machines and implements processing for predictable patterns of the majority of transmissions which are stored in a content addressable memory, and are simultaneously stored in a dual port, dual bank application memory. The invention allows raw Internet protocol communications by cell phones, and cell phone to Internet gateway high capacity transfer that scales independent of a translation application, by processing packet headers in parallel and during memory transfers without the necessity of conventional store and forward techniques.

Owner:JOLITZ LYNNE G

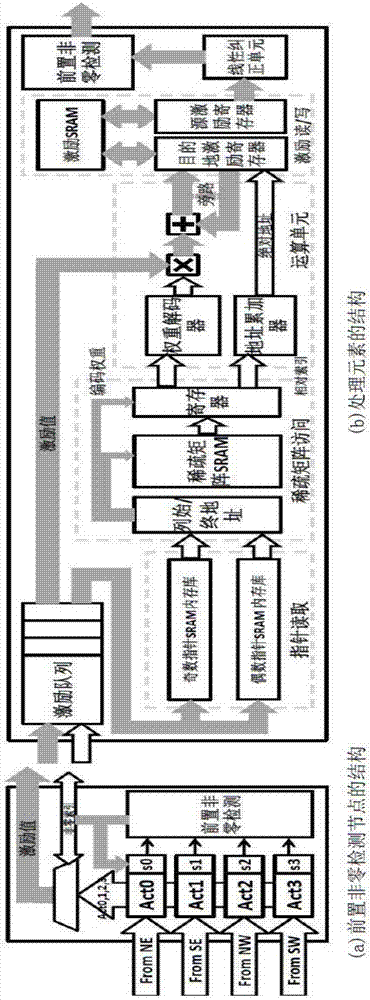

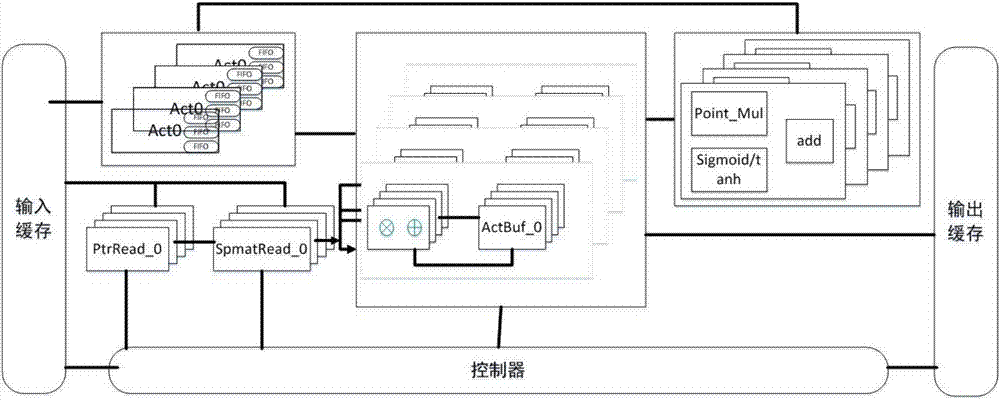

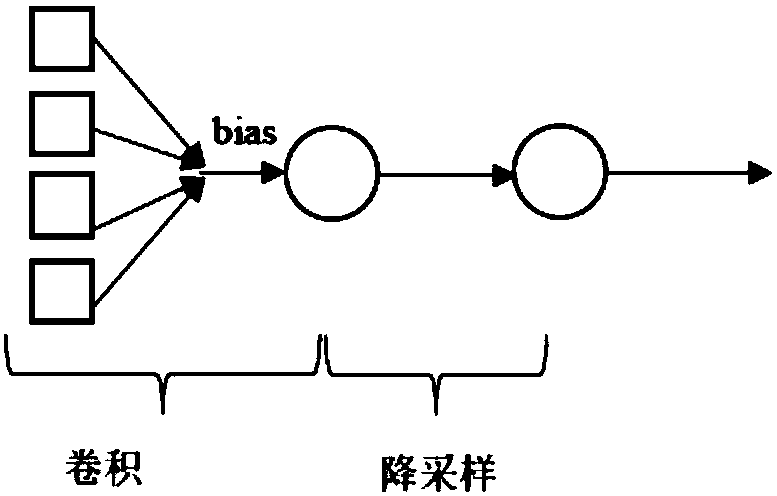

Apparatus and method for realizing sparse neural network

Pending · CN107239823A · Implement data caching · Improve computing efficiency · Neural architectures · Physical realisation · Computer module · Web accelerator

The invention provides an apparatus and method for realizing sparse neural network. The apparatus herein includes an input receiving unit, a sparse matrix reading unit, an M*N number of computing units, a control unit and an output buffer unit. The invention also provides a parallel computing method for the apparatus. The parallel computing method can be used for sharing in the dimension of input vector and for sharing in the dimension of weight matrix of the sparse neural network. According to the invention, the apparatus and the method substantially reduce memory access, reduce the number of on-chip buffering, effectively balance the relationship among on-chip buffering, I / O access and computing, and increase the performances of computing modules.

Owner:XILINX INC
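To show why a sparse weight matrix reduces memory access, the Python sketch below stores only the non-zero weights and multiplies them with an input vector. The compressed sparse row (CSR) layout is an assumption chosen for illustration; the abstract does not state which sparse format the apparatus reads.

```python
def to_csr(dense):
    """Keep only non-zero weights, with their column indices and row boundaries."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, w in enumerate(row):
            if w != 0:
                values.append(w)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr

def csr_matvec(values, col_idx, row_ptr, x):
    """Multiply the sparse matrix by x, touching non-zero weights only."""
    y = []
    for r in range(len(row_ptr) - 1):
        acc = 0
        for k in range(row_ptr[r], row_ptr[r + 1]):
            acc += values[k] * x[col_idx[k]]
        y.append(acc)
    return y

weights = [[0, 2, 0],
           [1, 0, 3],
           [0, 0, 0]]
values, col_idx, row_ptr = to_csr(weights)
output = csr_matvec(values, col_idx, row_ptr, [1, 2, 3])
```

Each output row is independent of the others, which is what allows rows to be distributed over an array of computing units.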

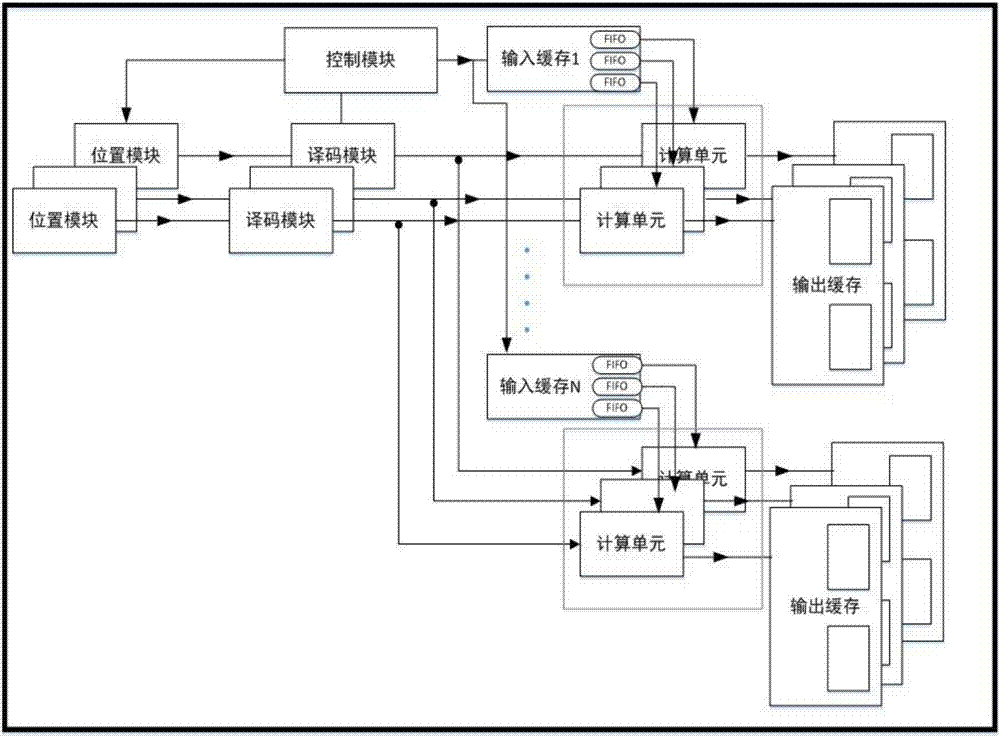

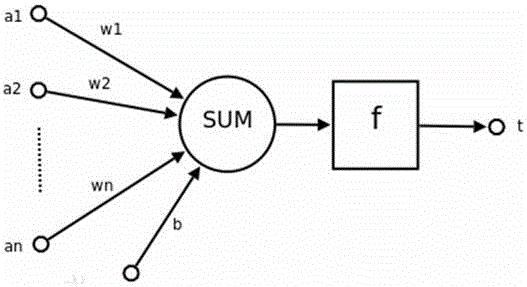

Implementation methods of neural network accelerator and neural network model

Inactive · CN106485317A · Reduce consumption · Meet storage capacity needs · Physical realisation · Electricity · Activation function

The invention relates to a neural network data processing method, and particularly relates to implementation methods of a neural network accelerator and a neural network model. The implementation method of a neural network accelerator is characterized in that the neural network accelerator comprises a nonvolatile memory, and the nonvolatile memory comprises a data storage array prepared in a back-end manufacturing process; and in a front-end manufacturing process for preparing the data storage array, a neural network accelerator circuit is prepared on a silicon substrate under the data storage array. According to the implementation method of a neural network model, the neural network model comprises an input signal, a connection weight signal, a bias, an activation function, an operation function and an output signal, wherein the activation function and the operation function are implemented through the neural network accelerator circuit, and the input signal, the connection weight, the bias and the output signal are stored in the data storage array. As the neural network accelerator circuit is implemented directly under the data storage array, the data storage bandwidth is not restricted, and data is still not lost after power failure.

Owner:SHANGHAI XINCHU INTEGRATED CIRCUIT

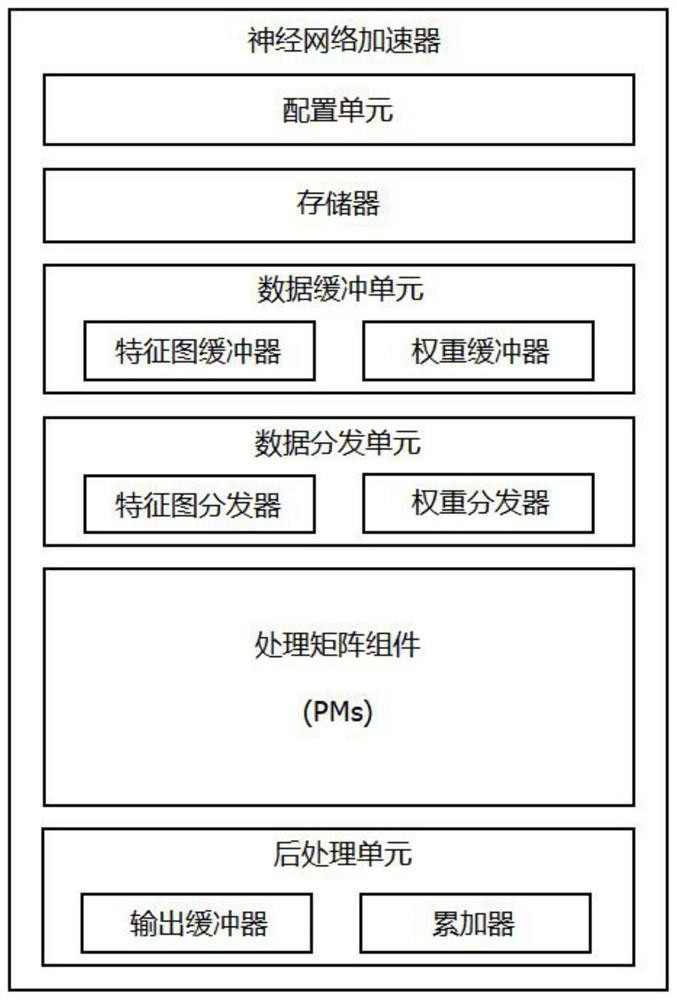

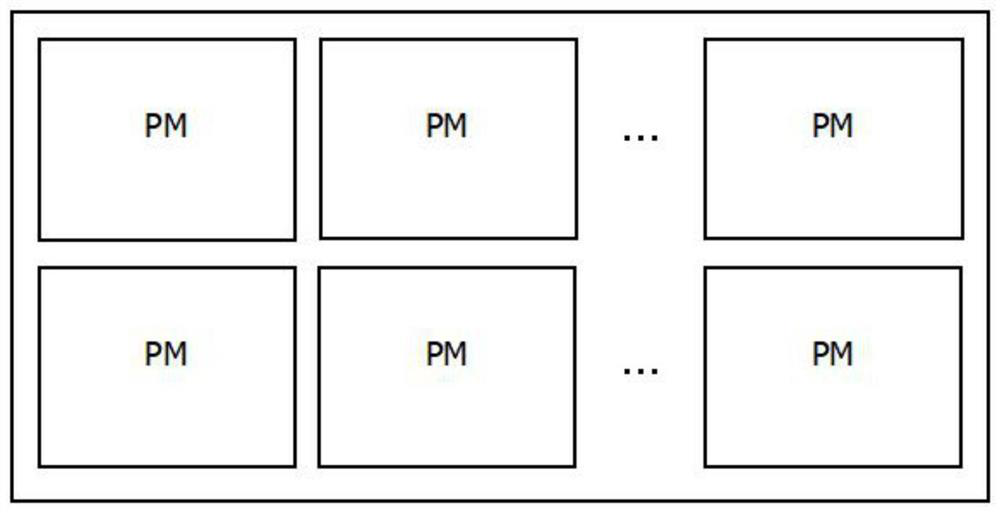

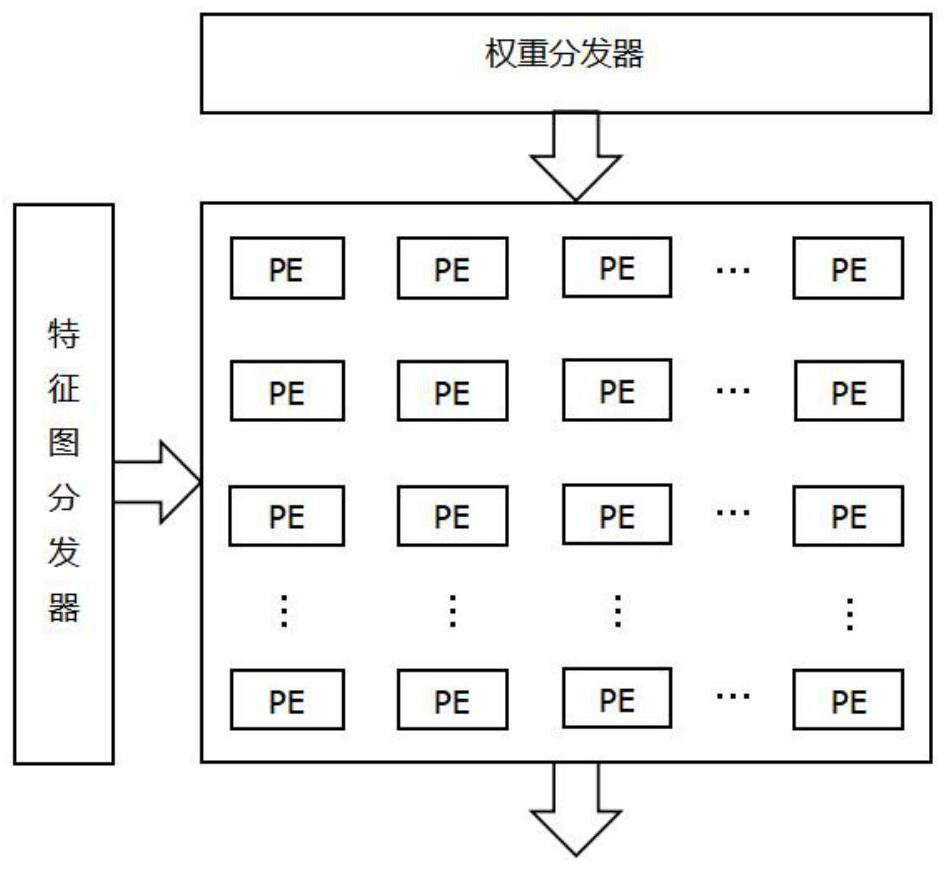

Neural network accelerator suitable for edge equipment and neural network acceleration calculation method

Active · CN111667051A · Realize multiplexing · Improve reuse rate · Digital data processing details · Neural architectures · Multiplexing · Processing element

The invention discloses a neural network accelerator suitable for edge equipment and a neural network acceleration calculation method, and relates to the technical field of neural networks. The network accelerator comprises a configuration unit, a data buffer unit, a processing matrix component (PMs) and a post-processing unit, and a main controller writes feature parameters of different types of network layers into a register of the configuration unit to control the mapping of different network layer operation logics to the processing matrix hardware, so as to realize the multiplexing of the processing matrix component, i.e., the operation acceleration of different types of network layers in the neural network is realized by using one hardware circuit without additional hardware resources; and the different types of network layers comprise a standard convolution layer and a pooling network layer. The multiplexing accelerator provided by the invention not only ensures the realization of the same function, but also has the advantages of less hardware resource consumption, higher hardware multiplexing rate, lower power consumption, high concurrency, high multiplexing characteristic and strong structural expansibility.

Owner:上海赛昉科技有限公司

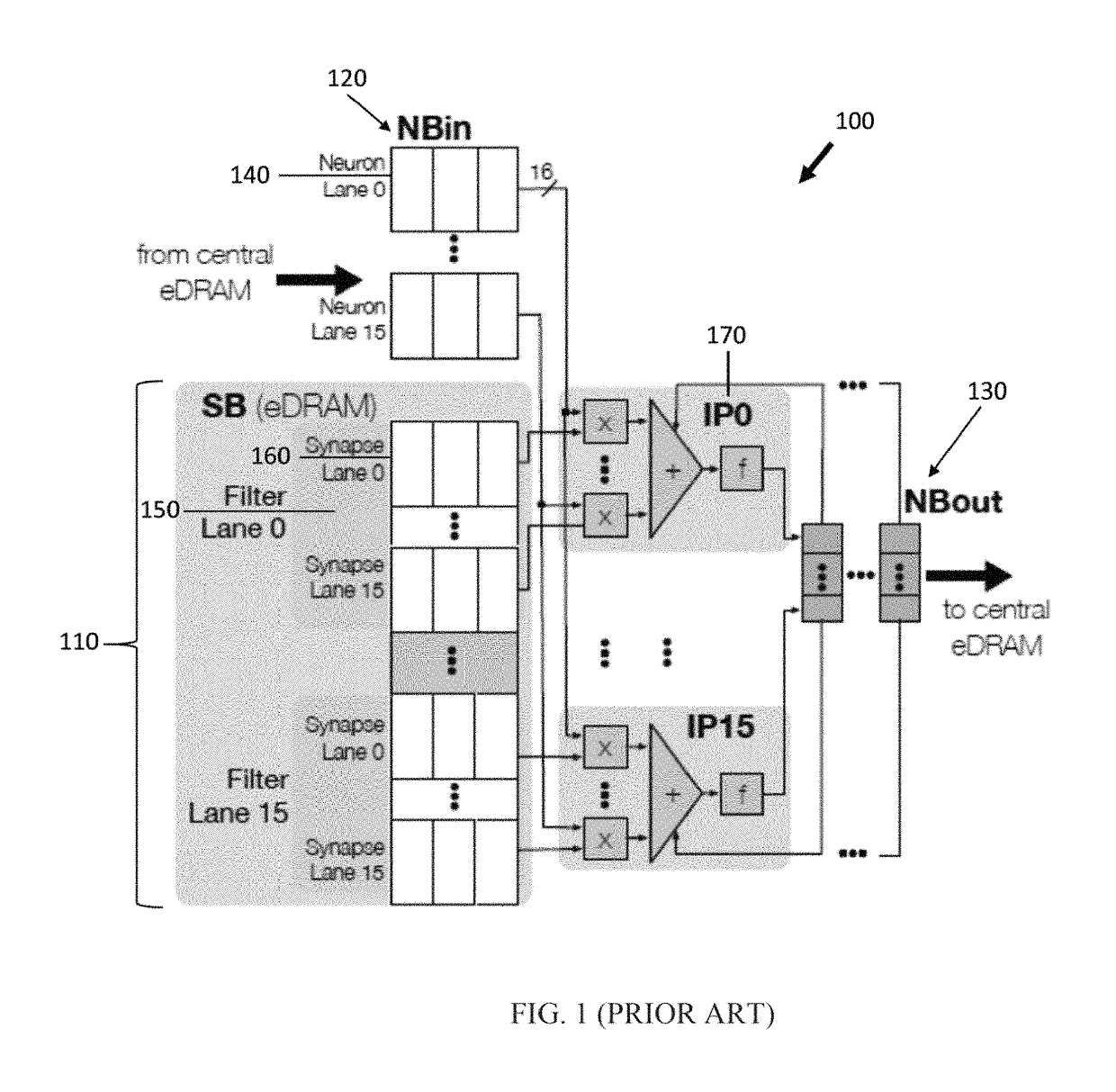

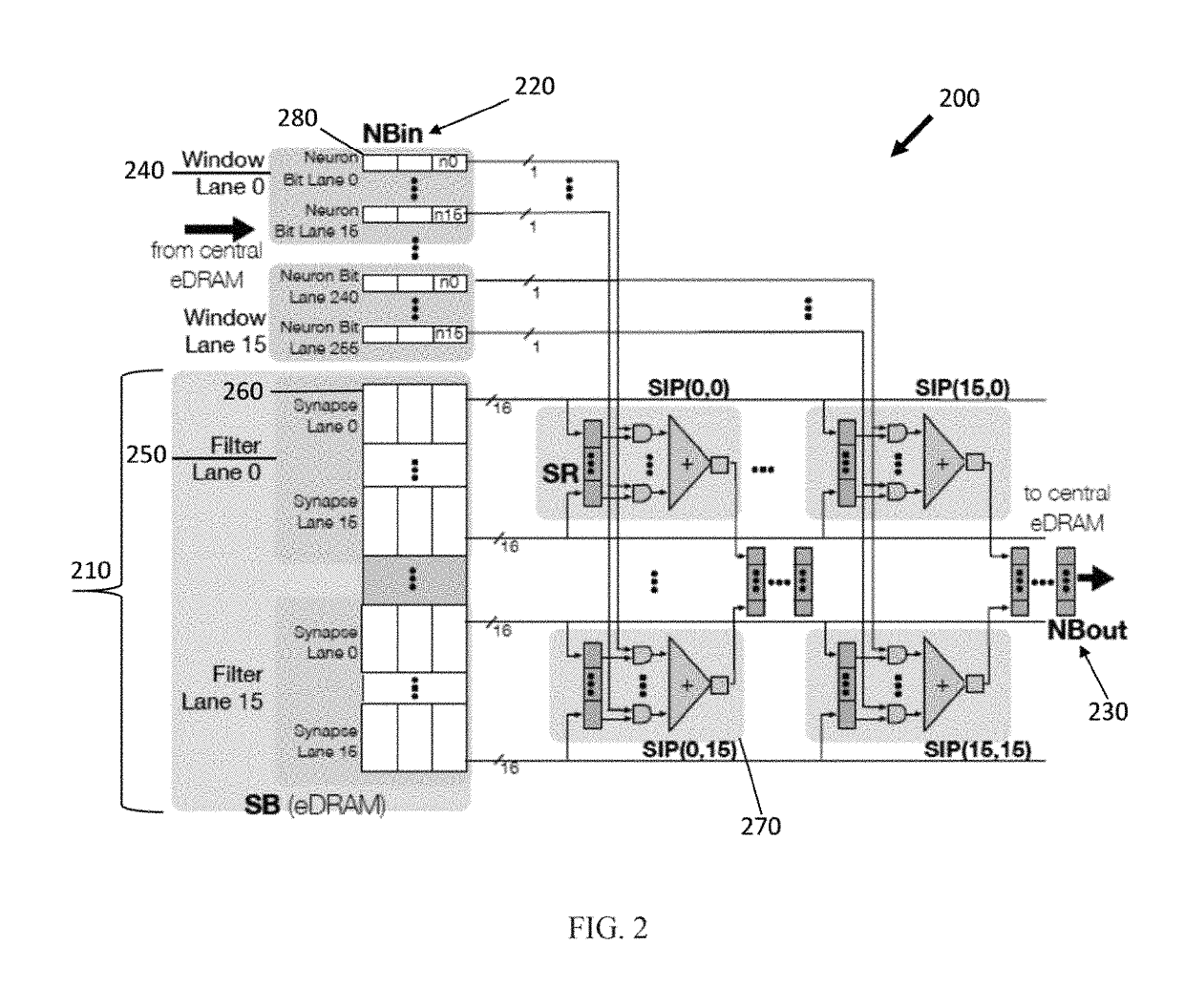

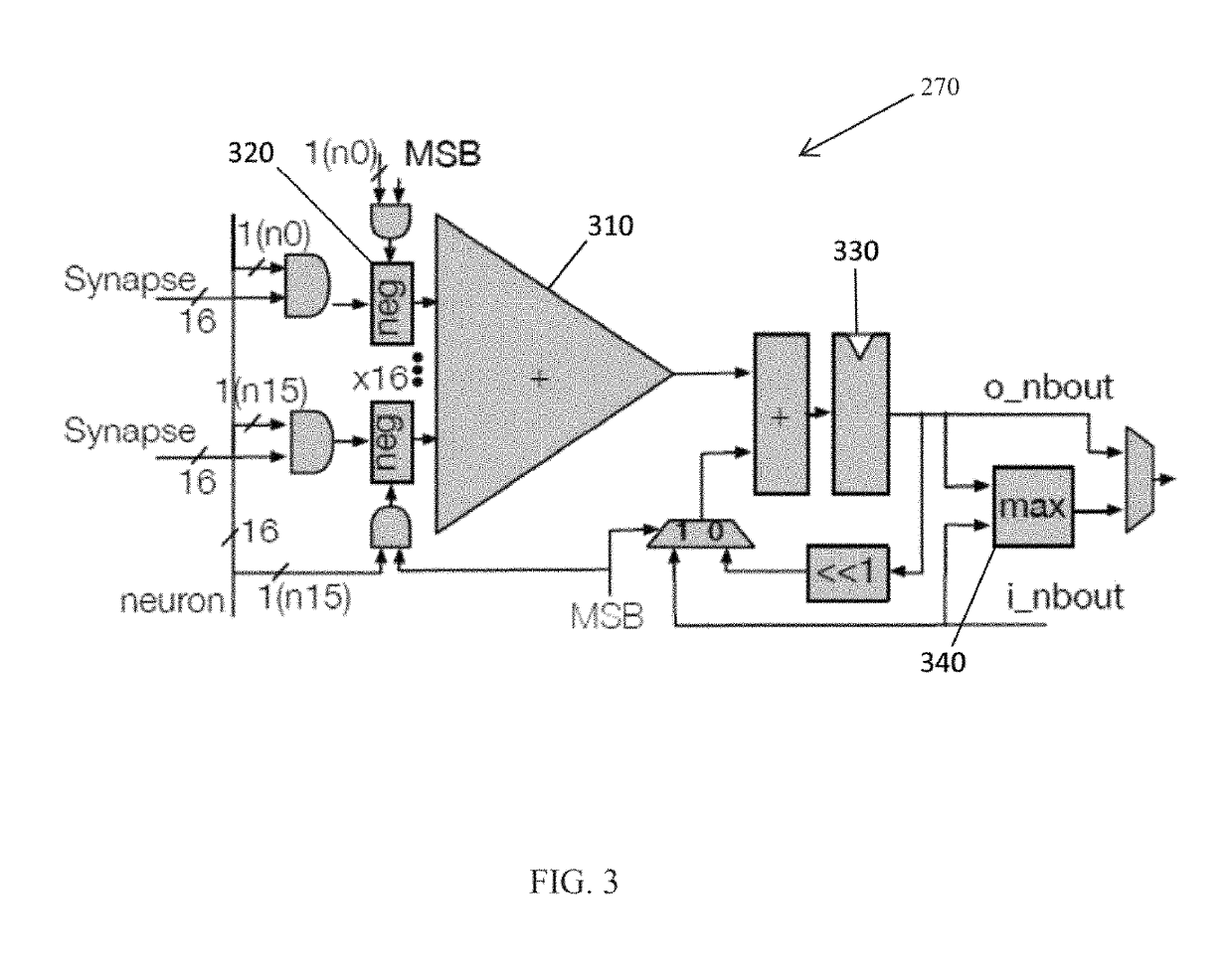

Accelerator for deep neural networks

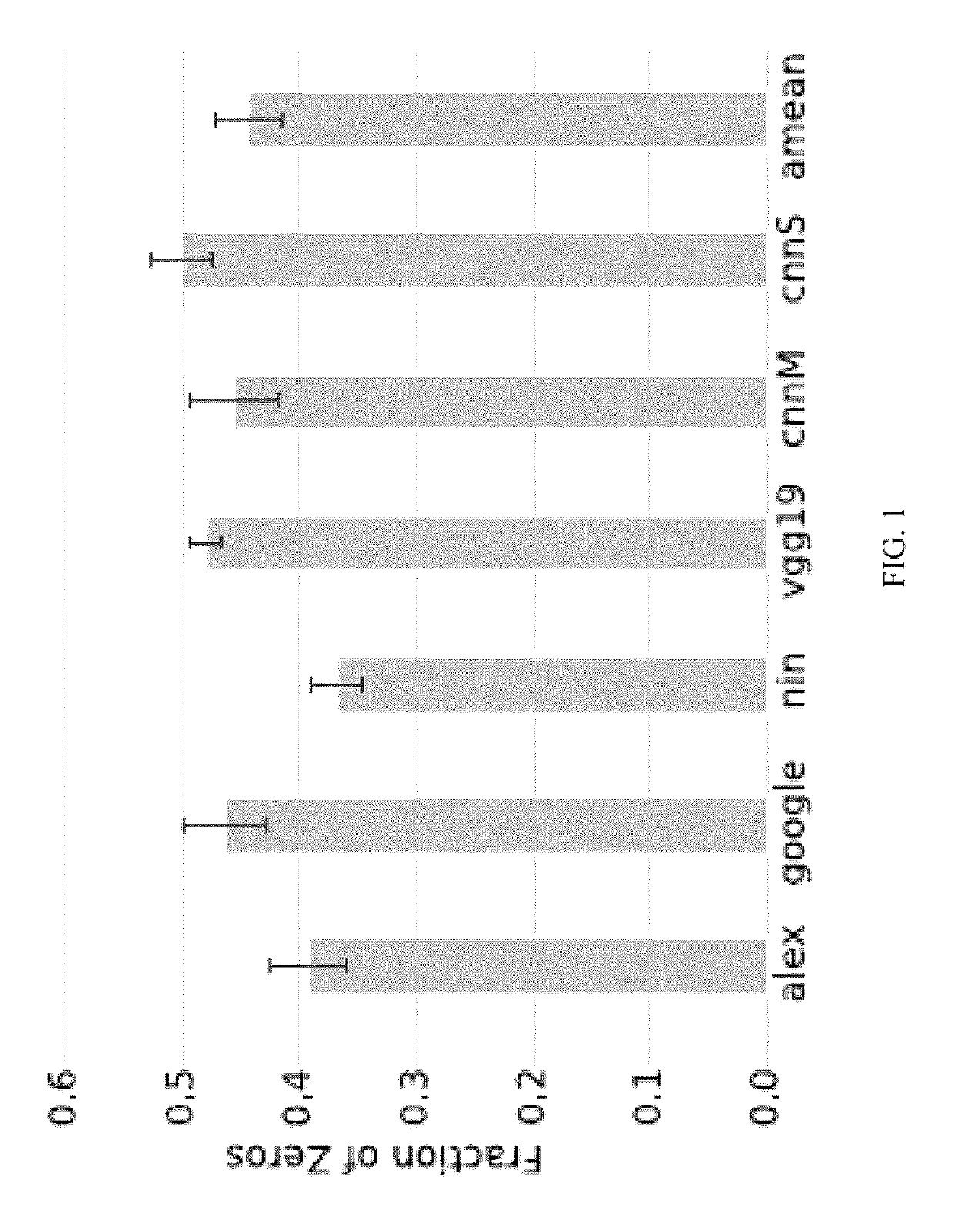

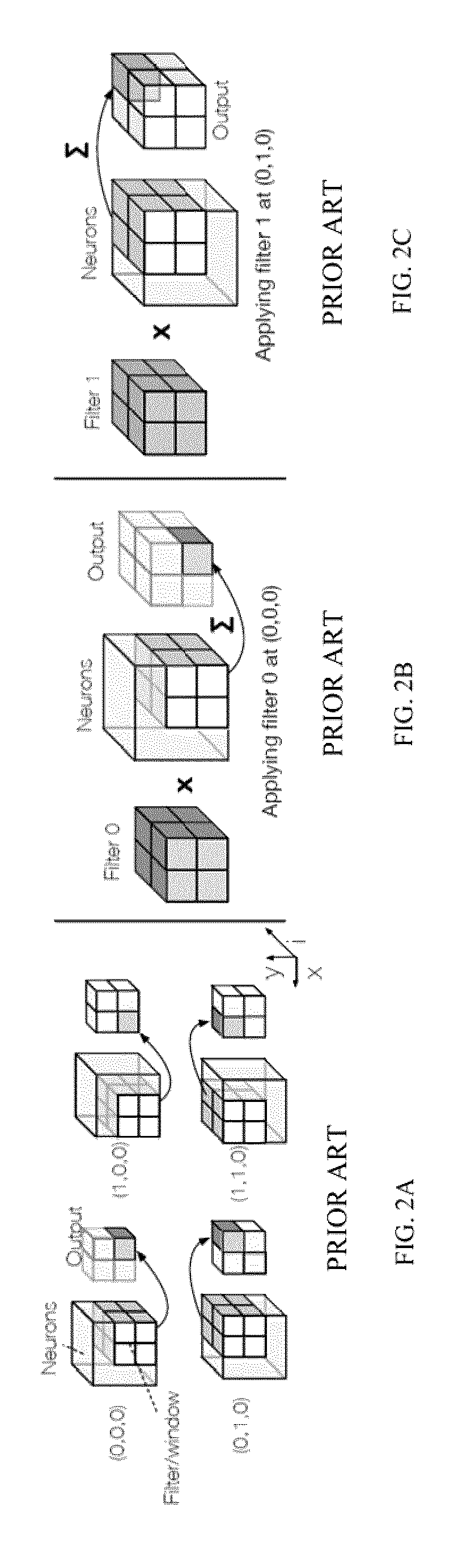

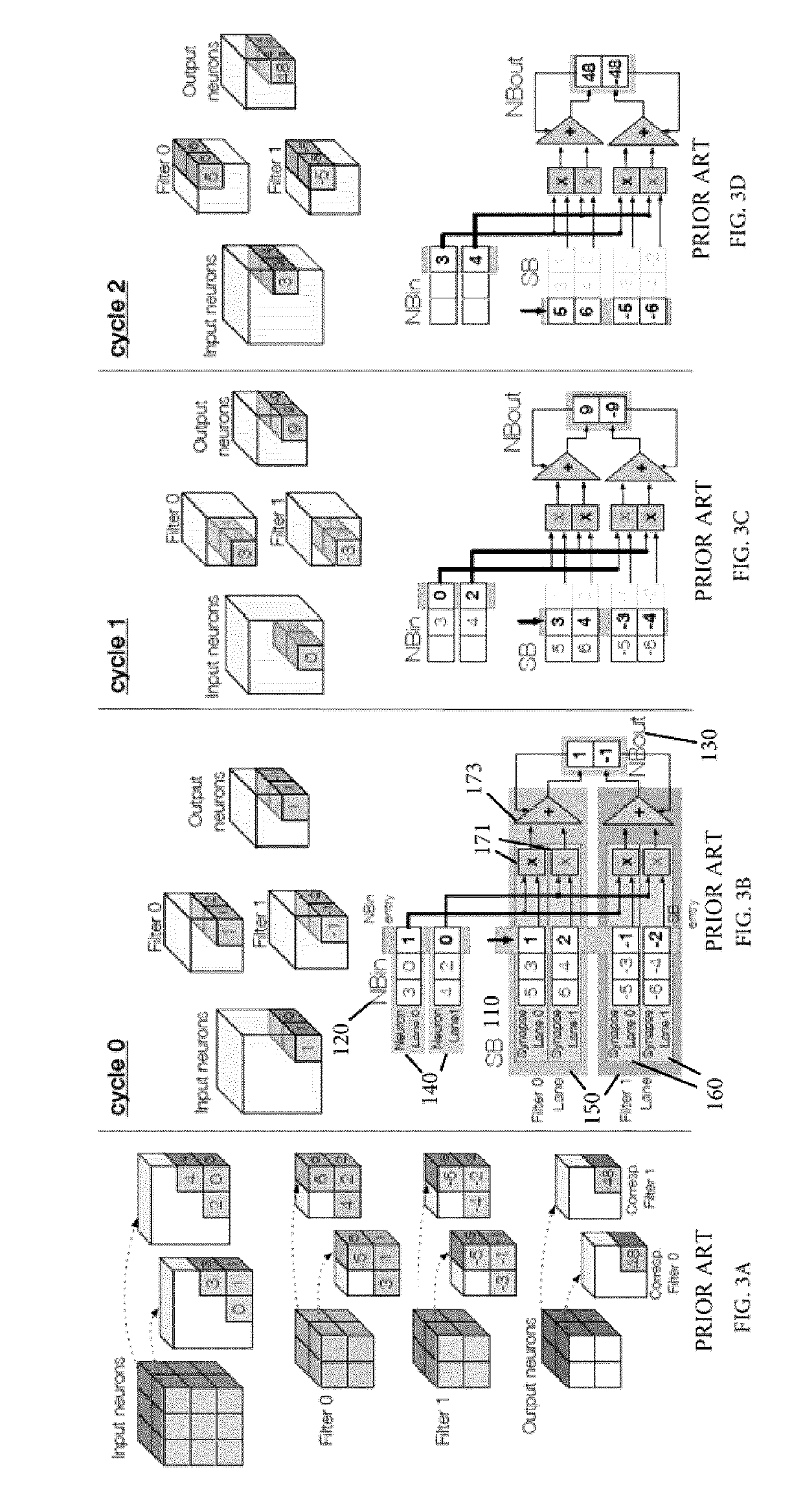

Active · US20190205740A1 · Reduce invalid operations · High energy · Neural architectures · Physical realisation · Synapse · Biological activation

Described is a system, integrated circuit and method for reducing ineffectual computations in the processing of layers in a neural network. One or more tiles perform computations where each tile receives input neurons, offsets and synapses, and where each input neuron has an associated offset. Each tile generates output neurons, and there is also an activation memory for storing neurons in communication with the tiles via a dispatcher and an encoder. The dispatcher reads neurons from the activation memory and communicates the neurons to the tiles and reads synapses from a memory and communicates the synapses to the tiles. The encoder receives the output neurons from the tiles, encodes them and communicates the output neurons to the activation memory. The offsets are processed by the tiles in order to perform computations only on non-zero neurons. Optionally, synapses may be similarly processed to skip ineffectual operations.

Owner:SAMSUNG ELECTRONICS CO LTD
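The role of the offsets can be illustrated with a small Python sketch: the encoder keeps only non-zero neurons together with their positions, and the tile then multiplies just those entries. The function names and the list-of-pairs encoding are assumptions for this example; the actual hardware format is not given in the abstract.

```python
def encode(neurons):
    """Encoder: keep each non-zero neuron with its offset in the original vector."""
    return [(value, offset) for offset, value in enumerate(neurons) if value != 0]

def tile_dot(encoded, synapses):
    """Tile: use the offset to pick the matching synapse, skipping zero neurons."""
    return sum(value * synapses[offset] for value, offset in encoded)

neurons = [0, 3, 0, 0, 2]      # for example, activations after a ReLU layer
synapses = [5, 1, 7, 9, 4]
encoded = encode(neurons)
result = tile_dot(encoded, synapses)
dense = sum(n * s for n, s in zip(neurons, synapses))  # reference computation
```

Here two multiplications replace five while producing the same sum, because products with a zero neuron contribute nothing.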

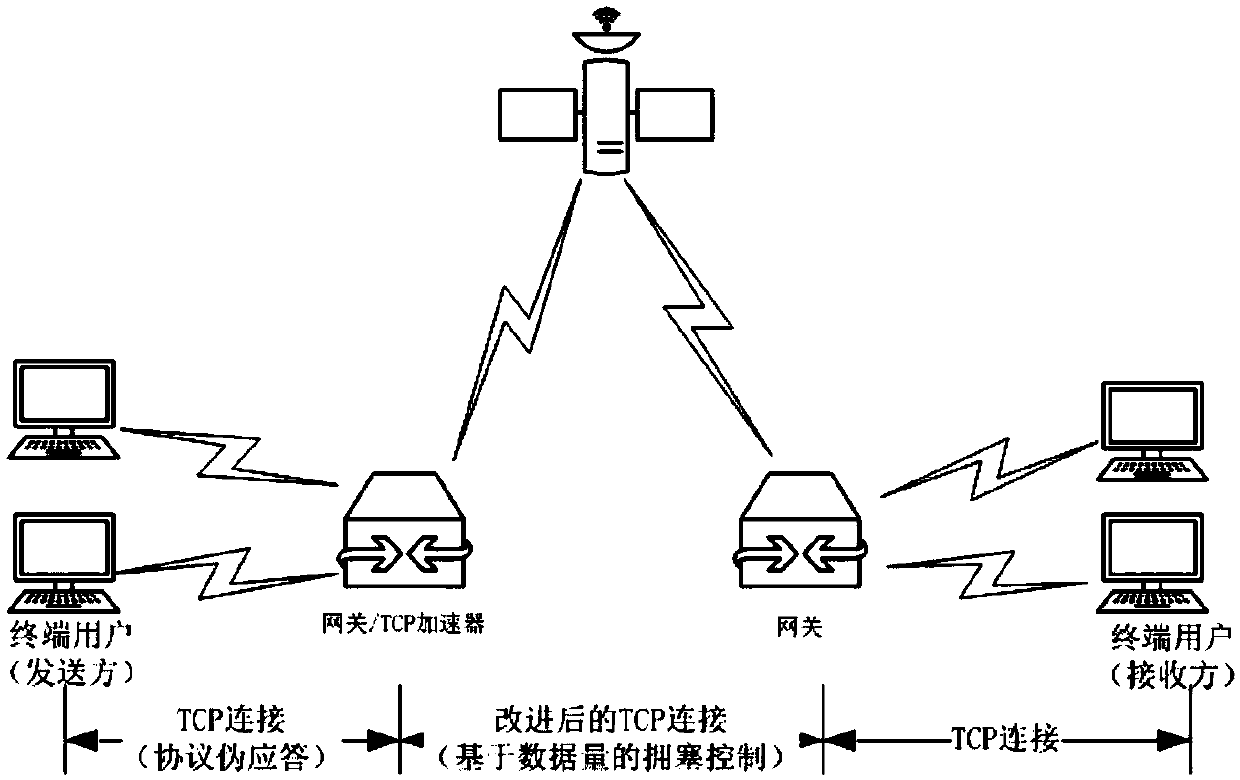

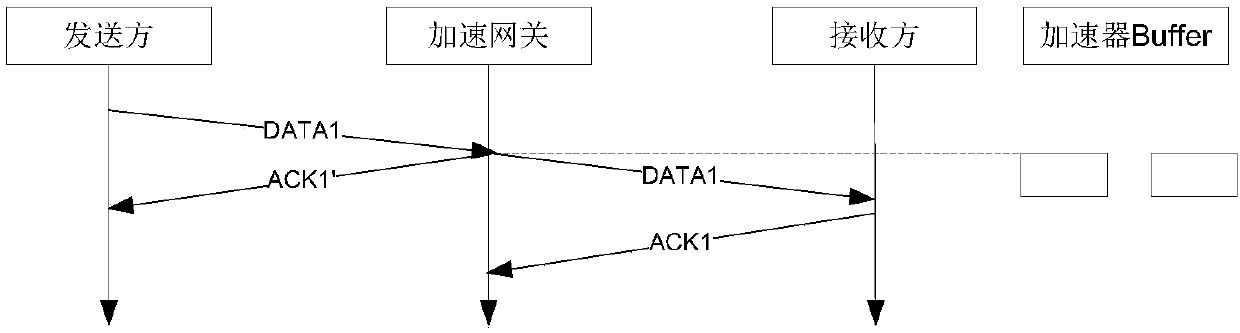

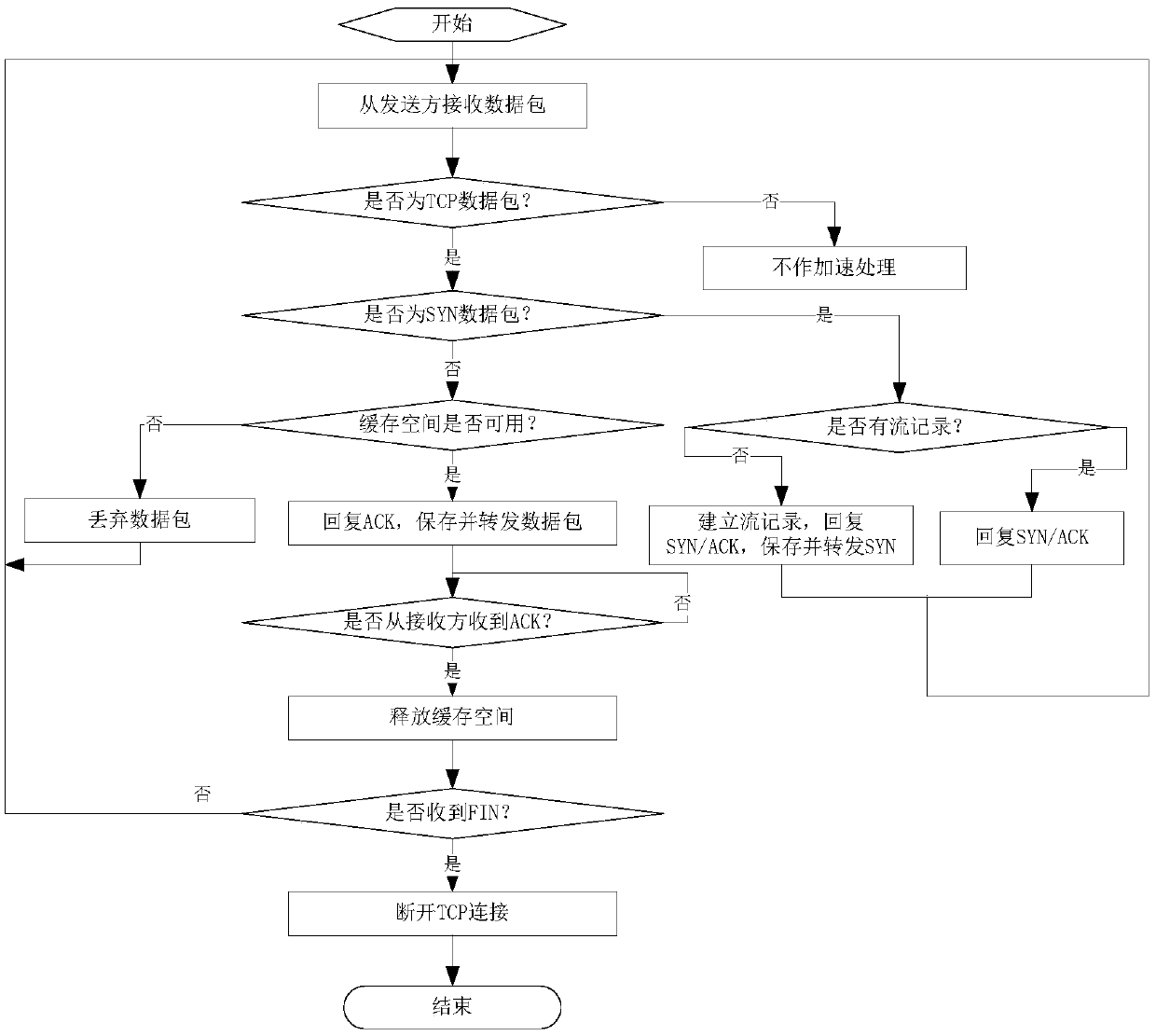

TCP acceleration method suitable for satellite link

Active · CN109639340A · Improve throughput · Radio transmission · Data switching networks · Computer terminal · Protocol for Carrying Authentication for Network Access

The invention discloses a TCP acceleration method suitable for a satellite link. The method comprises the following steps: TCP communication of a satellite network is divided into three sections: a sender terminal user and a satellite gateway, a satellite gateway and a satellite gateway, a satellite gateway and a receiver terminal user; and ground communication between the terminal user and the satellite gateway is carried out by adopting a TCP, the satellite gateway at a sending end is provided with a network accelerator, and the network accelerator realizes bidirectional acceleration. On the one hand, TCP data sent to the terminal user by the satellite gateway responds to TCP acceleration transmission in a protocol pseudo-response mode; on the other hand, an improved TCP is adopted for communication between the gateway and the gateway; the improved TCP is combined with traditional TCP congestion control and data amount-based congestion control modes to carry out congestion judgment on the network; and the problem of congestion misjudgment caused by long delay and high error rate of the link is solved by combining protocol pseudo-response and data amount-based congestion control mechanisms, so that the using efficiency of the TCP is improved.

Owner:CHENGDUSCEON TECH

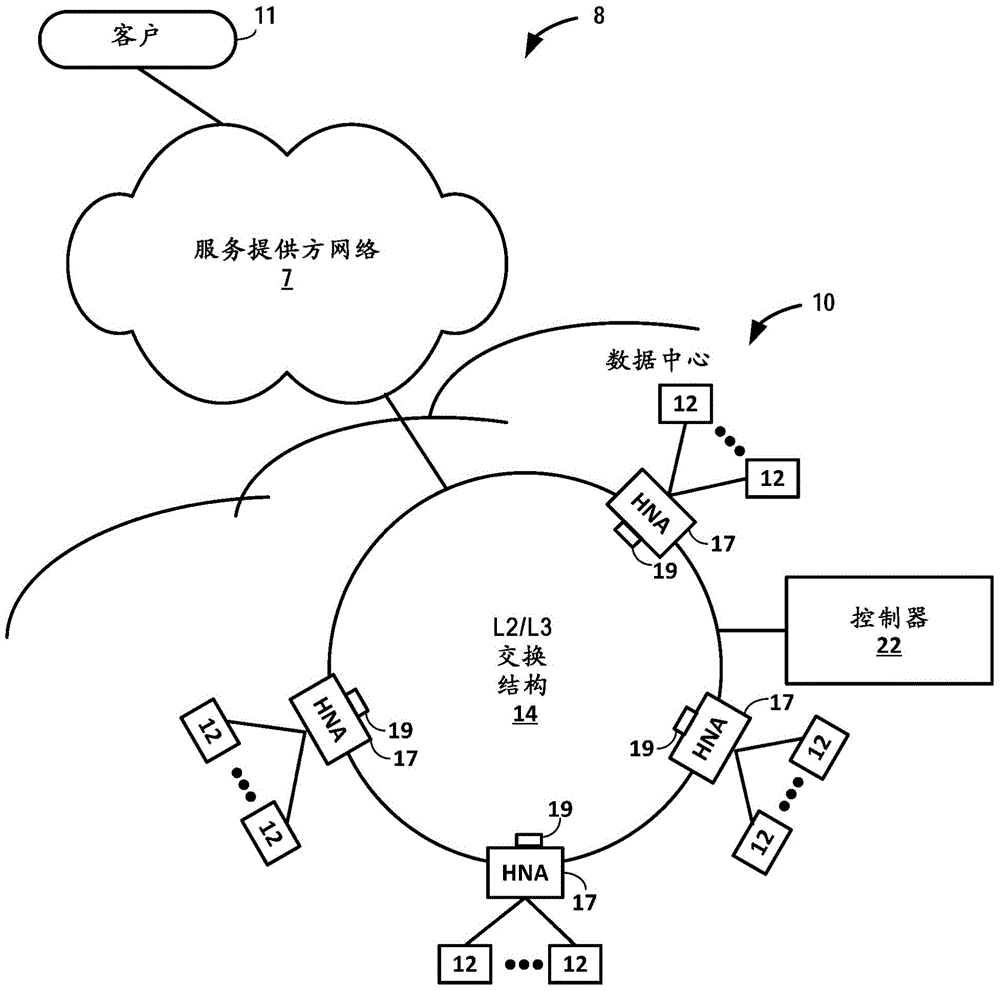

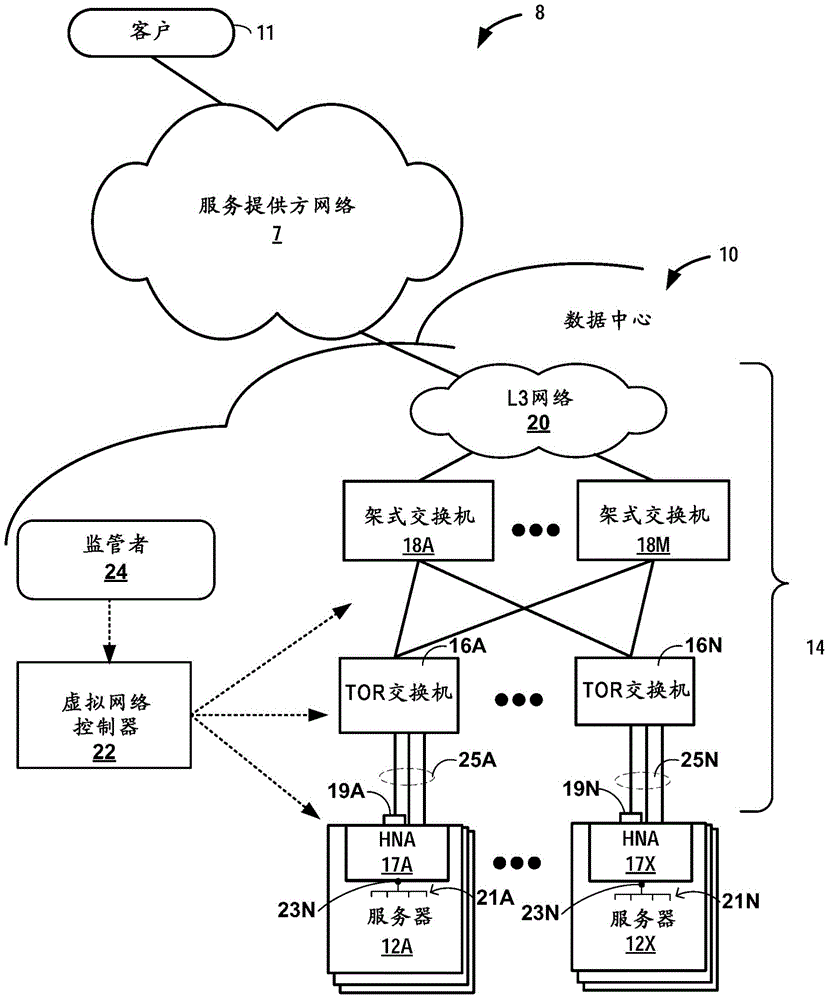

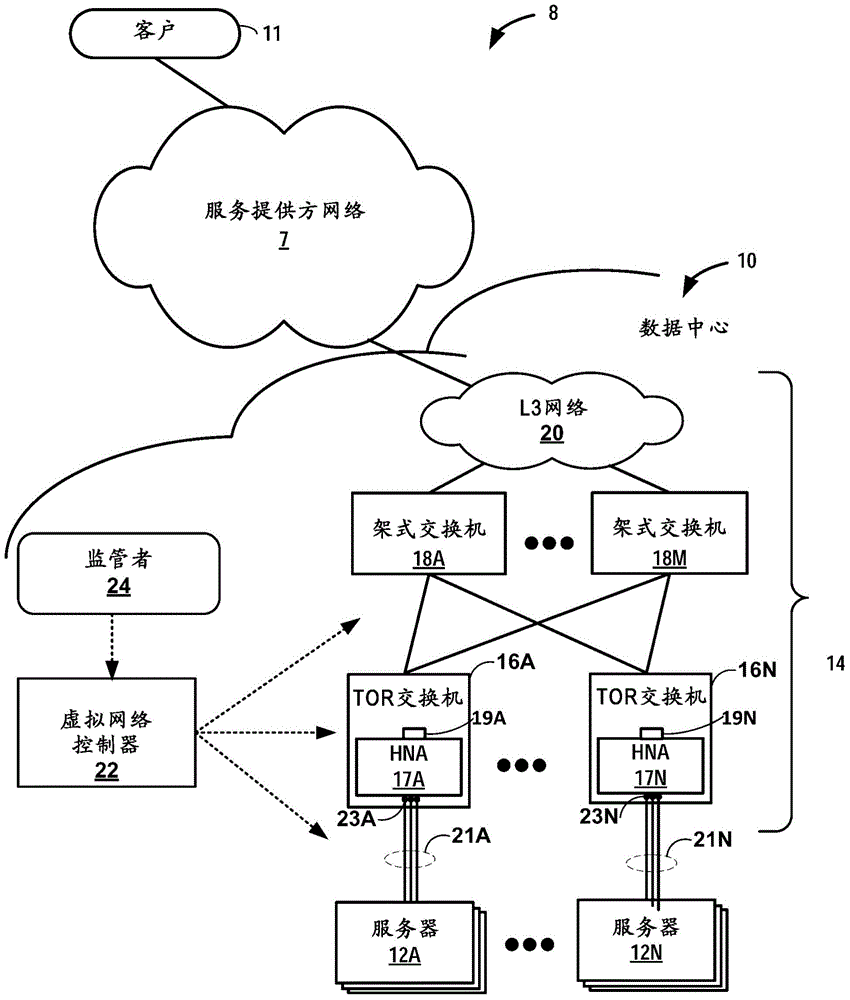

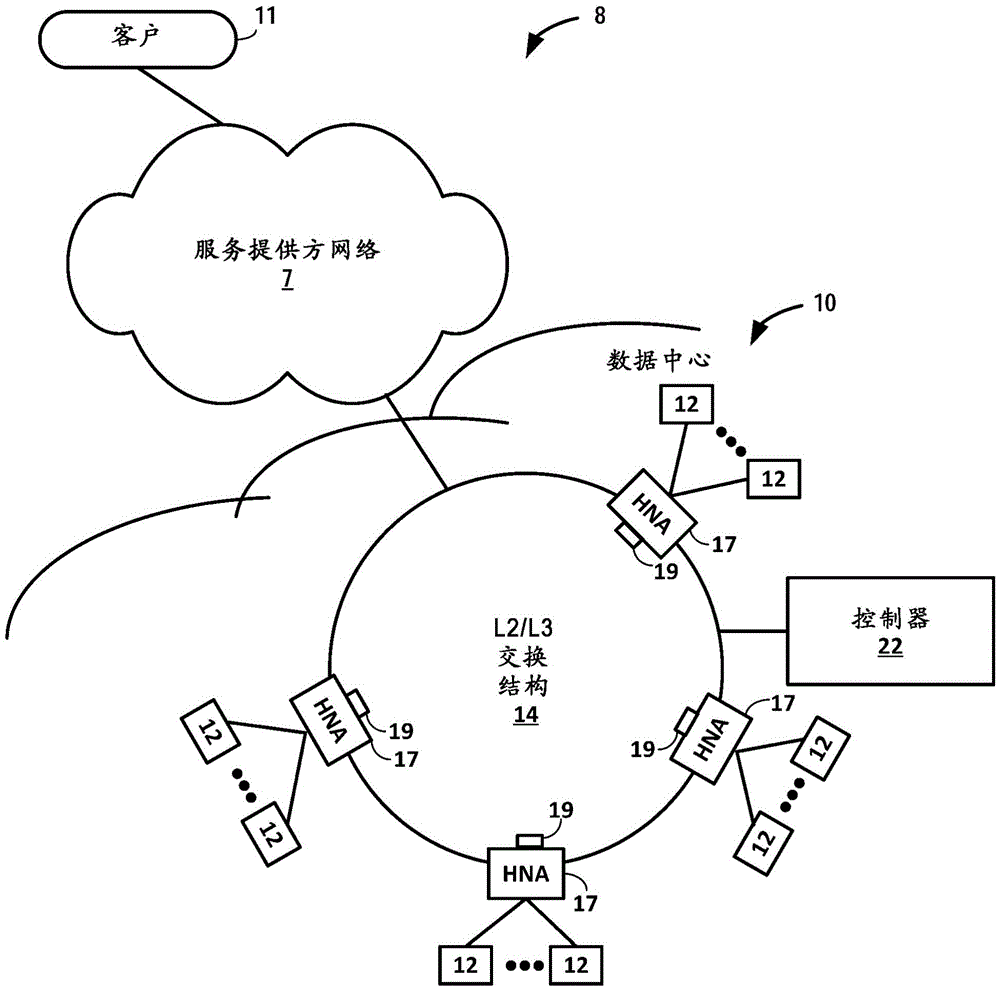

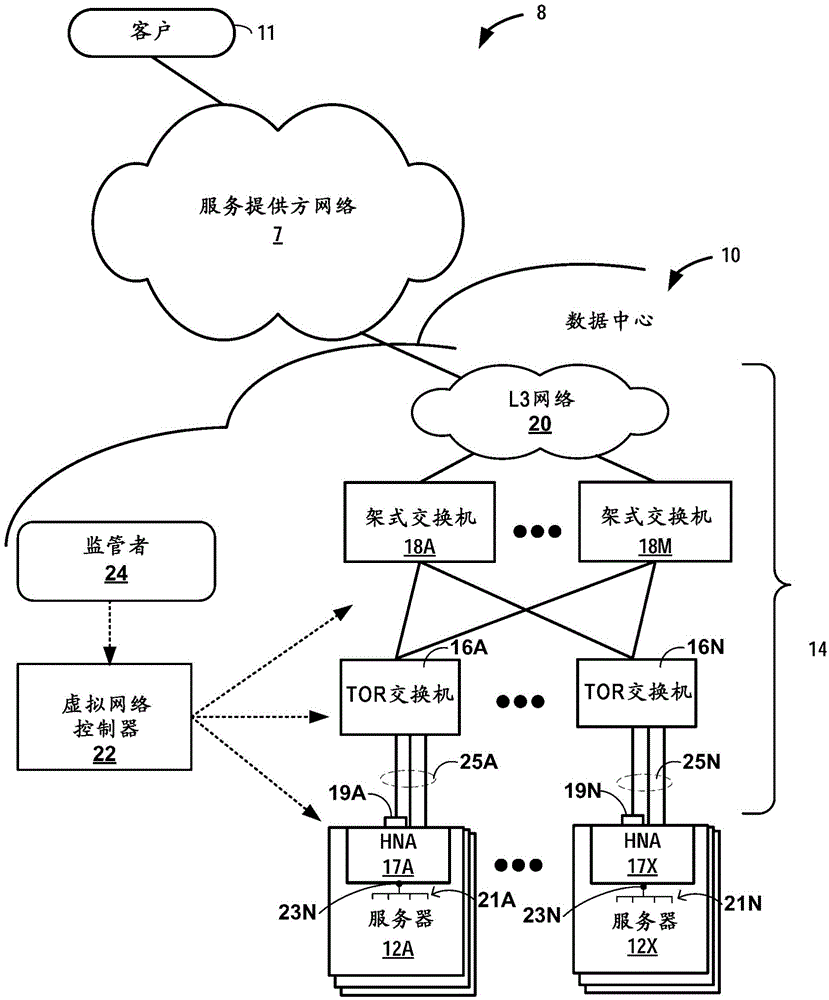

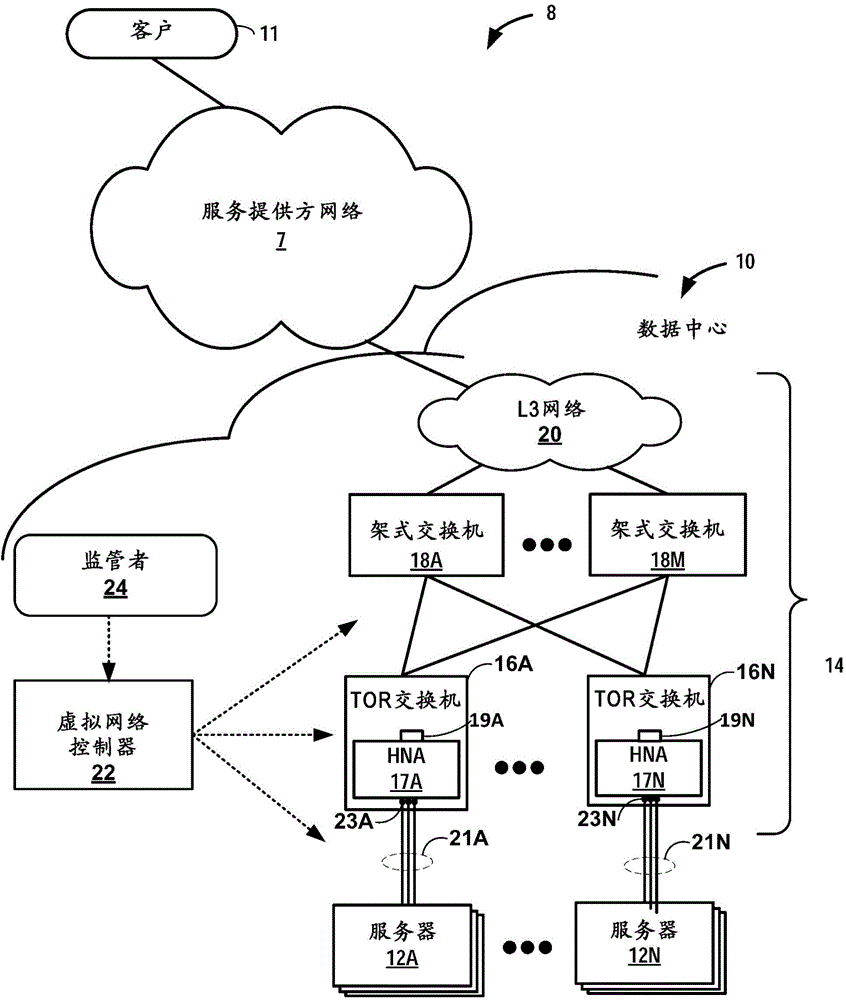

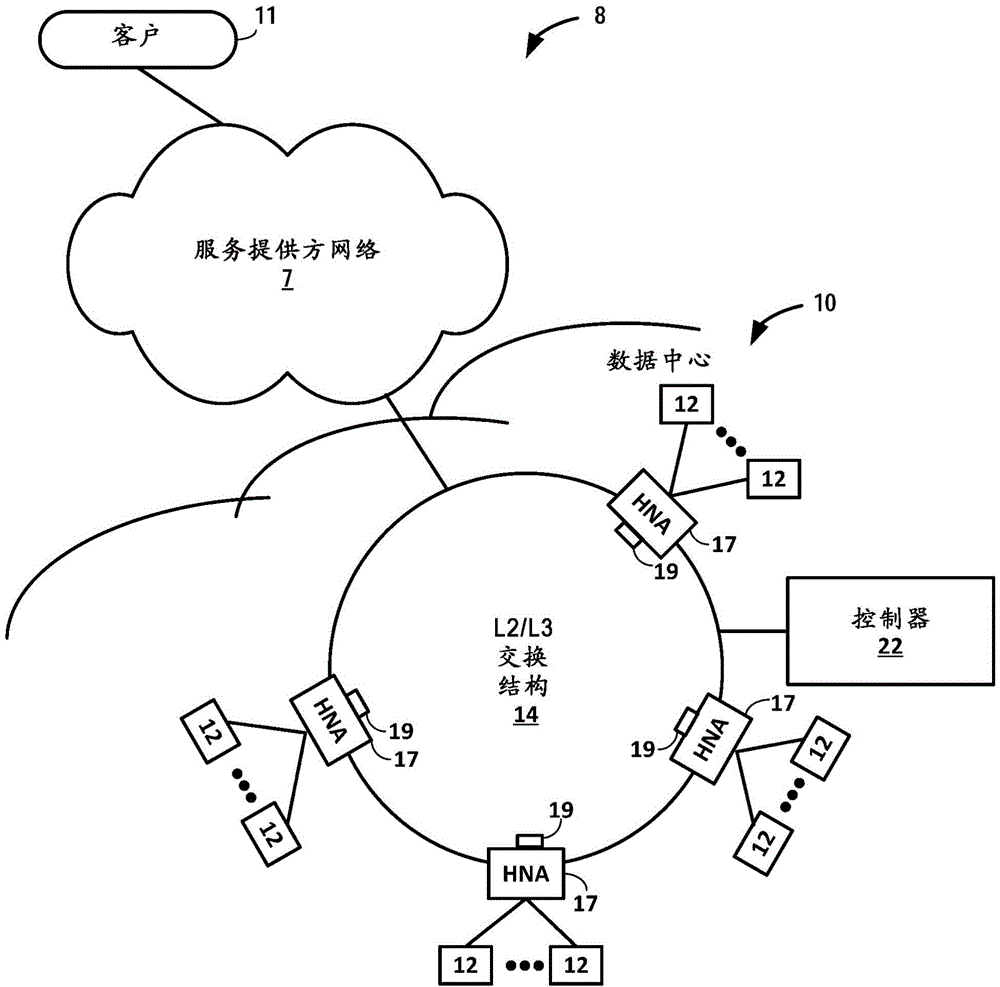

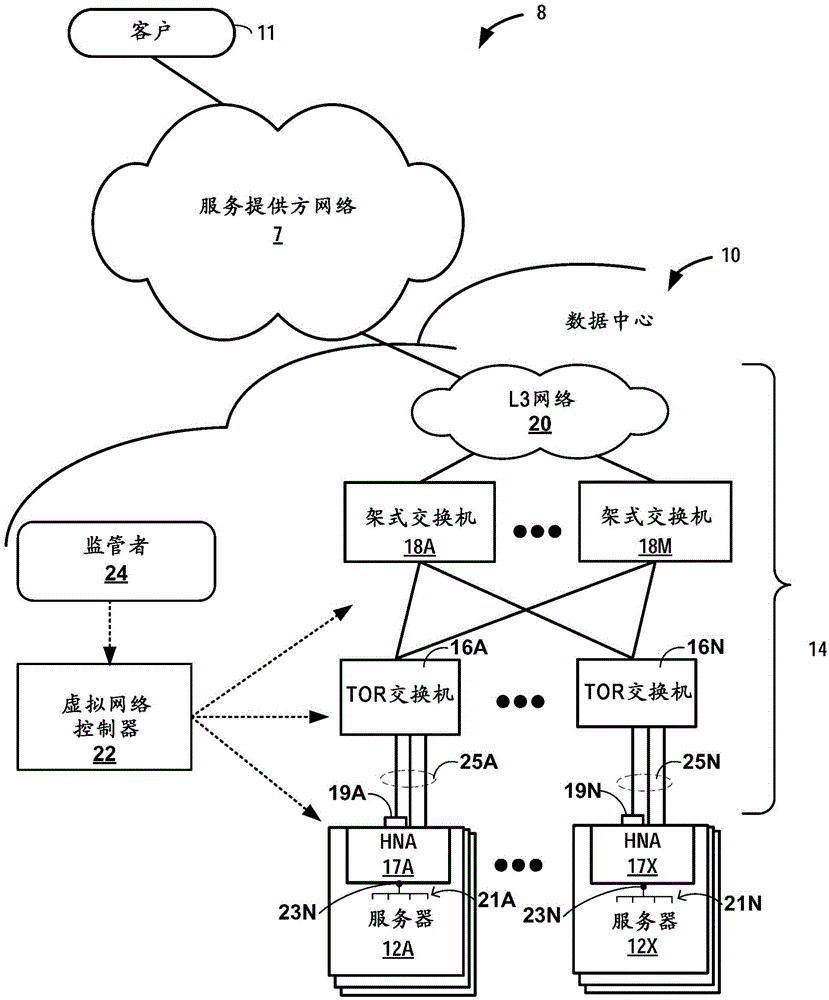

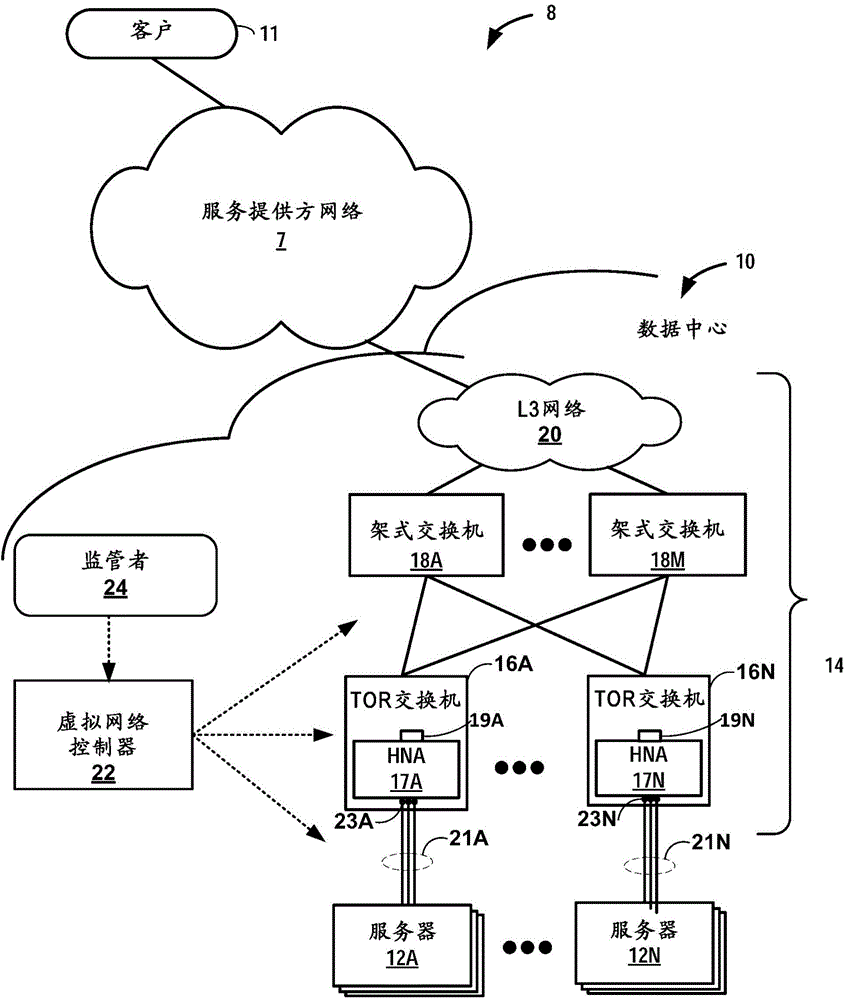

High-performance, scalable and drop-free data center switch fabric

Active · CN104954251A · Experience high speed and reliability · High-speed and reliable forwarding · Networks interconnection · Electric digital data processing · Off the shelf · Data center

A high-performance, scalable and drop-free data center switch fabric and infrastructure is described. The data center switch fabric may leverage low cost, off-the-shelf packet-based switching components (e.g., IP over Ethernet (IPoE)) and overlay forwarding technologies rather than proprietary switch fabric. In one example, host network accelerators (HNAs) are positioned between servers (e.g., virtual machines or dedicated servers) of the data center and an IPoE core network that provides point-to-point connectivity between the servers. The HNAs are hardware devices that embed virtual routers on one or more integrated circuits, where the virtual routers are configured to extend the one or more virtual networks to the virtual machines and to seamlessly transport packets over the switch fabric using an overlay network. In other words, the HNAs provide hardware-based, seamless access interfaces to overlay technologies used for communicating packet flows through the core switching network of the data center.

Owner:JUMIPER NETWORKS INC

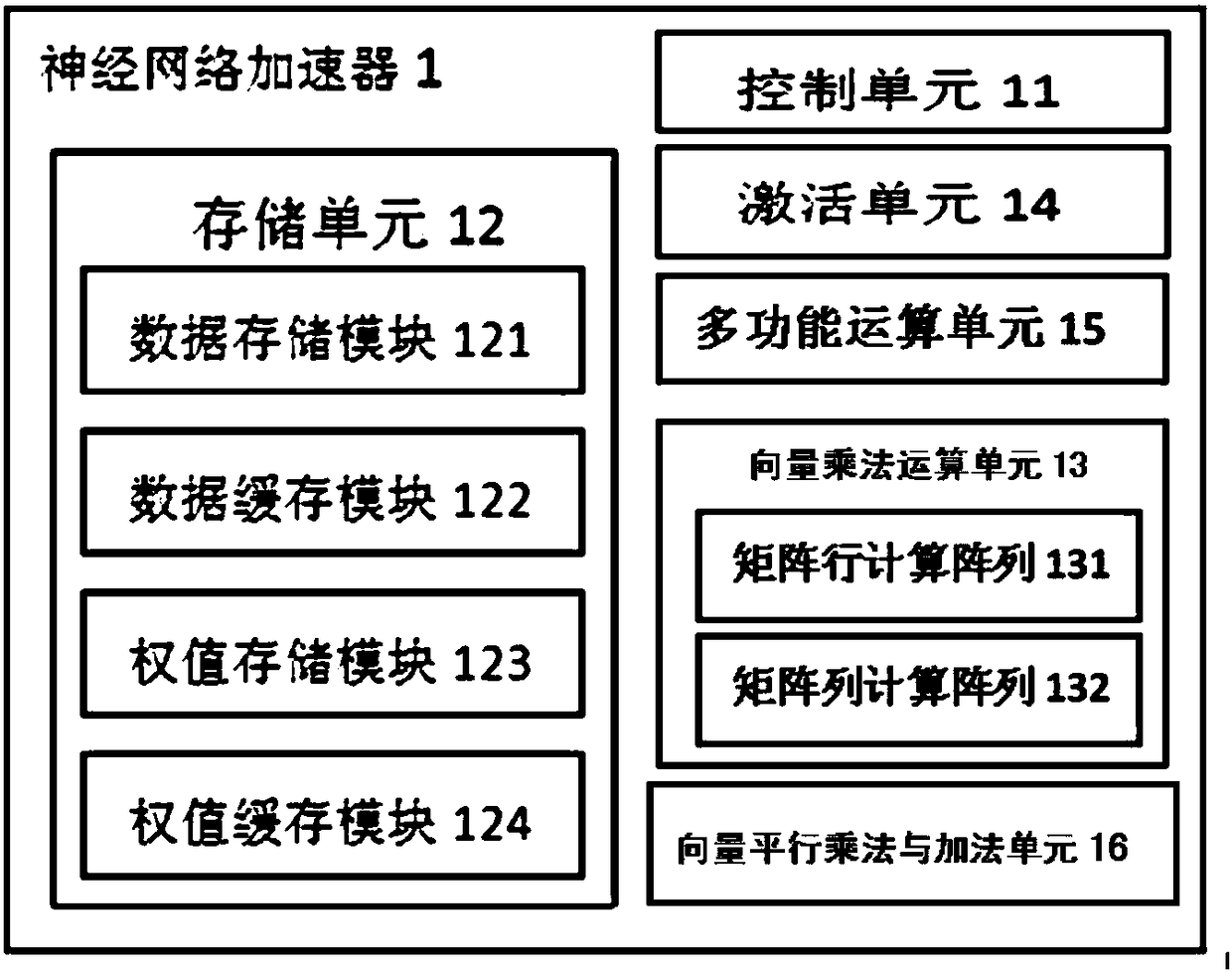

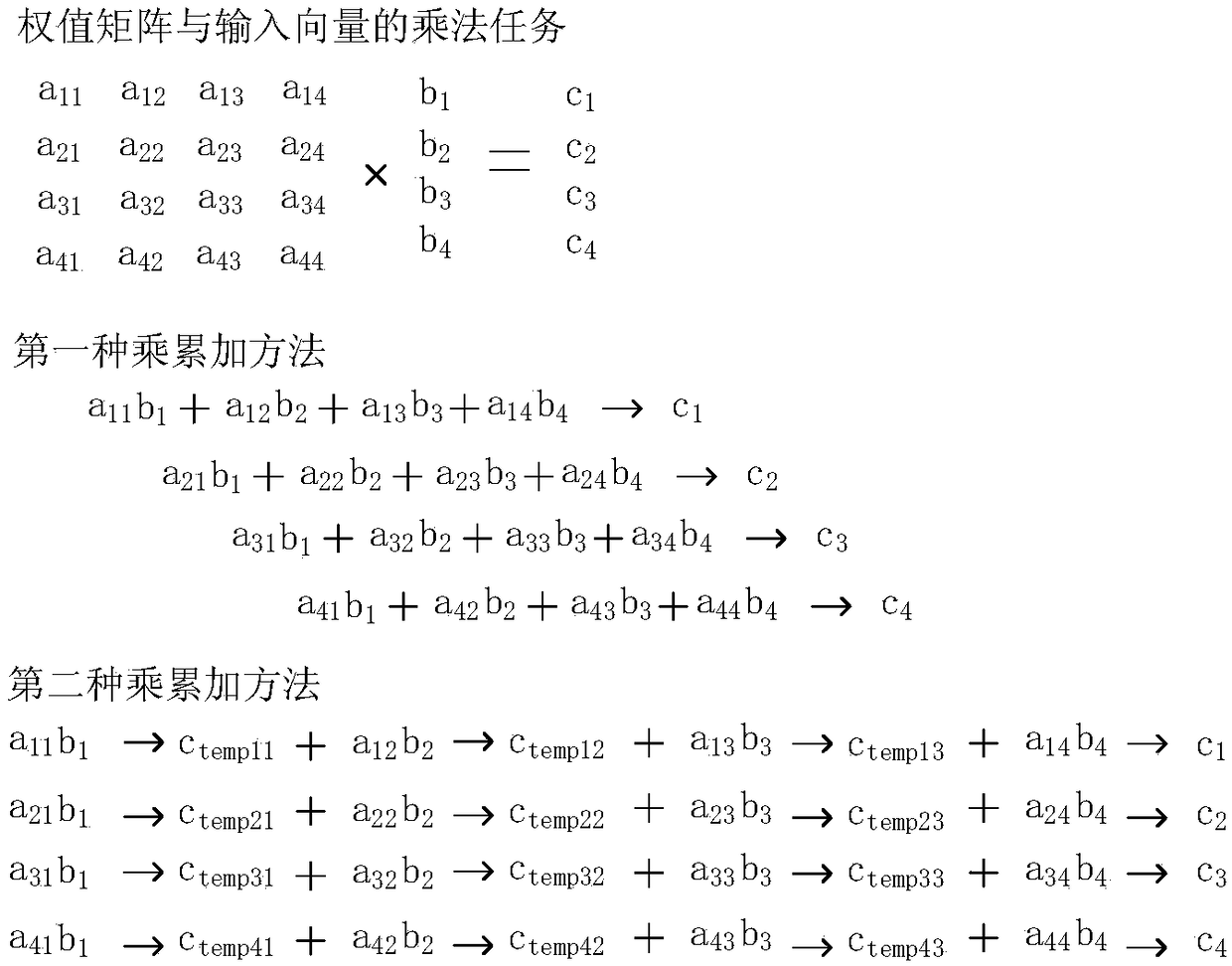

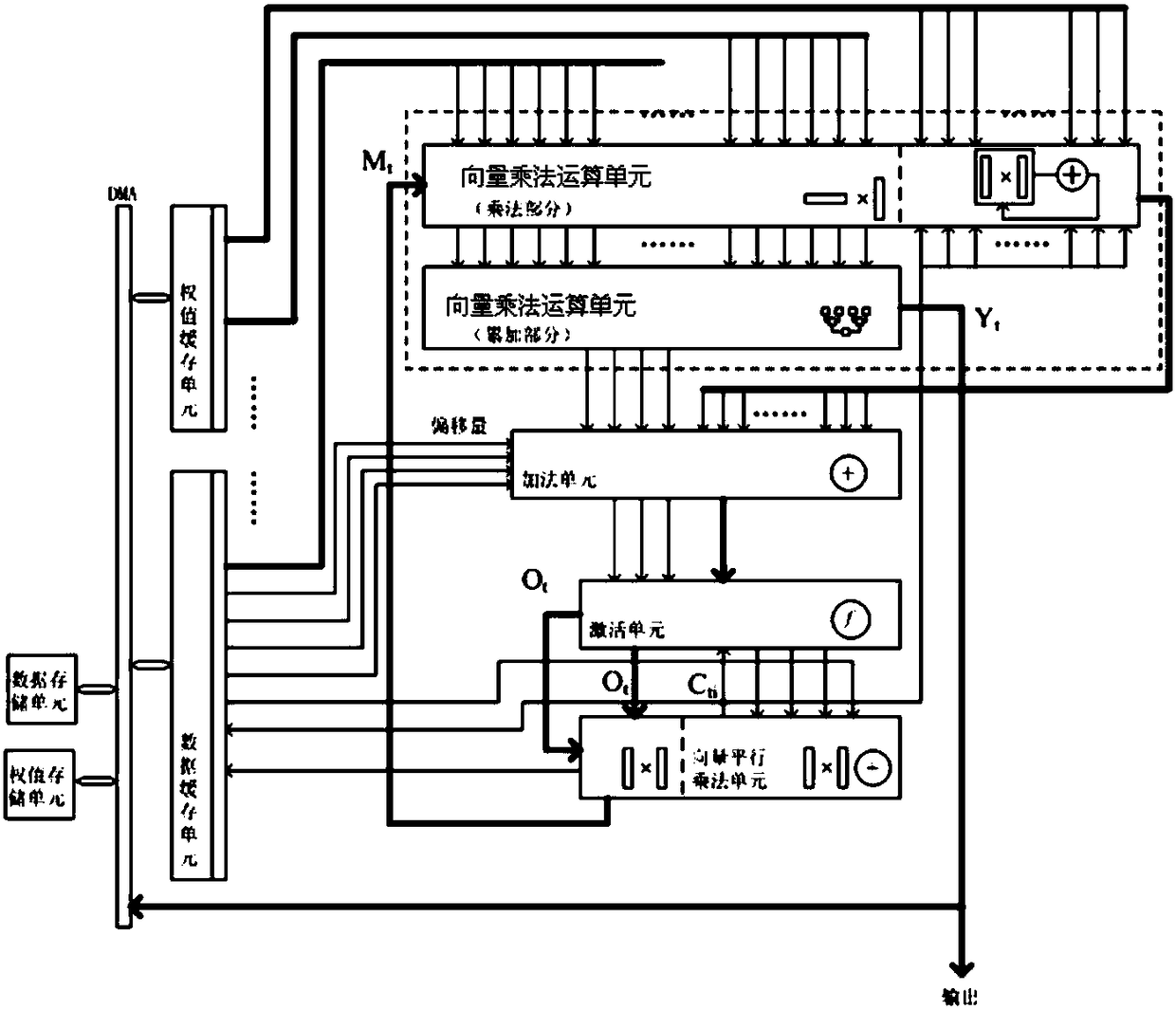

Neural network accelerator for facing multi-variant LSTM and data processing method thereof

Inactive · CN108376285A · Improve processing efficiency · Improve compatibility · Physical realisation · Biological activation · Neuron

The invention relates to a neural network accelerator for facing a multi-variant LSTM. The neural network accelerator comprises the components of a storage unit which is used for storing neuron data and weight data of the LSTM and the variant network and outputting; a matrix multiplication unit which is used for receiving data from the storage unit, executing a vector multiplying and accumulating operation on the received data and outputting an operation result; a multifunctional operating unit which is used for receiving data from a vector multiplication operation unit, performs a specific operation which corresponds with the LSTM or the variant network for aiming at the received data and outputs an operation result; an activating unit which is used for receiving data from the multifunctional operating unit and the storage unit, performs an activation operation for aiming at the received data and outputs an activation result; and a vector parallel multiplying and adding unit which is used for receiving data from the activating unit and the storage unit and performing a multiplying operation and an adding operation for aiming at the received data.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

Message preprocessing method and device

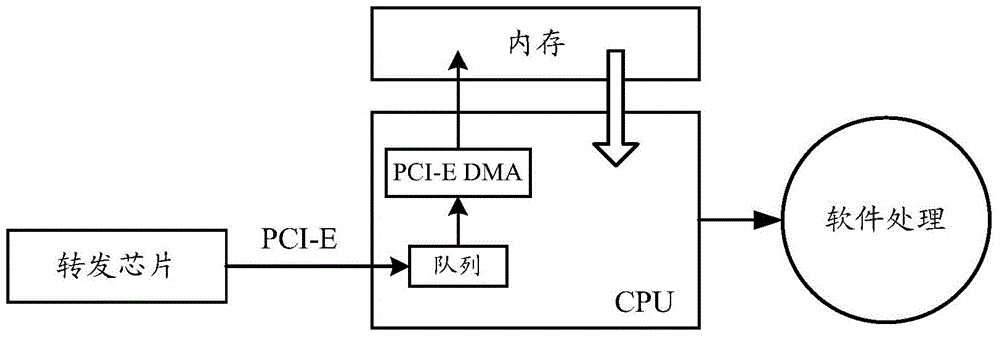

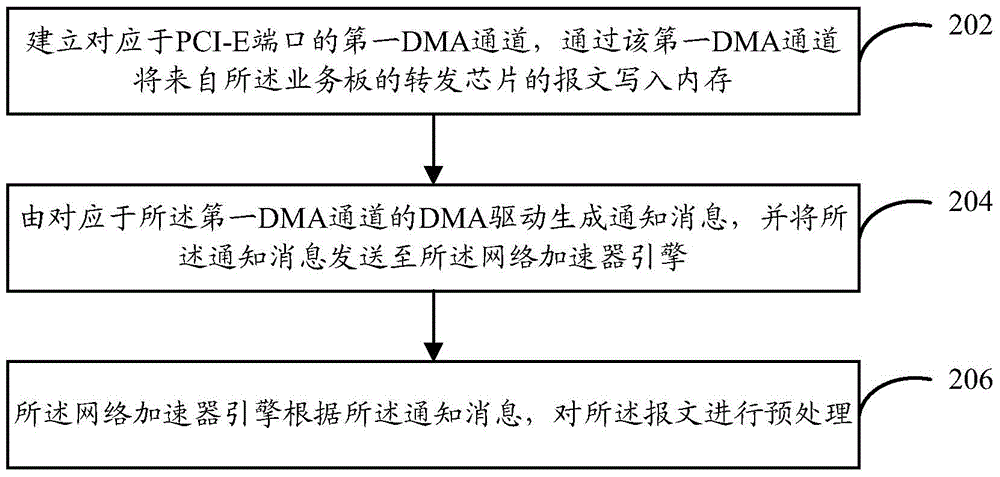

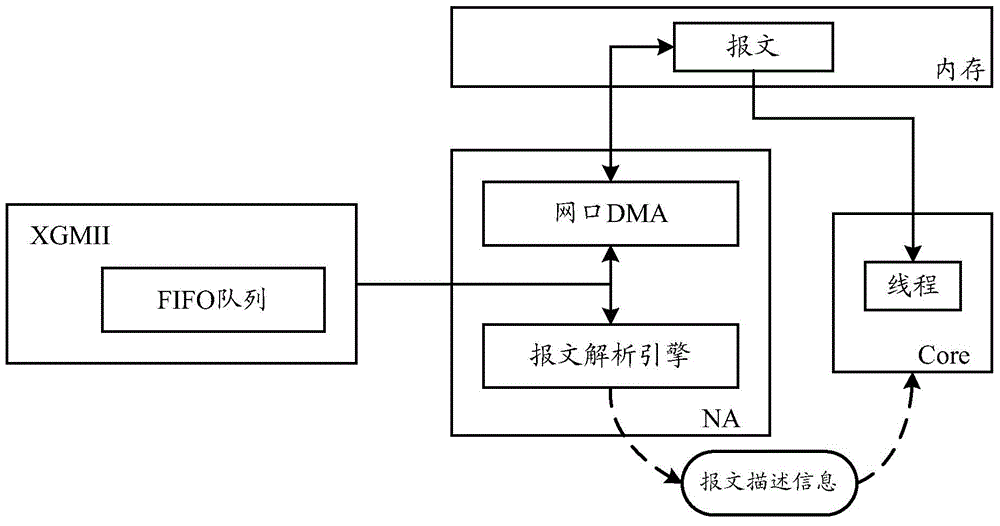

Active · CN103986585A · Avoid occupying · Improve performance · Data switching details · Pretreatment method · Web accelerator

The invention provides a message preprocessing method and device which are applied to a service board provided with a network accelerator engine. The method includes the steps that a first DMA channel corresponding to a PCI-E port is established, messages from a forwarding chip of the service board are written into a memory; a notification message is generated by a DMA driver corresponding to the first DMA channel, and the notification message is sent to the network accelerator engine, wherein the notification message contains attribute information of the messages; the messages are preprocessed by the network accelerator engine according to the notification message. According to the technical scheme, the messages from the forwarding chip are preprocessed through the network accelerator engine, and thus occupation of CPU resources is avoided, and promotion of overall performance of network equipment is facilitated.

Owner:NEW H3C TECH CO LTD

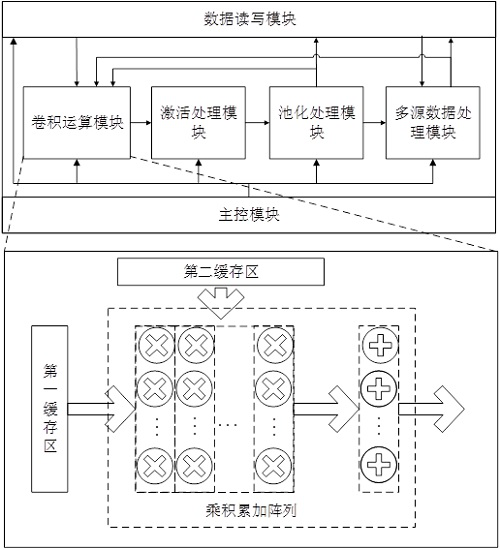

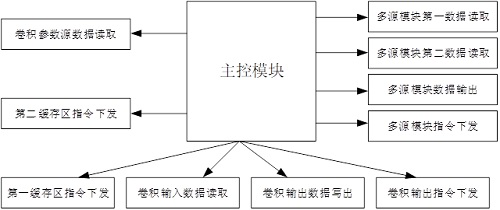

Neural network accelerator

Active · CN111931918A · Improve the ratio of computing power to power consumption · Realize data reuse · Neural architectures · Physical realisation · Massively parallel · External data

The invention relates to the technical field of artificial intelligence, and provides a neural network accelerator. According to the neural network accelerator, simplified design is carried out by taking a layer as a basic unit; all the modules can run in parallel to achieve simultaneous running of all layers of the neural network on hardware so as to greatly improve the processing speed, a main control module can complete cutting distribution of operation tasks and corresponding data of all the layers, and then all the modules execute the operation tasks of the corresponding layers. Moreover, a three-dimensional multiply-accumulate array is also realized in the neural network accelerator architecture; therefore, multiplication and accumulation operations can be operated in parallel on a large scale; the convolution calculation efficiency is effectively improved; data multiplexing and efficient circulation of convolution operation parameters and convolution operation data among the modules are realized through a first cache region and a second cache region, data multiplexing between layers of the neural network is realized to reduce external data access, so that the power consumption is reduced while the data processing efficiency is improved, and the neural network accelerator can improve the computing power consumption ratio.

Owner:SHENZHEN MINIEYE INNOVATION TECH CO LTD

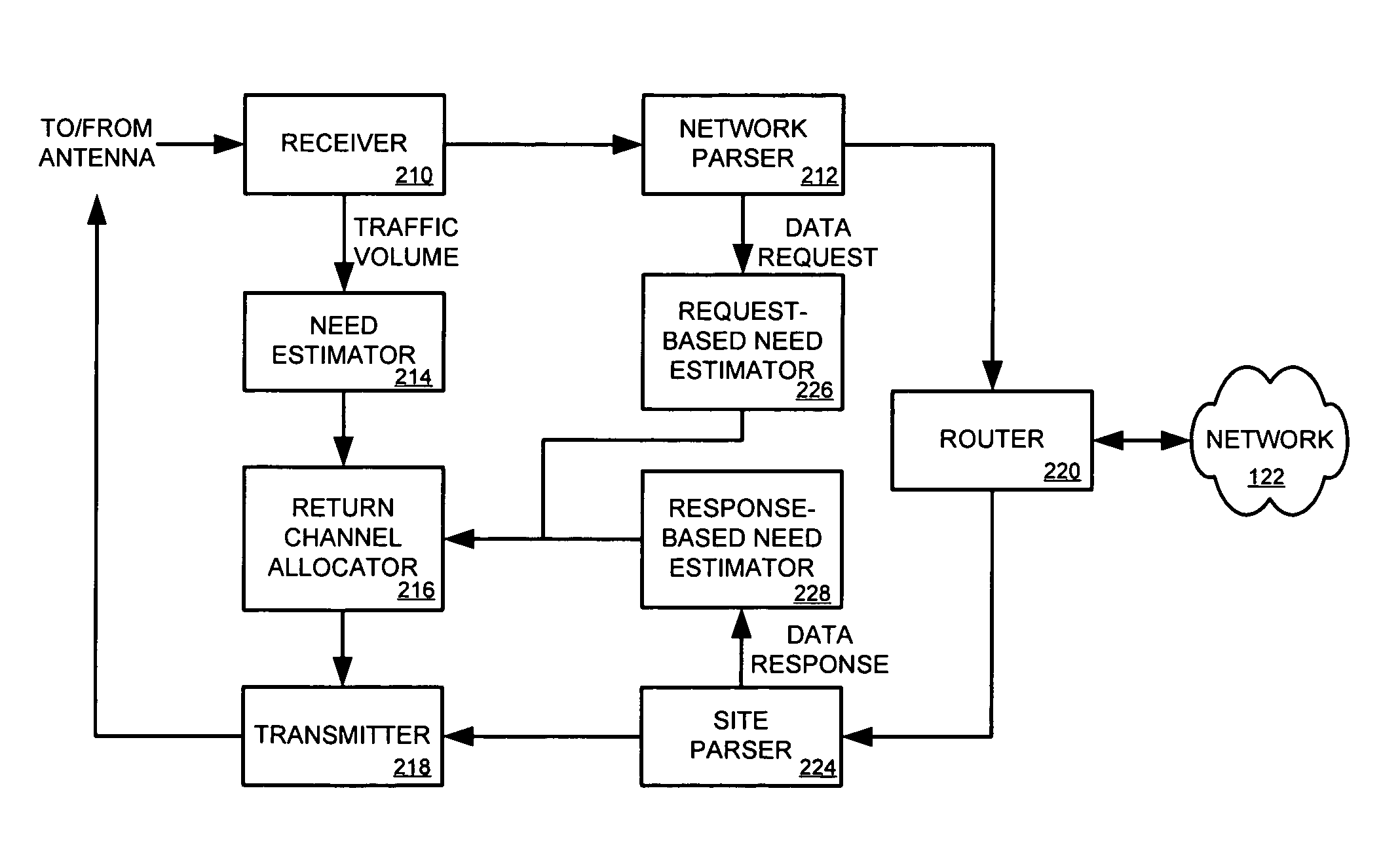

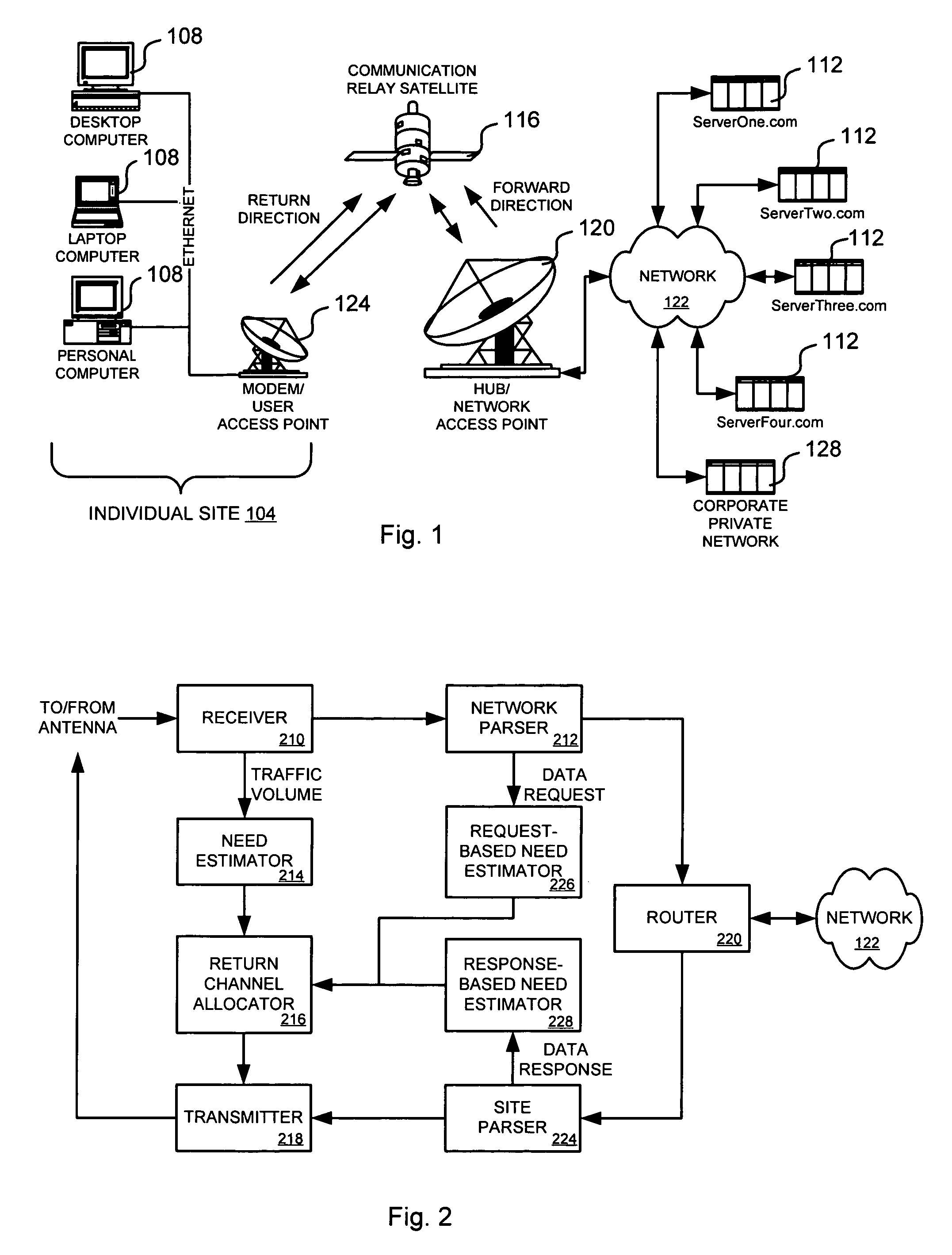

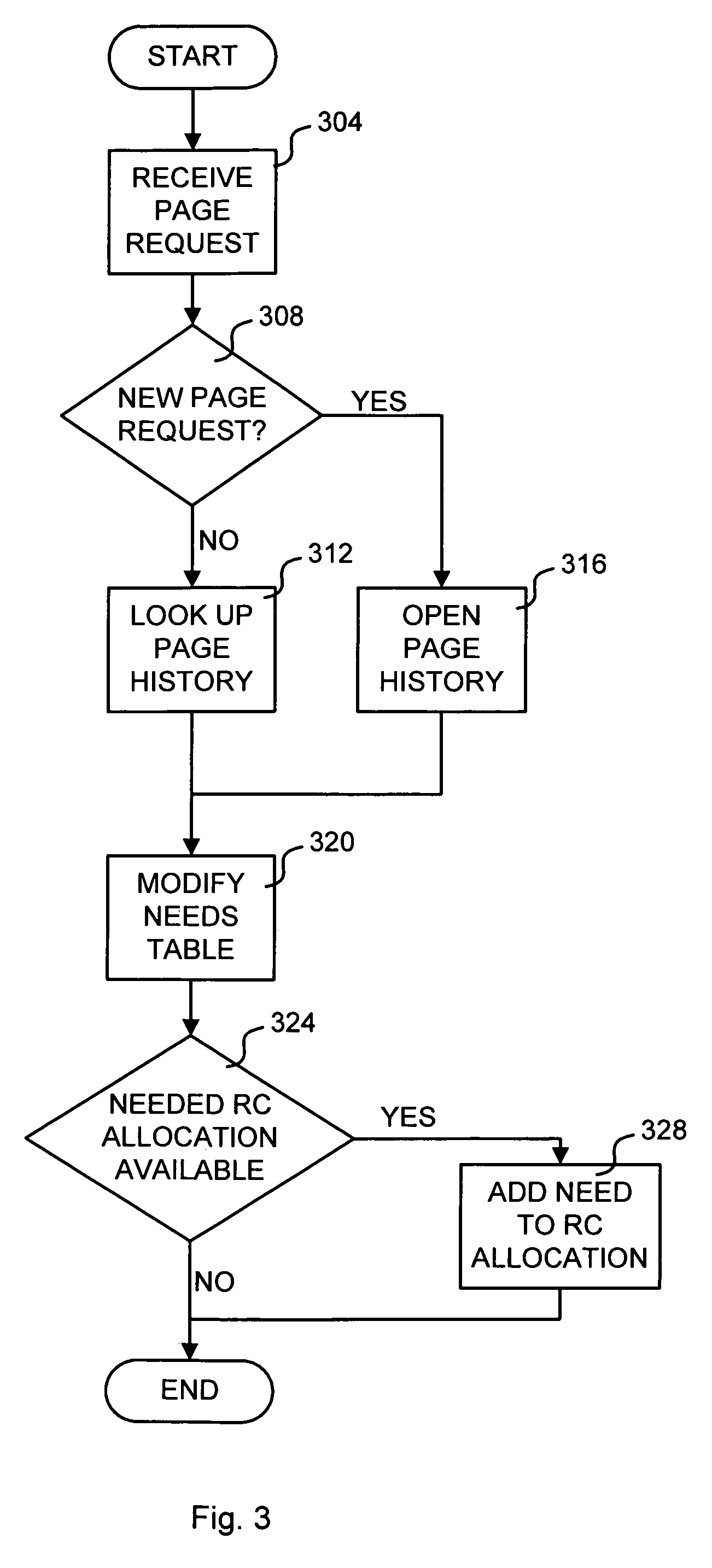

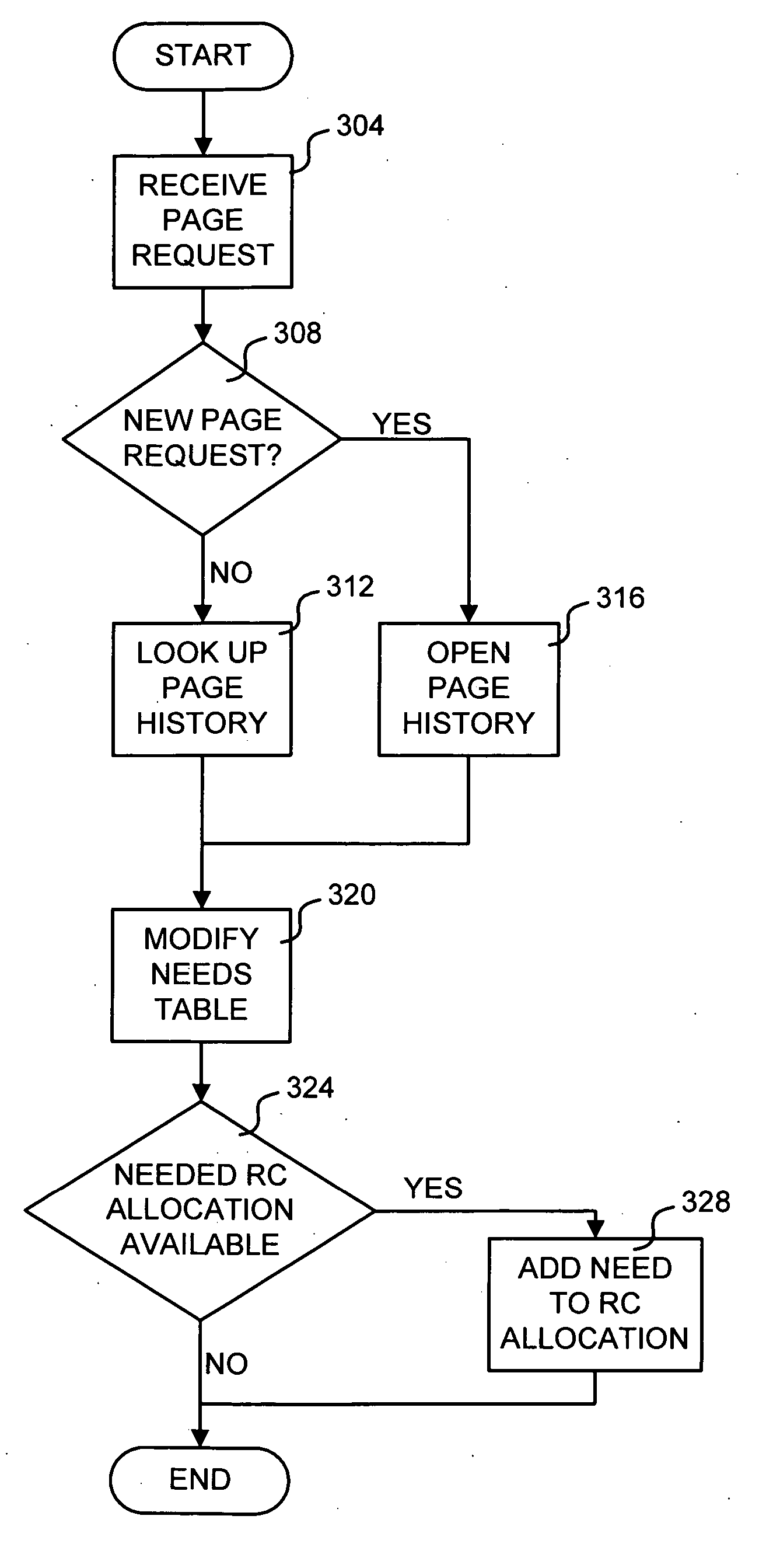

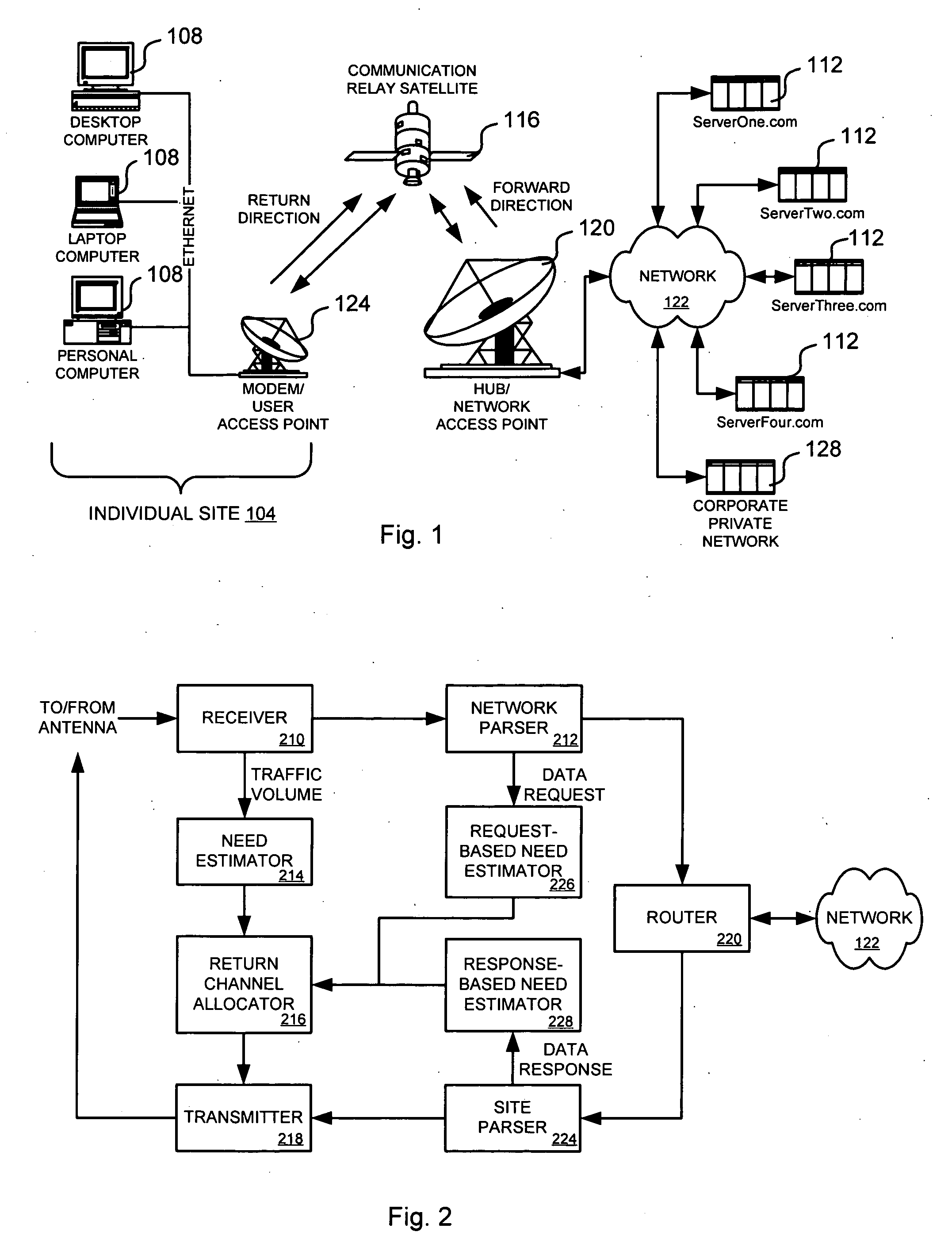

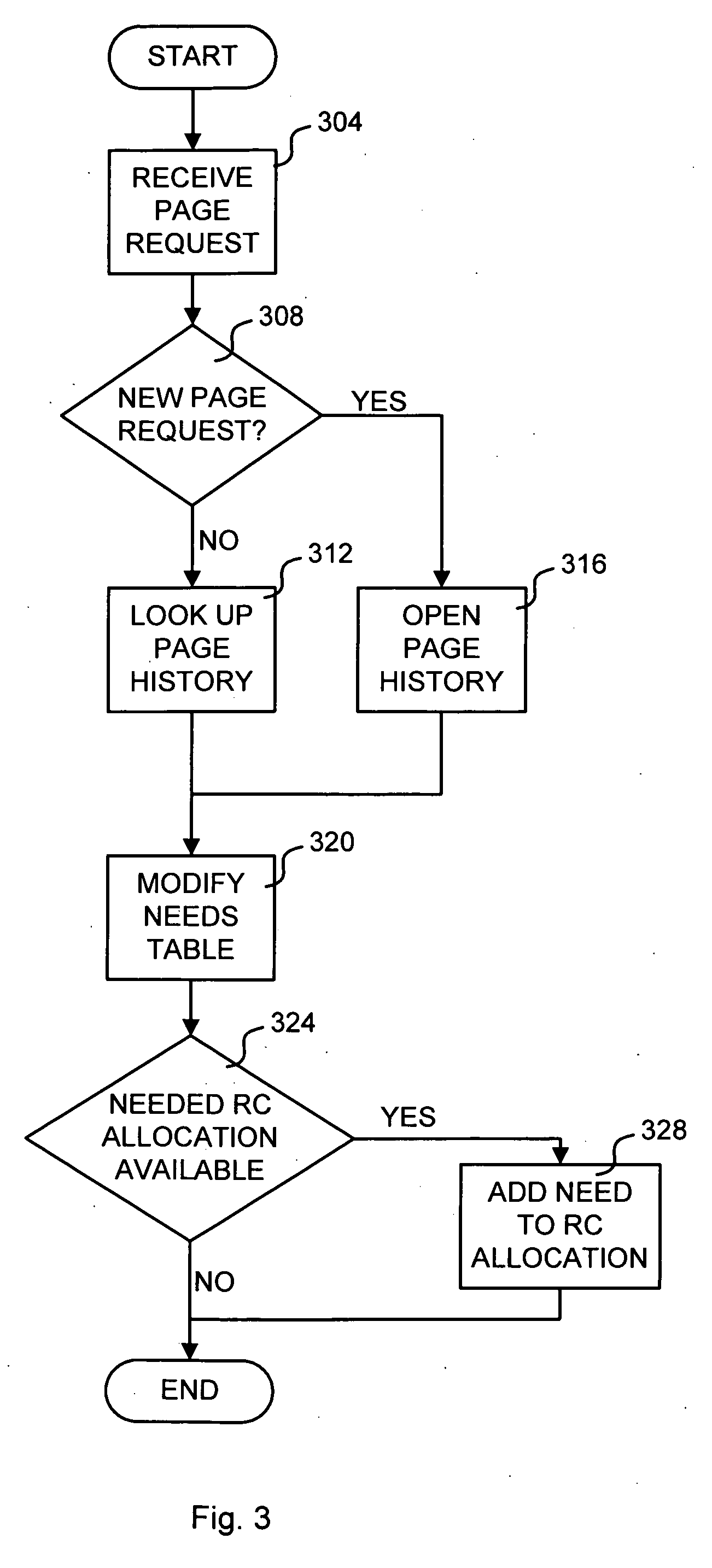

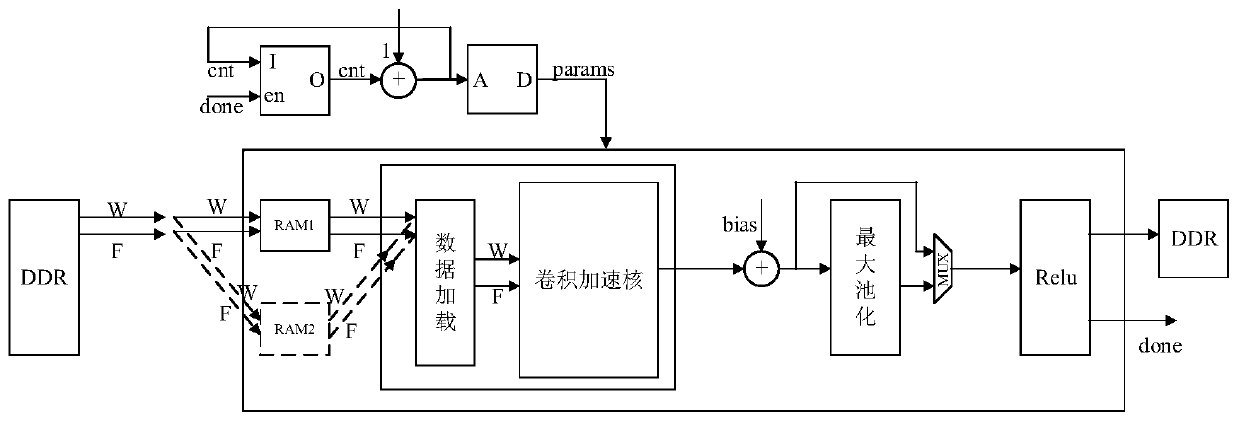

Network accelerator for controlled long delay links

Active · US7769863B2 · Digital computer details · Radio transmission · Telecommunications link · Communications system

A communication system for providing network access over a shared communication link is disclosed. The communication system includes a user access point, a network access point and a communications link. The user access point is coupled to one or more user terminals that access a remote network. The network access point is coupled to the remote network. The communications link couples the user access point and the network access point. The communications link is at least partially controlled by the network access point, which monitors information passed between the remote network and the user access point to create an estimate of future usage of the communications link by the user access point based on the information. The network access point allocates communications link resources for the user access point based on the estimate.

Owner:VIASAT INC
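The allocation step at the network access point can be sketched in Python as a proportional split of link resources according to each user access point's estimated future usage. How the estimate itself is produced is not covered here; the function name, the notion of discrete "slots", and the largest-remainder rounding are assumptions for this example only.

```python
from typing import Dict

def allocate(estimates: Dict[str, float], total_slots: int) -> Dict[str, int]:
    """Share link slots in proportion to each access point's estimated usage."""
    total = sum(estimates.values())
    if total == 0:
        return {name: 0 for name in estimates}
    shares = {name: est * total_slots / total for name, est in estimates.items()}
    alloc = {name: int(share) for name, share in shares.items()}
    # Hand out slots lost to rounding, largest fractional remainder first.
    leftover = total_slots - sum(alloc.values())
    by_remainder = sorted(shares, key=lambda n: shares[n] - alloc[n], reverse=True)
    for name in by_remainder[:leftover]:
        alloc[name] += 1
    return alloc

# Estimated bytes each user access point is expected to send next (illustrative).
estimated = {"ap1": 600.0, "ap2": 300.0, "ap3": 100.0}
slots = allocate(estimated, total_slots=10)
```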

Host network accelerator for data center overlay network

Active · CN104954247A · Experience high speed and reliability · High-speed and reliable forwarding · Data switching networks · Electric digital data processing · GPRS core network · Off the shelf

A high-performance, scalable and drop-free data center switch fabric and infrastructure is described. The data center switch fabric may leverage low cost, off-the-shelf packet-based switching components (e.g., IP over Ethernet (IPoE)) and overlay forwarding technologies rather than proprietary switch fabric. In one example, host network accelerators (HNAs) are positioned between servers (e.g., virtual machines or dedicated servers) of the data center and an IPoE core network that provides point-to-point connectivity between the servers. The HNAs are hardware devices that embed virtual routers on one or more integrated circuits, where the virtual routers are configured to extend the one or more virtual networks to the virtual machines and to seamlessly transport packets over the switch fabric using an overlay network. In other words, the HNAs provide hardware-based, seamless access interfaces to overlay technologies used for communicating packet flows through the core switching network of the data center.

Owner:JUMIPER NETWORKS INC

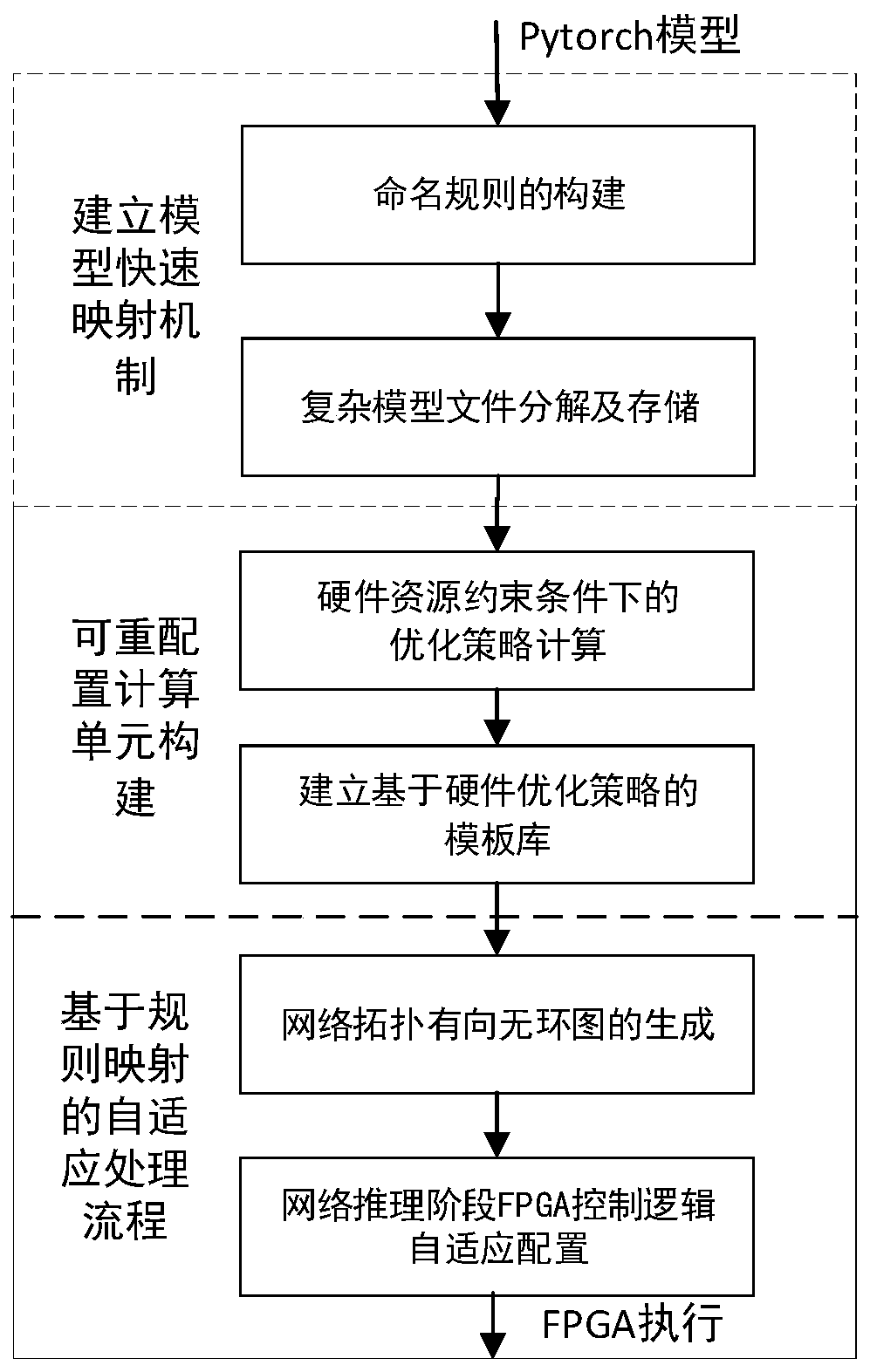

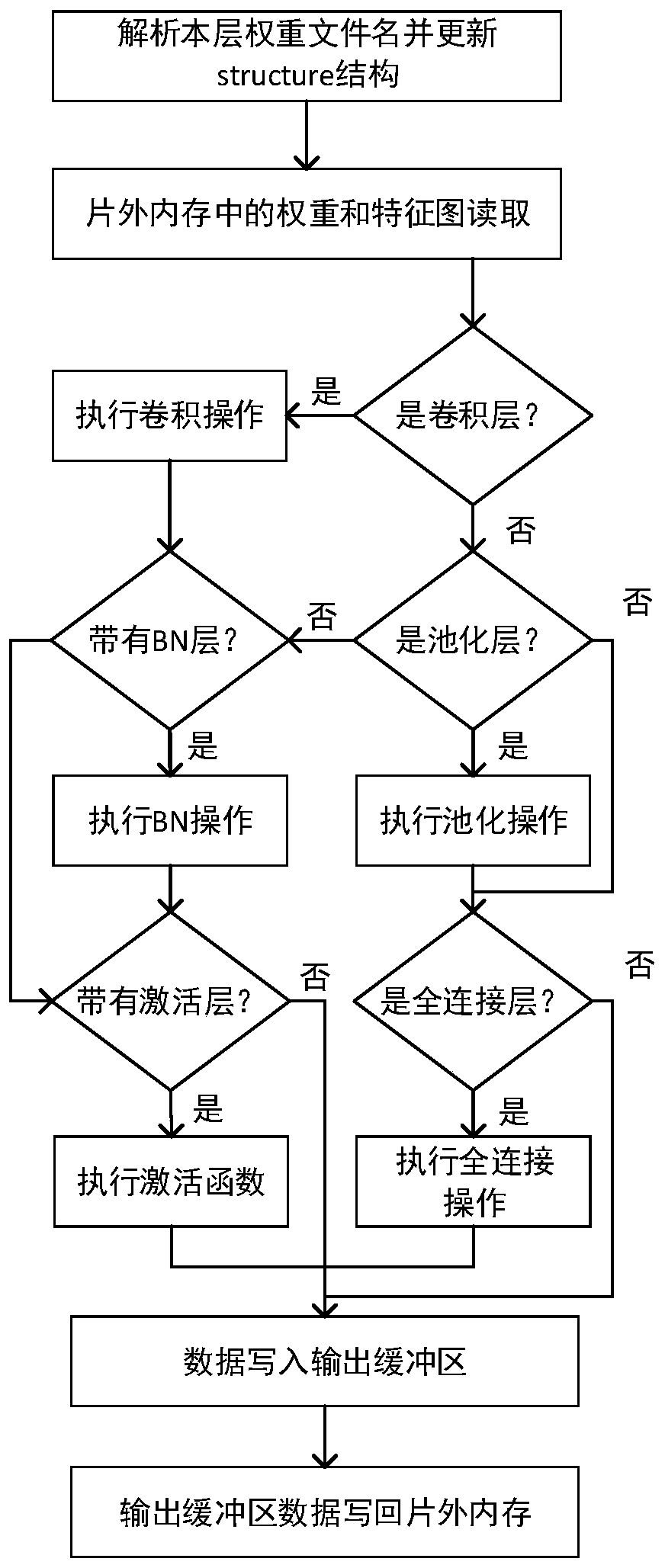

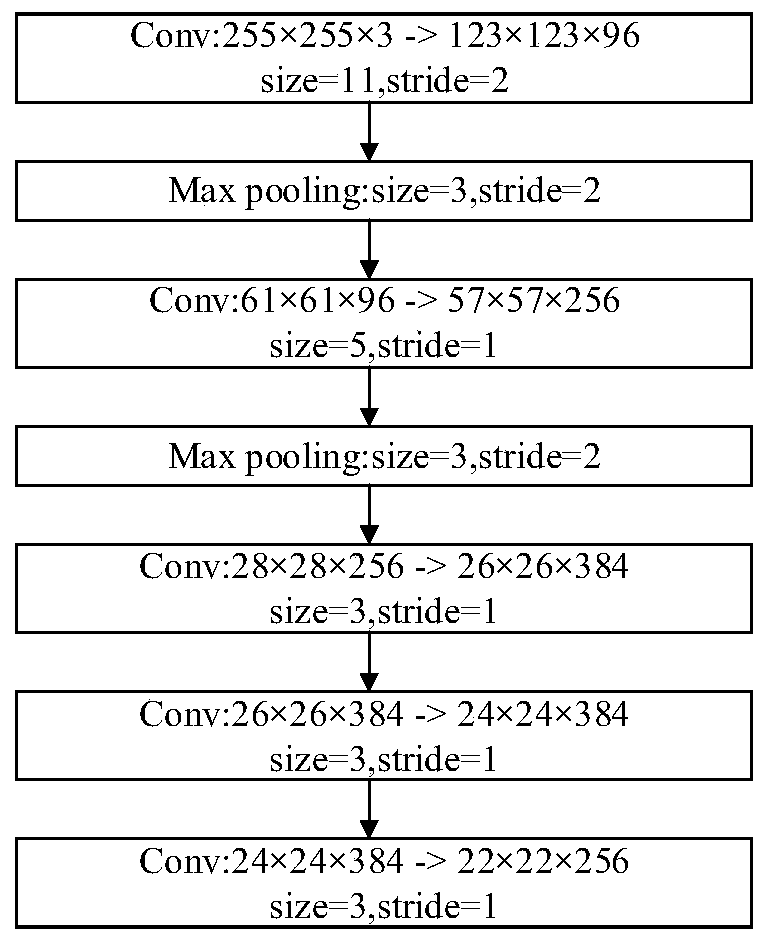

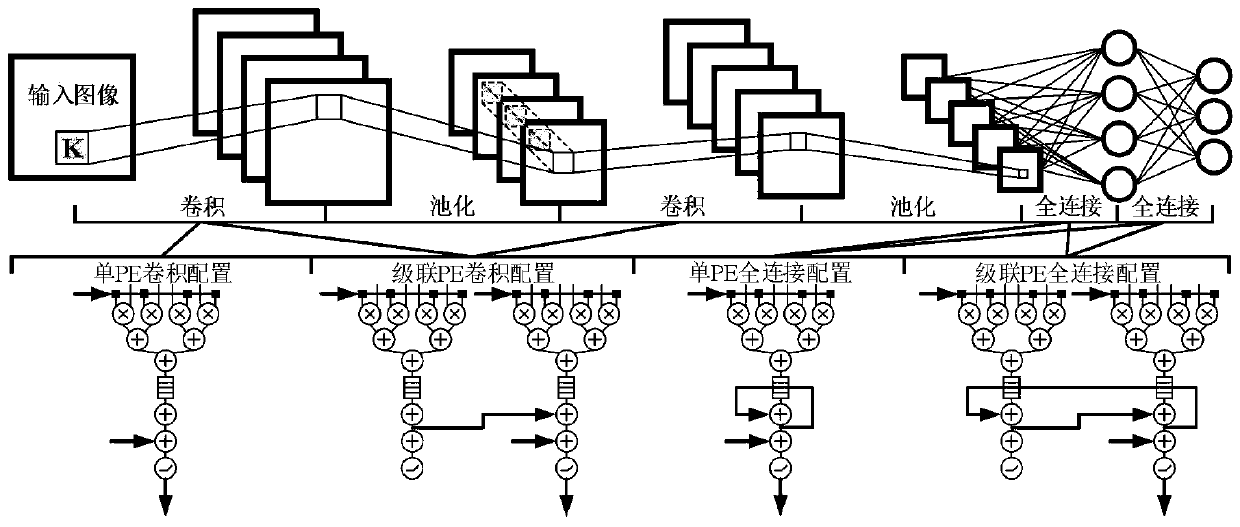

Method for quickly deploying convolutional neural network on FPGA (Field Programmable Gate Array) based on Pytorch framework

Active · CN111104124A · Make up for the problem of not including network topology information · Versatility · Neural architectures · Physical realisation · Theoretical computer science · Reconfigurable computing

The invention discloses a method for quickly deploying a convolutional neural network on an FPGA (Field Programmable Gate Array) based on a Pytorch framework. The method comprises the steps of establishing a model quick mapping mechanism, constructing a reconfigurable computing unit and carrying out self-adaptive processing flow based on rule mapping; when a convolutional neural network is defined under a Pytorch framework, establishing a model fast mapping mechanism through construction of naming rules; making optimization strategy calculation under a hardware resource constraint condition, establishing a template library based on a hardware optimization strategy and creating a reconfigurable calculation unit i at an FPGA end; and finally, decomposing the complex network model file at the FPGA end in the self-adaptive processing flow based on rule mapping, abstracting the network into a directed acyclic graph, and finally generating a neural network accelerator to realize an integrated flow from the model file of the Pytorch framework to FPGA deployment. The directed acyclic graph of the network can be established through a model fast mapping mechanism, the FPGA deployment process can be completed only by inputting hardware design variables in the FPGA deployment process, and the method is simple and high in universality.

Owner:BEIHANG UNIV

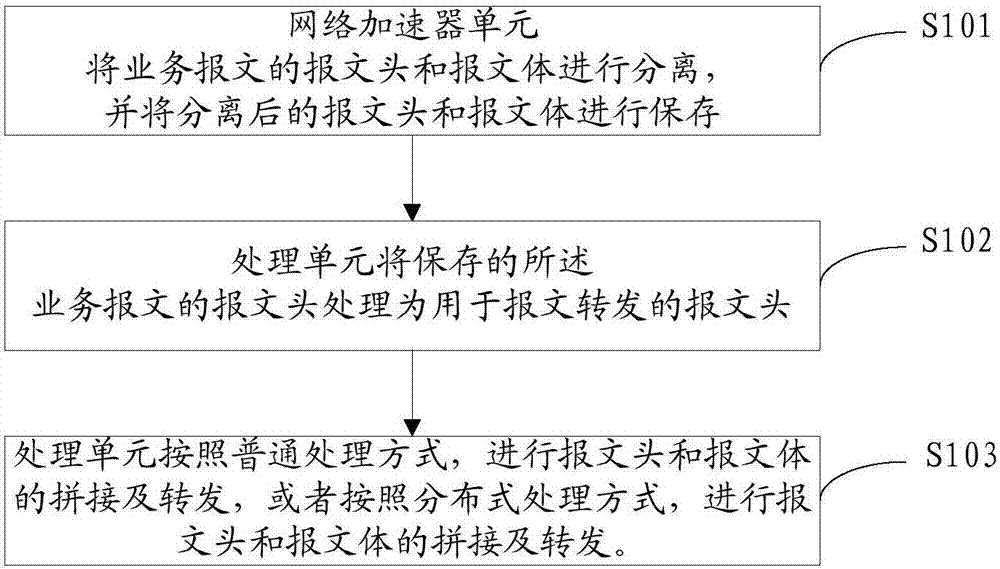

Message processing method, device and base station

Pending · CN107547417A · Improve processing efficiency · Improve forwarding efficiency · Data switching networks · Intelligent Network · Message processing

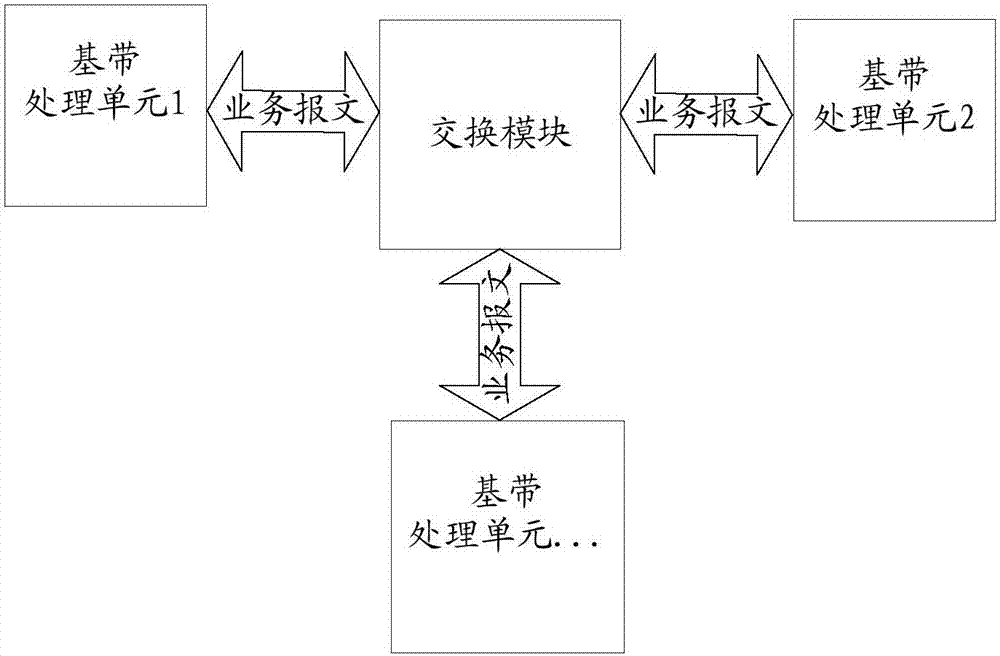

The invention discloses a message processing method, device and a base station, relating to the field of intelligent network communication. The method comprises the following steps: a network accelerator unit separates a message header and a message body of a service message, and separately stores the separated message header and message body; a processing unit processes the stored message header of the service message to a message header for message forwarding; and the processing unit splices and forwards the message header for message forwarding and the message body according to a general processing mode, or, the processing unit splices and forwards the message header for message forwarding and the message body by using the network accelerator unit according to a distributed processing mode. The service message is separated and spliced by using the network accelerator unit, and thus CPU resources can be saved, and the processing and forwarding efficiency of the service message can be improved.

Owner:ZTE CORP
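The separate, process, and splice steps in this abstract can be sketched in Python as three small functions. The fixed 20-byte header length, the next-hop field in the last four header bytes, and all function names are assumptions invented for the example; the patent does not define the message layout.

```python
HEADER_LEN = 20  # assumed fixed header size for this sketch

def separate(message: bytes):
    """Accelerator step: split a service message into header and body."""
    return message[:HEADER_LEN], message[HEADER_LEN:]

def rewrite_header(header: bytes, next_hop: int) -> bytes:
    """Processing-unit step: build the forwarding header (hypothetical layout).

    Only the header is touched; the body is never copied through this step.
    """
    return header[:-4] + next_hop.to_bytes(4, "big")

def splice(header: bytes, body: bytes) -> bytes:
    """Join the forwarding header and the stored body before sending."""
    return header + body

message = bytes(HEADER_LEN) + b"payload"
header, body = separate(message)
forwarded = splice(rewrite_header(header, next_hop=7), body)
```

Because the processing unit handles only the short header, the cost of the step in the middle does not grow with the size of the message body.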

Accelerator of pulmonary-nodule detection neural-network and control method thereof

Inactive · CN108389183A · Reduce resource consumption · Improve data throughput · Image enhancement · Image analysis · Pulmonary nodule · Activation function

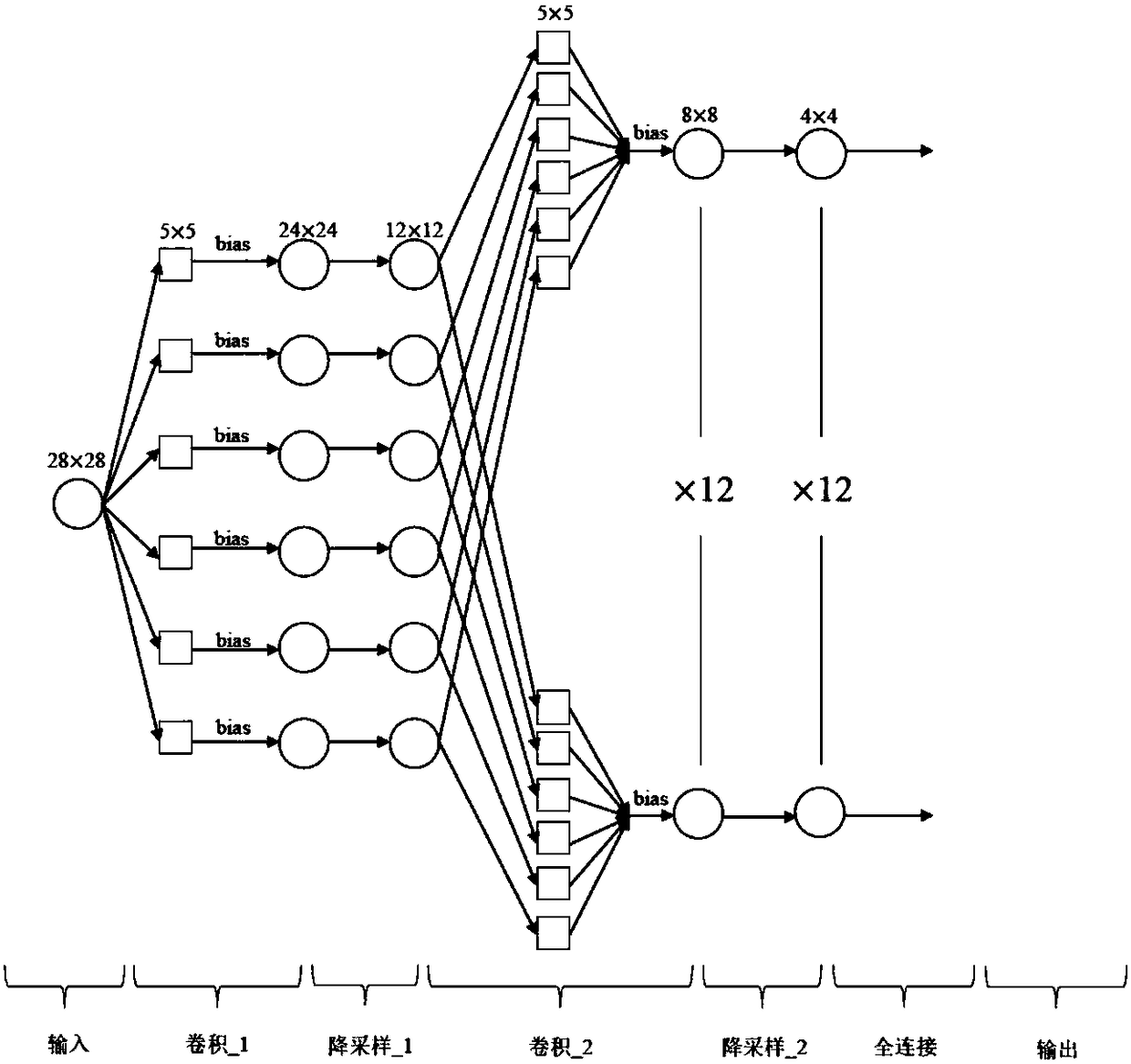

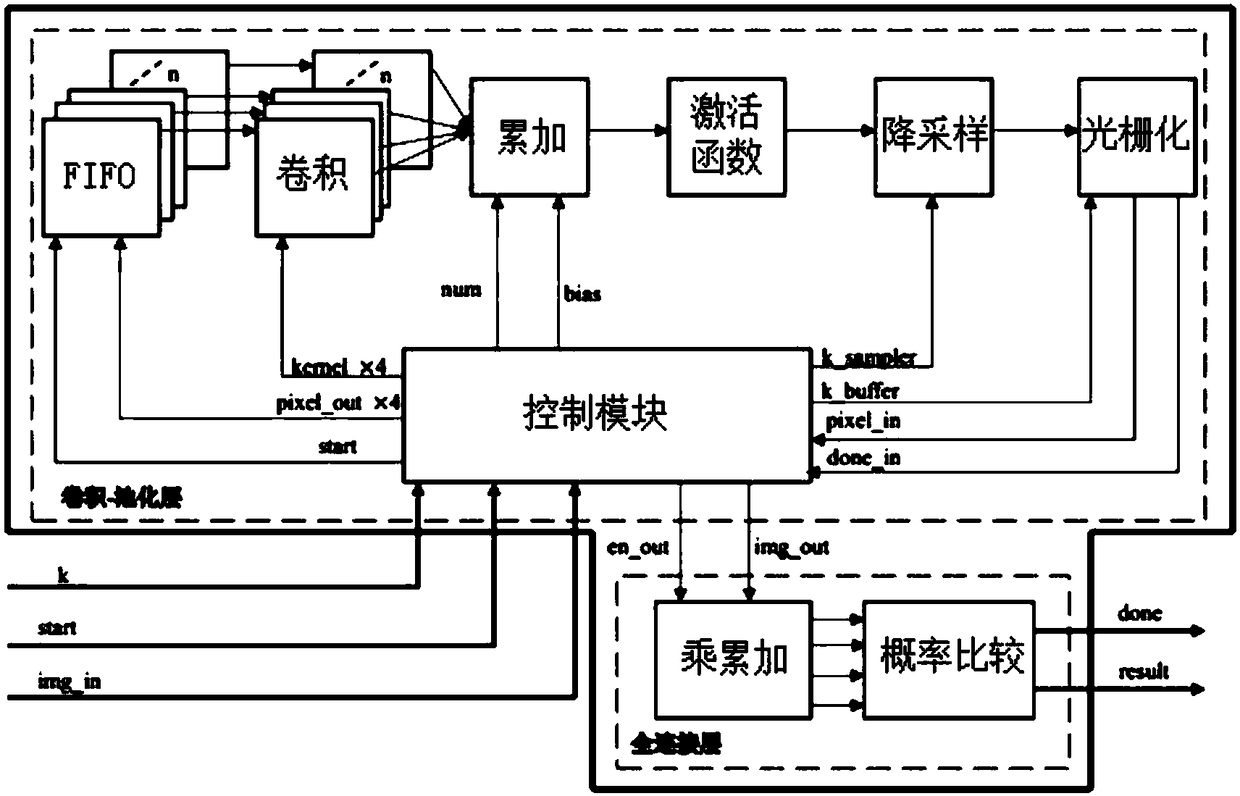

The invention provides an accelerator of a pulmonary-nodule detection neural-network and a control method thereof. Input data enter an FIFO module through a control module, then enter a convolution module to complete multiplication and accumulation operations in convolutions, and enter an accumulation module after the multiplication and accumulation operations to accumulate intermediate values; the intermediate values after accumulation enter an activation function module for activation function operations, enter a down-sampling module after the activation function operations for mean pooling, and enter a rasterization module after mean pooling for rasterization; output is converted to a one-dimensional vector, and is returned to the control module; and the control module calls and configures the FIFO module, the convolution module, the accumulation module, the activation function module, the down-sampling module and the rasterization module to control iteration, and transmits iteration results to a fully connected layer for multiplication and accumulation operations and probability comparison. According to the accelerator, iteration control logic is optimized for the pulmonary-nodule detection network through the control module to reduce resource consumption, and a data throughput rate is increased.

Owner:SHANGHAI JIAO TONG UNIV
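The module chain in this abstract (convolution, activation, mean pooling, rasterization) can be followed in a plain-Python sketch of one iteration. ReLU as the activation function, the 2x2 pooling window, and the sample input are assumptions; the abstract does not specify them.

```python
def conv2d(image, kernel):
    """Convolution module: multiply-accumulate over each window (no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(len(image) - kh + 1):
        row = []
        for j in range(len(image[0]) - kw + 1):
            row.append(sum(image[i + a][j + b] * kernel[a][b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out

def relu(fmap):
    """Activation module (ReLU is an assumed choice)."""
    return [[max(0.0, v) for v in row] for row in fmap]

def mean_pool2(fmap):
    """Down-sampling module: average over non-overlapping 2x2 windows."""
    return [[(fmap[i][j] + fmap[i][j + 1] + fmap[i + 1][j] + fmap[i + 1][j + 1]) / 4
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]

def rasterize(fmap):
    """Rasterization module: flatten the feature map to a one-dimensional vector."""
    return [v for row in fmap for v in row]

image = [[5 * i + j for j in range(5)] for i in range(5)]  # 5x5 sample input
kernel = [[1, 0], [0, 1]]
vector = rasterize(mean_pool2(relu(conv2d(image, kernel))))
```

The resulting vector is what a fully connected layer would consume in the final multiply-accumulate and probability comparison stage.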

Systems and methods for dynamic adaptation of network accelerators

Active · US9923826B2 · Multiple digital computer combinations · Data switching networks · WAN optimization · Resource based

Systems and methods of the present solution provide a more optimal solution by dynamically and automatically reacting to changing network workload. A system that starts slowly, either by just examining traffic passively or by doing sub-optimal acceleration, can learn over time how many peer WAN optimizers are being serviced by an appliance, how much traffic is coming from each peer WAN optimizer, and the type of traffic being seen. Knowledge from this learning can serve to provide a better or improved baseline for the configuration of an appliance. In some embodiments, based on resources (e.g., CPU, Memory, Disk), the system from this knowledge may determine how many WAN optimization instances should be used and of what size, and how the load should be distributed across the instances of the WAN optimizer.

Owner:CITRIX SYST INC

PCIe-based host network accelerators (HNAS) for data center overlay network

Active · CN104954253A · Experience high speed and reliability · High-speed and reliable forwarding · Networks interconnection · Electric digital data processing · Off the shelf · Data center

A high-performance, scalable and drop-free data center switch fabric and infrastructure is described. The data center switch fabric may leverage low cost, off-the-shelf packet-based switching components (e.g., IP over Ethernet (IPoE)) and overlay forwarding technologies rather than proprietary switch fabric. In one example, host network accelerators (HNAs) are positioned between servers (e.g., virtual machines or dedicated servers) of the data center and an IPoE core network that provides point-to-point connectivity between the servers. The HNAs are hardware devices that embed virtual routers on one or more integrated circuits, where the virtual routers are configured to extend the one or more virtual networks to the virtual machines and to seamlessly transport packets over the switch fabric using an overlay network. In other words, the HNAs provide hardware-based, seamless access interfaces to overlay technologies used for communicating packet flows through the core switching network of the data center.

Owner:JUMIPER NETWORKS INC

Network accelerator for controlled long delay links

Active · US20060109788A1 · Error prevention · Frequency-division multiplex details · Communications system · Telecommunications link

A communication system for providing network access over a shared communication link is disclosed. The communication system includes a user access point, a network access point and a communications link. The user access point is coupled to one or more user terminals that access a remote network. The network access point is coupled to the remote network. The communications link couples the user access point and the network access point. The communications link is at least partially controlled by the network access point, which monitors information passed between the remote network and the user access point to create an estimate of future usage of the communications link by the user access point based on the information. The network access point allocates communications link resources for the user access point based on the estimate.

Owner:VIASAT INC
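
The estimate-then-allocate loop in this abstract can be sketched as follows. The abstract does not say how the estimate is formed, so the exponentially weighted moving average used here is purely an assumption, as are the class name, the `alpha` parameter, and the proportional scaling rule.

```python
class LinkAllocator:
    """Hypothetical network access point that sizes grants from observed demand."""

    def __init__(self, capacity_kbps, alpha=0.5):
        self.capacity_kbps = capacity_kbps
        self.alpha = alpha          # smoothing weight for new observations
        self.estimates = {}         # user access point -> estimated future kbps

    def observe(self, access_point, observed_kbps):
        """Fold one monitored demand sample into the running estimate."""
        prev = self.estimates.get(access_point, observed_kbps)
        self.estimates[access_point] = (
            self.alpha * observed_kbps + (1 - self.alpha) * prev
        )

    def allocate(self):
        """Grant each estimate in full, or scale all down to fit the link."""
        total = sum(self.estimates.values())
        if total <= self.capacity_kbps:
            return dict(self.estimates)
        scale = self.capacity_kbps / total
        return {ap: est * scale for ap, est in self.estimates.items()}


allocator = LinkAllocator(capacity_kbps=1000)
allocator.observe("ap1", 800)
allocator.observe("ap1", 400)   # estimate for ap1 moves to 600
allocator.observe("ap2", 900)
grants = allocator.allocate()
```

Because the combined estimate (1500 kbps) exceeds the link capacity, both grants are scaled by the same factor so that they sum to the capacity.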

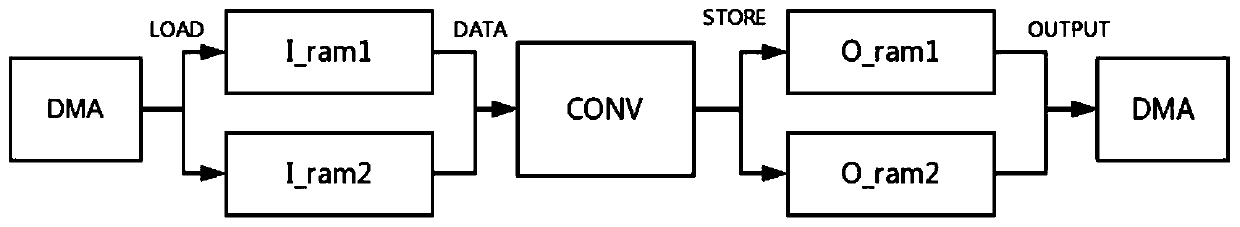

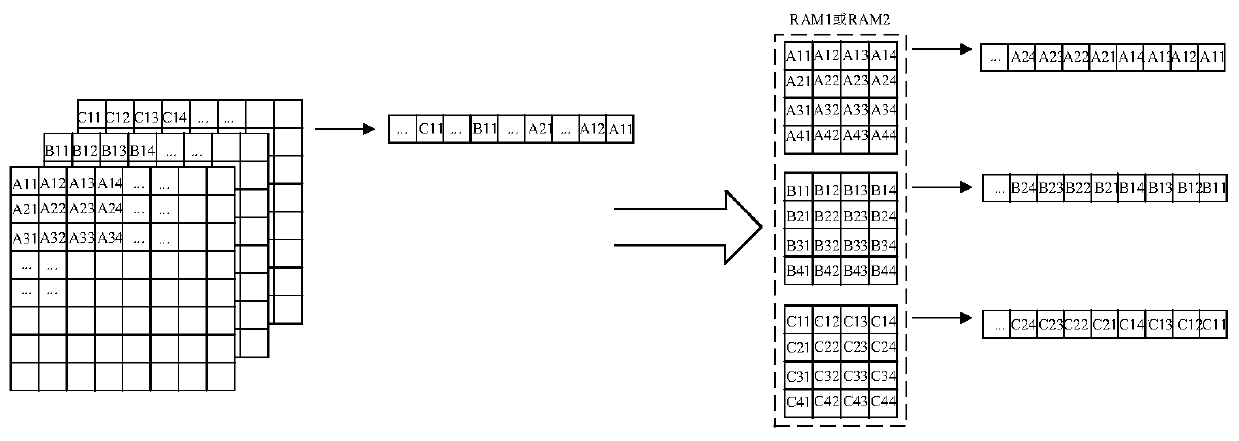

Convolutional network accelerator, configuration method and computer readable storage medium

ActiveCN111416743AImprove parallelismImprove throughputData switching networksNetwork deploymentNetwork model

The invention belongs to the technical field of hardware acceleration of convolutional networks and discloses a convolutional network accelerator, a configuration method and a computer readable storage medium. The method comprises the steps of: determining, by means of a flag, which layer of the overall network model the currently executing forward network layer is; obtaining the configuration parameters of the currently executing forward network layer, and loading a feature map and weight parameters from DDR according to those configuration parameters; meanwhile, the acceleration kernel of the convolution layer configures its degree of parallelism according to the obtained configuration parameters. Because the network layer structure is changed through configuration parameters, only one layer structure needs to be used when the network is deployed on an FPGA, which achieves flexible configurability while saving and fully utilizing the FPGA's on-chip resources. A plurality of RAMs are spliced into a single overall cache region to improve data input and output bandwidth, and ping-pong operation is adopted so that feature map and weight parameter loading and accelerator computation work as a pipeline.

Owner:HUAZHONG UNIV OF SCI & TECH
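
The ping-pong operation mentioned in this abstract can be pictured with a short software model: two buffers alternate roles, so the next layer's data is loaded into one while the current layer computes from the other. This is only a sketch; the layer dictionaries, the `load` and `compute` callbacks, and the sample values are hypothetical, and plain Python runs the two steps sequentially rather than in parallel as hardware would.

```python
def run_layers(layer_configs, load, compute):
    """Run layers in order using two alternating ("ping-pong") buffers."""
    if not layer_configs:
        return []
    buffers = [load(layer_configs[0]), None]  # prime the first buffer
    outputs = []
    for i, cfg in enumerate(layer_configs):
        active = i % 2
        if i + 1 < len(layer_configs):
            # On hardware this prefetch would overlap the compute below.
            buffers[1 - active] = load(layer_configs[i + 1])
        outputs.append(compute(cfg, buffers[active]))
    return outputs


# Hypothetical per-layer configuration: a parallelism degree plus weights.
layers = [
    {"name": "conv1", "parallelism": 2, "weights": [1, 2, 3]},
    {"name": "conv2", "parallelism": 4, "weights": [4, 5]},
    {"name": "fc", "parallelism": 1, "weights": [6]},
]
load_log = []


def load(cfg):
    load_log.append(cfg["name"])      # record the order of loads
    return list(cfg["weights"])


def compute(cfg, weights):
    # Stand-in for the configurable kernel: scale by the configured parallelism.
    return cfg["parallelism"] * sum(weights)


results = run_layers(layers, load, compute)
```

A single `run_layers` routine serves every layer; only the configuration passed in changes, which mirrors the abstract's point about reusing one layer structure.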

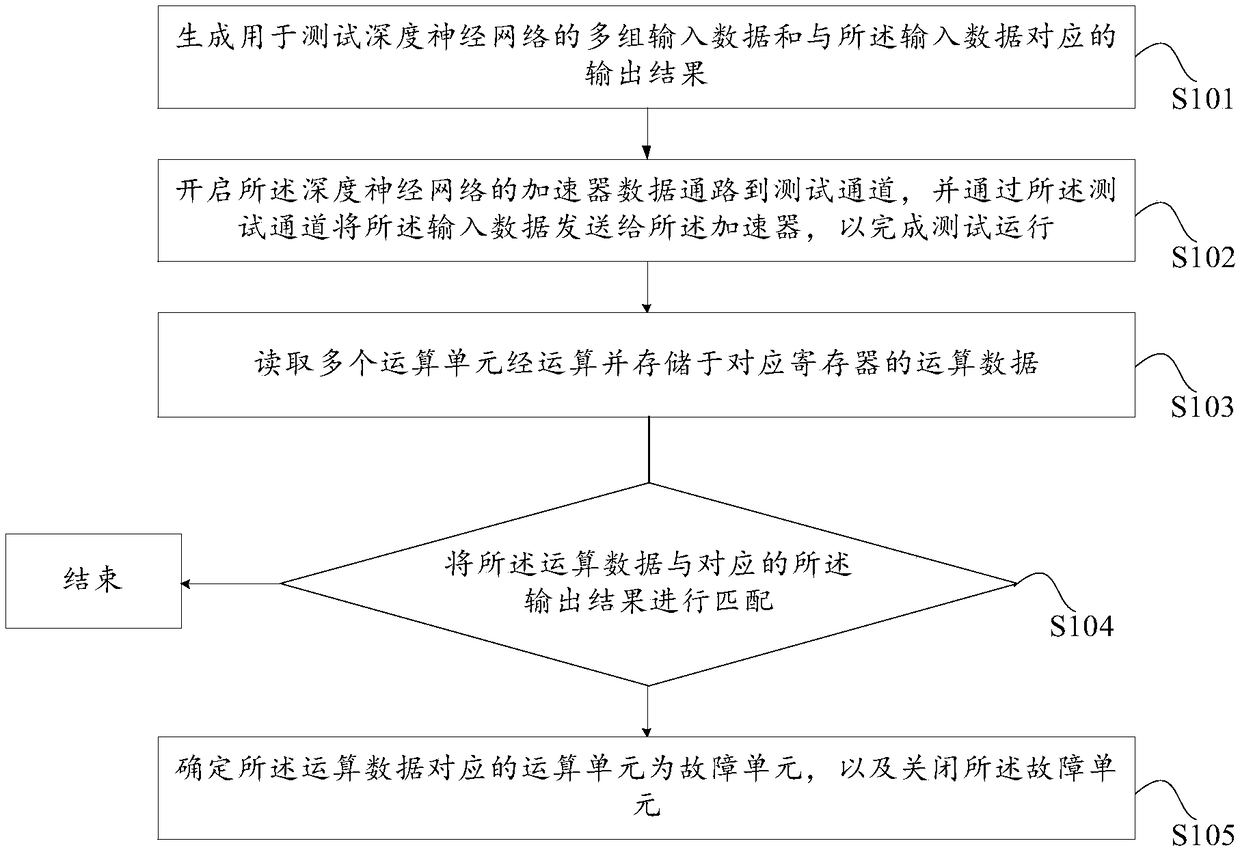

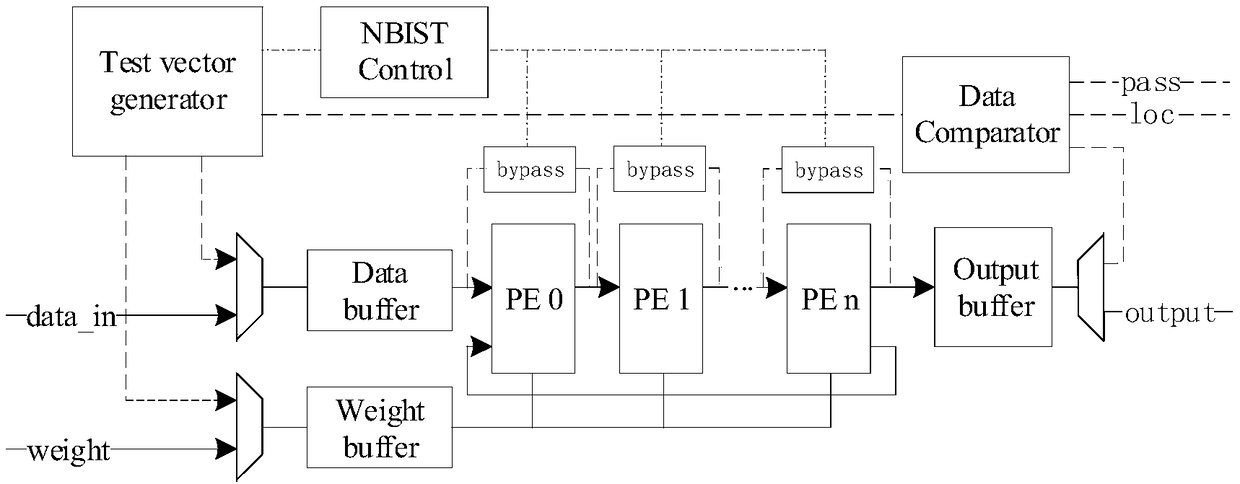

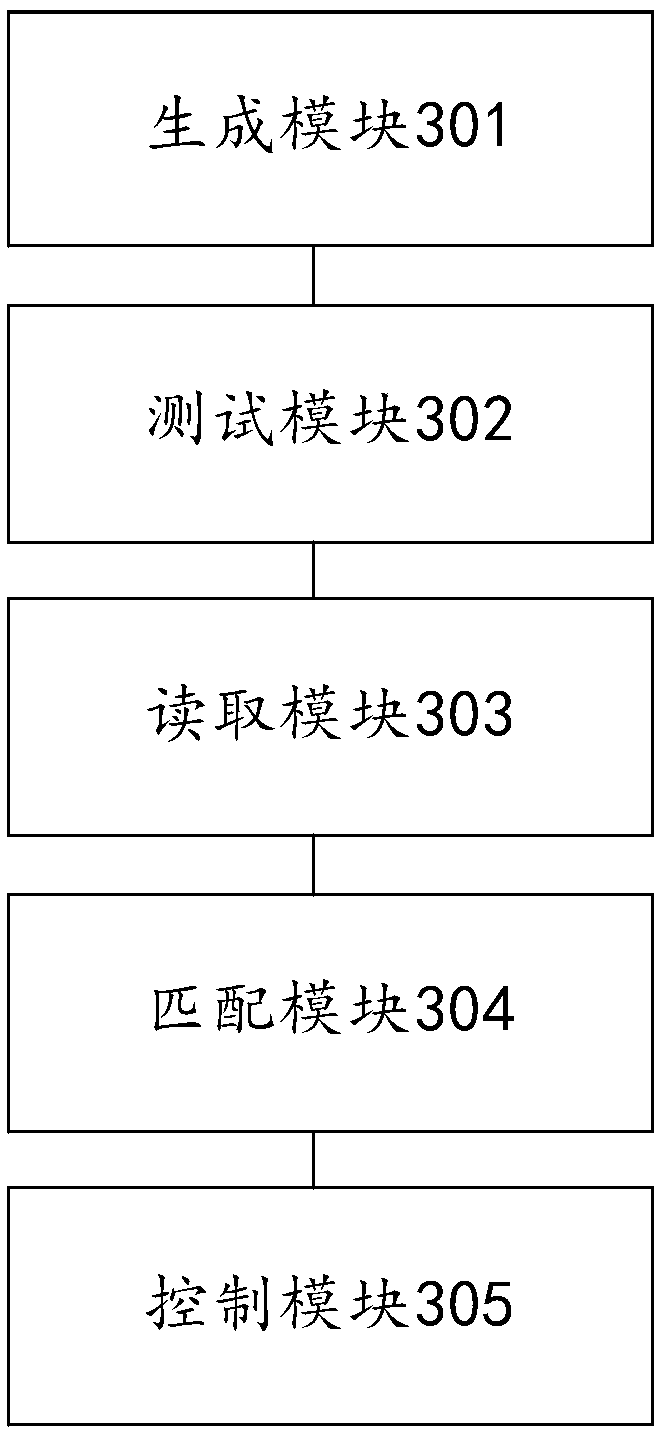

Deep neural network accelerator fault handling method and device

InactiveCN109358993AWith self-test capabilityReduce overheadDetecting faulty hardware using neural networksFaulty hardware testing methodsTest channelFault handling

The embodiment of the invention relates to a deep neural network accelerator fault handling method and device. The method comprises the following steps: generating a plurality of groups of input data for testing the deep neural network and an output result corresponding to each group of input data; opening an accelerator data path of the deep neural network to a test channel, and sending the input data to the accelerator through the test channel to complete the test operation; reading the operation data that the plurality of operation units computed and stored in their corresponding registers; and matching the operation data with the corresponding output result. If there is no match, the operation unit corresponding to the operation data is determined to be a fault unit and is turned off. The DNN accelerator thus has a self-test capability, manufacturing faults are quickly tested and eliminated in the production stage, the test cost is reduced, and the yield of usable chips is improved.

Owner:中科物栖(北京)科技有限责任公司
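
The self-test flow in this abstract (drive known inputs, compare each unit's result with the expected output, switch off mismatching units) can be sketched in software. The multiply-accumulate units, the stuck-at-zero fault model, and all names here are assumptions for illustration only.

```python
def mac(a, b, acc):
    """Reference (golden) multiply-accumulate operation."""
    return a * b + acc


def stuck_at_zero_mac(a, b, acc):
    """Hypothetical faulty unit whose output is stuck at zero."""
    return 0


def find_faulty_units(units, test_vectors):
    """Return ids of units whose result differs from any expected output.

    units maps a unit id to a callable emulating that operation unit.
    test_vectors is a list of (inputs, expected_output) pairs.
    """
    faulty = set()
    for unit_id, unit in units.items():
        for inputs, expected in test_vectors:
            if unit(*inputs) != expected:
                faulty.add(unit_id)
                break
    return faulty


# Expected outputs are generated from the golden model ahead of the test.
stimuli = [(2, 3, 1), (4, 5, 0), (0, 9, 7)]
test_vectors = [(inputs, mac(*inputs)) for inputs in stimuli]

units = {0: mac, 1: stuck_at_zero_mac, 2: mac}
faulty = find_faulty_units(units, test_vectors)
enabled = [uid for uid in units if uid not in faulty]  # faulty units turned off
```

Several stimuli are used because a single vector can accidentally match a faulty unit; for example, a stuck-at-zero unit passes any test whose expected result is zero.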

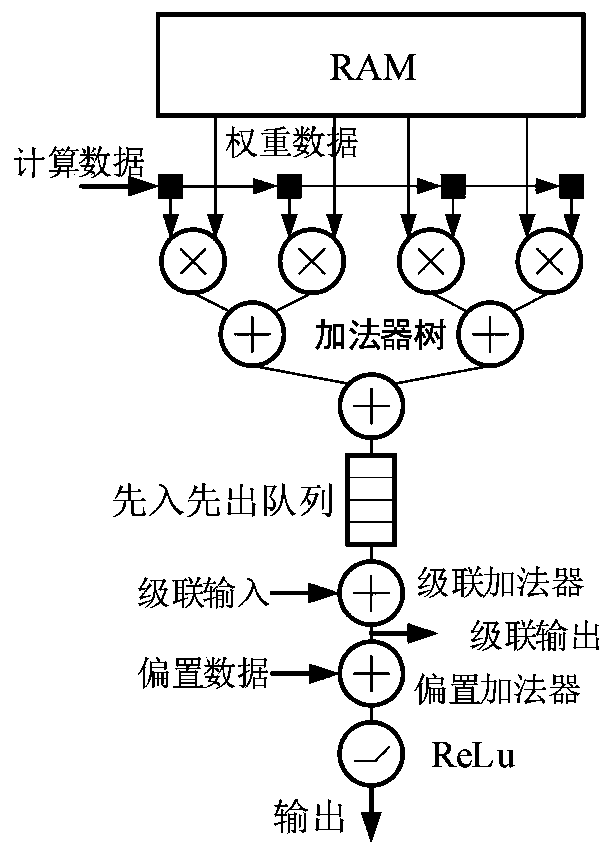

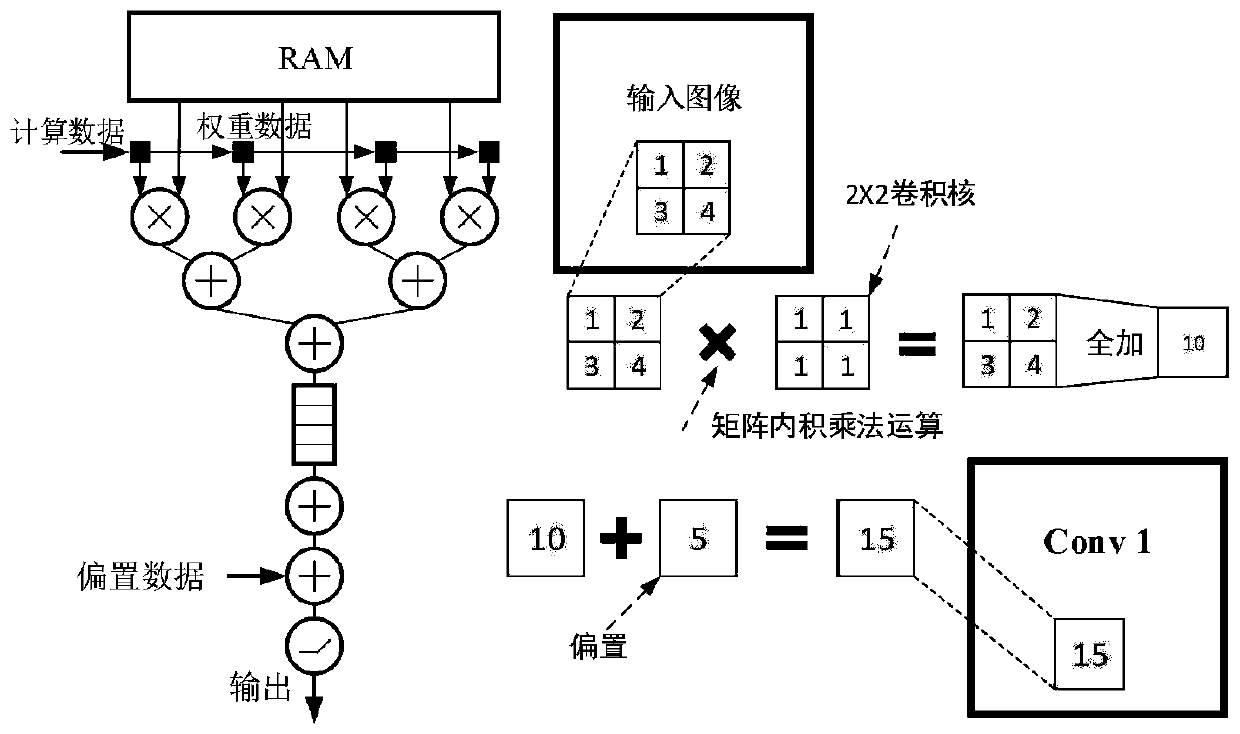

Universal computing circuit of neural network accelerator

ActiveCN110807522AReduce inference timeSimple designNeural architecturesPhysical realisationActivation functionBinary multiplier

The invention discloses a universal computing circuit of a neural network accelerator. The circuit is composed of m universal computing modules (PEs); each i-th universal computing module PE is composed of a RAM, 2n multipliers, an adder tree, a cascade adder, a bias adder, a first-in first-out queue and a ReLU activation function module. The single PE convolution configuration, the cascaded PE convolution configuration, the single PE full-connection configuration and the cascaded PE full-connection configuration are used to construct the calculation circuits of different neural networks. The universal computing circuit can be configured according to the variables of the neural network accelerator, so that a neural network can be built or modified more simply, conveniently and quickly, the inference time of the neural network is shortened, and the hardware development time of related deep research is reduced.

Owner:HEFEI UNIV OF TECH
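
The arithmetic path of one PE as listed in this abstract (multipliers, adder tree, cascade adder, bias adder, ReLU) can be modelled in a few lines. This is a behavioural sketch under assumptions: the RAM and FIFO are omitted, the function accepts any number of input/weight pairs instead of a fixed multiplier count, and the function and parameter names are hypothetical.

```python
def adder_tree(values):
    """Sum values by pairwise reduction, as a balanced adder tree would."""
    vals = list(values)
    if not vals:
        return 0
    while len(vals) > 1:
        if len(vals) % 2:
            vals.append(0)  # pad odd levels with a zero operand
        vals = [vals[i] + vals[i + 1] for i in range(0, len(vals), 2)]
    return vals[0]


def pe(inputs, weights, bias=0, cascade_in=0, apply_relu=True):
    """Behavioural model of one PE's datapath."""
    products = [x * w for x, w in zip(inputs, weights)]   # multipliers
    total = adder_tree(products) + cascade_in             # cascade adder
    total += bias                                         # bias adder
    return max(0, total) if apply_relu else total         # ReLU module


# Single-PE configuration: one PE produces the activated output.
single = pe([1, 2, 3, 4], [1, -1, 2, 1], bias=-10)

# Cascaded configuration: the first PE forwards a raw partial sum,
# the second adds its own products, the bias, and applies ReLU.
partial = pe([1, 2], [3, 4], apply_relu=False)
cascaded = pe([1, 1], [1, 1], bias=1, cascade_in=partial)
```

In the cascaded case the bias and activation are applied only in the last PE of the chain, so the result equals that of a single wider dot product.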

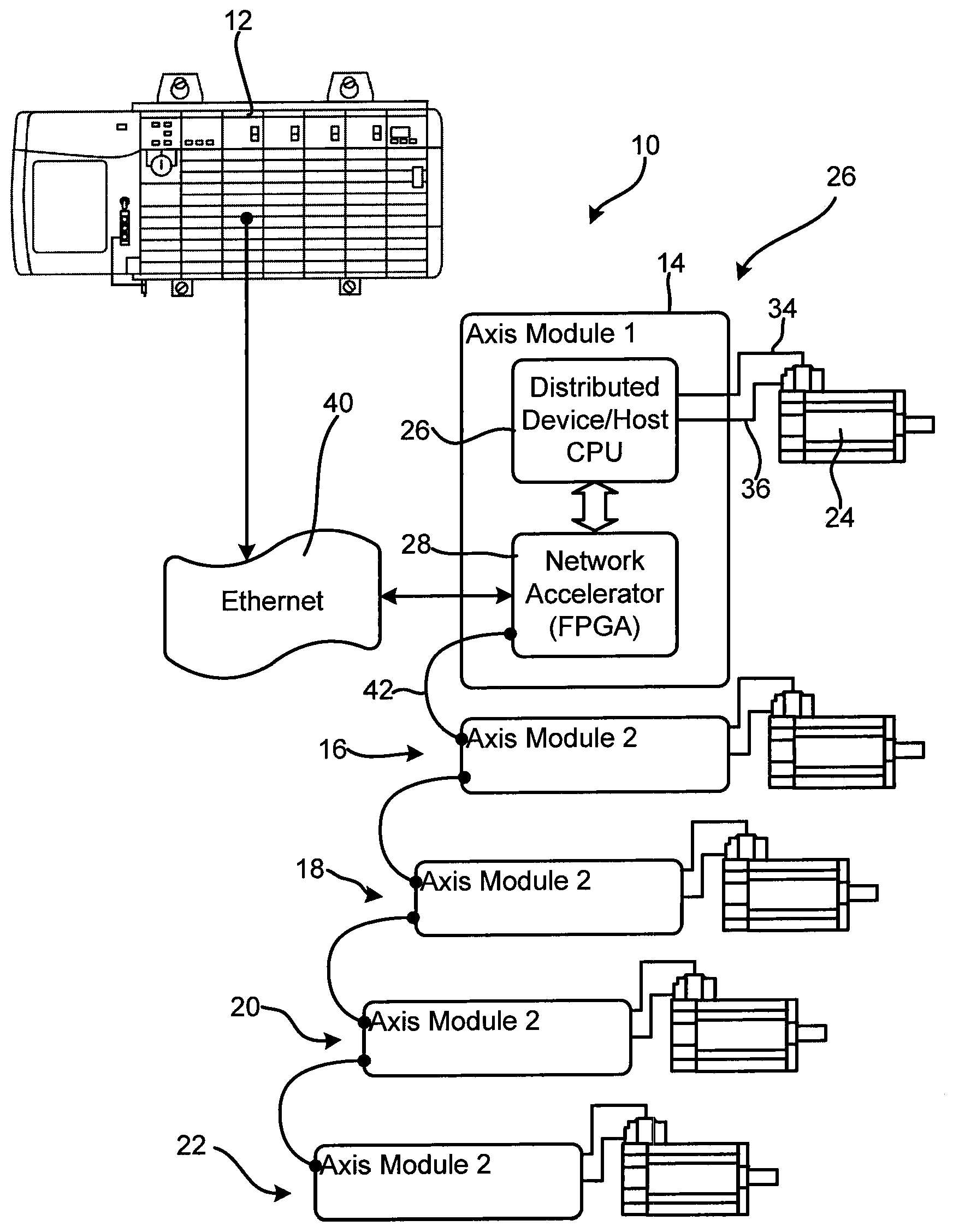

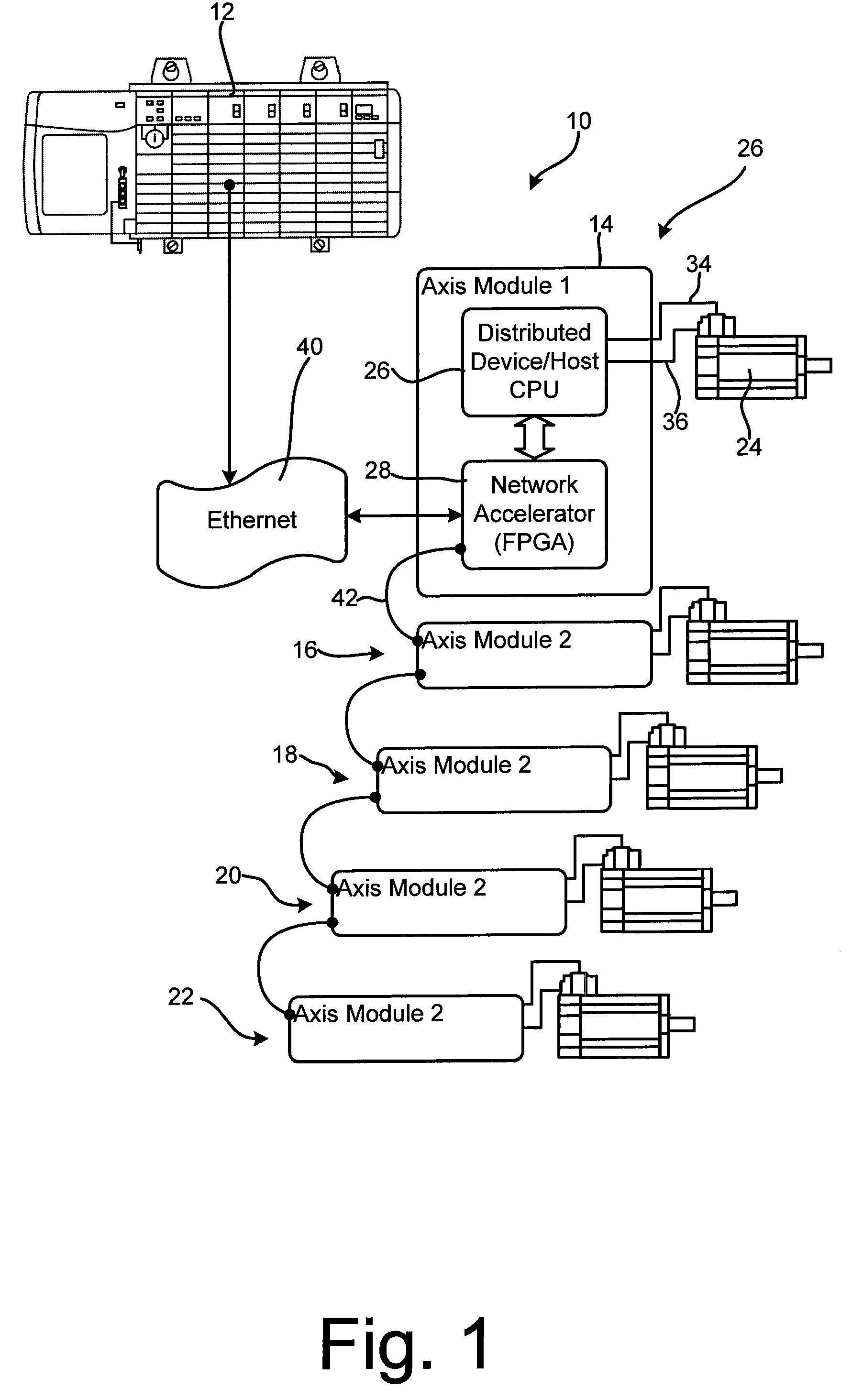

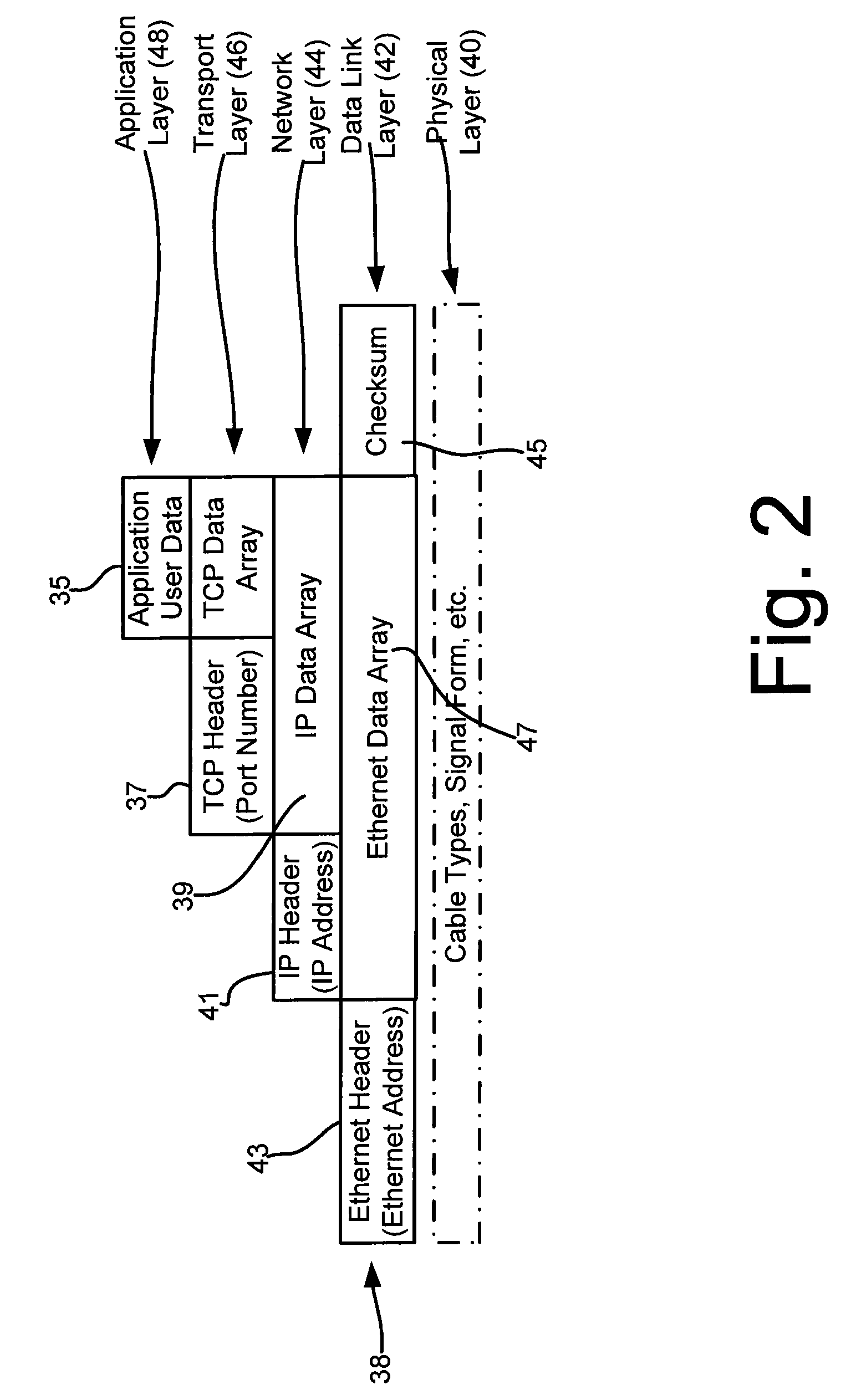

Method and apparatus for communications accelerator on CIP Motion networks

ActiveUS20100082837A1Speed up CIP communicationLighten the computational burdenMultiple digital computer combinationsTransmissionNetwork packetEthernet

An apparatus for accelerating communications over the Ethernet between a first processor and a second processor where the communications include CIP messages, the apparatus comprising a network accelerator that includes memory locations, the accelerator associated with the first processor and programmed to, when the second processor transmits a data packet to the first processor over the Ethernet, intercept the data packet at a first processor end of the Ethernet, extract a CIP message from the data packet, parse the CIP message to generate received data, store the received data in the memory locations and provide a signal to the first processor indicating that data for the first processor is stored in the memory locations.

Owner:ROCKWELL AUTOMATION TECH

Accelerator for deep neural networks

A system for bit-serial computation in a neural network is described. The system may be embodied on an integrated circuit and include one or more bit-serial tiles for performing bit-serial computations in which each bit-serial tile receives input neurons and synapses, and communicates output neurons. Also included are an activation memory for storing the neurons, a dispatcher, and a reducer. The dispatcher reads neurons and synapses from memory and communicates either the neurons or the synapses bit-serially to the one or more bit-serial tiles. The other of the neurons or the synapses are communicated bit-parallelly to the one or more bit-serial tiles, or according to a further embodiment, may also be communicated bit-serially to the one or more bit-serial tiles. The reducer receives the output neurons from the one or more tiles, and communicates the output neurons to the activation memory.

Owner:SAMSUNG ELECTRONICS CO LTD
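
The idea of feeding one operand bit-serially and the other bit-parallelly can be shown with a dot product. The sketch assumes unsigned neuron values that fit in `precision_bits` bits and integer synapses; the function name and those conventions are illustrative choices, not details taken from the patent.

```python
def bit_serial_dot(neurons, synapses, precision_bits):
    """Dot product that consumes neurons one bit per step.

    Neurons are assumed to be unsigned integers below 2**precision_bits.
    Synapses are used whole (bit-parallel) in every step.
    """
    acc = 0
    for bit in range(precision_bits):
        # Add the synapses whose neuron has this bit set (an AND gate per lane).
        partial = sum(w for a, w in zip(neurons, synapses) if (a >> bit) & 1)
        acc += partial << bit  # weight the partial sum by the bit position
    return acc


neurons = [3, 5, 2]
synapses = [2, -1, 4]
result = bit_serial_dot(neurons, synapses, precision_bits=3)
reference = sum(a * w for a, w in zip(neurons, synapses))
```

The loop runs once per neuron bit, so the work scales with the chosen precision: fewer bits of precision mean fewer steps.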


© 2025 PatSnap. All rights reserved.