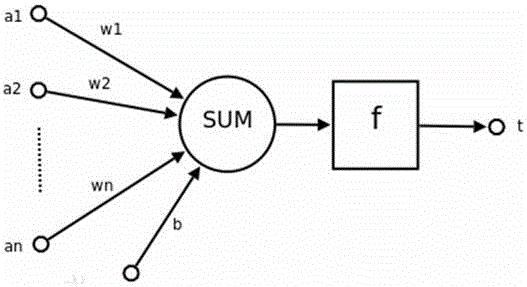

Implementation methods of neural network accelerator and neural network model

A neural network model and neural network technology, applied to biological neural network models, physical realization, and related fields. It addresses problems such as easy data loss, poor flexibility, and limited data storage bandwidth, and achieves low power consumption, improved performance, and reduced memory consumption.

Embodiment Construction

[0029] The present invention is further described below with reference to the accompanying drawings and specific embodiments, which are not intended to limit the present invention.

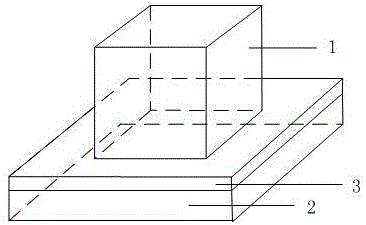

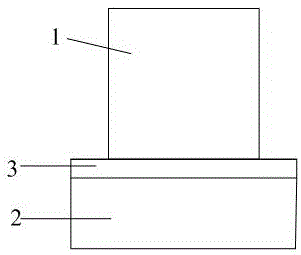

[0030] The invention proposes a method for realizing a neural network accelerator based on a non-volatile memory. The non-volatile memory adopts a non-planar design and stacks multiple layers of data storage units in the vertical direction using the back-end-of-line (BEOL) manufacturing process. This yields higher storage density and fits more storage capacity into a smaller space, which in turn brings significant cost savings and lower energy consumption; examples include 3D NAND memory and 3D phase change memory. Below these 3D data storage arrays 1 are the peripheral logic circuits of the memory, prepared by the front-end-of-line (FEOL) manufacturing process. As the capacity of the memory chip continues to increase, the capacity of the data storage array 1 becomes larger and larger, but the area of the corresponding peripheral log...
