Method for reducing server cache pressure

A technique for reducing server cache pressure, classified under instruments, special data processing applications, and electrical digital data processing, among others. It addresses time-consuming processing, increased query time, and waiting. Its effects are reduced cache pressure, improved robustness, and improved processing capacity.


Examples

Embodiment 1

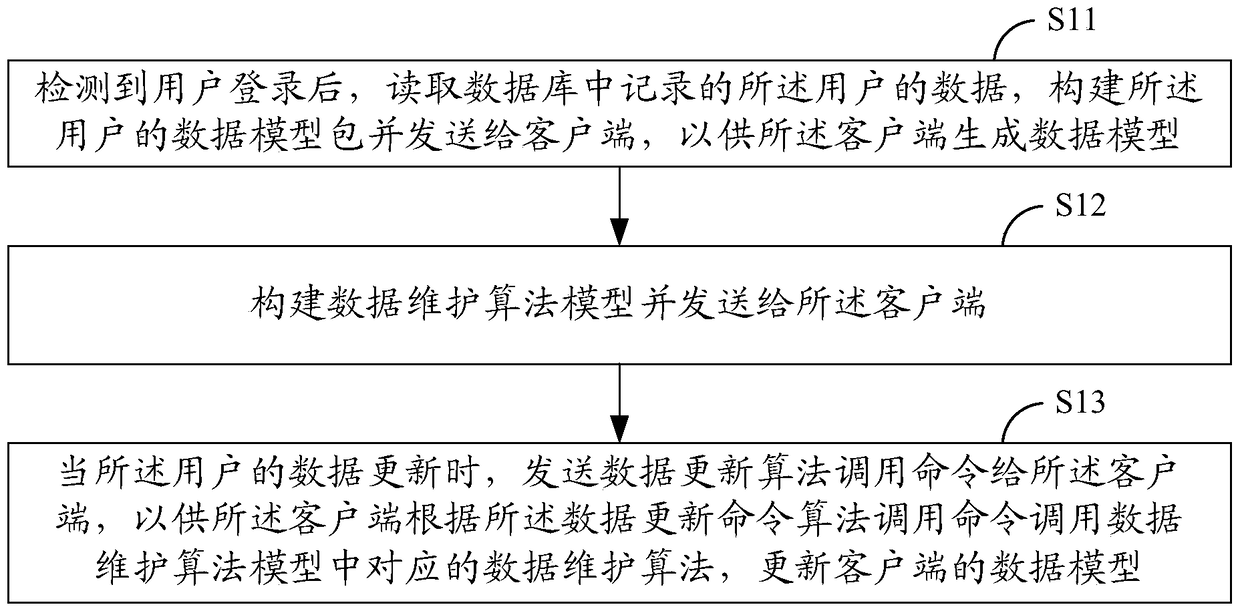

[0019] As shown in Figure 1, this embodiment is described from the server's side and includes the following steps:

[0020] S11. After detecting that the user has logged in, read the user data recorded in the database, build a data model package for the user, and send it to the client so that the client can generate the user's data model;

[0021] After the server detects that the user has logged in, it queries the database by the user's ID and reads all data recorded for that user, including each item's data name, data type, and data value. From this information, the server builds the data model package that it later sends to the client.
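Step S11 can be sketched as follows. This is a minimal illustration, not the patented implementation: it assumes a hypothetical `user_data` table with `user_id`, `data_name`, `data_type`, and `data_value` columns and a JSON package layout, neither of which the source specifies.

```python
import json
import sqlite3


def build_data_model_package(conn: sqlite3.Connection, user_id: int) -> str:
    """Read every item recorded for user_id and pack it for the client.

    The table name, column names, and JSON layout are hypothetical.
    """
    rows = conn.execute(
        "SELECT data_name, data_type, data_value FROM user_data "
        "WHERE user_id = ? ORDER BY data_name",
        (user_id,),
    ).fetchall()
    package = {
        "user_id": user_id,
        # Each item keeps the data name, data type, and data value from the DB.
        "items": [
            {"name": name, "type": dtype, "value": value}
            for name, dtype, value in rows
        ],
    }
    return json.dumps(package)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE user_data (user_id INTEGER, data_name TEXT, "
        "data_type TEXT, data_value TEXT)"
    )
    conn.executemany(
        "INSERT INTO user_data VALUES (?, ?, ?, ?)",
        [(7, "gold", "int", "100"), (7, "level", "int", "3"), (8, "gold", "int", "5")],
    )
    print(build_data_model_package(conn, 7))
```

Values are stored as text with a type tag here so the client can convert them when it builds its in-memory model; a real system might use typed columns instead.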

[0022] S12. Construct a data maintenance algorithm model and send it to the client;

[0023] In this step, the server also builds a data maintenance algorithm model and sends it to the client. In this embodiment, a data maintenance algorithm is the algorithm used to execute certain update rules f...
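The description of step S12 is truncated, so the representation of the algorithm model is not known. One possible reading, sketched below under that assumption, is that the server sends declarative rules (a named operation plus the data item it modifies) rather than executable code. The rule format and the `SUPPORTED_OPS` whitelist are hypothetical.

```python
import json

# Hypothetical whitelist of operations the client knows how to execute.
SUPPORTED_OPS = {"set", "add", "append"}


def build_algorithm_model(rules: dict) -> str:
    """Validate named update rules and serialize them for the client.

    Each rule names an operation and the data item it modifies, e.g.
    {"add_gold": {"op": "add", "field": "gold"}}. This format is an
    assumption; the source does not define one.
    """
    for name, rule in rules.items():
        if rule.get("op") not in SUPPORTED_OPS:
            raise ValueError(f"algorithm {name!r} uses unsupported op {rule.get('op')!r}")
        if not isinstance(rule.get("field"), str):
            raise ValueError(f"algorithm {name!r} must name a target field")
    return json.dumps({"version": 1, "algorithms": rules}, sort_keys=True)
```

Shipping rules as data rather than code means the client only ever runs operations it already implements, which keeps the client simple and avoids executing arbitrary code received over the network.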

Embodiment 2

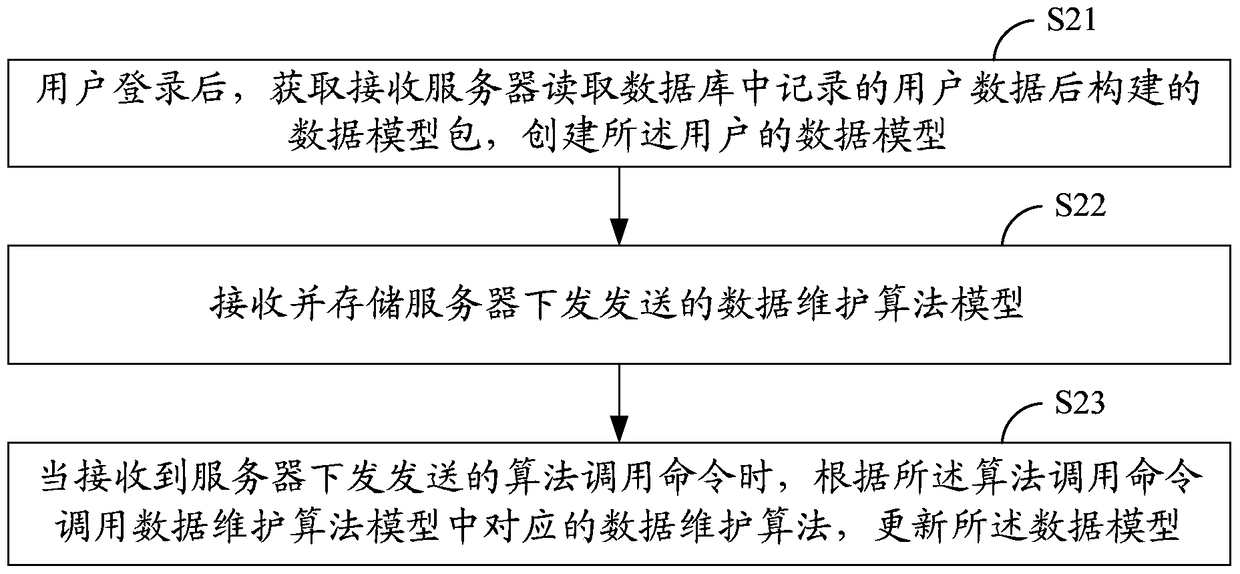

[0039] As shown in Figure 2, this embodiment is described from the client's side and includes the following steps:

[0040] S21. After the user logs in, receive the data model package that the server built from the user data recorded in the database, and create the user's data model;

[0041] S22. Receive and store the data maintenance algorithm model sent by the server;

[0042] S23. When receiving the algorithm call command sent by the server, call the corresponding data maintenance algorithm in the data maintenance algorithm model according to the algorithm call command, and update the data model;

[0043] After the client detects that the user has logged in, it obtains the data model package that the server built from the user data read from the database, and creates the data model in allocated memory according to that package; the client also receives the data maintenance algorithm model sent by the server and stores...
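The client-side steps S21 to S23 can be sketched as a small class. This is an illustration only: the package, rule, and command layouts are hypothetical JSON formats, and the `set`/`add`/`append` operations are assumed rather than taken from the source.

```python
import json


class ClientDataModel:
    """Client-side copy of one user's data plus the stored maintenance rules.

    The package, rule, and command layouts are hypothetical JSON formats;
    the source does not define them.
    """

    # Maps a declared data type to the converter used when building the model.
    _CASTS = {"int": int, "float": float, "str": str}

    def __init__(self, package_json: str) -> None:
        package = json.loads(package_json)
        self.user_id = package["user_id"]
        # S21: create the in-memory data model, converting each value by type.
        self.data = {
            item["name"]: self._CASTS[item["type"]](item["value"])
            for item in package["items"]
        }
        self.algorithms = {}

    def store_algorithms(self, model_json: str) -> None:
        # S22: keep the data maintenance algorithm model for later calls.
        self.algorithms = json.loads(model_json)["algorithms"]

    def handle_command(self, command_json: str) -> None:
        # S23: look up the named algorithm and apply it to the local model.
        command = json.loads(command_json)
        rule = self.algorithms[command["algorithm"]]
        field, arg = rule["field"], command.get("arg")
        if rule["op"] == "set":
            self.data[field] = arg
        elif rule["op"] == "add":
            self.data[field] = self.data.get(field, 0) + arg
        elif rule["op"] == "append":
            self.data.setdefault(field, []).append(arg)
        else:
            raise ValueError(f"unknown op {rule['op']!r}")


if __name__ == "__main__":
    client = ClientDataModel(
        '{"user_id": 7, "items": [{"name": "gold", "type": "int", "value": "100"}]}'
    )
    client.store_algorithms(
        '{"version": 1, "algorithms": {"add_gold": {"op": "add", "field": "gold"}}}'
    )
    client.handle_command('{"algorithm": "add_gold", "arg": 25}')
    print(client.data["gold"])  # 125
```

Because the command carries only an algorithm name and an argument, the update the client receives is far smaller than a fresh copy of the user's data.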

Embodiment 3

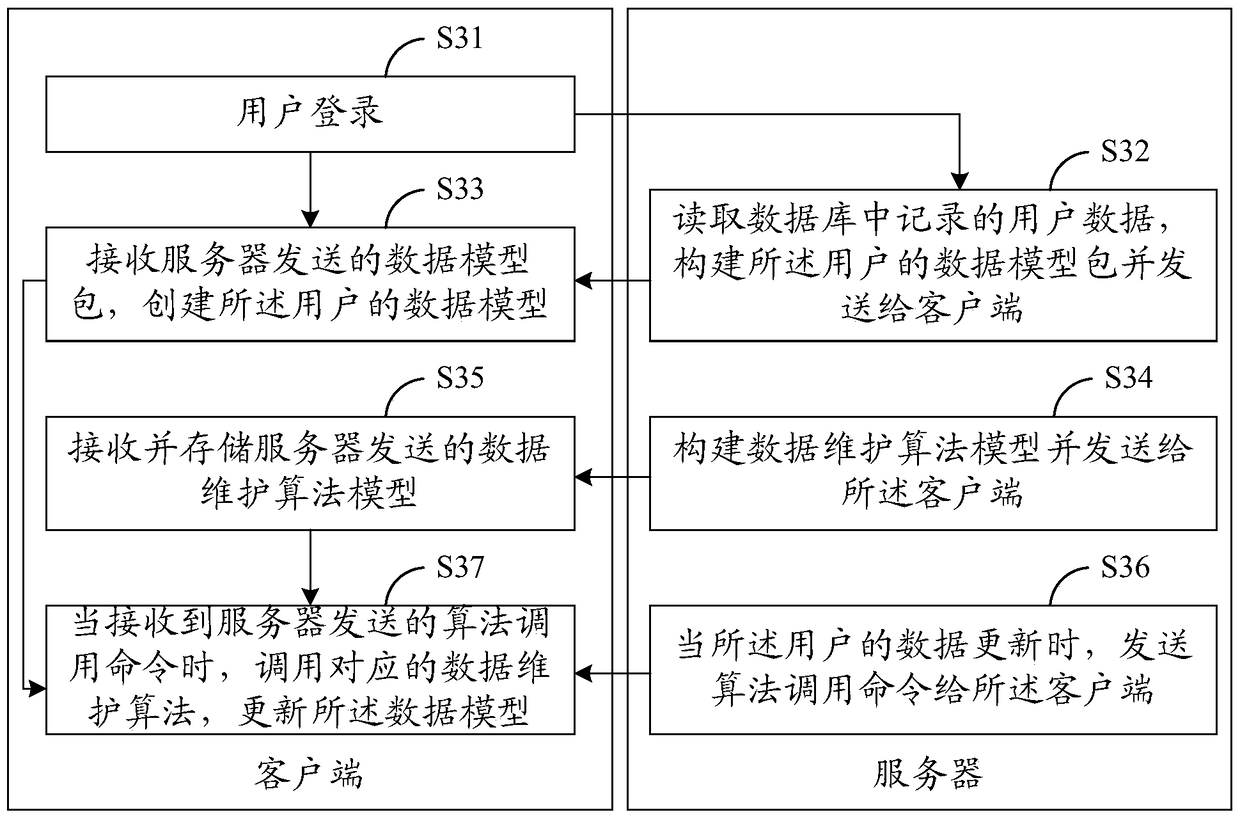

[0064] As shown in Figure 3, this embodiment takes the interaction between the server and the client as an example and includes the following steps:

[0065] S31. The user logs in;

[0066] S32. The server reads the user data recorded in the database, constructs a data model package of the user, and sends it to the client;

[0067] S33. Construct a data maintenance algorithm model and send it to the client;

[0068] S34. Receive the data model package sent by the server, and create the data model of the user;

[0069] S35. Receive and store the data maintenance algorithm model sent by the server;

[0070] S36. When the user's data is updated, send an algorithm call command to the client;

[0071] S37. When receiving the algorithm call command sent by the server, call the corresponding data maintenance algorithm in the data maintenance algorithm model according to the algorithm call command, and update the data model.
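The interaction in S31 to S37 can be simulated in a single process. In this sketch a dict stands in for the database and a `deque` for the network channel; the message kinds, the `add`/`set` rule format, and all function names are hypothetical. As we read the embodiment, the point is that after login an update costs the server one short call command, and the client keeps its copy current by running the same rule locally.

```python
import json
from collections import deque

# Hypothetical in-process stand-ins: a dict for the database and a deque of
# JSON strings for the server-to-client channel.
database = {7: {"gold": 100, "level": 3}}
channel = deque()

# Hypothetical data maintenance algorithm model: name -> operation and field.
ALGORITHMS = {
    "add_gold": {"op": "add", "field": "gold"},
    "set_level": {"op": "set", "field": "level"},
}


def apply_rule(record: dict, rule: dict, arg) -> None:
    """Apply one add/set rule to a record (shared by server and client)."""
    if rule["op"] == "add":
        record[rule["field"]] += arg
    else:
        record[rule["field"]] = arg


def server_login(user_id: int) -> None:
    # S32, S33: after login (S31), send the data model package and the
    # algorithm model once.
    channel.append(json.dumps({"kind": "package", "data": database[user_id]}))
    channel.append(json.dumps({"kind": "algorithms", "algorithms": ALGORITHMS}))


def server_update(user_id: int, algorithm: str, arg) -> None:
    # S36: update the stored record, then send only a short call command.
    apply_rule(database[user_id], ALGORITHMS[algorithm], arg)
    channel.append(json.dumps({"kind": "call", "algorithm": algorithm, "arg": arg}))


class Client:
    def __init__(self) -> None:
        self.data = {}
        self.algorithms = {}

    def receive(self, message_json: str) -> None:
        message = json.loads(message_json)
        if message["kind"] == "package":  # S34: create the data model
            self.data = message["data"]
        elif message["kind"] == "algorithms":  # S35: store the algorithm model
            self.algorithms = message["algorithms"]
        elif message["kind"] == "call":  # S37: run the named algorithm locally
            apply_rule(self.data, self.algorithms[message["algorithm"]], message["arg"])


def drain(client: Client) -> None:
    """Deliver every queued message to the client in order."""
    while channel:
        client.receive(channel.popleft())
```

For example, starting from the initial state, `server_login(7)` followed by `drain(client)` gives the client a copy of `{"gold": 100, "level": 3}`; after `server_update(7, "add_gold", 25)` and another `drain(client)`, both the database record and `client.data` hold `"gold": 125`.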

[0072] The method of the ...
