42 results about How to "Reduce cache pressure" patented technology


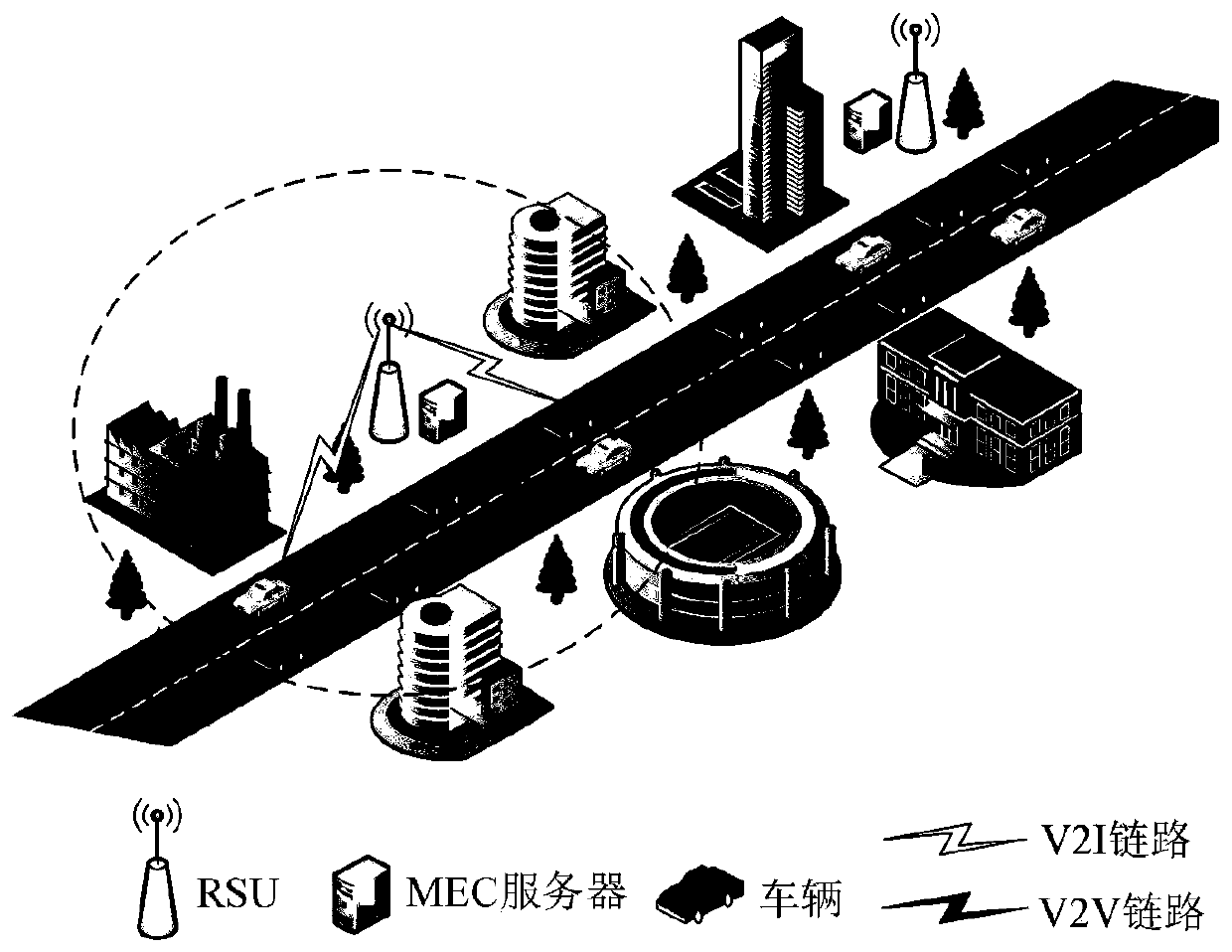

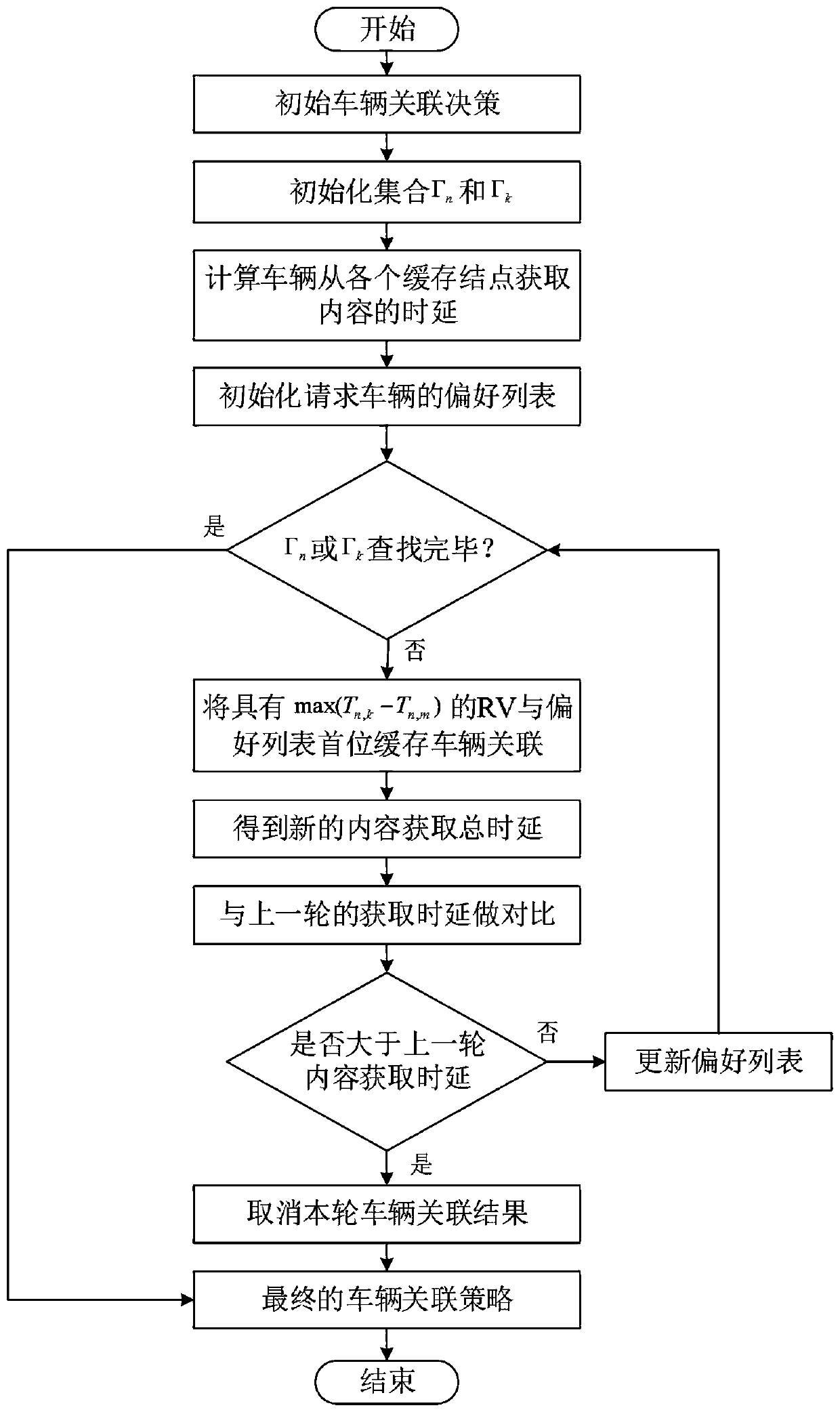

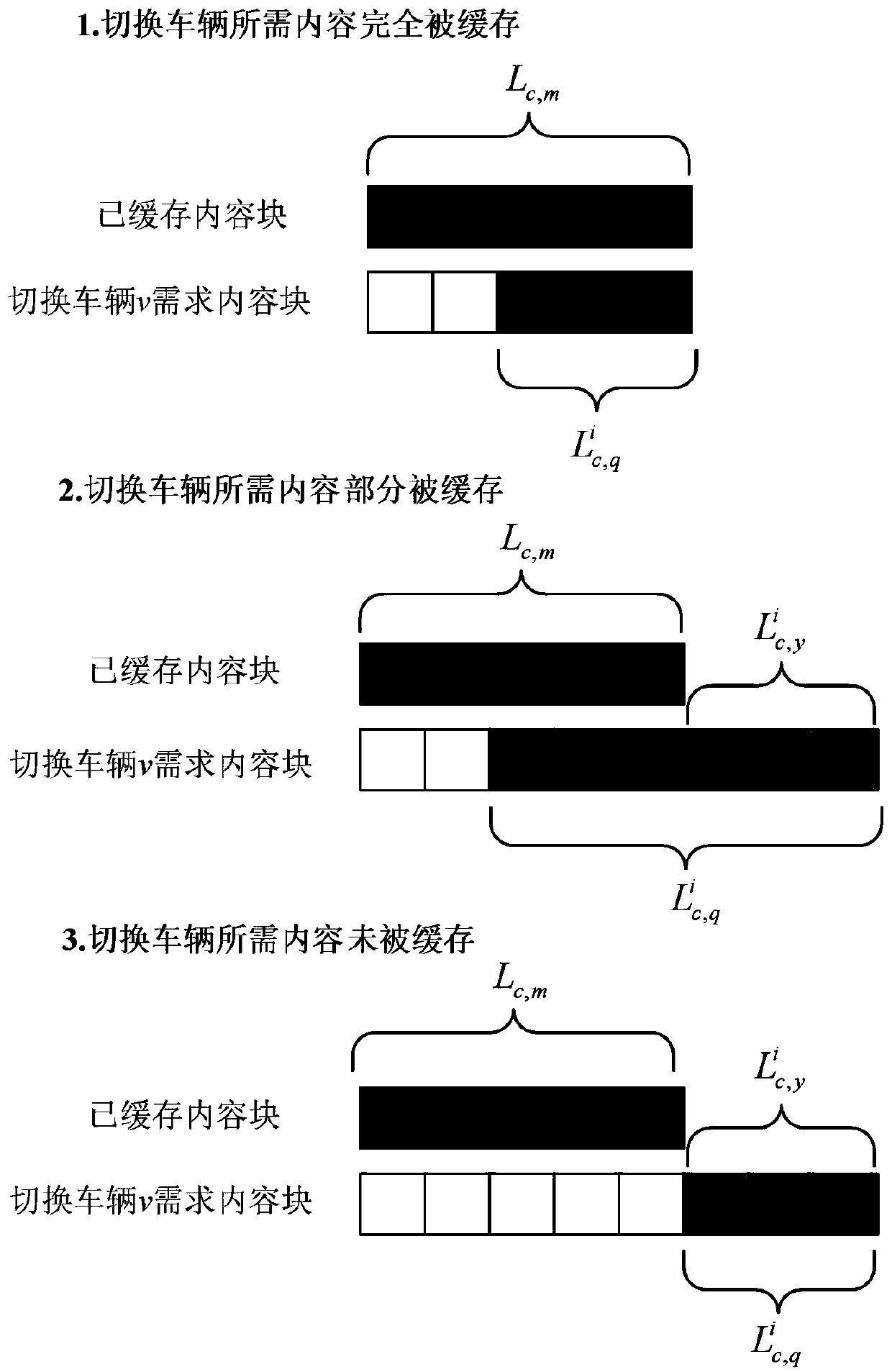

Internet of Vehicles content cache decision optimization method

Active | CN111385734A | Reduce latency | Reduce delivery pressure | Particular environment based services | Vehicle-to-vehicle communication | Mobile edge computing | The Internet

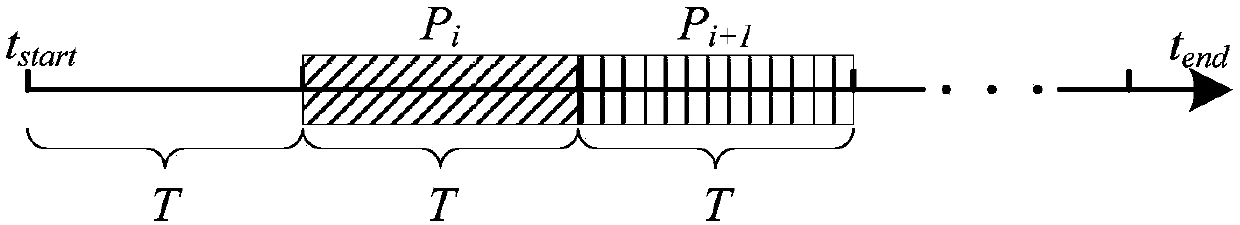

The invention relates to an Internet of Vehicles content cache decision optimization method, which belongs to the technical field of mobile communication. In the method, the Internet of Vehicles is provided with a plurality of content cache nodes, and the content requested by a vehicle can be stored in the content cache nodes. If a nearby vehicle or a roadside unit (RSU) already caches the content requested by the current vehicle, the current vehicle obtains a content service from the cache node through a V2V link or a V2I link. A mobile edge computing server is deployed on the RSU side; it provides storage and computing capabilities and is used for content storage and processing. Because the vehicle moves at high speed, the content request vehicle cannot completely obtain the needed content within the current RSU coverage range and therefore needs to continue to obtain the remaining content within the next RSU coverage range. The method aims to reduce the total time delay with which the content request vehicle acquires the required content. The method solves the vehicle association problem and takes content pre-caching into account, so that the optimal content caching decision is obtained.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

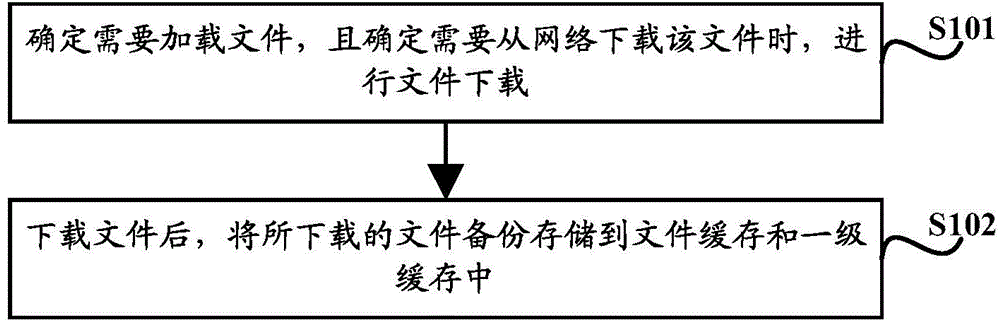

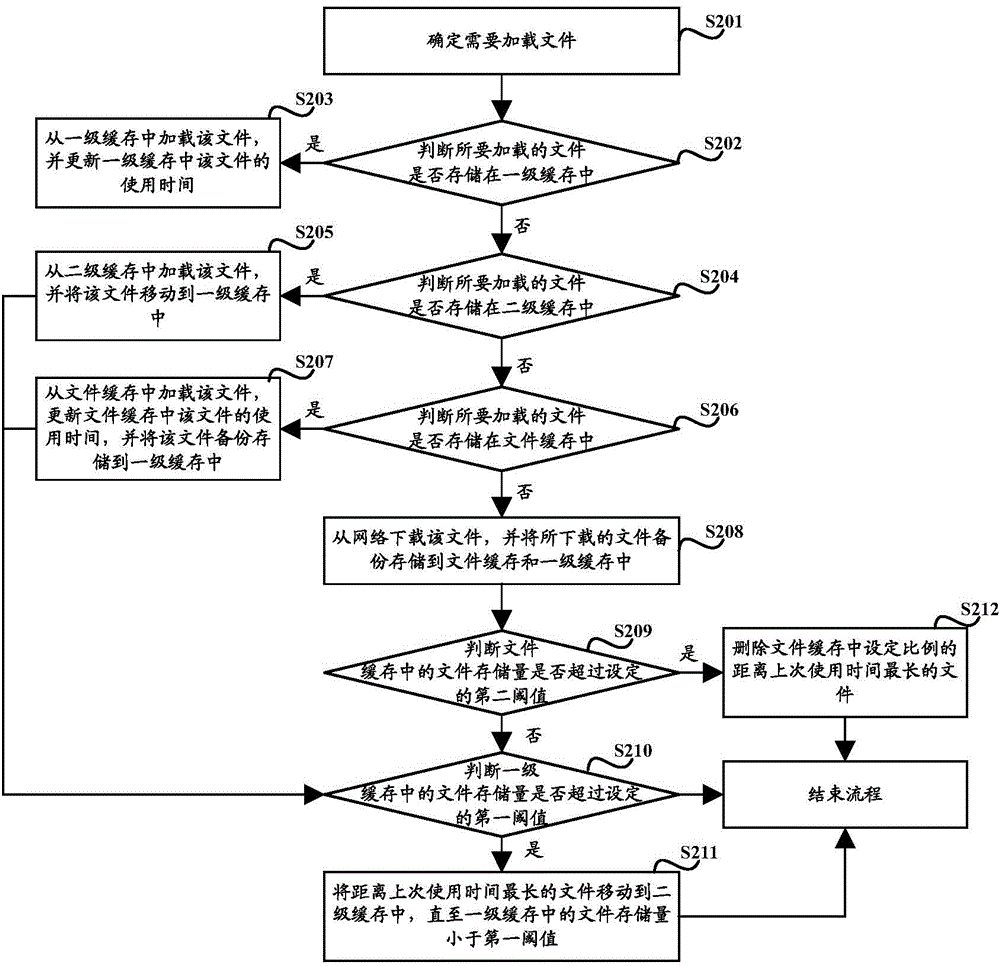

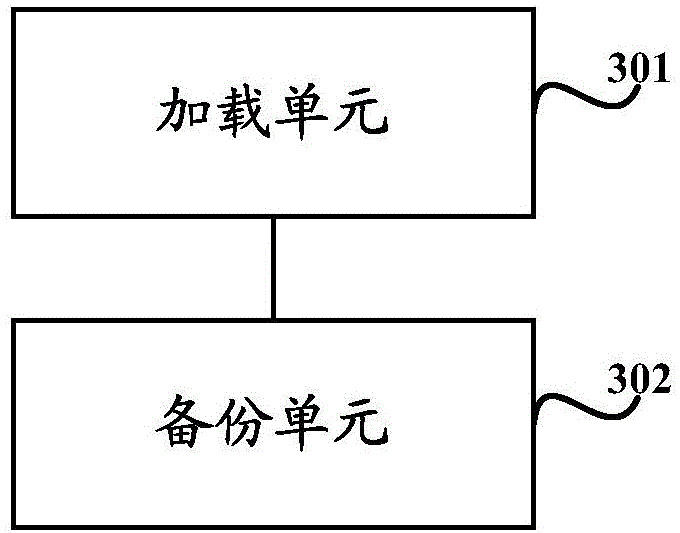

File cache method and device

Inactive | CN104657378A | Easy to use | Reduce cache pressure | Special data processing applications | Out of memory | Computers technology

The invention discloses a file cache method and device, and relates to computer technology. A downloaded file is stored in a primary cache and a file cache so that it can be conveniently used in subsequent loading. As a result, cache pressure on the primary cache and a secondary cache is reduced, the loading speed of files such as pictures is increased, and memory deficiency is decreased.

Owner:QINGDAO HISENSE MOBILE COMM TECH CO LTD

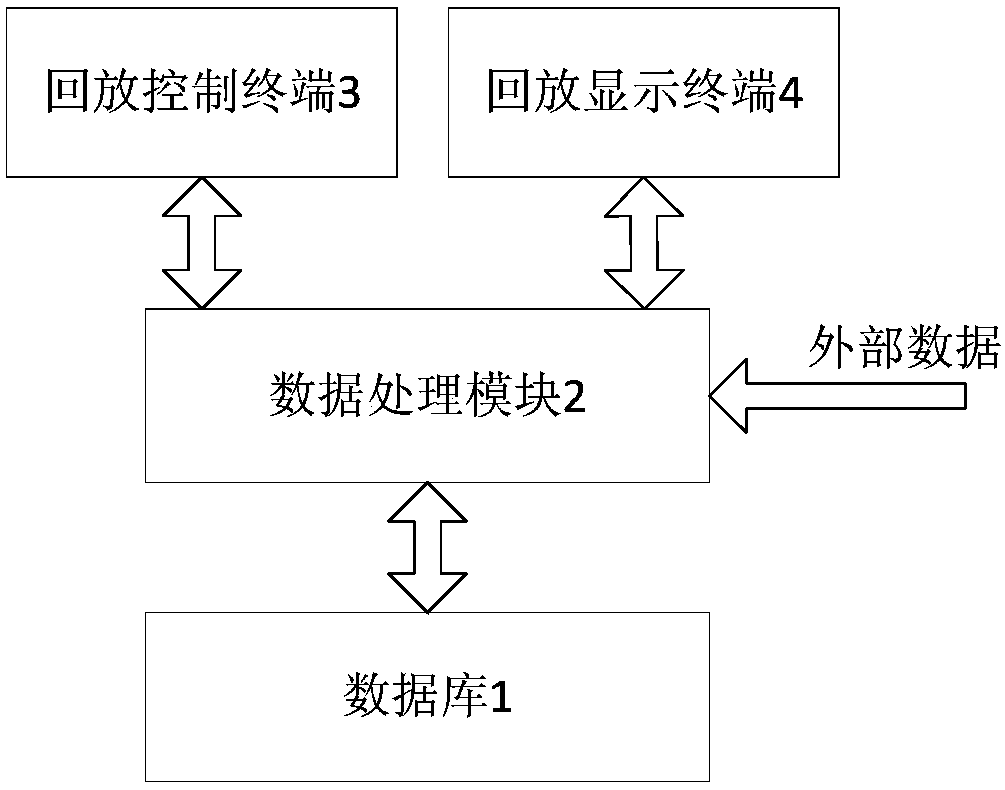

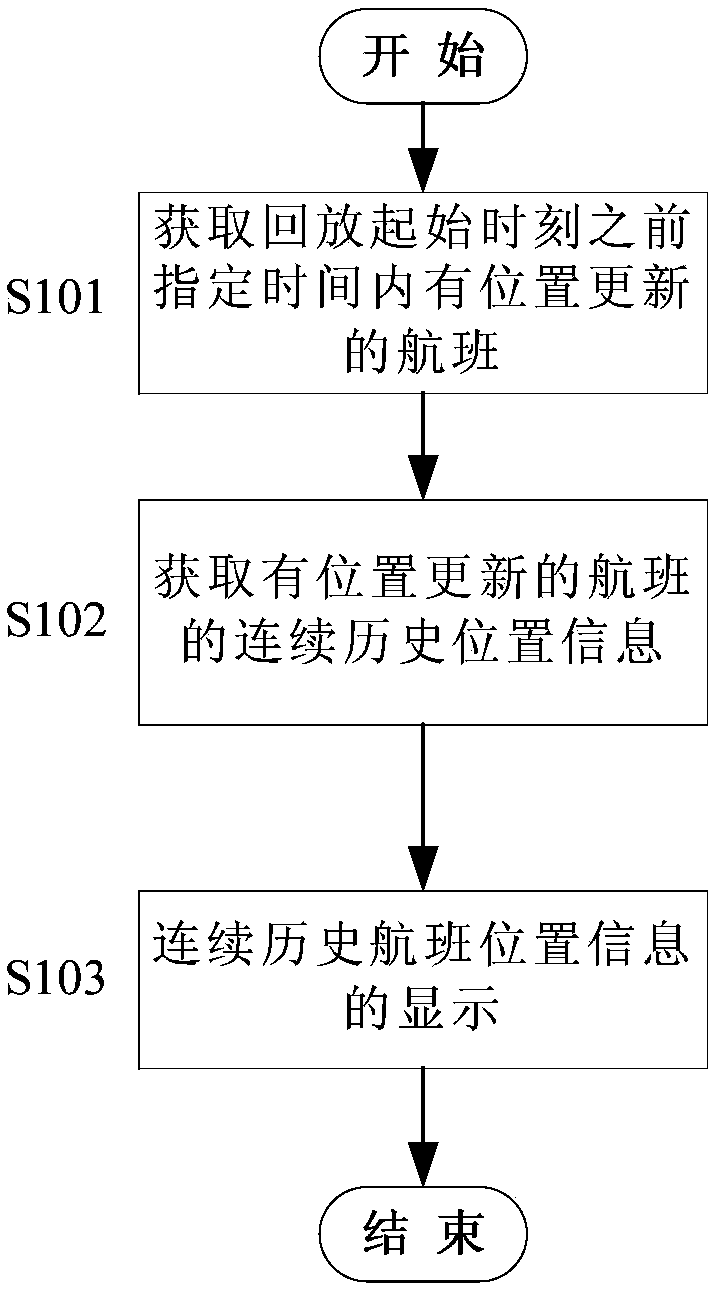

Flight location information playback system and playback method

Active | CN107633049A | Real-time | Improve query speed | Data processing applications | Special data processing applications | Computer terminal | Self adaptive

The invention discloses a flight location information playback system and a playback method. The system comprises a database, a data processing module, a playback control terminal and a playback display terminal, wherein the data processing module is independently connected with the database, the playback control terminal and the playback display terminal. The system has the following advantages: (a) the database is adopted to store the flight location information and an index is configured for the playback condition data items, so that the playback query speed is improved and data playback instantaneity is realized; (b) effective information is displayed during playback initialization: only the flight information of flights with a location update in the appointed time is retained and displayed, and the flight information of flights without a location update in the appointed time is deleted, wherein the flight information includes the historical information of the flight; and (c) adaptive time slicing playback is carried out: playback cache data is read one time period at a time, which greatly lowers the data caching pressure of the playback control terminal, and the time period length is adaptively regulated along with playback fast forward or slow forward, so that playback fluency is guaranteed.

Owner:CIVIL AVIATION UNIV OF CHINA

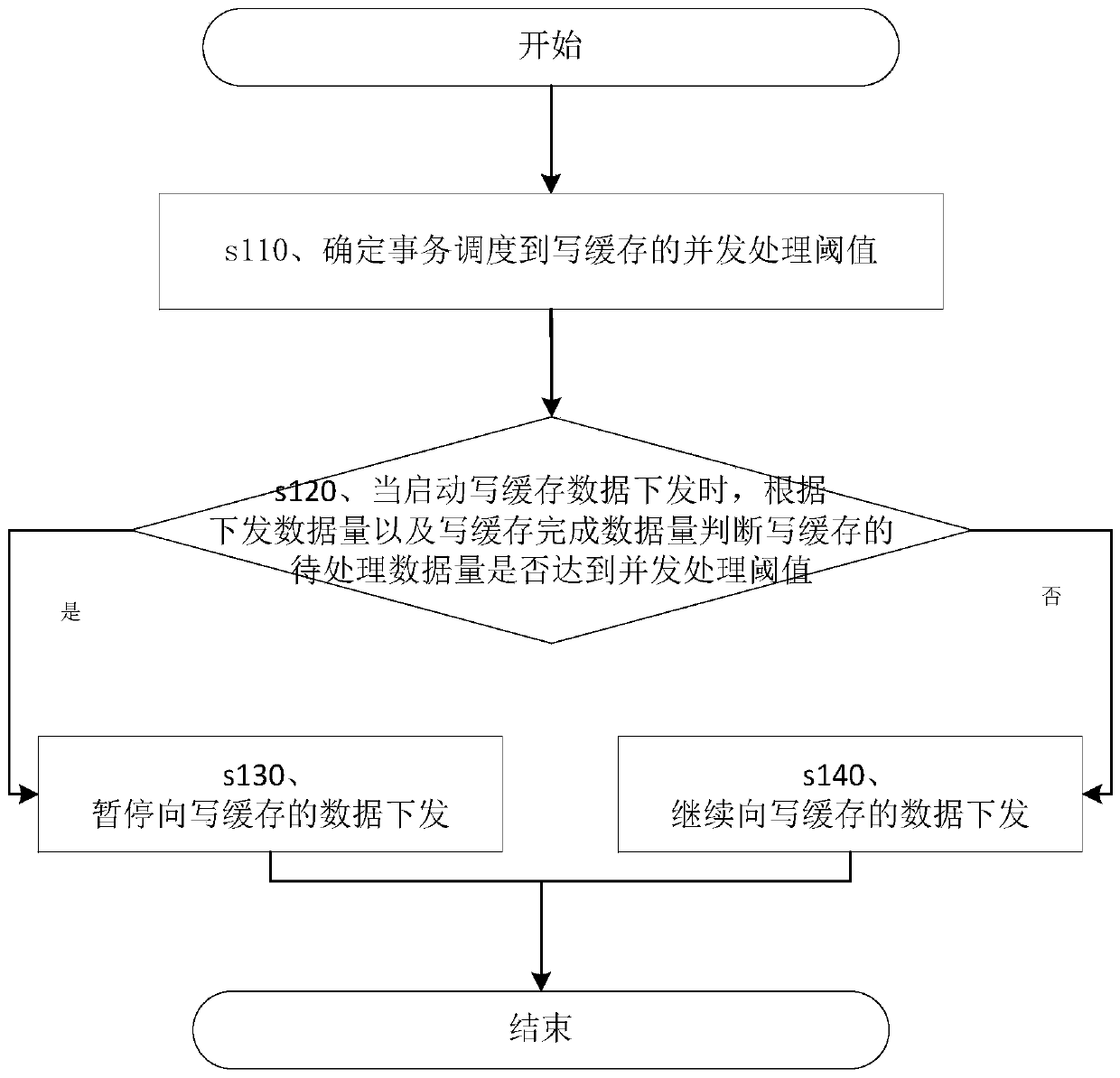

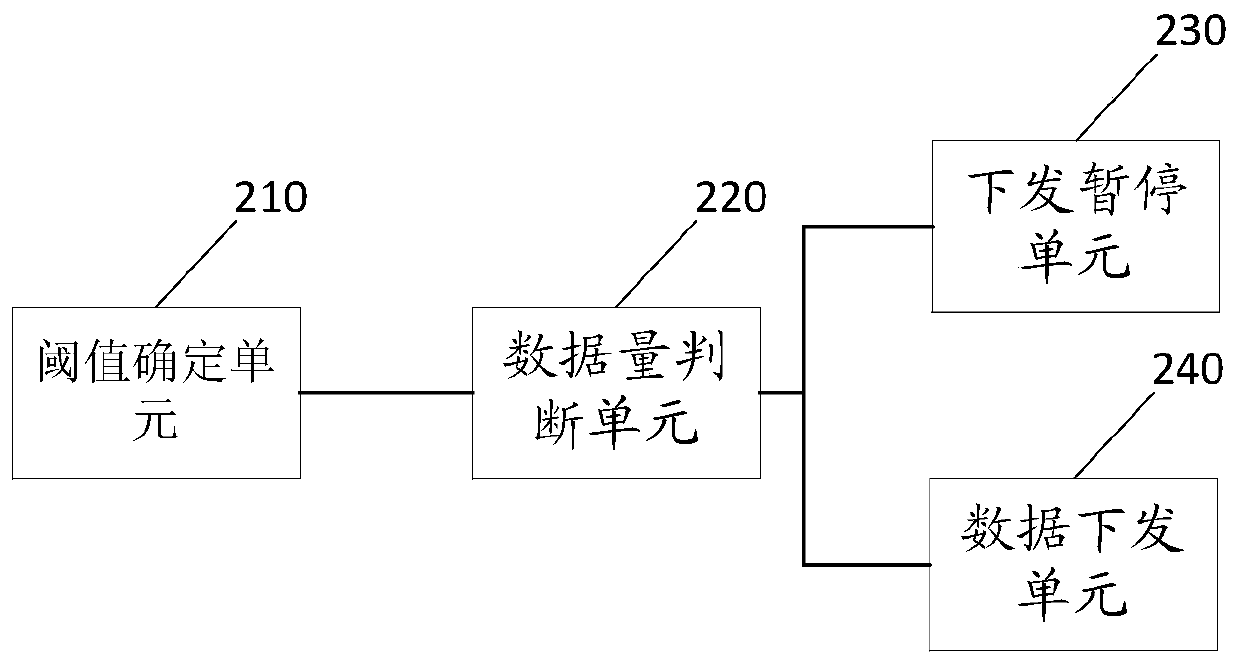

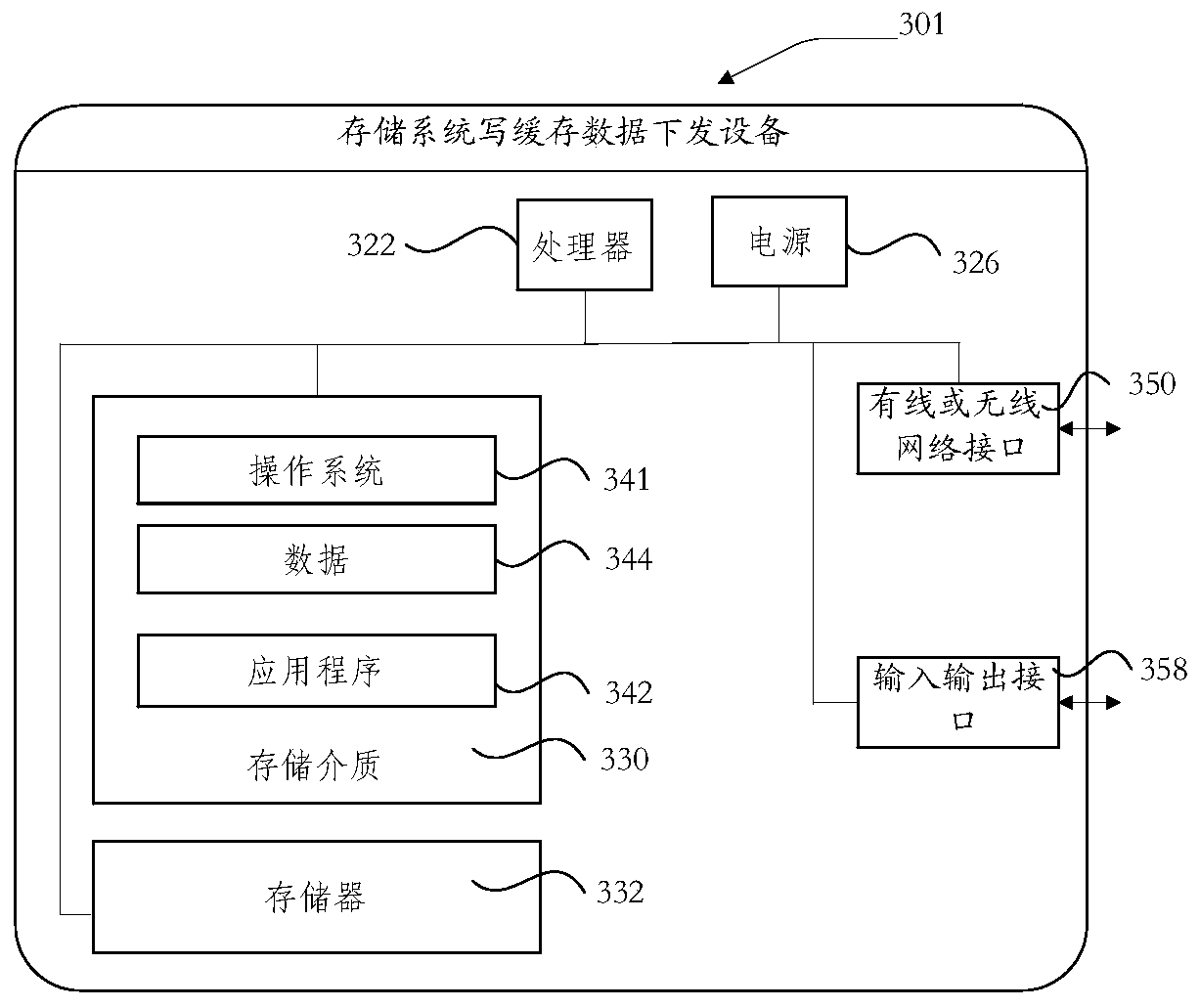

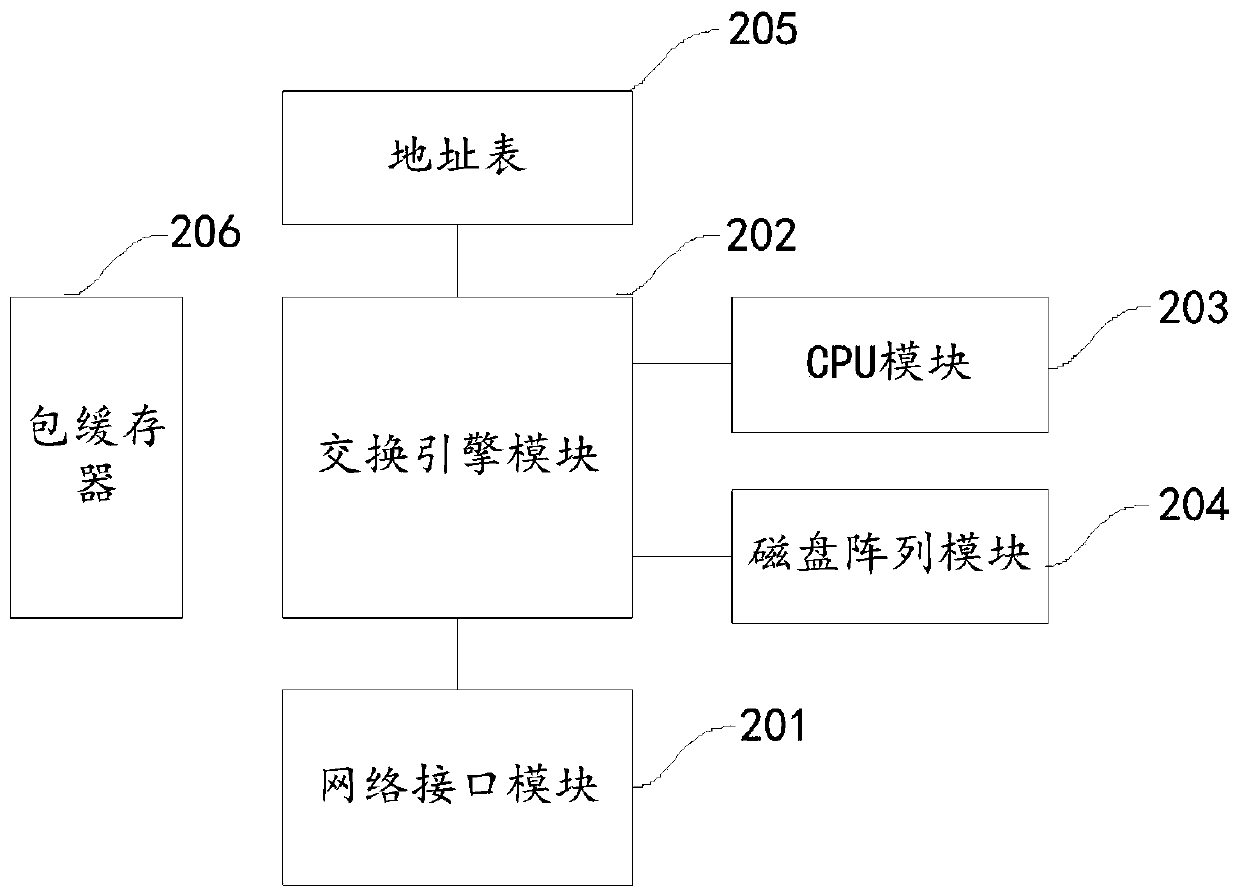

Storage system write cache data issuing method and related components

Active | CN111008157A | Avoid timeout situations | Improve experience | Memory architecture accessing/allocation | Redundant operation error correction | Parallel computing | Engineering

The invention discloses a method for issuing write cache data of a storage system. The method comprises the steps of determining a concurrent processing threshold for scheduling a transaction to a write cache; when write cache data issuing is started, judging whether the to-be-processed data volume of the write cache reaches the concurrent processing threshold according to the issued data volume and the write cache completion data volume; if so, pausing the issuing of the data to the write cache; and if not, continuing to issue the data of the write cache. The method provides a controllable strategy for issuing data from the transaction to the write cache: a concurrency threshold from transaction scheduling to write cache IO is introduced, and the transaction IO issuing thread is scheduled only when the concurrent number is smaller than the threshold, so that the data processing pressure of the write cache is reduced, the situation that the IO of an upper-layer host times out is avoided, and the user experience is improved. The invention further provides a device and equipment for issuing the write cache data of the storage system and a readable storage medium, which have the above beneficial effects.

Owner:北京浪潮数据技术有限公司
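
The threshold check described in the abstract above can be illustrated with a small Python sketch. It is not the patented implementation: the class name `WriteCacheIssuer`, its methods, and the use of simple integer counters for "issued" and "completed" data volume are assumptions made only for illustration.

```python
class WriteCacheIssuer:
    """Illustrative throttle: issue to the write cache only below a threshold."""

    def __init__(self, concurrency_threshold: int) -> None:
        self.concurrency_threshold = concurrency_threshold
        self.issued = 0     # total volume issued to the write cache so far
        self.completed = 0  # total volume the write cache reports as done

    @property
    def pending(self) -> int:
        # To-be-processed volume = issued volume minus completed volume.
        return self.issued - self.completed

    def try_issue(self, size: int = 1) -> bool:
        """Return True if the data was issued, False if issuing is paused."""
        if self.pending >= self.concurrency_threshold:
            return False  # threshold reached: pause issuing
        self.issued += size
        return True

    def complete(self, size: int = 1) -> None:
        """Record that the write cache finished processing `size` units."""
        self.completed += size
```

A caller would retry `try_issue` after `complete` has been called, which is how the sketch models resuming once the write cache catches up.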

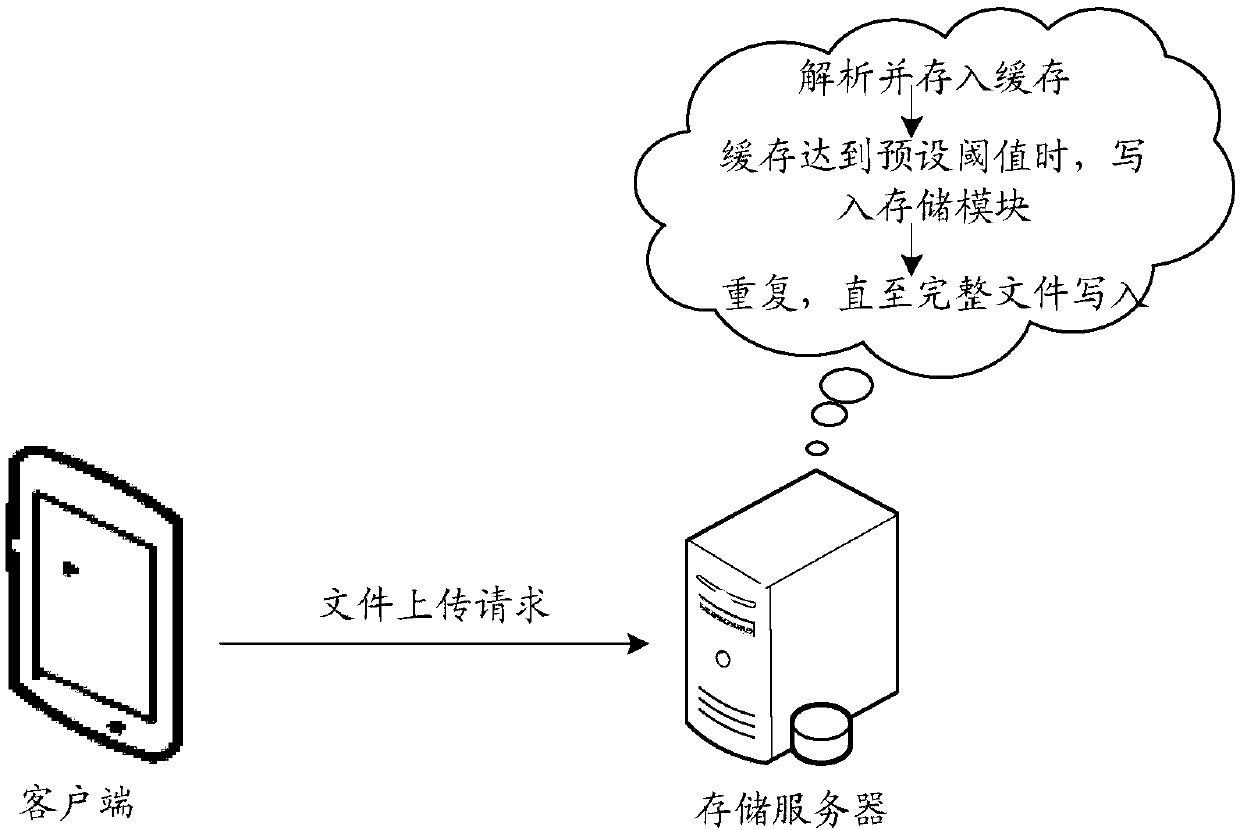

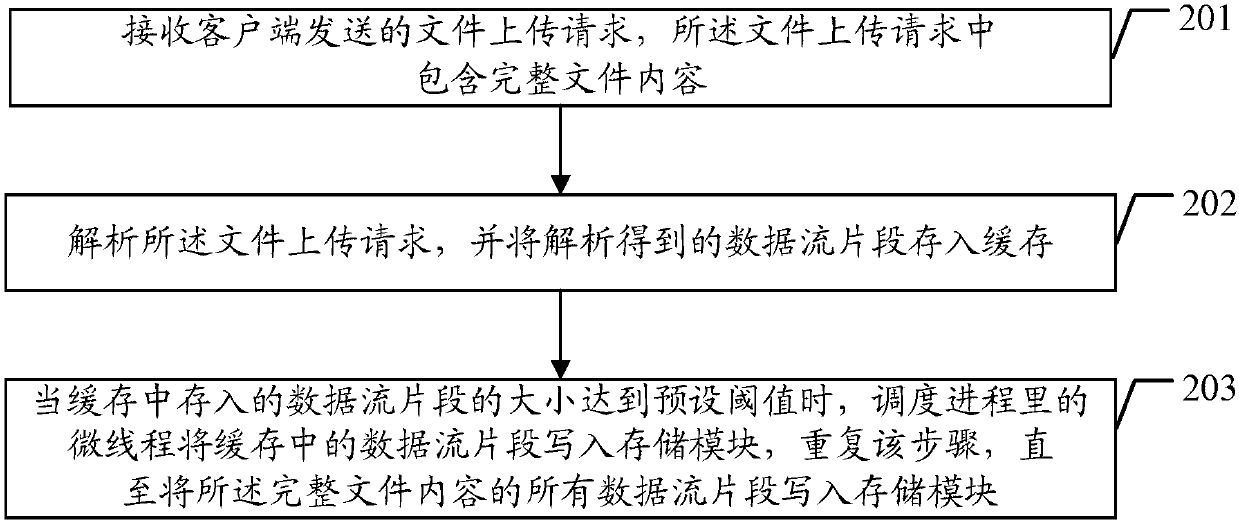

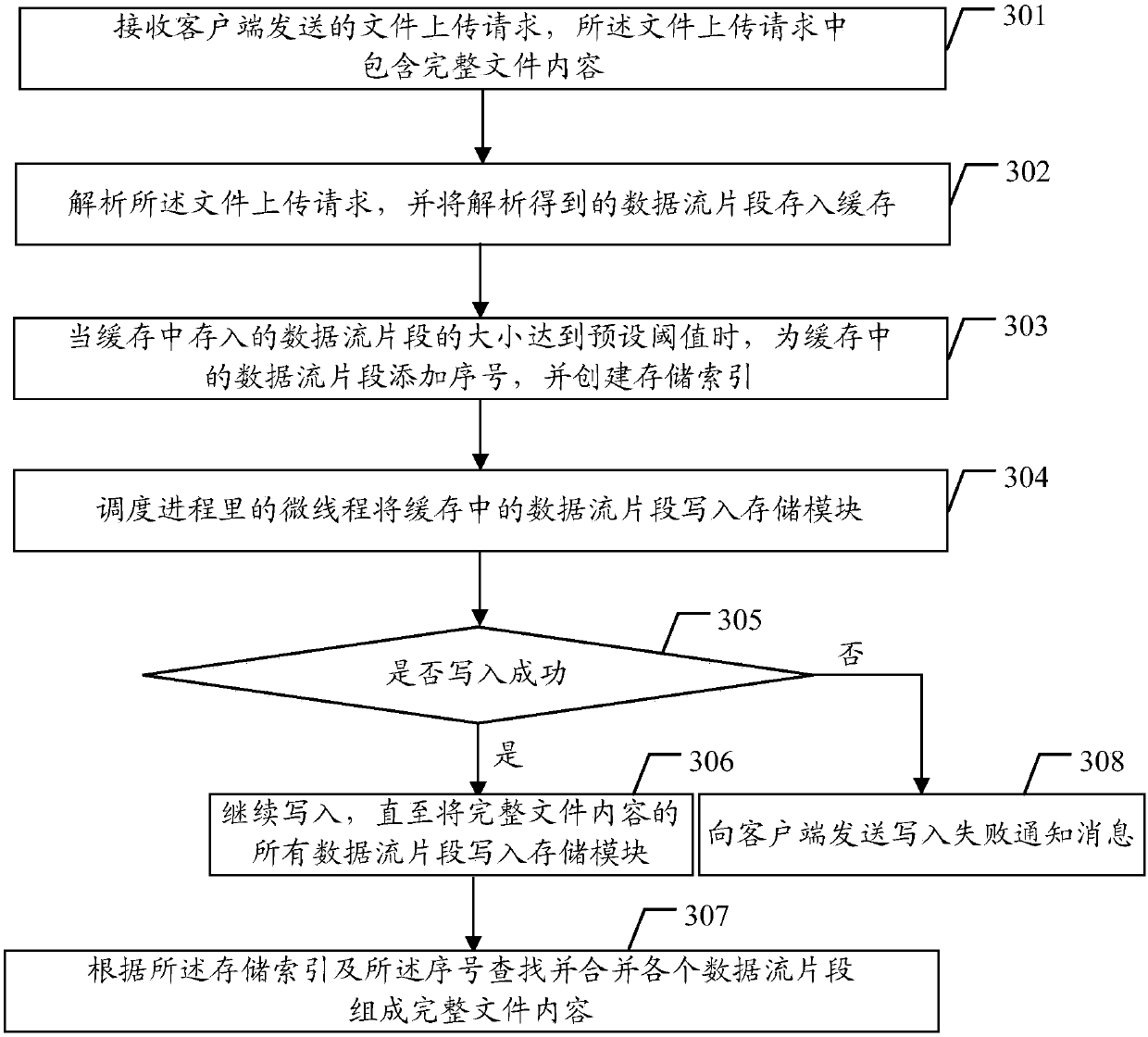

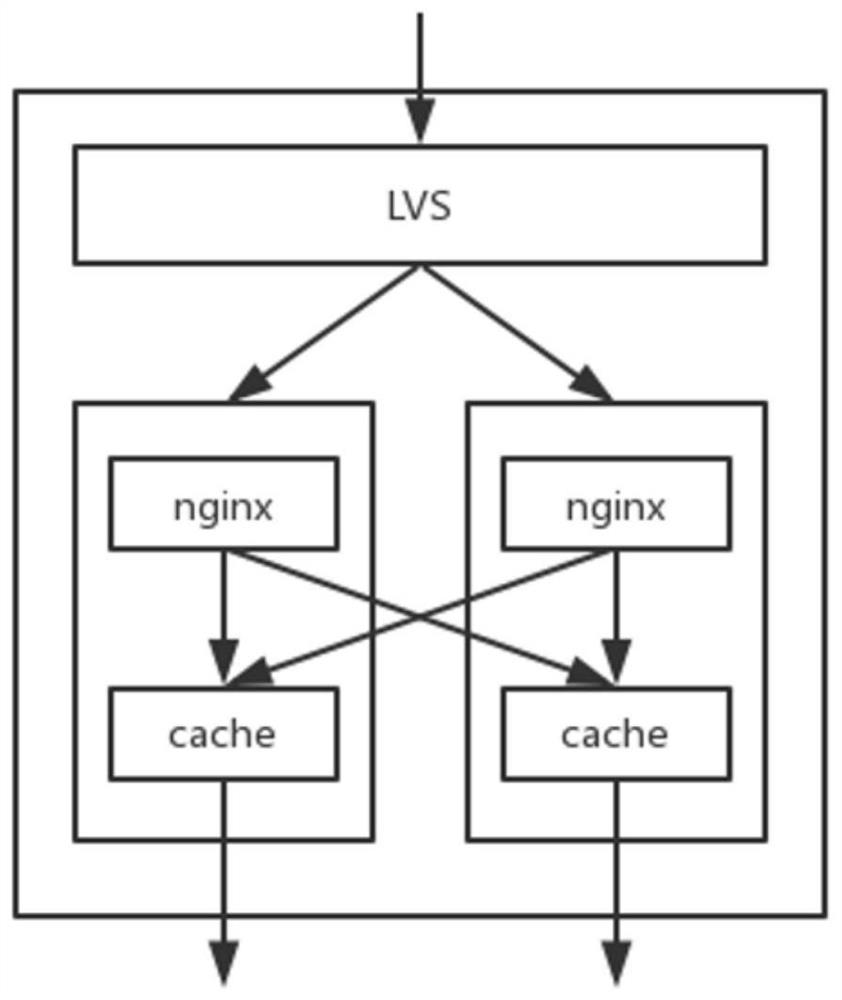

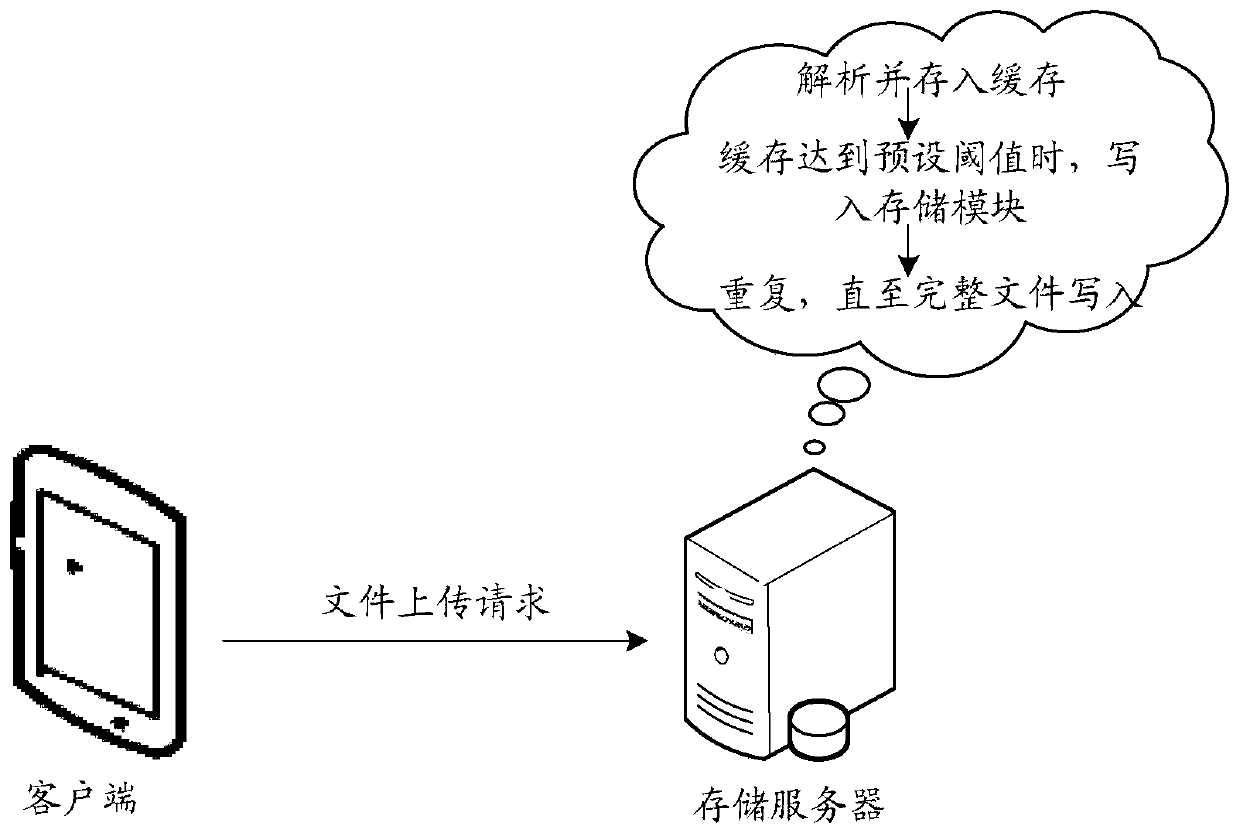

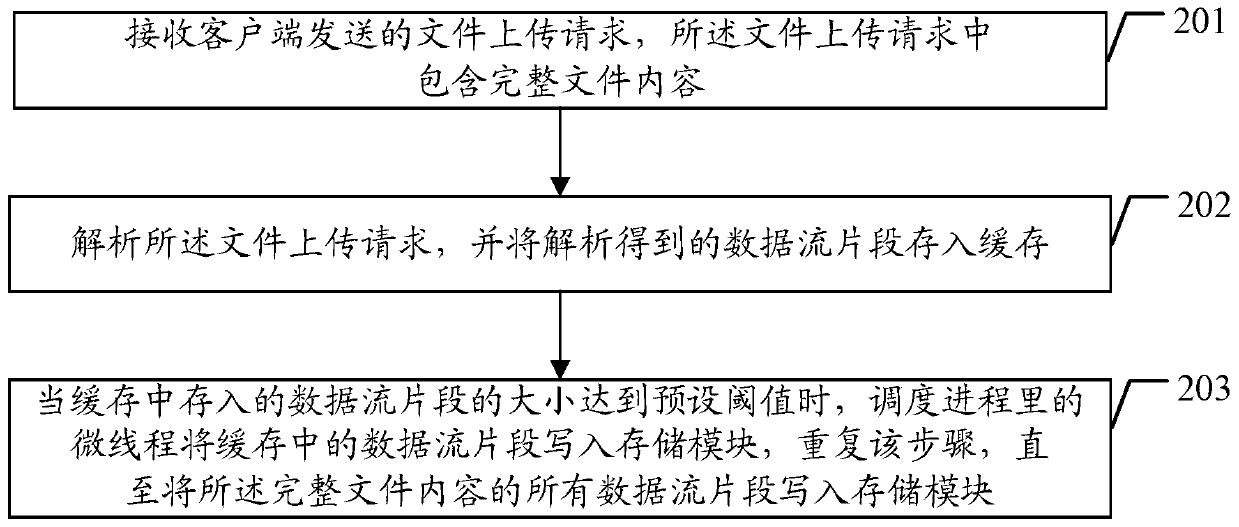

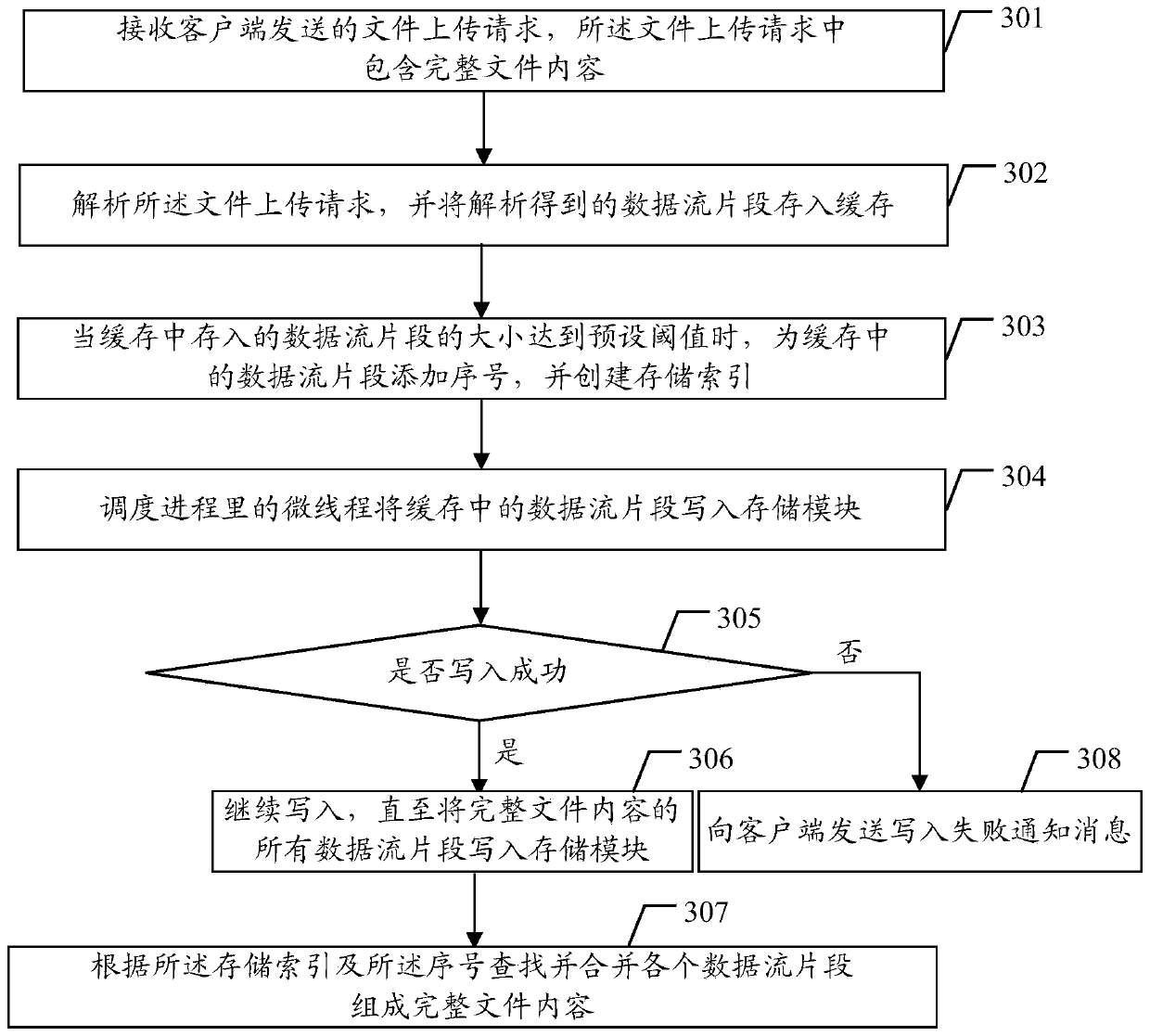

File uploading method and device

The embodiment of the invention discloses a file uploading method and device. The file uploading method comprises the steps of receiving a file uploading request sent by a client, wherein the file uploading request comprises complete file content; analyzing the file uploading request and storing data stream fragments obtained through analysis to a cache; and when the size of the data stream fragments stored to the cache reaches a preset threshold, calling a micro-thread in a process to write the data stream fragments in the cache into a memory module, and repeating the step until all data stream fragments of the complete file content are written into the memory module. According to the embodiment of the invention, the pressure of the cache can be mitigated and the uploading performance is improved.

Owner:TENCENT TECH (SHENZHEN) CO LTD
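
As a rough illustration of the buffering step in the abstract above, the following Python sketch accumulates fragments and flushes them to a sink once a threshold is reached. The function name `upload_in_chunks` and its parameters are hypothetical, and the flush here is a plain synchronous write rather than the micro-thread the abstract mentions.

```python
import io
from typing import BinaryIO, Iterable


def upload_in_chunks(
    fragments: Iterable[bytes], sink: BinaryIO, flush_threshold: int
) -> int:
    """Buffer fragments and write them to `sink` whenever the buffer is full.

    Returns the number of flushes performed.
    """
    buffer = bytearray()
    flushes = 0
    for fragment in fragments:
        buffer.extend(fragment)
        if len(buffer) >= flush_threshold:
            sink.write(bytes(buffer))  # hand the buffered data to storage
            buffer.clear()             # the cache never grows past one batch
            flushes += 1
    if buffer:  # write whatever remains at the end of the upload
        sink.write(bytes(buffer))
        flushes += 1
    return flushes
```

Because the buffer is emptied at every flush, its size stays bounded by roughly the threshold plus one fragment, regardless of the total file size.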

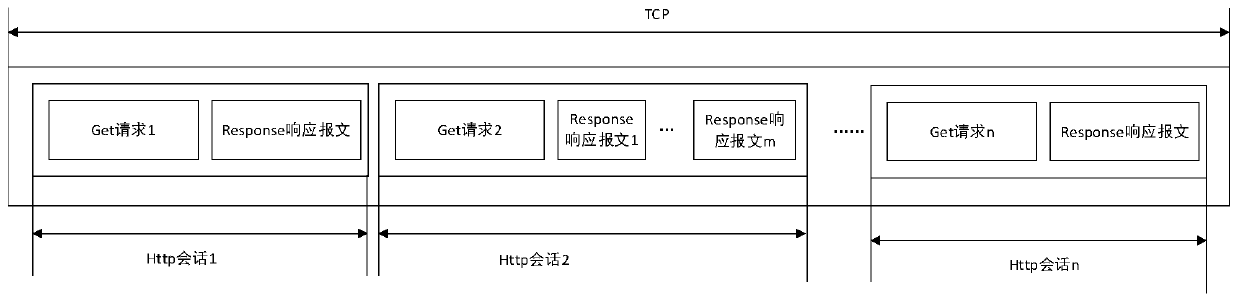

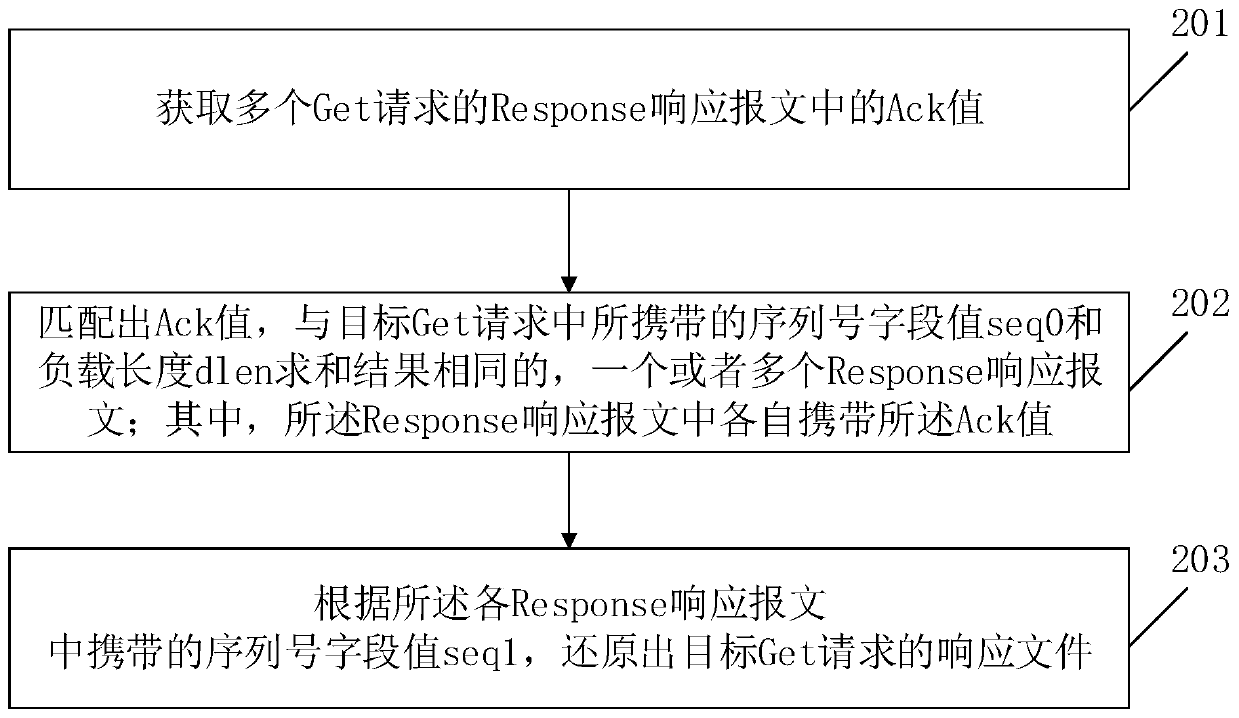

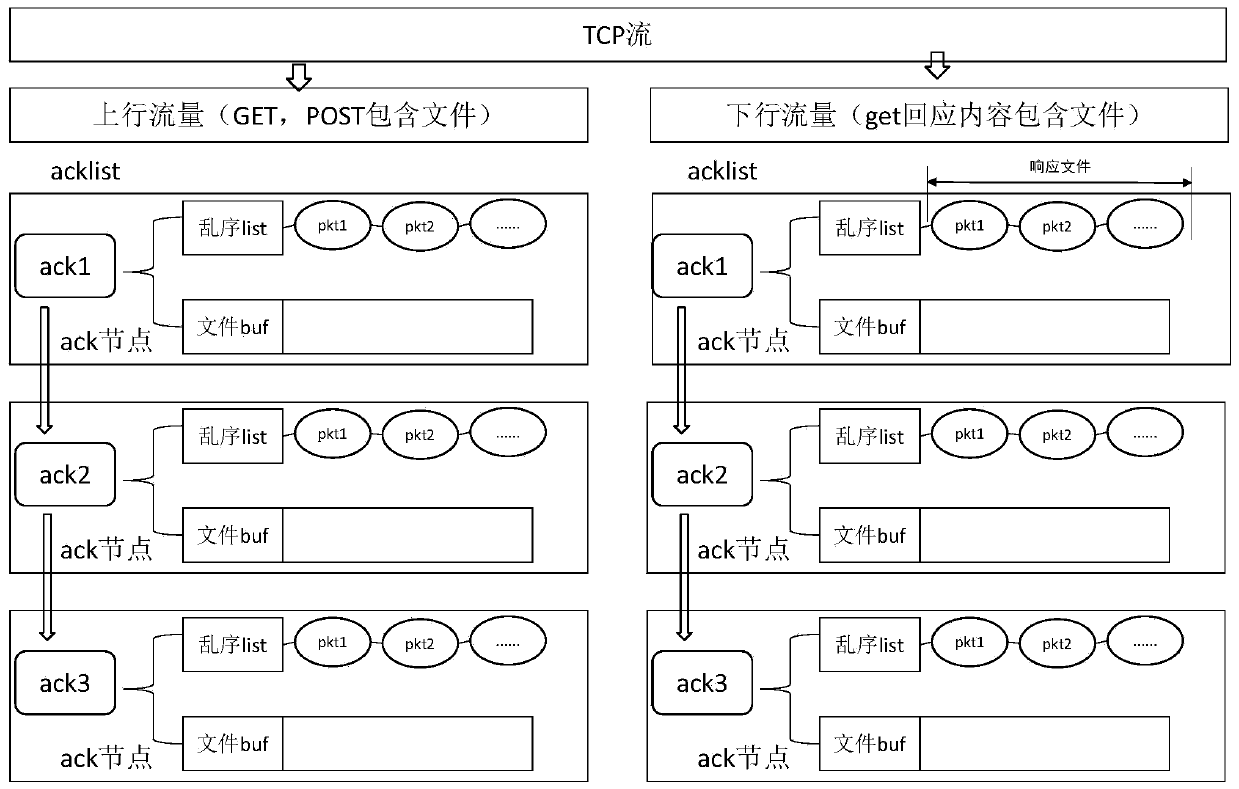

File restoration method and device for http multi-session in DPI scene

Active | CN110839060A | Eliminate distractions | Improve reduction efficiency | Transmission | Data pack | Serial code

The invention relates to the technical field of data packet recombination, and provides a file restoration method and device for http multi-session in a DPI scene. The method comprises the following steps: acquiring Ack values in Response response messages of a plurality of Get requests; matching out one or more Response response messages of which the Ack values are the same as the summation result of the serial number field value seq0 and the load length dlen carried in the target Get request; wherein the Ack values are carried in the Response response messages respectively; and restoring a response file of the target Get request according to the serial number field value seq1 carried in each Response response message. According to the invention, a plurality of http sessions in the TCP session can be processed in real time, disorder or retransmission interference among different http sessions is eliminated, and the restoration efficiency is improved.

Owner:WUHAN GREENET INFORMATION SERVICE
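
The matching rule in the abstract above (a response belongs to a Get request when its Ack equals `seq0 + dlen`) can be sketched in Python as follows. The dataclasses and the function `restore_response` are hypothetical names. The sketch also assumes that retransmissions repeat the same `seq1` and that sequence numbers do not wrap around, neither of which the abstract states.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class GetRequest:
    seq0: int  # sequence number field carried in the Get request
    dlen: int  # payload length of the Get request


@dataclass
class Response:
    ack: int       # Ack value carried in the response message
    seq1: int      # sequence number field carried in the response message
    payload: bytes


def restore_response(request: GetRequest, responses: Iterable[Response]) -> bytes:
    """Rebuild the response body for one Get request from mixed traffic."""
    expected_ack = request.seq0 + request.dlen
    by_seq: Dict[int, bytes] = {}
    for response in responses:
        if response.ack != expected_ack:
            continue  # belongs to a different HTTP session
        # Keep the first copy of each segment; later copies are retransmissions.
        by_seq.setdefault(response.seq1, response.payload)
    # Reassemble in sequence order to undo out-of-order arrival.
    return b"".join(by_seq[seq] for seq in sorted(by_seq))
```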

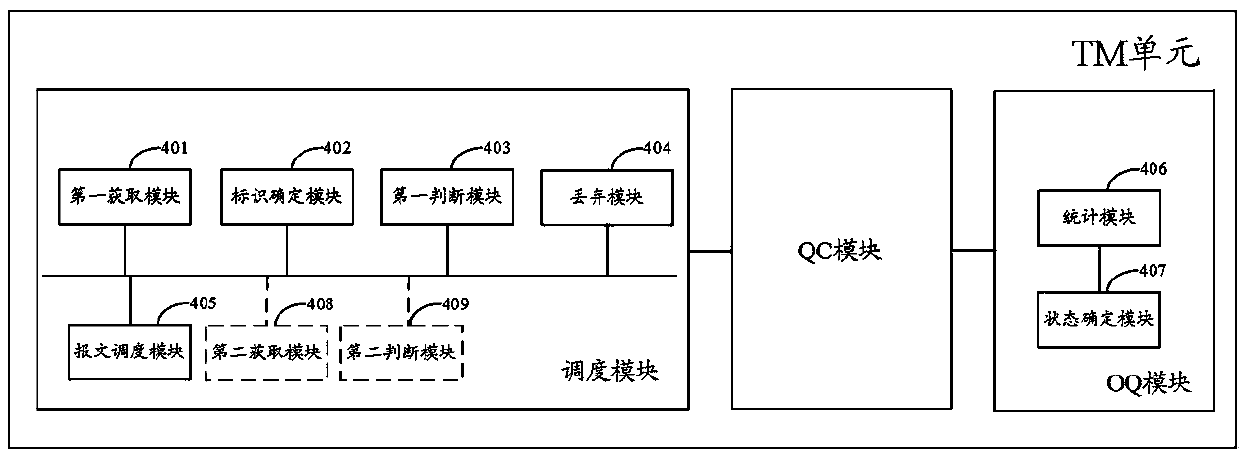

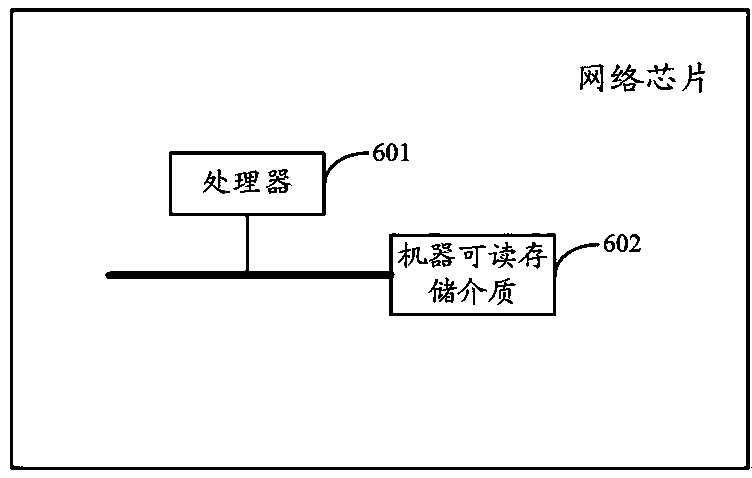

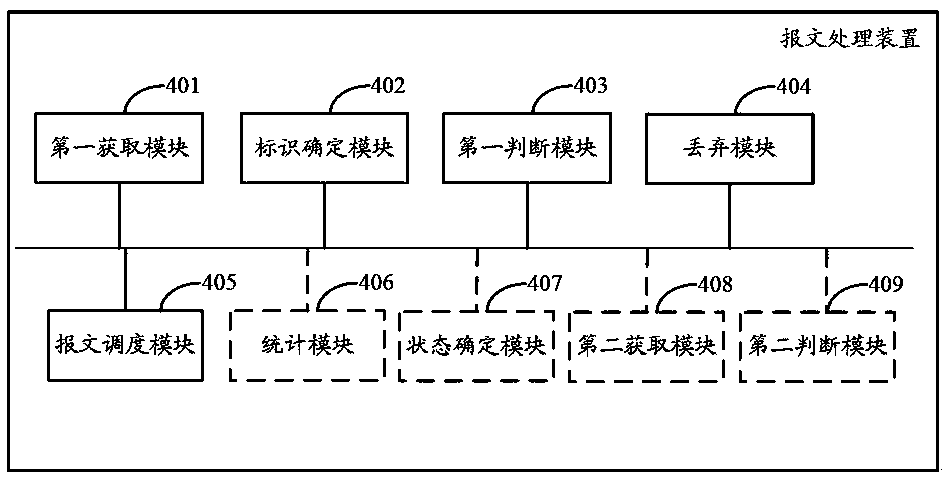

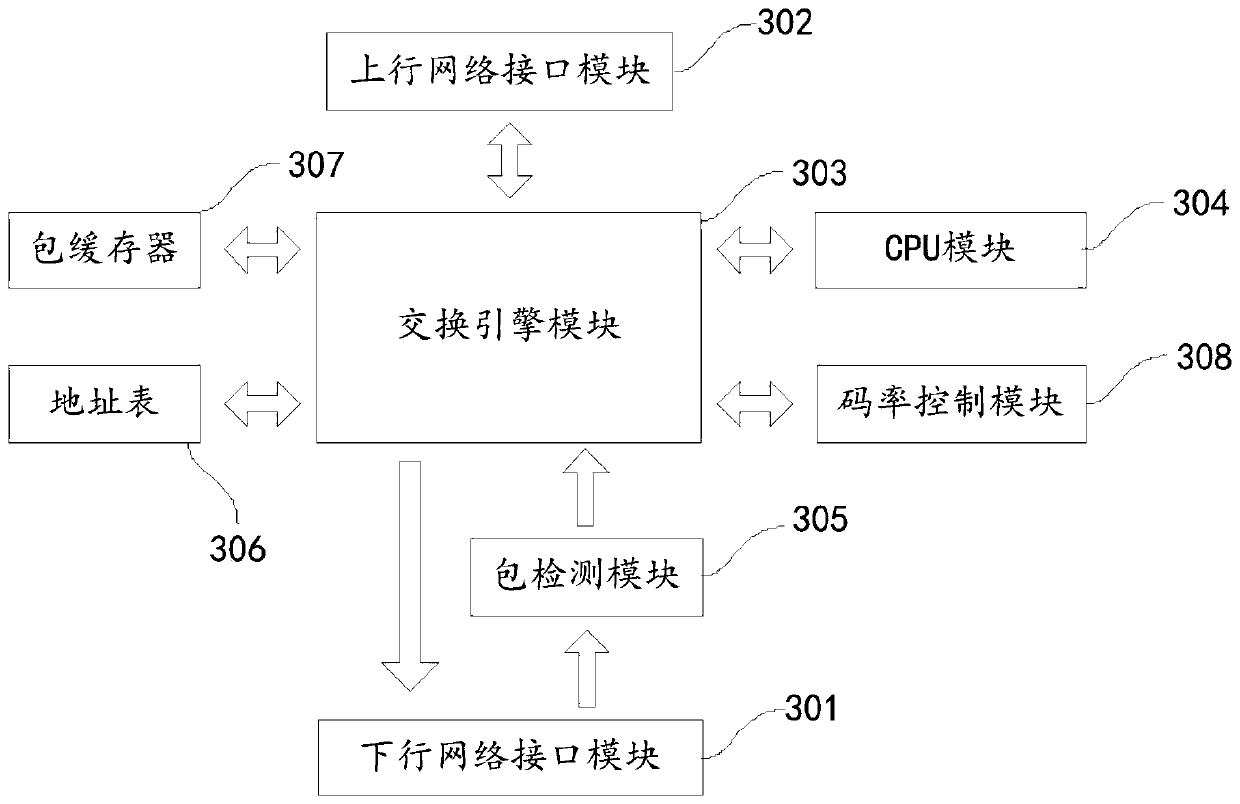

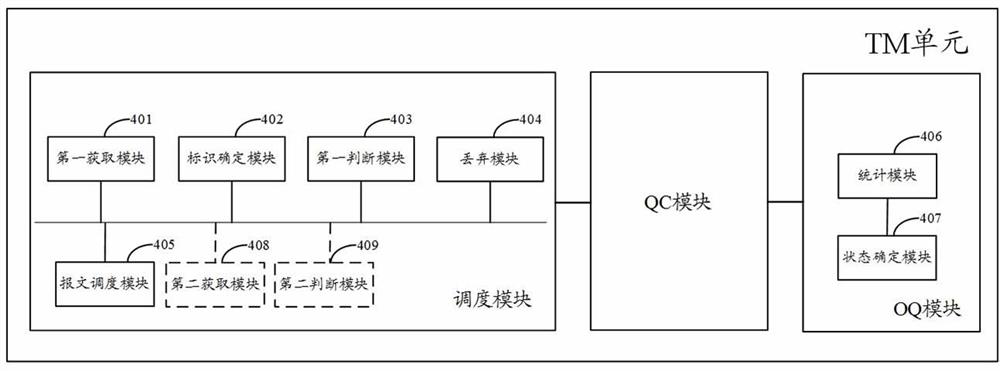

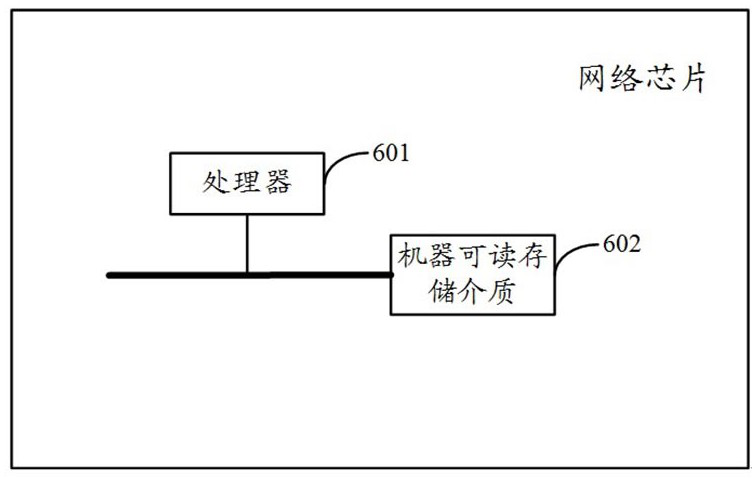

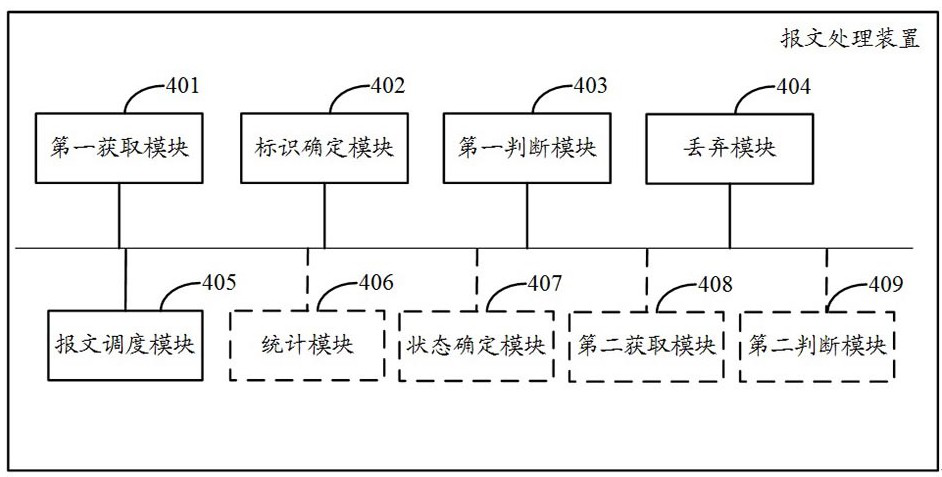

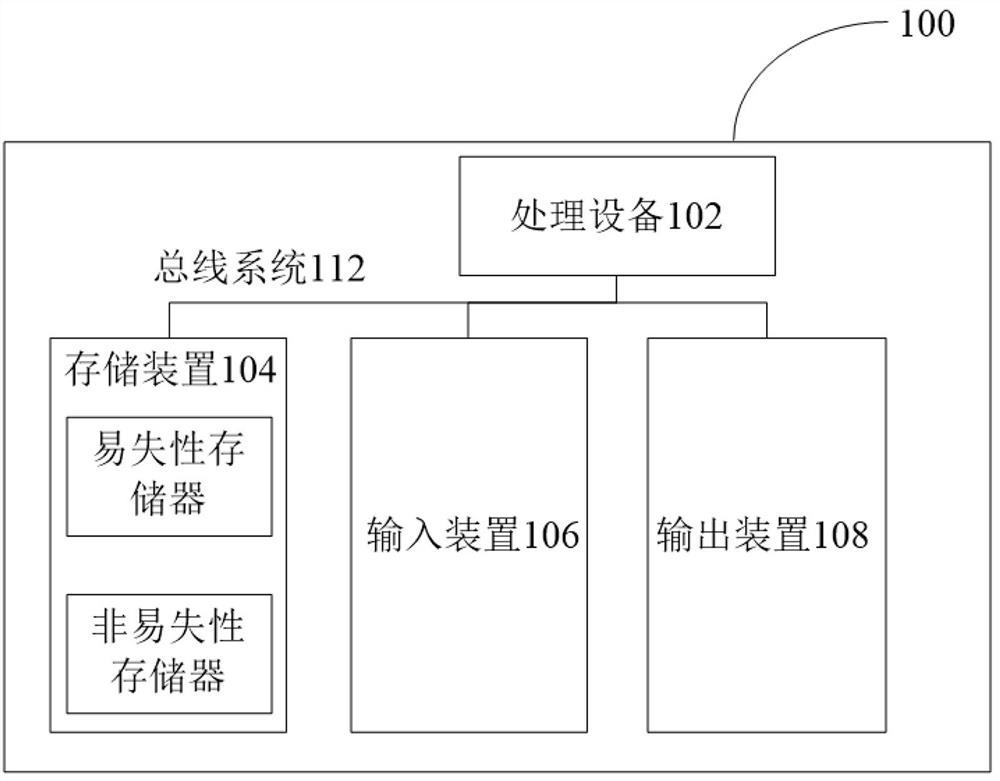

Message scheduling method and device and network chip

Active | CN111526097A | Solve congestion | Reduce cache pressure | Data switching networks | Engineering | Distributed computing

The invention provides a message scheduling method and device and a network chip, which are applied to the network chip. The network chip comprises a classification layer, and the classification layer comprises a plurality of classification nodes. The method comprises the following steps: after a classification node acquires a scheduling task of a network message, acquiring a node identifier of the classification node; determining a queue identifier corresponding to the node identifier of the classification node according to a stored mapping relationship between node identifiers and the queue identifiers of output queues; judging whether the output queue corresponding to the determined queue identifier meets a back pressure condition; when the back pressure condition is satisfied, giving up the scheduling task; and when the back pressure condition is not met, executing the scheduling task of the network message, so that the network message is written into the determined output queue. With this method, scheduling of network messages is achieved on the L3 layer, the congestion problem caused by an excessive cache amount in the output queues is effectively avoided, and the cache pressure of the corresponding output queues is reduced.

Owner:新华三半导体技术有限公司
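
The node-to-queue lookup and back pressure check described above might look like the following Python sketch. The abstract does not define the back pressure condition, so this example assumes it means "queue depth has reached a fixed limit"; the class `Scheduler` and its fields are likewise hypothetical.

```python
from collections import deque
from typing import Deque, Dict


class Scheduler:
    """Illustrative scheduler that drops a task when its output queue is full."""

    def __init__(self, node_to_queue: Dict[str, str], backpressure_limit: int) -> None:
        self.node_to_queue = node_to_queue            # node id -> queue id mapping
        self.backpressure_limit = backpressure_limit  # assumed depth-based condition
        self.queues: Dict[str, Deque[str]] = {
            queue_id: deque() for queue_id in set(node_to_queue.values())
        }

    def schedule(self, node_id: str, message: str) -> bool:
        """Return True if the message was enqueued, False if the task was given up."""
        queue_id = self.node_to_queue[node_id]
        queue = self.queues[queue_id]
        if len(queue) >= self.backpressure_limit:
            return False  # back pressure condition met: give up the task
        queue.append(message)
        return True
```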

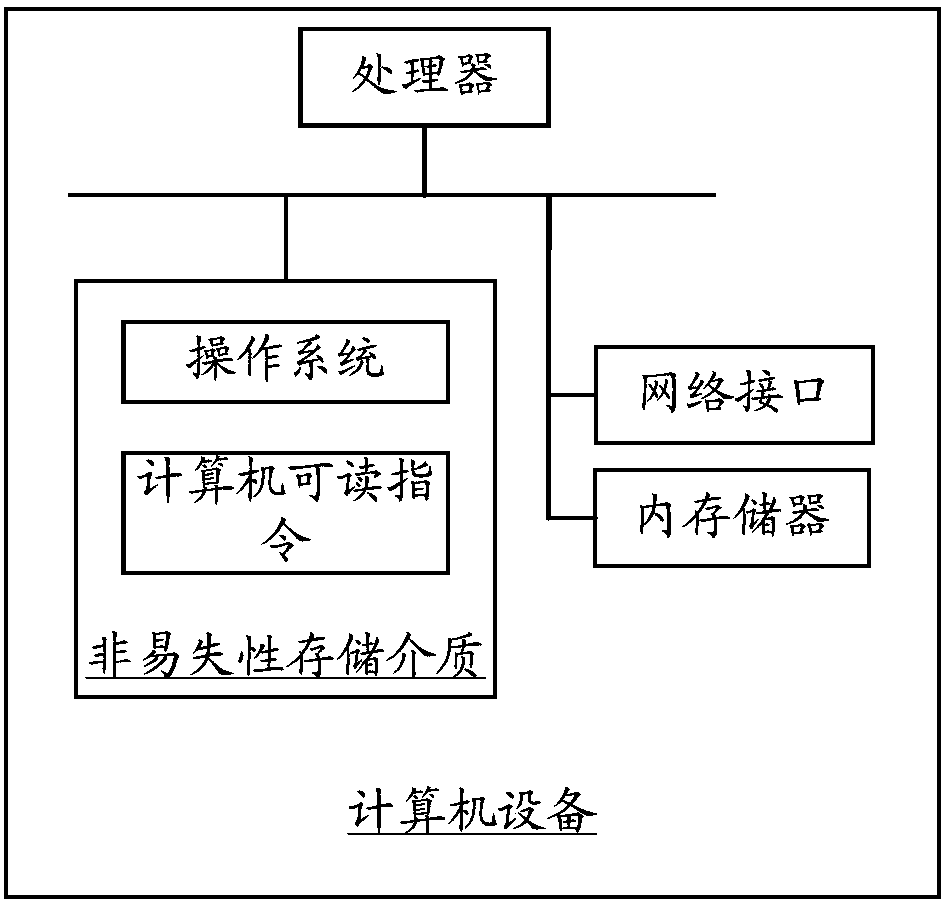

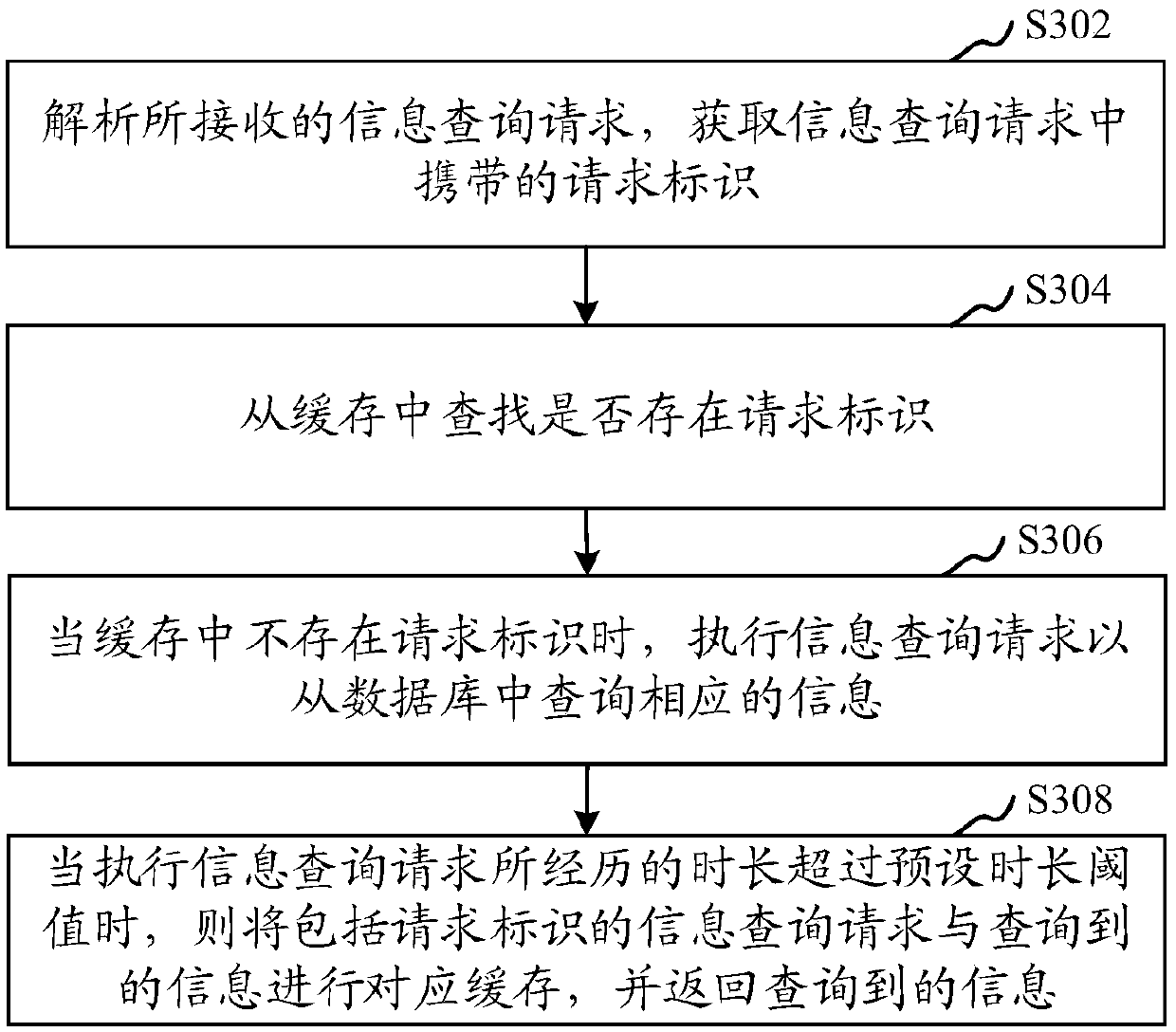

Caching processing method and device, computer equipment and storage medium

Active | CN107665235A | Reduce the amount of information | Reduce cache pressure | Special data processing applications | Database | Data library

The invention relates to a caching processing method and device, computer equipment and a storage medium. The method comprises the steps of parsing received information query requests, and obtaining request identifiers carried in the information query requests; searching whether the request identifiers exist in a cache; executing the information query requests to query the corresponding information from a database when the request identifiers do not exist in the cache; carrying out corresponding caching of the information query requests containing the request identifiers and the queried information when the time length spent by executing the information query requests exceeds a preset time length threshold, and returning the queried information. Caching pressure is reduced through the scheme of the caching processing method.

Owner:ONE CONNECT SMART TECH CO LTD SHENZHEN
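
The idea of caching only the queries that took longer than a threshold can be sketched in Python as below. The class `SlowQueryCache`, the `run_query` callable standing in for the database, and the injectable `clock` are all assumptions for illustration; the abstract does not describe eviction, so none is modelled.

```python
import time
from typing import Any, Callable, Dict


class SlowQueryCache:
    """Caches a query result only when executing the query was slow."""

    def __init__(
        self,
        run_query: Callable[[str], Any],
        slow_threshold_s: float,
        clock: Callable[[], float] = time.perf_counter,
    ) -> None:
        self.run_query = run_query              # hypothetical database call
        self.slow_threshold_s = slow_threshold_s
        self.clock = clock                      # injectable so time can be faked
        self.cache: Dict[str, Any] = {}

    def get(self, request_id: str) -> Any:
        if request_id in self.cache:
            return self.cache[request_id]       # cache hit: skip the database
        start = self.clock()
        result = self.run_query(request_id)
        elapsed = self.clock() - start
        if elapsed > self.slow_threshold_s:
            self.cache[request_id] = result     # only slow queries are cached
        return result
```

Fast queries are cheap to repeat, so leaving them out keeps the cache small, which is the pressure reduction the abstract refers to.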

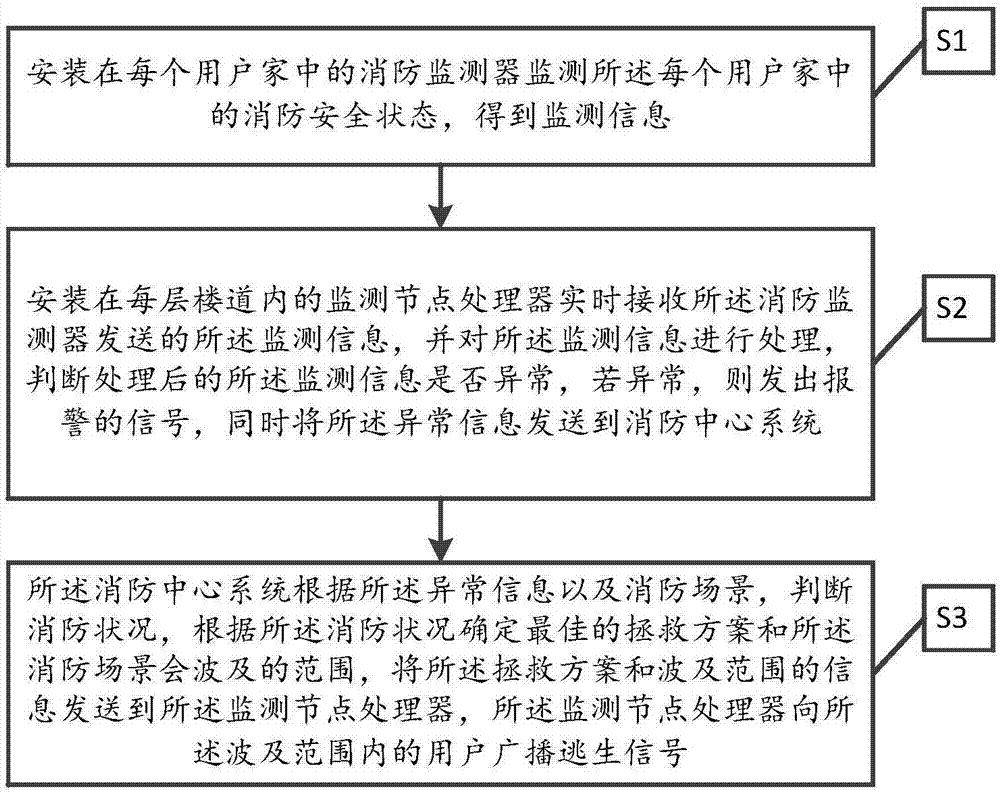

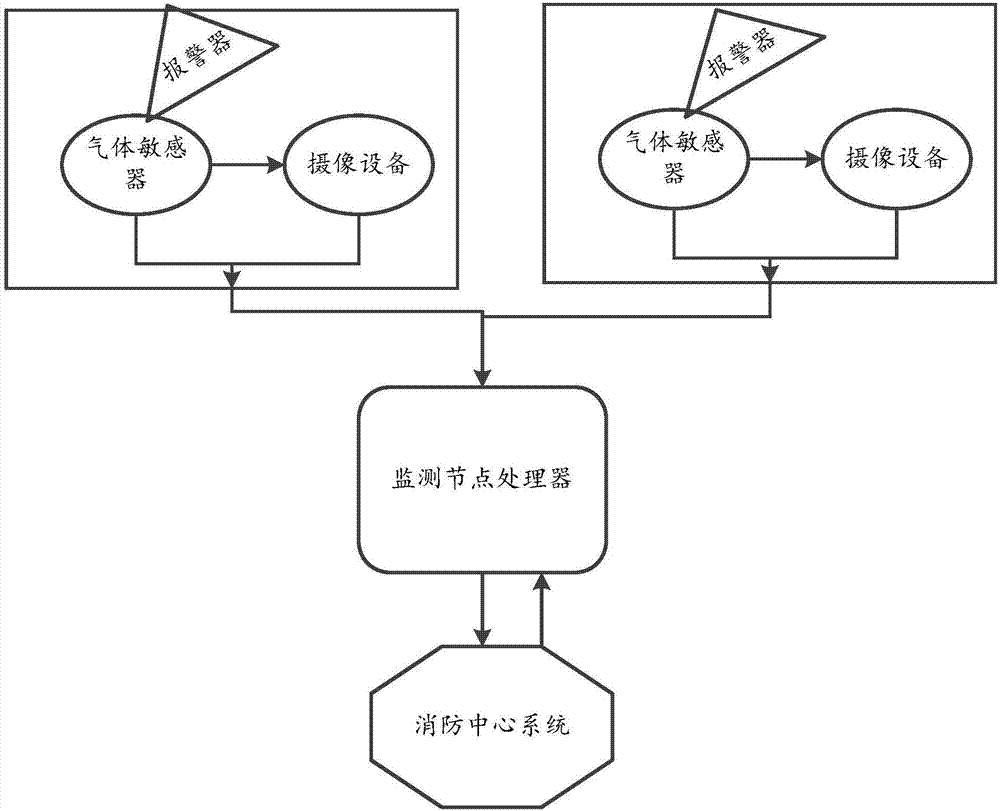

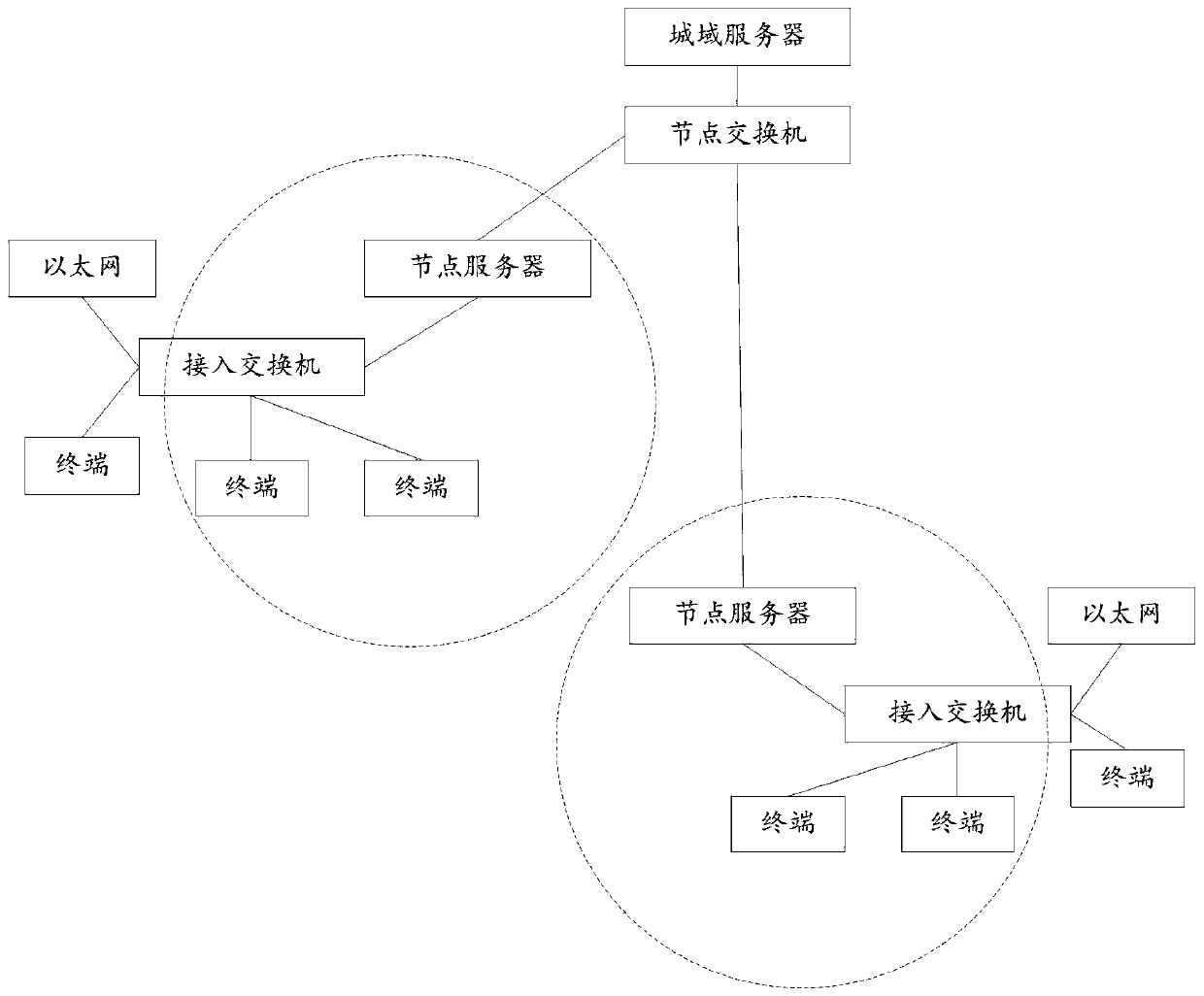

Fire-fighting monitoring method based on internet of things and fire-fighting monitoring system thereof

Inactive | CN107221118A | Reduce casualties | Timely notification information | Fire alarms | Monitoring system | The Internet

The invention relates to a fire-fighting monitoring method based on the internet of things and a fire-fighting monitoring system thereof. The method comprises the following steps: fire-fighting monitors are installed to monitor the fire-fighting safety situation in the home of each user; a monitoring node processor is installed to receive monitoring information and process the monitoring information; and a fire-fighting center system judges the fire-fighting situation according to abnormal information and the fire-fighting scene, determines the optimal rescue plan and the coverage scope of the fire-fighting scene according to the fire-fighting situation, and transmits information of the rescue plan and the coverage scope to the monitoring node processor, and the monitoring node processor broadcasts escape signals to the users within the coverage scope. The invention also relates to the system, which comprises the fire-fighting monitors, the monitoring node processor and the fire-fighting center system. With the method and system, the optimal escape mode can be notified to the user in time so that personnel casualties can be reduced, the fire-fighting personnel can be informed in time to take the rescue mode, and the fire-fighting safety problem can be solved.

Owner:SHENZHEN SHENGLU IOT COMM TECH CO LTD

A video data transmission method and device

Inactive | CN109842821A | Improve picture quality | Quality improvement | Selective content distribution | Packet loss | Computer terminal

The embodiment of the invention provides a video data transmission method and a corresponding video data transmission device, which can be applied to the articulated naturality web. The articulated naturality web comprises an articulated naturality web server, an articulated naturality web terminal and an extended multipoint control (XMCU) server; the articulated naturality web server is in communication connection with the articulated naturality web terminal and the XMCU server. The articulated naturality web terminal splits each collected video frame into a plurality of video data packets with packet serial numbers and, each time a video data packet is sent, receives reply confirmation information returned by the XMCU server for that video data packet. If the reply confirmation information comprises the packet serial number of the video data packet, the terminal continues to send the next video data packet; if the reply confirmation information does not comprise the packet serial number, the terminal sends the video data packet to the XMCU server again. This ensures that all video data are sent to the XMCU server and video data packet loss is avoided.

Owner:VISIONVERA INFORMATION TECH CO LTD

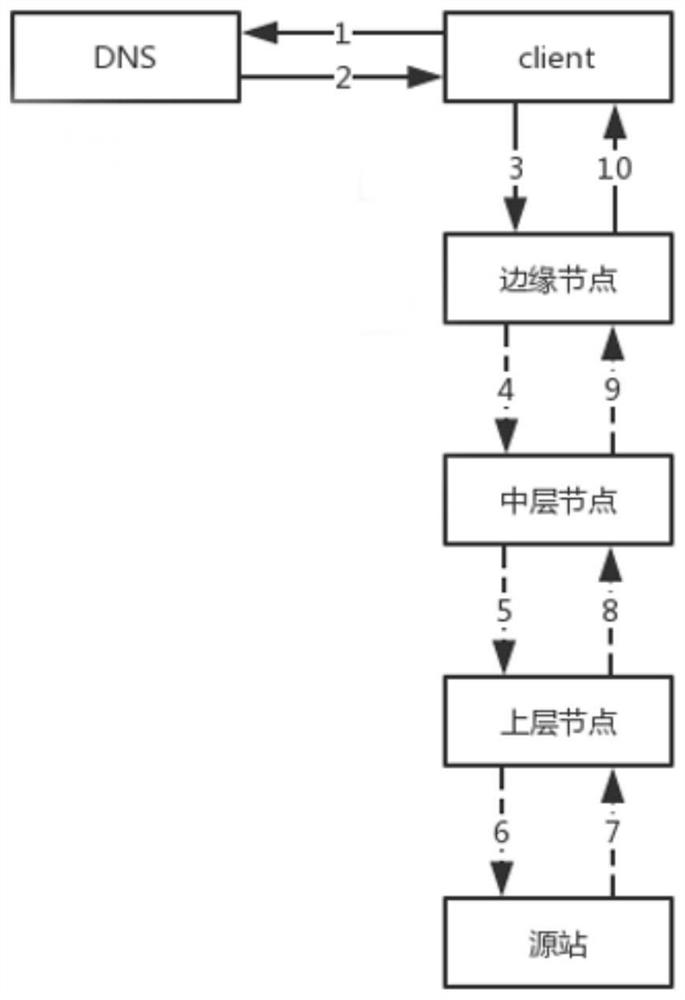

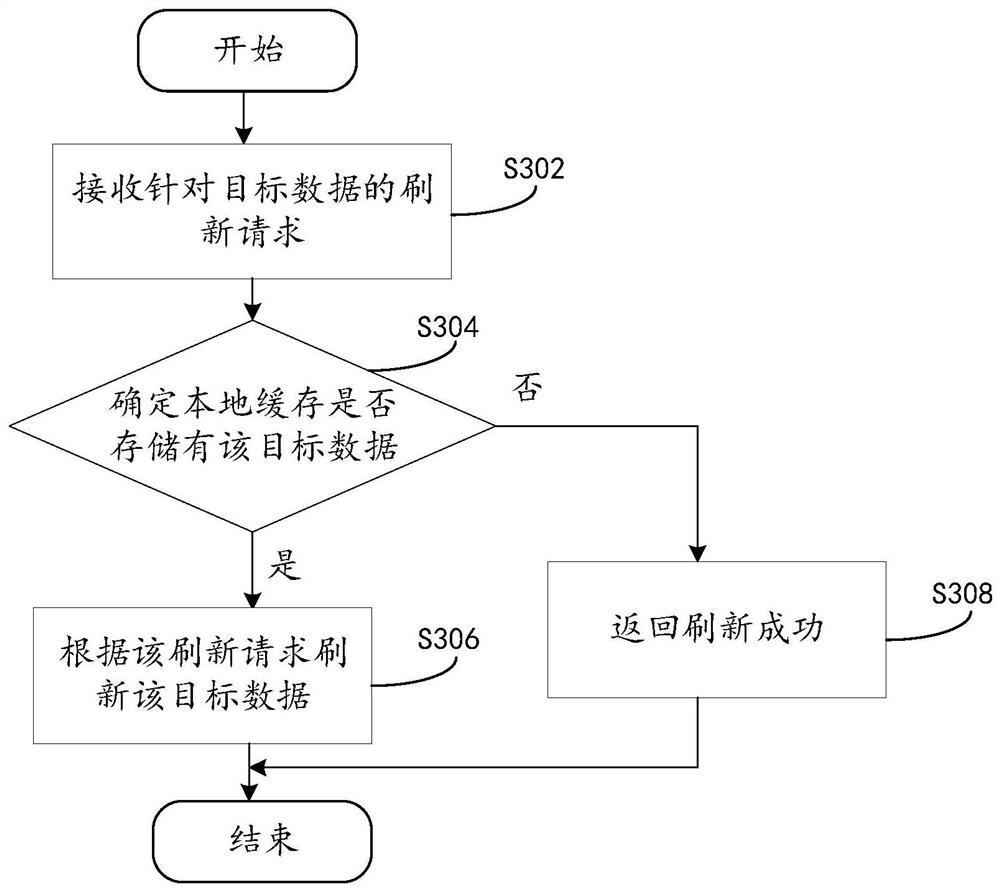

Data refreshing method and device and electronic equipment

Pending | CN112463653A | Reduce cache pressure | Improve efficiency | Memory systems | Information transmission | Software engineering

The invention provides a data refreshing method and device and electronic equipment, and relates to the technical field of data processing. The method comprises the steps of: receiving a refreshing request for target data; determining whether the target data is stored in a local cache; and if the target data is stored in the local cache, refreshing the target data according to the refreshing request. According to the embodiment of the invention, the refreshing request is filtered in advance through the node server, and only the refreshing information of the data existing in the local cache is transmitted to the cache equipment for refreshing, so that invalid refreshing requests are filtered out, the cache pressure of the CDN node is reduced, and the refreshing efficiency and the refreshing success rate are improved.

Owner:BEIJING KINGSOFT CLOUD NETWORK TECH CO LTD
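
The pre-filtering step above reduces to a membership test. A minimal Python sketch follows, assuming refresh targets are identified by string keys such as URL paths and that the node server can enumerate what its local cache holds; the function name `filter_refresh_requests` is hypothetical.

```python
from typing import Iterable, List, Set


def filter_refresh_requests(
    requested_keys: Iterable[str], local_cache_keys: Set[str]
) -> List[str]:
    """Keep only refresh requests whose target is actually cached locally."""
    return [key for key in requested_keys if key in local_cache_keys]
```

Only the returned keys would be forwarded to the cache equipment; requests for data the node never cached are dropped before they add any load.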

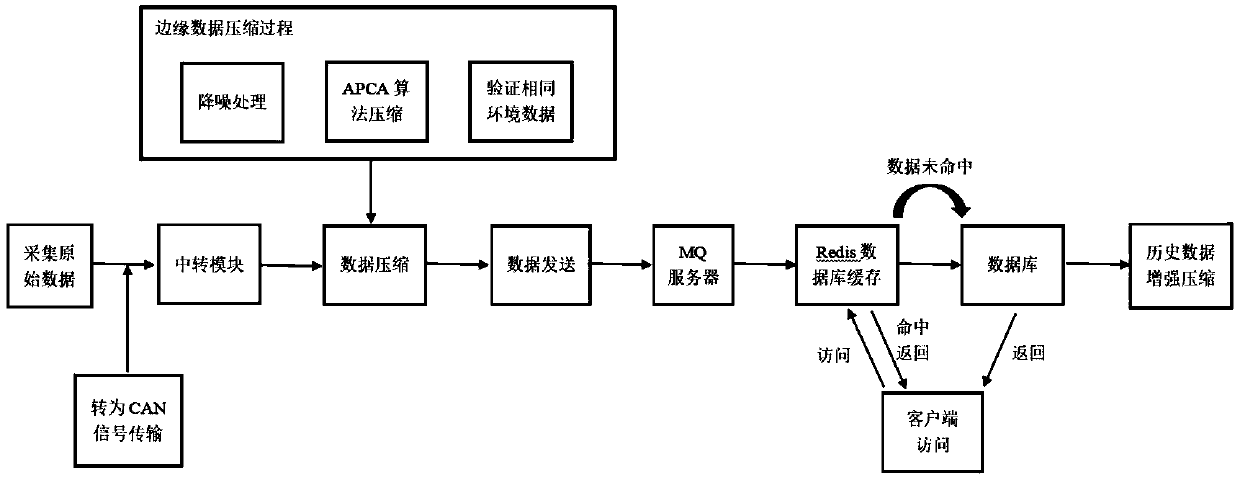

A method of processing temperature sensor data based on edge compression calculations

Active | CN110602178A | High acquisition frequency | Improve accuracy | Digital data information retrieval | Resource allocation | Message queue | Data compression

The invention discloses a method for processing temperature sensor data based on edge compression calculation. According to the invention, edge calculation is introduced into a visual platform system and applied at the sensor data acquisition end to compress data, which is then forwarded to a server by a sending and receiving module. The method comprises the following steps: firstly, processing data at the sensor data acquisition end, segmenting centralized large-batch data compression calculation into small-granularity single-module compression calculation; and secondly, sending the compressed data through the sending and receiving module, so that the pressure on the network bandwidth of the server is reduced. The method reduces the pressure of caching data messages in the message queue and avoids the information loss that easily occurs when the queue becomes fully loaded by caching and processing a large amount of data. Because the data is compressed before being sent, the data acquisition frequency can be increased, the obtained data information is more accurate, information loss is reduced, and data accuracy is improved in production.

Owner:HANGZHOU DIANZI UNIV
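
To make the "compress at the acquisition end, then send" step concrete, here is a small Python sketch. The abstract does not name a compression algorithm or a wire format, so the use of `zlib` over a JSON-encoded batch, and the function names, are assumptions chosen only because they are in the standard library.

```python
import json
import zlib
from typing import List


def compress_readings(readings: List[float]) -> bytes:
    """Compress one small batch of temperature readings before sending."""
    raw = json.dumps(readings, separators=(",", ":")).encode("utf-8")
    return zlib.compress(raw)


def decompress_readings(blob: bytes) -> List[float]:
    """Server-side inverse of compress_readings."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))
```

Each sensor module compresses its own batch, so the message queue holds smaller messages and the server does not have to perform one large centralized compression pass.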

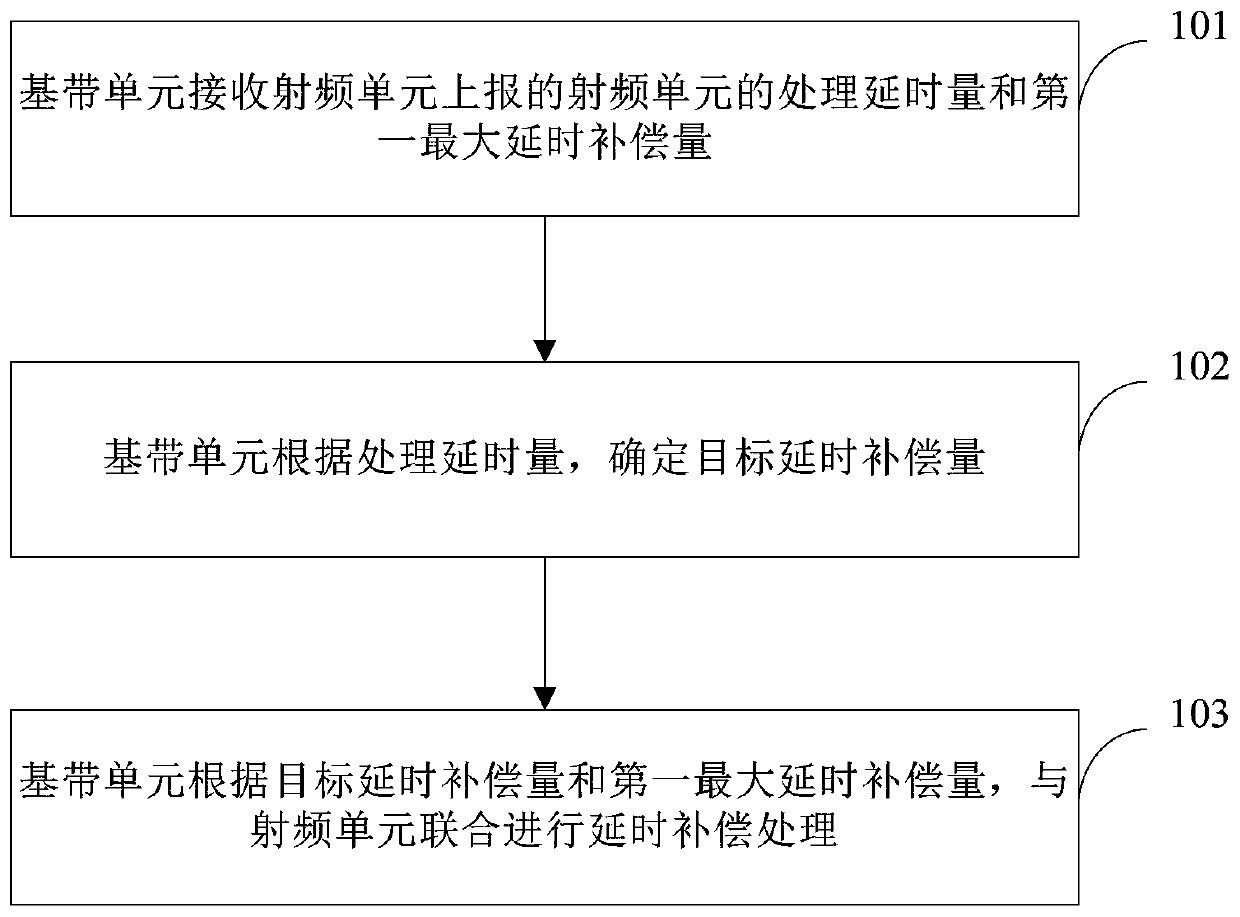

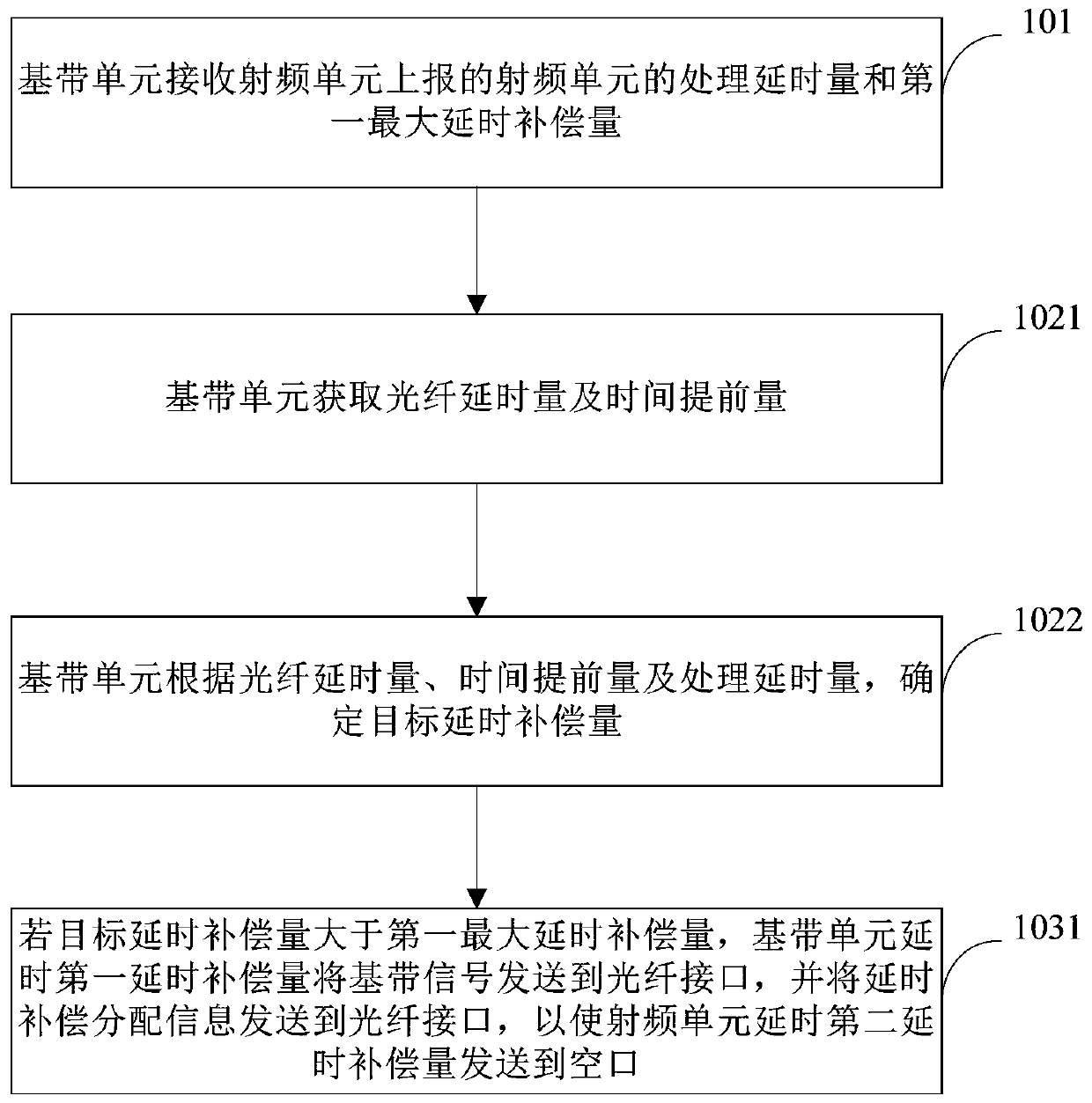

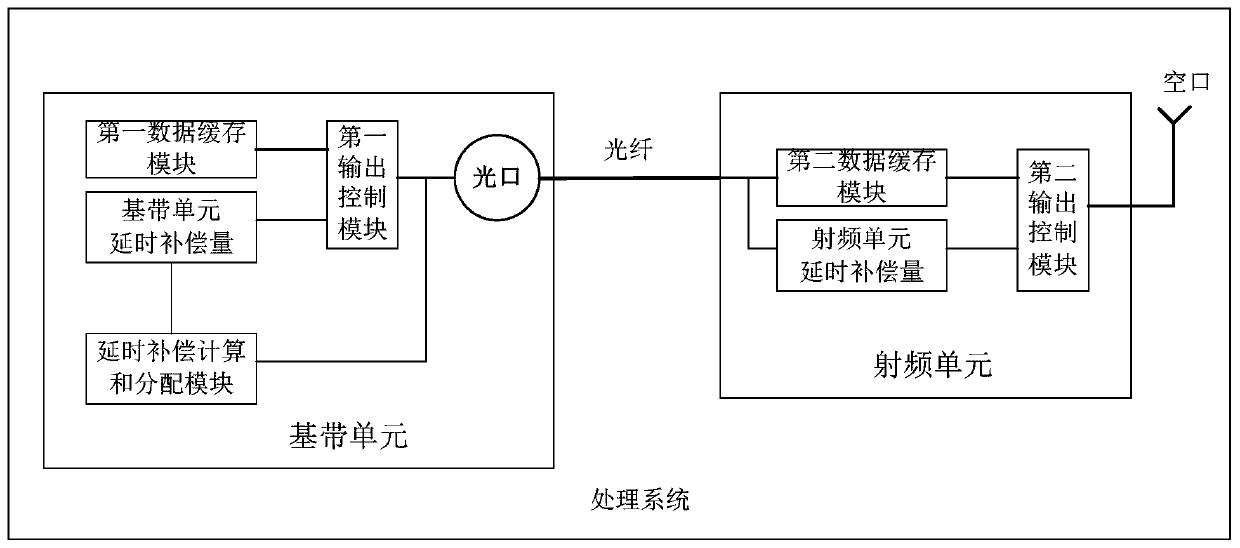

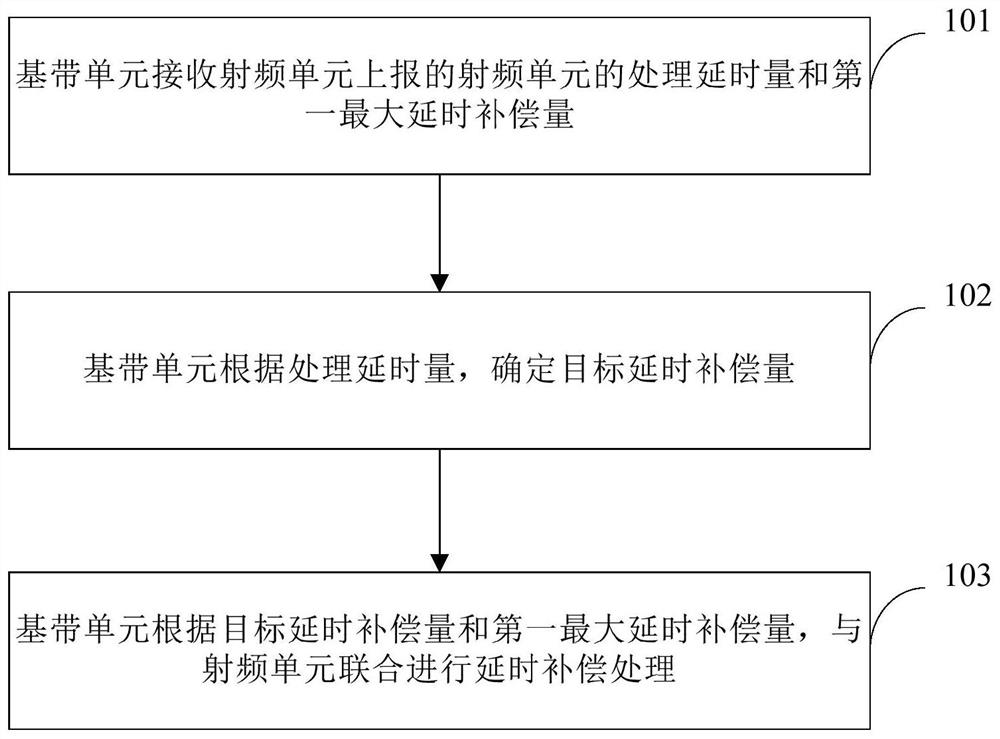

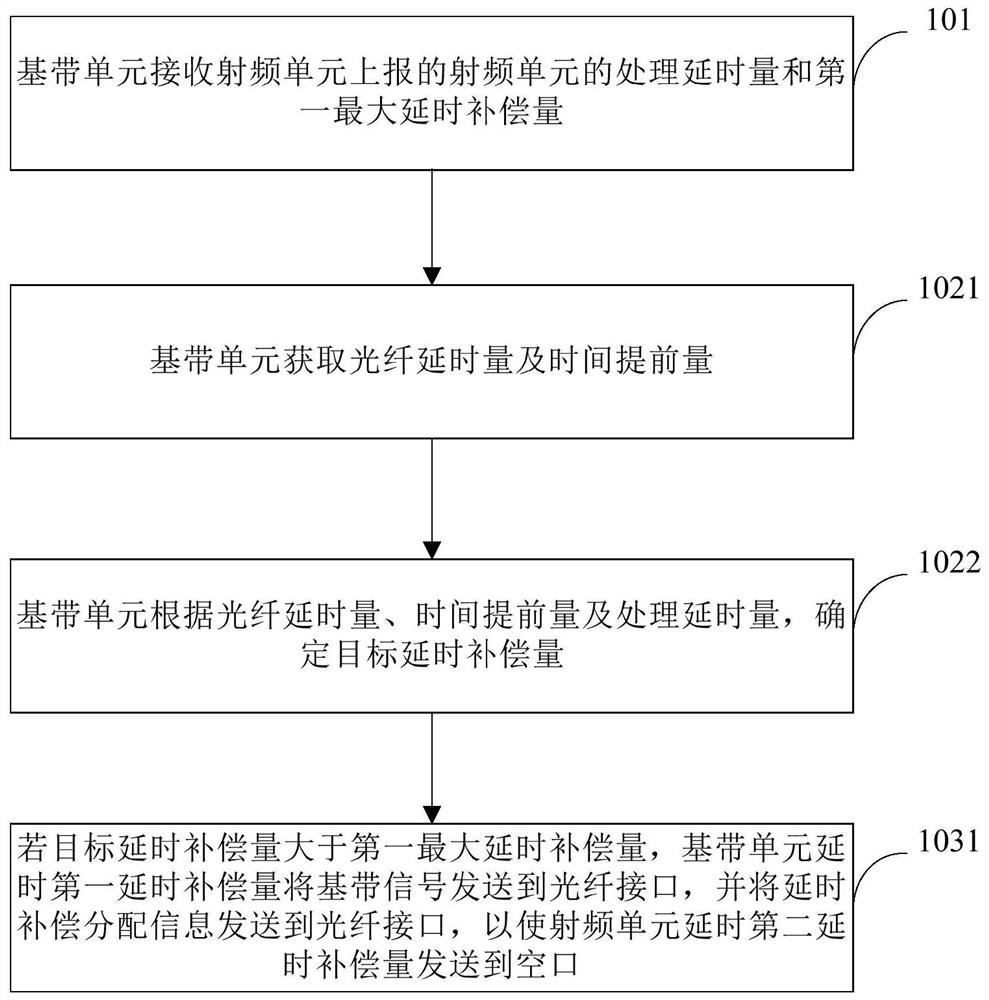

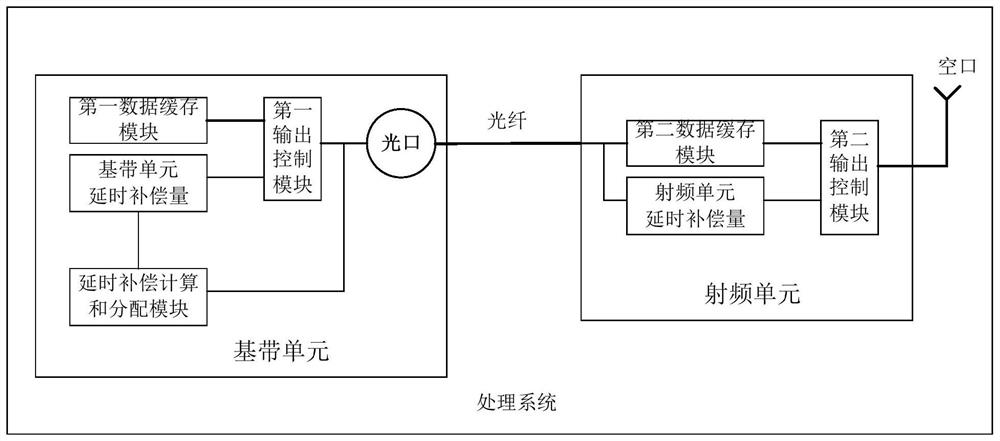

Air interface signal alignment processing method, device and equipment and storage medium

Active | CN110213797A | Reduce resource usage | Reduce cache pressure | Synchronisation arrangement | Network traffic/resource management | Radio frequency | Processing delay

The invention provides an air interface signal alignment processing method, device, equipment and a storage medium. The method comprises the following steps: a baseband unit receives a processing delay amount and a first maximum delay compensation amount of a radio frequency unit, both reported by the radio frequency unit; the baseband unit determines a target delay compensation amount according to the processing delay amount; and the baseband unit, jointly with the radio frequency unit, carries out delay compensation processing according to the target delay compensation amount and the first maximum delay compensation amount. Therefore, the baseband unit can share delay compensation with the radio frequency unit, resource occupation of the radio frequency unit buffer is reduced, and the buffer pressure of the radio frequency unit is reduced.

Owner:TD TECH COMM TECH LTD

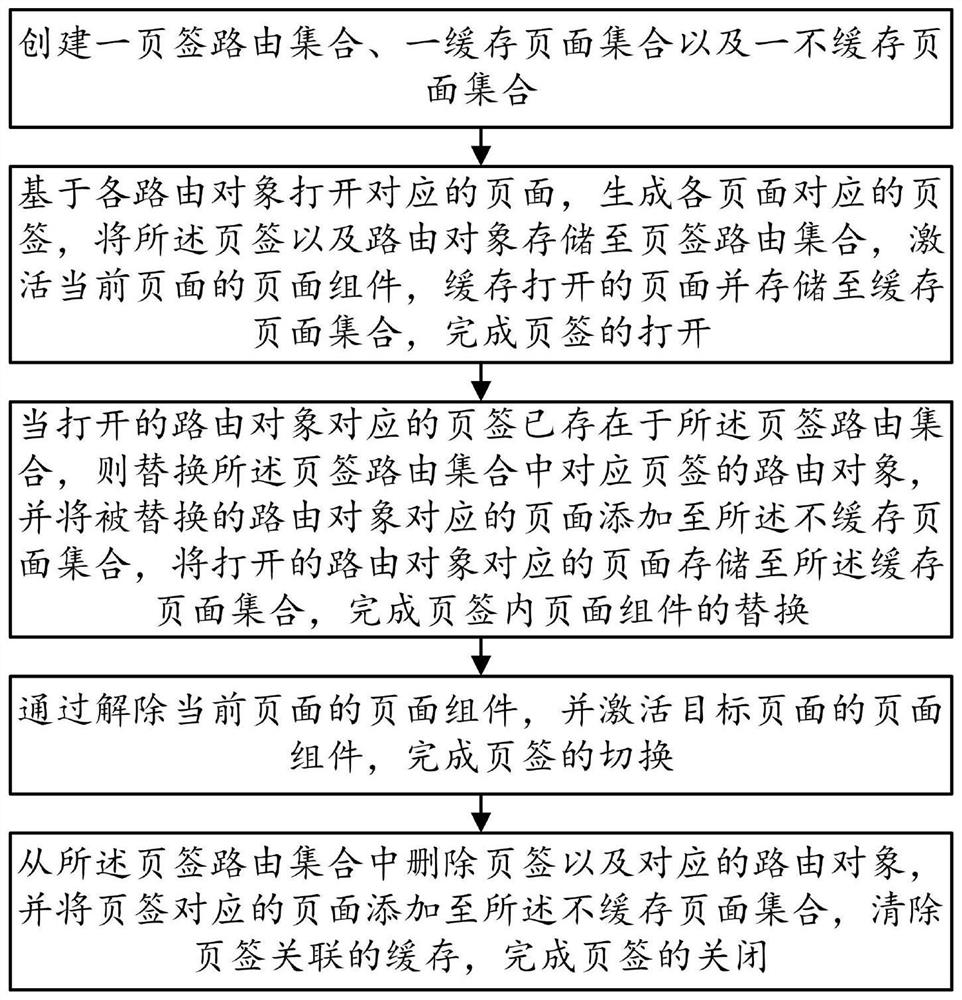

SPA multi-tab management method based on VUE

Active | CN112685663A | Reduce in quantity | Reduce cache pressure | Program loading/initiating | Energy efficient computing | Software engineering | Web development

The invention provides an SPA multi-tab management method based on VUE in the technical field of WEB development. The SPA multi-tab management method comprises the steps of: S10, creating a tab routing set, a cache page set and a non-cache page set; S20, opening the page, generating a tab, storing the tab and the routing object into a tab routing set, activating a page component, caching the opened page, and storing the cached opened page into a cached page set to finish tab opening; S30, replacing the routing object of the tab in the tab routing set with an opened routing object, and storing a page corresponding to the opened routing object into a cache page set to complete page component replacement; S40, switching the activated page components to complete tab switching; and S50, deleting the tabs and the corresponding routing objects from the tab routing set, adding the pages to the non-cached page set, and clearing the cache to finish closing the tabs. The method has the advantages that on the premise that basic functions of the tabs are met, the number of the tabs is greatly reduced, and then the user experience is improved.

Owner:FUJIAN NEWLAND SOFTWARE ENGINEERING CO LTD

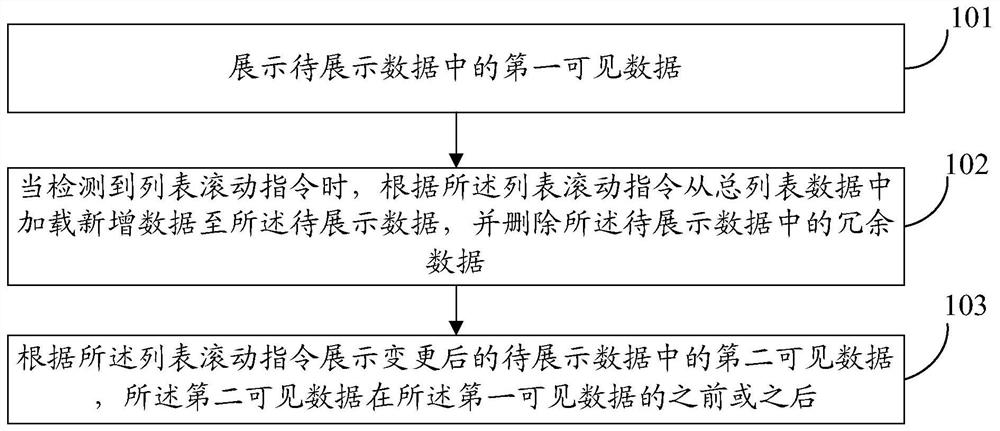

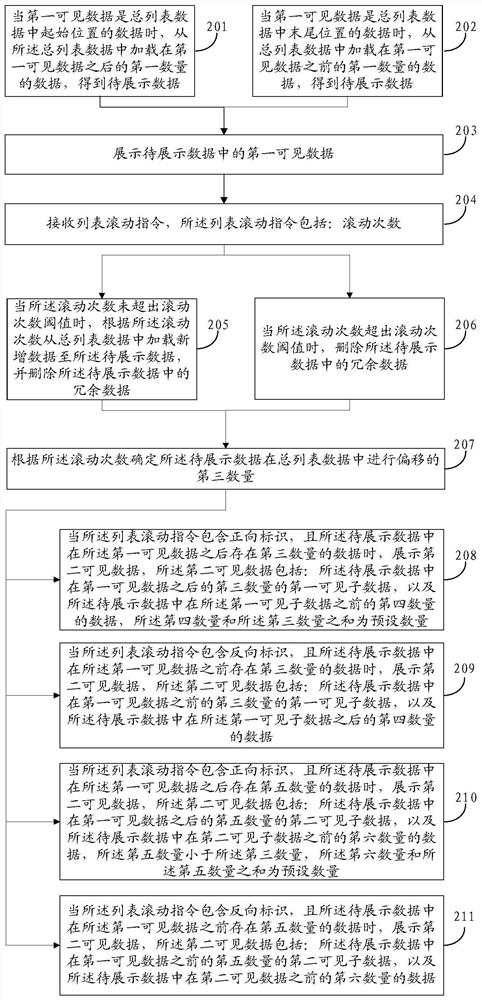

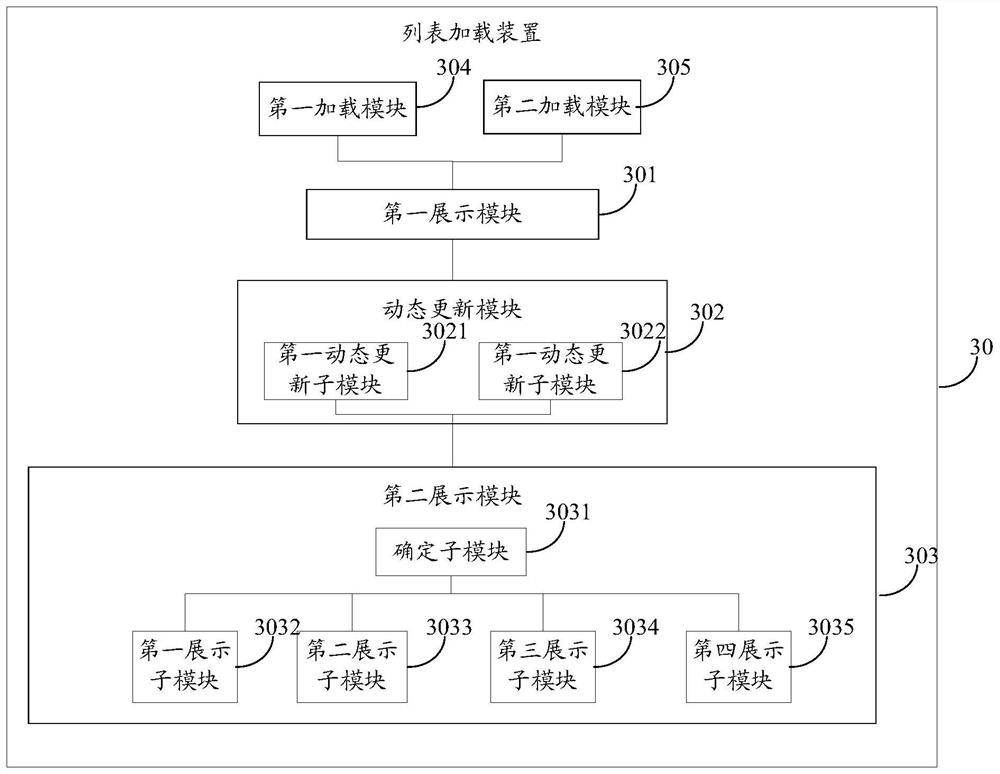

List loading method, terminal equipment, electronic equipment and storage medium

Pending | CN112541140A | Reduce cache pressure | Improve real-time performance | Special data processing applications | Web data browsing optimisation | Computer hardware | Terminal equipment

The invention provides a list loading method and apparatus, an electronic device and a storage medium. The method comprises the steps of displaying first visible data in to-be-displayed data; when a list rolling instruction is detected, loading newly added data from total list data to the to-be-displayed data according to the list rolling instruction, and deleting redundant data in the to-be-displayed data; and displaying second visible data in the changed to-be-displayed data according to the list rolling instruction, wherein the second visible data is in front of or behind the first visible data. According to the method, when the pull-down list rolls, the newly added data is loaded to the to-be-displayed data in advance, and the corresponding redundant data in the to-be-displayed data is deleted, so that the real-time performance of data loading of the pull-down list is improved, and the cache pressure of storing the to-be-displayed data of a browser is reduced.

Owner:BEIJING GRIDSUM TECH CO LTD
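
The load-ahead and trim behaviour described above resembles list windowing. The Python sketch below computes which slice of the total list should be kept as to-be-displayed data for a given scroll position; the function name and the `overscan` parameter (how many extra rows to preload on each side) are assumptions, not terms from the abstract.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def window_for_scroll(
    total: Sequence[T], first_visible: int, visible_count: int, overscan: int
) -> List[T]:
    """Return the rows to keep loaded for the current scroll position.

    Rows outside the returned window are the "redundant data" to delete.
    """
    start = max(0, first_visible - overscan)
    end = min(len(total), first_visible + visible_count + overscan)
    return list(total[start:end])
```

The size of the kept window is bounded by `visible_count + 2 * overscan` no matter how long the total list is, which is what limits the memory held for to-be-displayed data.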

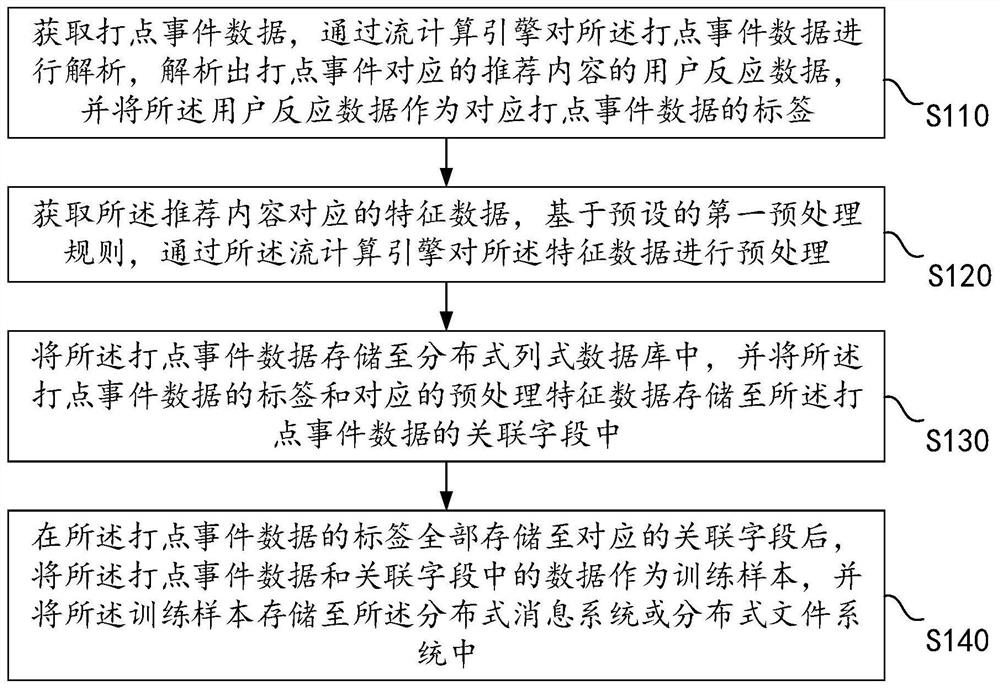

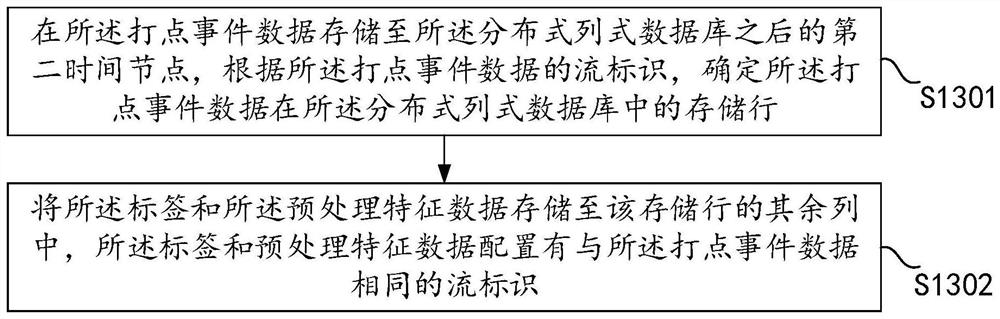

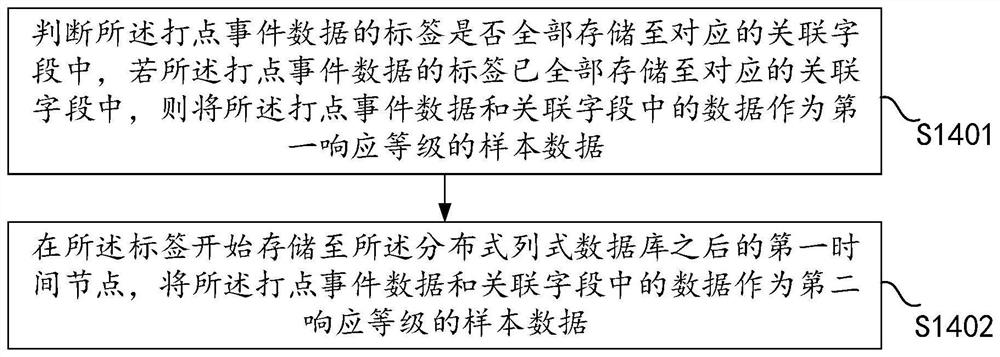

Training sample processing method and device, equipment and storage medium

Pending | CN112925947A | Save memory | Reduce cache pressure | Metadata video data retrieval | Marketing | Distributed File System | Engineering

The embodiment of the invention discloses a training sample processing method and device, equipment and a storage medium. The method comprises the following steps: acquiring dotting event data, analyzing the dotting event data through a stream computing engine, analyzing user response data of recommended content corresponding to a dotting event, and taking the user response data as a label of the corresponding dotting event data; obtaining feature data corresponding to the recommended content, and preprocessing the feature data through a stream computing engine based on a preset first preprocessing rule; storing the dotting event data into a distributed column database, and storing tags of the dotting event data and the corresponding preprocessed feature data into associated fields of the dotting event data; and after the tags of the dotting event data are all stored in the corresponding associated fields, taking the dotting event data and the data in the associated fields as training samples, and storing the training samples in a distributed message system or a distributed file system so as to solve the problem that the training samples cannot be output in real time.

Owner:BIGO TECH PTE LTD

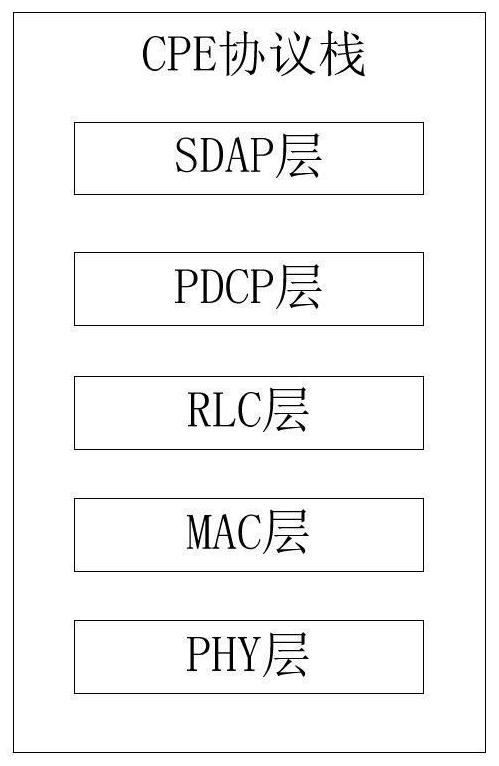

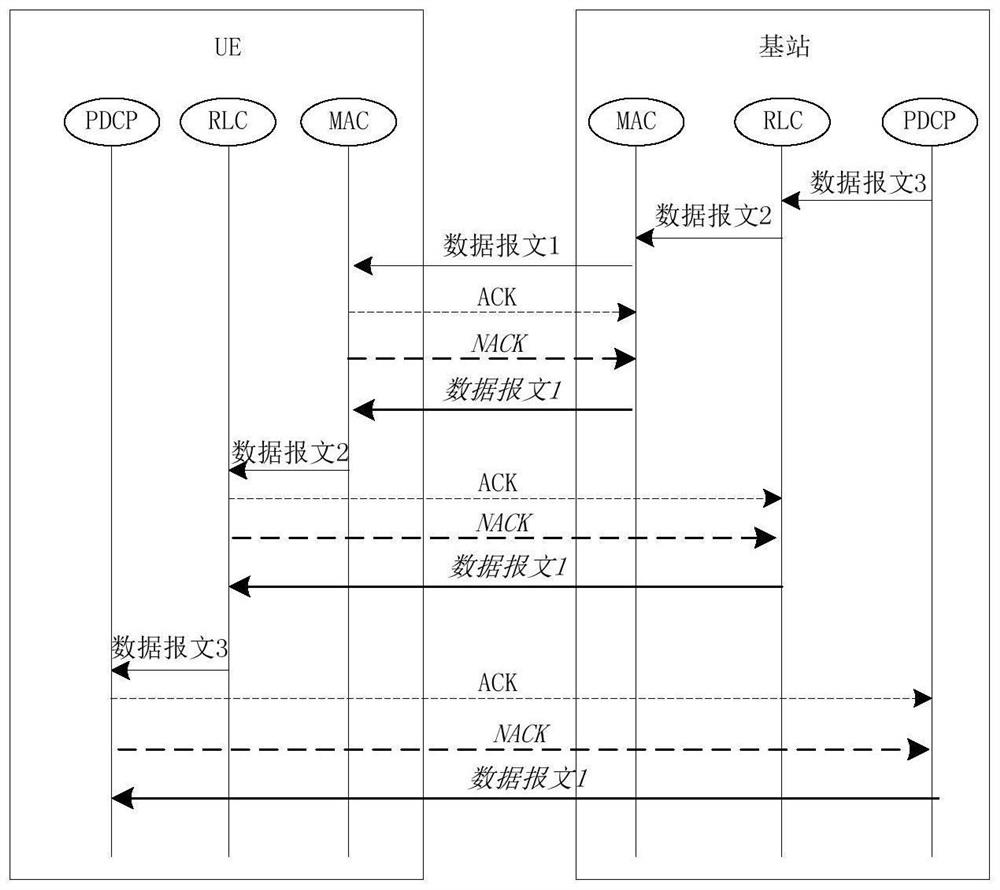

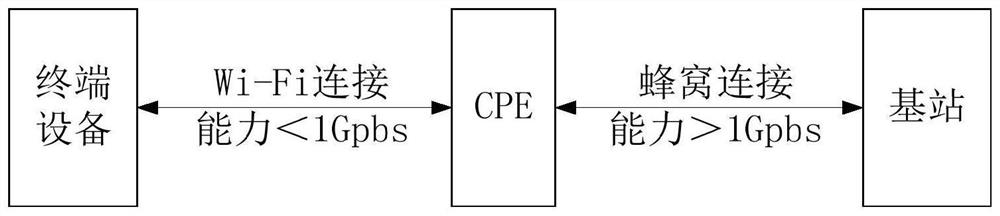

Message transmission method, system and related device

Active | CN113301605A | Reduce cache pressure | Low cost | Network traffic/resource management | Data pack | Engineering

The invention provides a message transmission method, a message transmission system and a related device. The method comprises the steps that a CPE sends a response message of a first message, wherein the response message of the first message is used for indicating a second message and/or a delay time, the second message is a message associated with the first message, the delay time is used for indicating delayed transmission of the second message, and a cache queue of a local area network (LAN) port of the CPE is used for caching data packets of messages; and the CPE receives the second message. According to the embodiment of the invention, traffic control of the CPE is realized without additionally setting equipment cache, and the stability and success rate of data transmission of the CPE are improved.

Owner:CHENGDU OPPO TELECOMM TECH CORP LTD

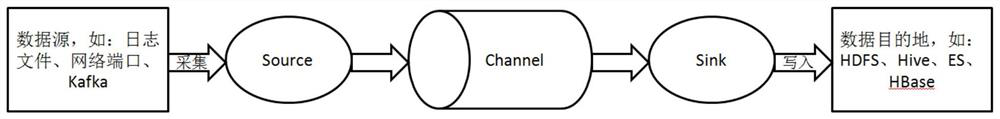

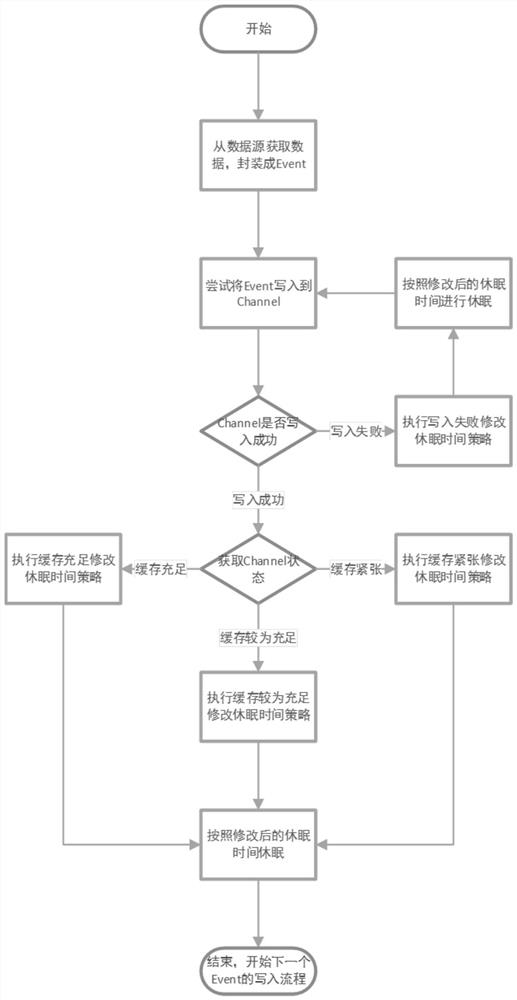

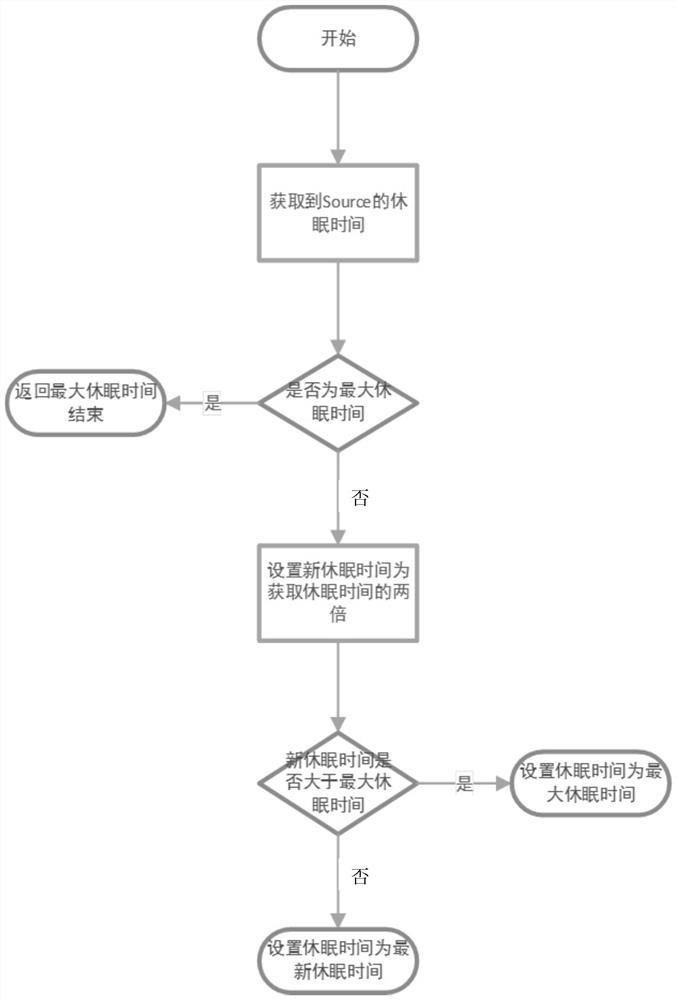

Data acquisition speed control method and device and storage medium

Active | CN111930304A | Reduce load | Reduce cache pressure | Input/output to record carriers | Resource allocation | Computer hardware | Sleep time

The invention discloses a data collection speed control method and device and a storage medium. The method comprises the steps of: collecting data of a data source, and requesting to write the data into a system temporary storage; and adjusting the sleep time of the system between two data acquisitions according to whether the data writing succeeded and the use capacity of the temporary storage. The equipment and the storage medium realize the steps of the data acquisition speed control method. Different dormancy strategies are adopted according to different states, such as the data writing result and the use capacity of the temporary storage, so that the whole data acquisition process can be carried out stably, and the impact on the stability of the whole acquisition process due to a sudden increase or sudden decrease of the acquisition frequency is avoided.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD
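
One possible reading of the sleep-time adjustment above is sketched in Python below. The abstract only says that the sleep time depends on the write result and the storage usage; the doubling and halving policy, the high and low water marks, and the clamping bounds are all assumptions introduced for illustration.

```python
def next_sleep(
    current_sleep: float,
    write_ok: bool,
    usage_ratio: float,
    *,
    min_sleep: float = 0.01,
    max_sleep: float = 5.0,
    high_water: float = 0.8,
    low_water: float = 0.3,
) -> float:
    """Pick the sleep time (seconds) before the next data acquisition."""
    if not write_ok or usage_ratio >= high_water:
        proposed = current_sleep * 2   # storage under pressure: slow down
    elif usage_ratio <= low_water:
        proposed = current_sleep / 2   # plenty of room: speed up
    else:
        proposed = current_sleep       # steady state: keep the current pace
    # Clamp so the acquisition frequency never jumps to an extreme.
    return min(max_sleep, max(min_sleep, proposed))
```

A collector loop would call `next_sleep` after every write attempt and sleep for the returned duration before sampling again.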

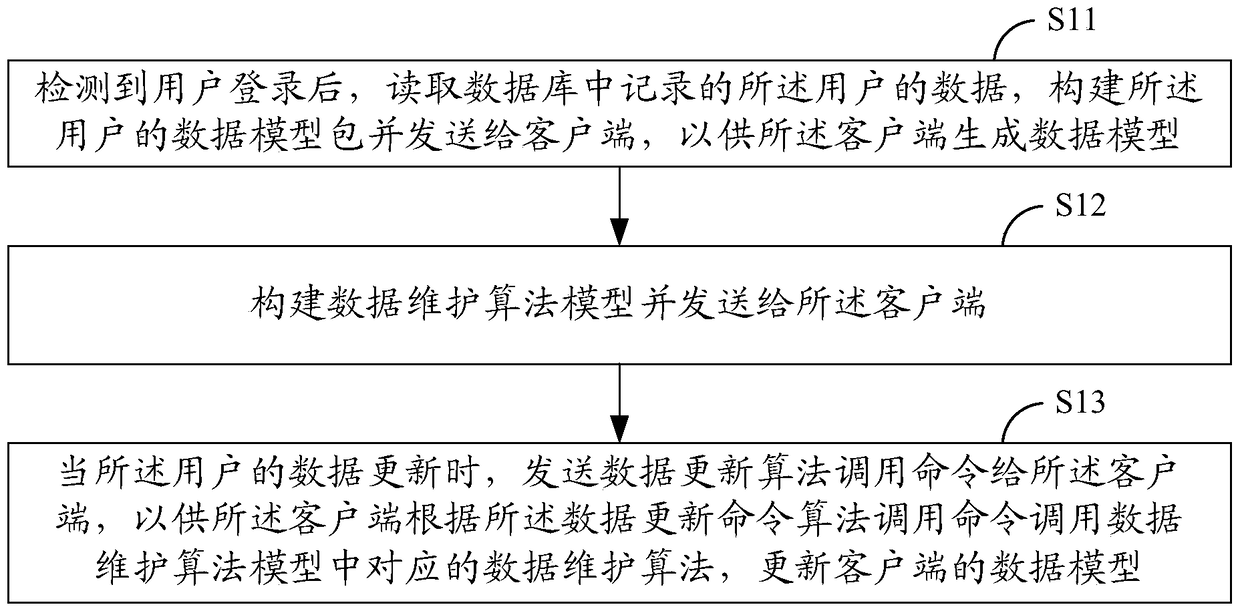

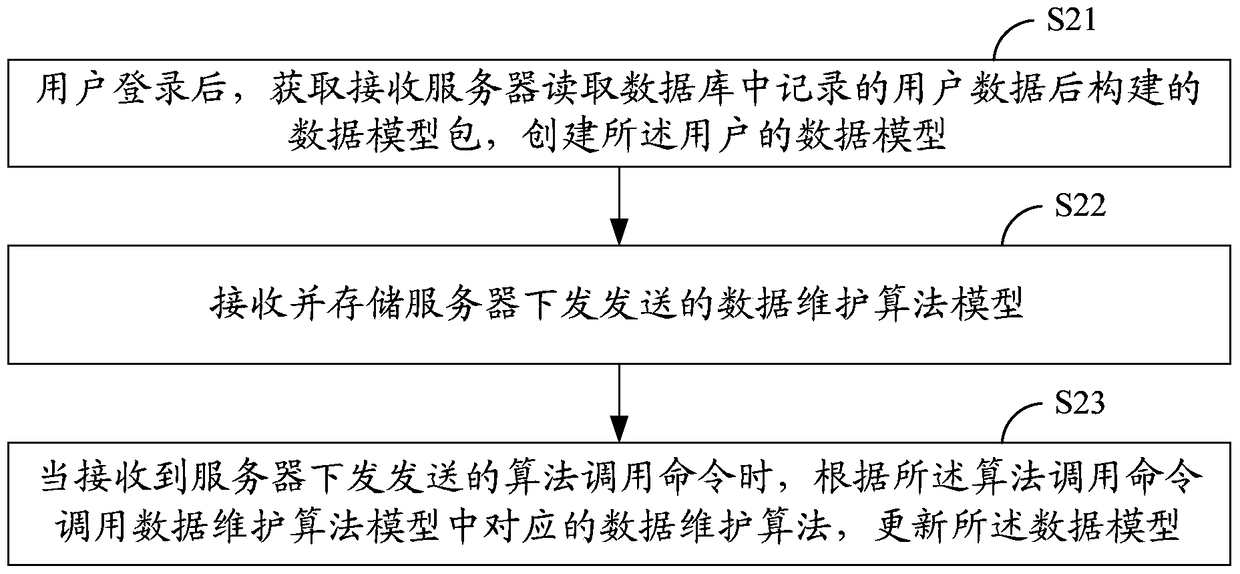

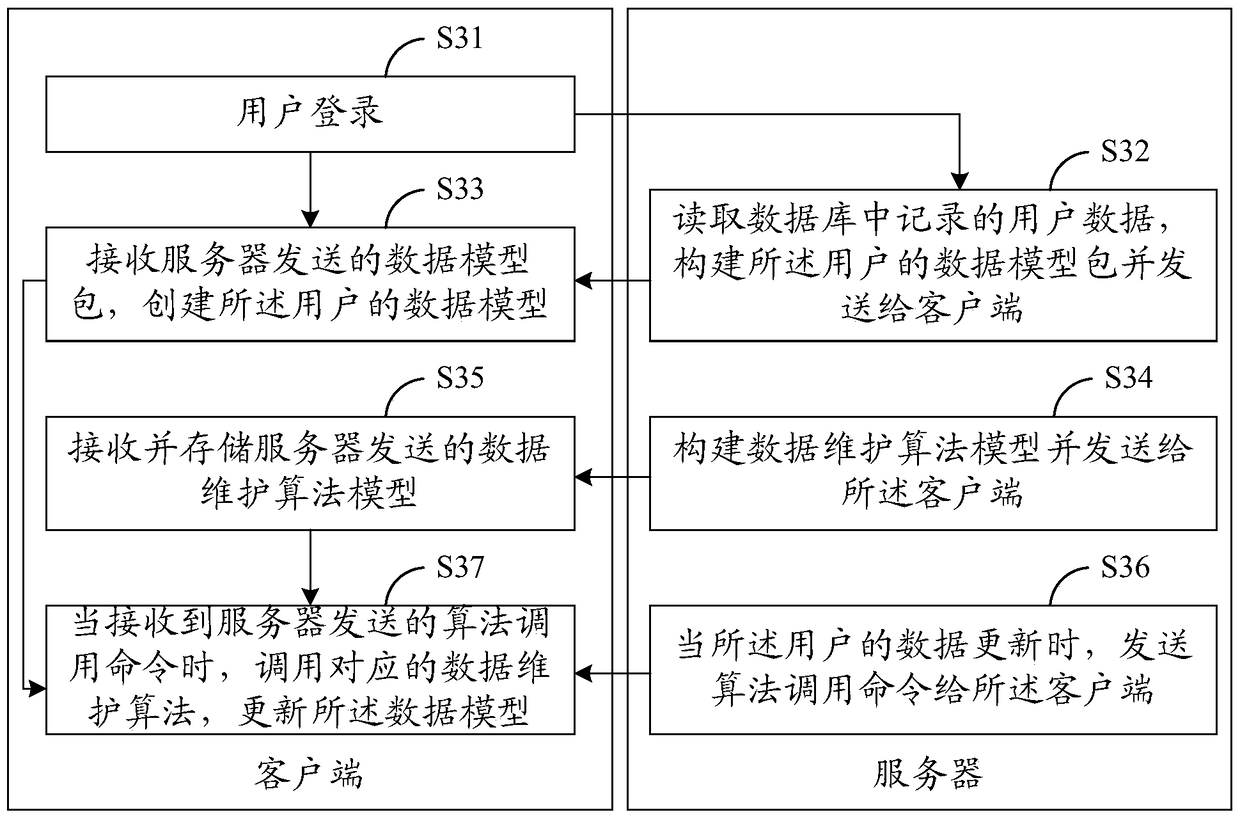

Ways to reduce server cache pressure

Active | CN103995824B | Reduce cache pressure | Improve robustness | Database updating | Transmission | Client-side | Data library

The invention provides a method for relieving caching pressure of a server. The method includes the steps that after it is detected that a user logs in, user data recorded in a database are read, and a data model package for the user is constructed and sent to a client so that the client can generate data models; a data maintenance algorithm model is constructed and sent to the client; when data of the user are updated, an algorithm calling command is sent to the client so that the client can call corresponding data maintenance algorithms in the data maintenance algorithm model according to the algorithm calling command, and the data models of the client are updated. The method for relieving caching pressure of the server can effectively solve the problem of pressure maintenance when the server caches user data.

Owner:GUANGZHOU HUADUO NETWORK TECH

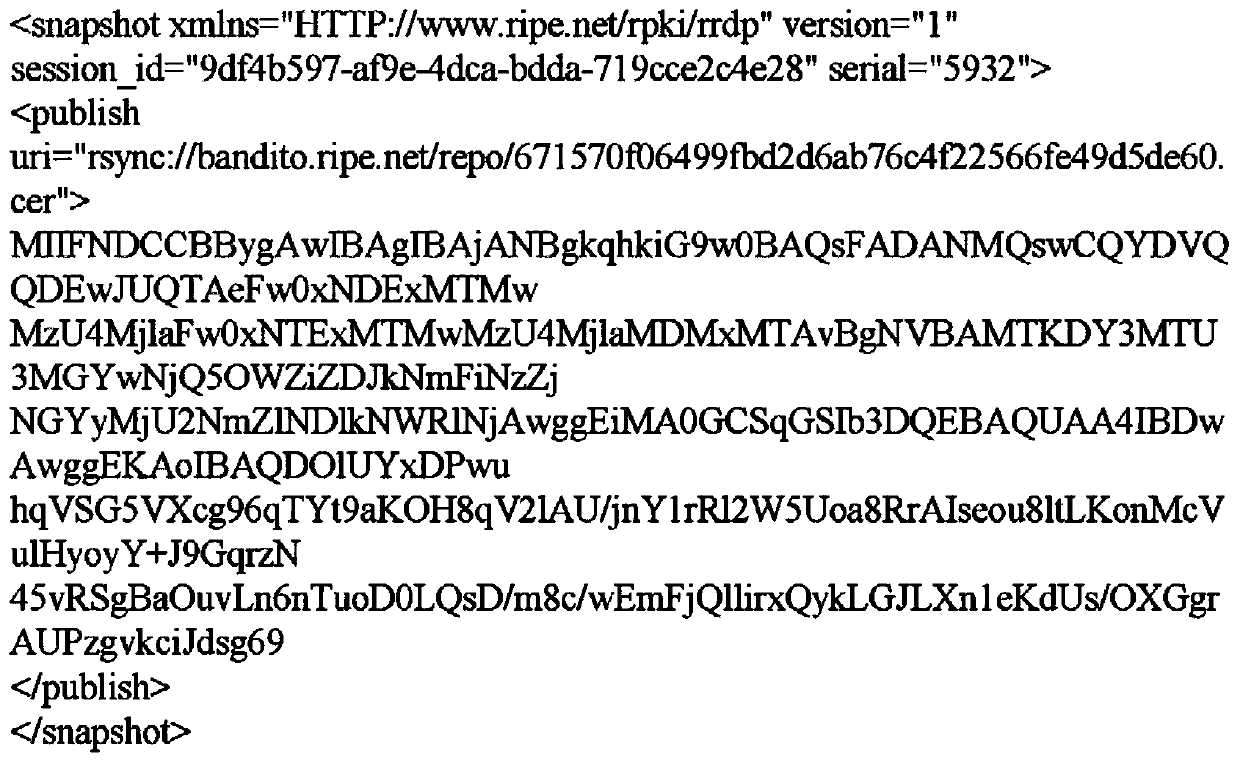

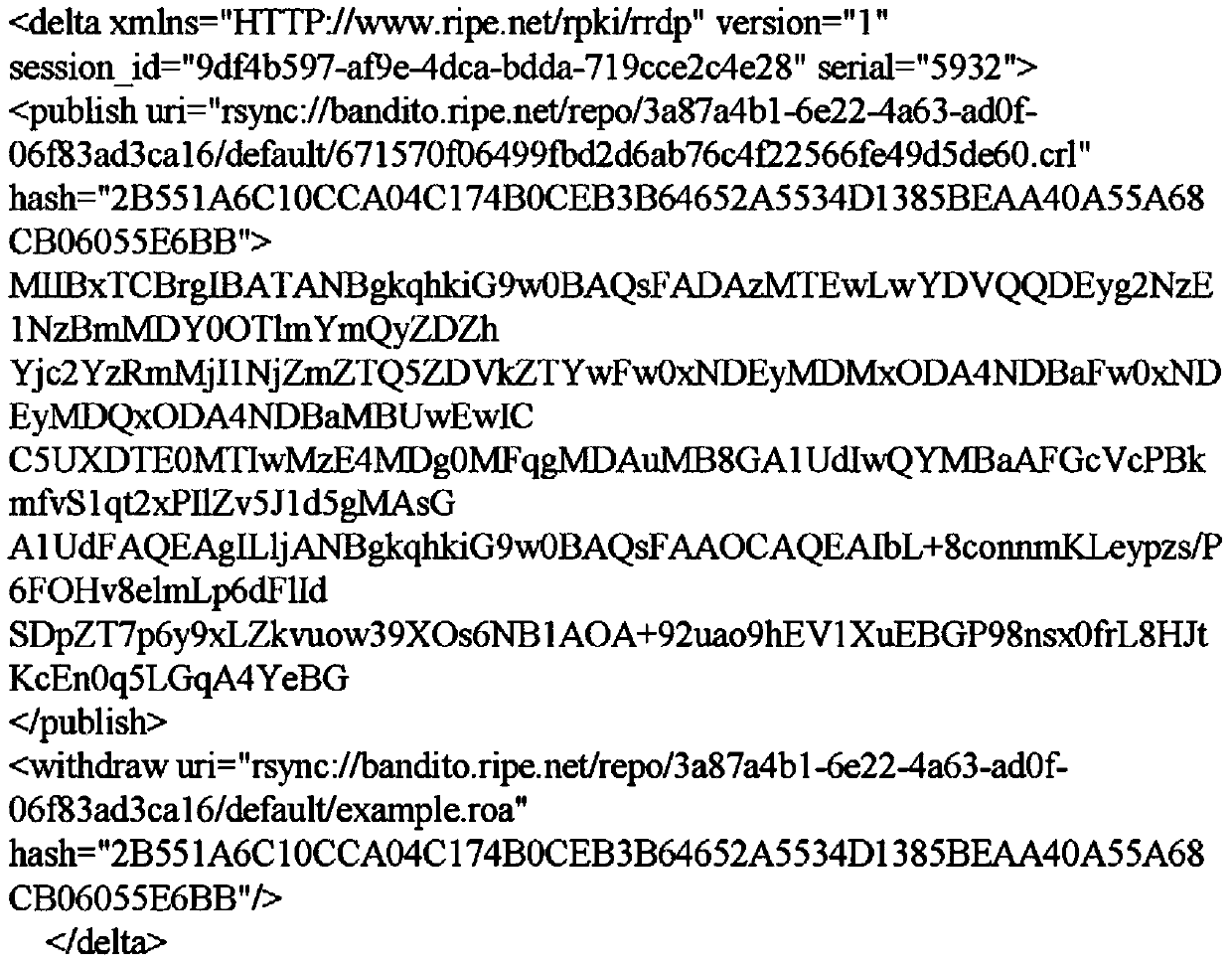

A Method for Incremental Synchronization of RPKI Data Warehouse

Active | CN105634721B | Reduce load | Reduce cache pressure | Public key infrastructure trust models | Data synchronization | Data warehouse

The invention relates to a RPKI (Resource Public Key Infrastructure) data warehouse incremental synchronization method. The method comprises the following steps: 1) an updating element is added in an incremental file, and for an object file of which the content is partially updated, a RPKI relying party performs data synchronization with the RPKI data warehouse through the updating element; 2) an active informing mechanism is added, when the content in the RPKI data warehouse is changed, a new updating informing file is generated and actively sent to the RPKI relying party, thus, the RPKI relying party is enabled to synchronize the updated file; 3) an incremental file monitoring system is established, wherein the system is used for monitoring acquisition condition of the incremental file and deleting the incremental file which is only acquired by a very small amount of the RPKI relying parties. With the method, caching load of the RPKI data warehouse is reduced, and bandwidth pressure during a data synchronization process is relieved.

Owner:CHINA INTERNET NETWORK INFORMATION CENTER

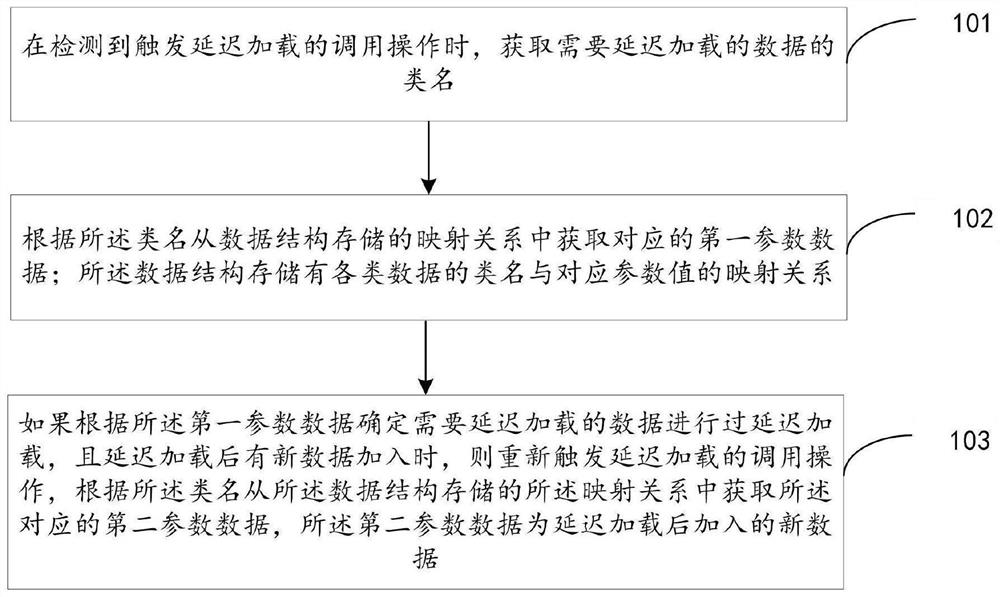

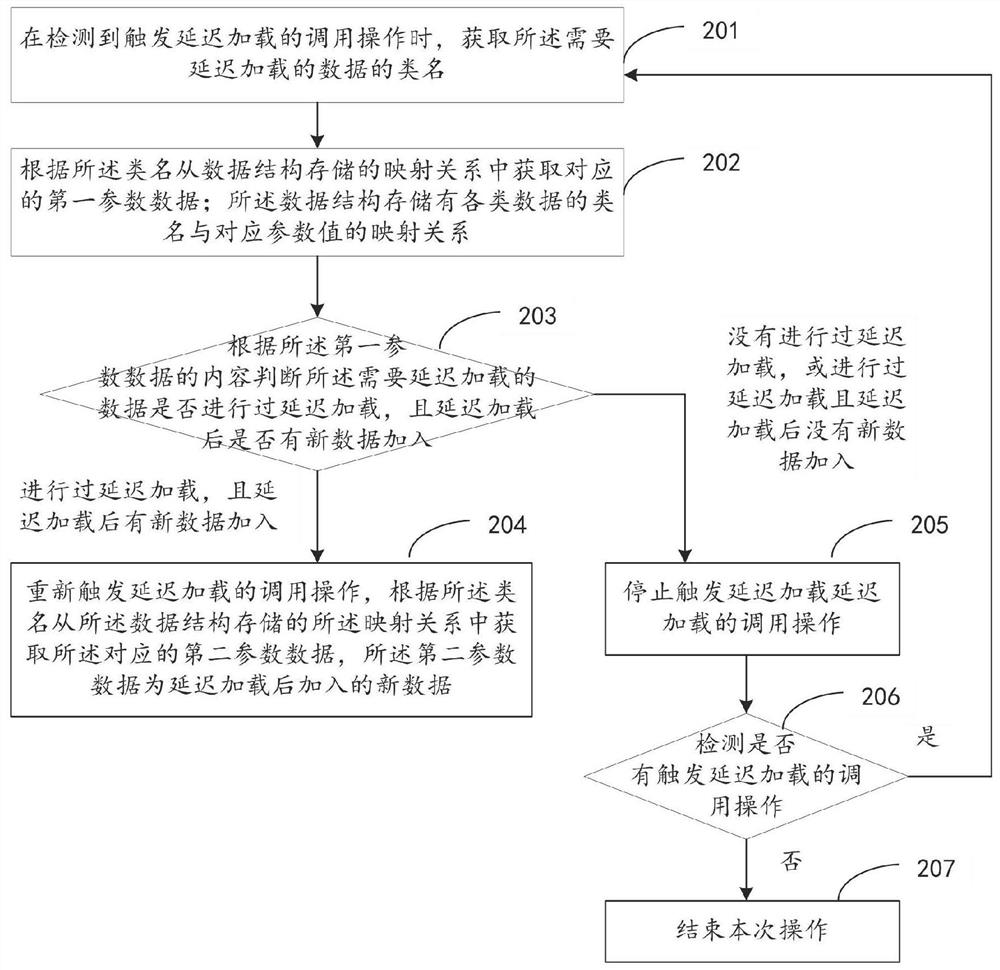

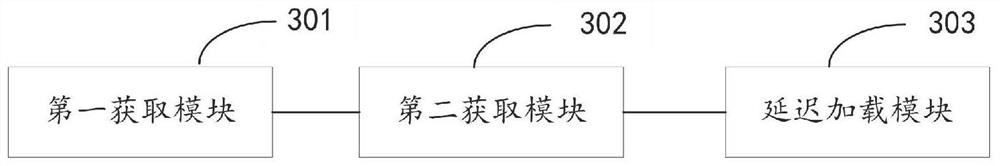

Delay loading detection method and device, electronic equipment, storage medium and product

Pending | CN112765512A | Integrity guaranteed | Reduce local cache pressure | Other databases indexing | Other databases querying | Data needs | Real-time computing

The invention provides a detection method and device for delayed loading, electronic equipment, a storage medium and a computer program product, and the method comprises the steps: obtaining a class name of data needing to be loaded in a delayed manner when a call operation for triggering the delayed loading is detected; acquiring corresponding first parameter data from a mapping relation stored in the data structure according to the class name; if it is determined that data needing to be loaded in a delayed mode is loaded in a delayed mode according to the first parameter data, and when new data are added after the data need to be loaded in the delayed mode, triggering calling operation of the delayed loading again, and obtaining corresponding second parameter data from the mapping relation stored in the data structure according to the class name; the second parameter data are new data added after delayed loading. According to the method and the device, when the calling operation for triggering the delayed loading is detected, whether the calling operation for triggering the delayed loading is re-triggered or not is determined according to the class name of the data so as to obtain new data, so that the integrity of the data is ensured, meanwhile, the local cache pressure is also reduced, and the system performance is improved.

Owner:BEIJING DAJIA INTERNET INFORMATION TECH CO LTD

A message scheduling method, device and network chip

Active | CN111526097B | Solve congestion | Reduce cache pressure | Data switching networks | Engineering | Packet scheduling

Owner:新华三半导体技术有限公司

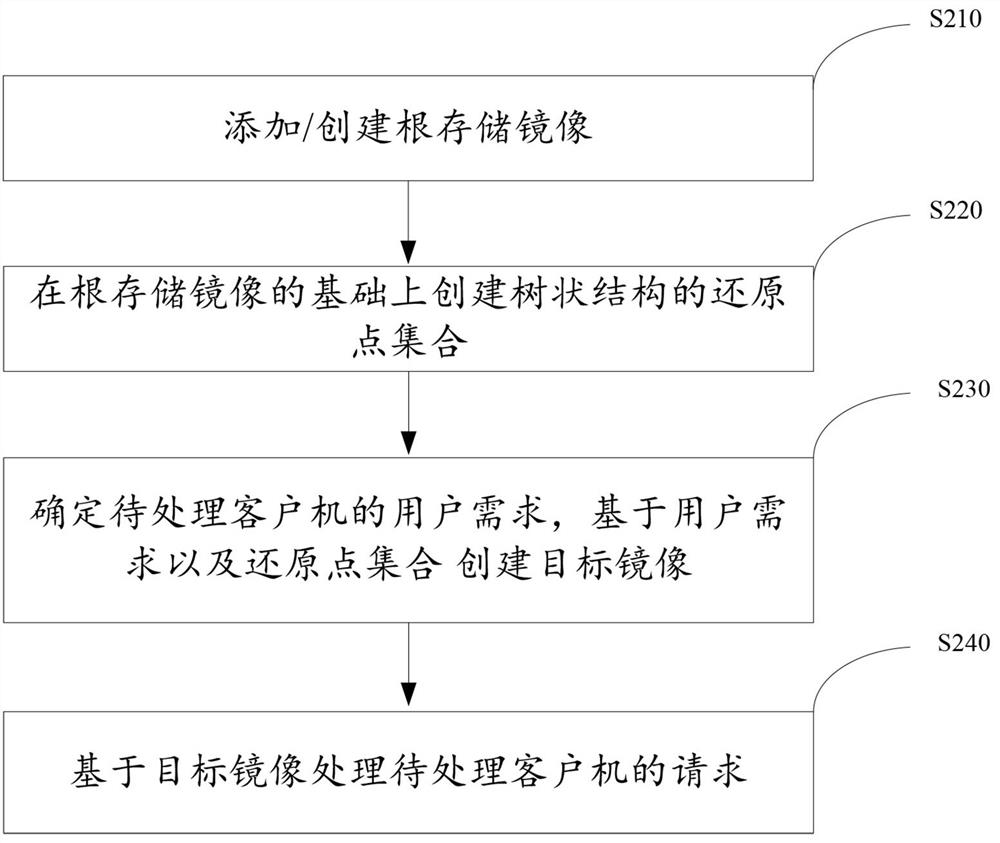

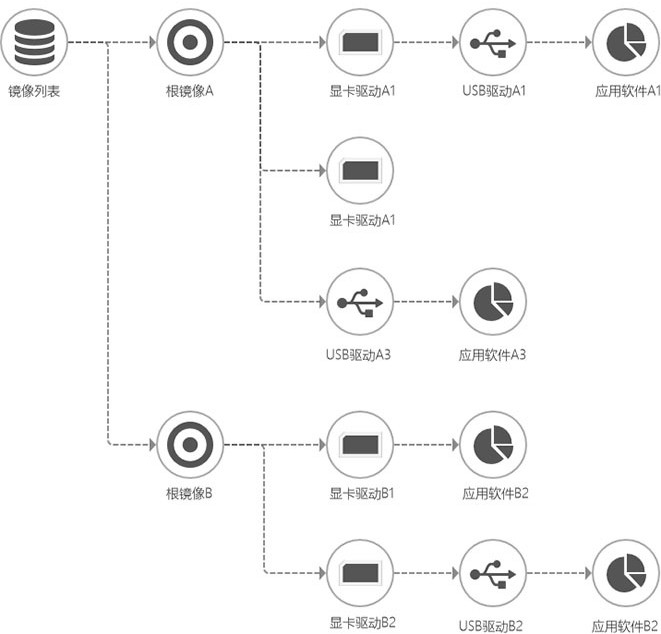

Data processing method and device based on cloud diskless tree mirror image

Active | CN114860350A | Reduce cache pressure | Program loading/initiating | Execution for user interfaces | Computer hardware | Storage security

The invention provides a data processing method and device based on a cloud diskless tree mirror image. By creating a reduction point set of a tree structure, the root storage supports addition of a physical disk and / or data file storage; the restoration point set comprises a plurality of restoration points; determining a user demand for processing a to-be-processed client, and creating a target mirror image based on the user demand and the restoration point set; and processing the request for processing the client to be processed based on the target mirror image. A flexible mirror image configuration mode of multiple restoring points and / or root storage service multiple terminals is achieved, it is guaranteed that universal diskless services can achieve service sharing without repeated storage, mirror image branching processing is carried out on customized services, the effects of reducing server storage pressure and improving storage safety are achieved, and user experience is improved. The diskless tree-shaped storage technology can be generally used for application scenes of server resource hot standby, dynamic load transfer, dynamic load balance and the like.

Owner:杭州子默网络科技有限公司

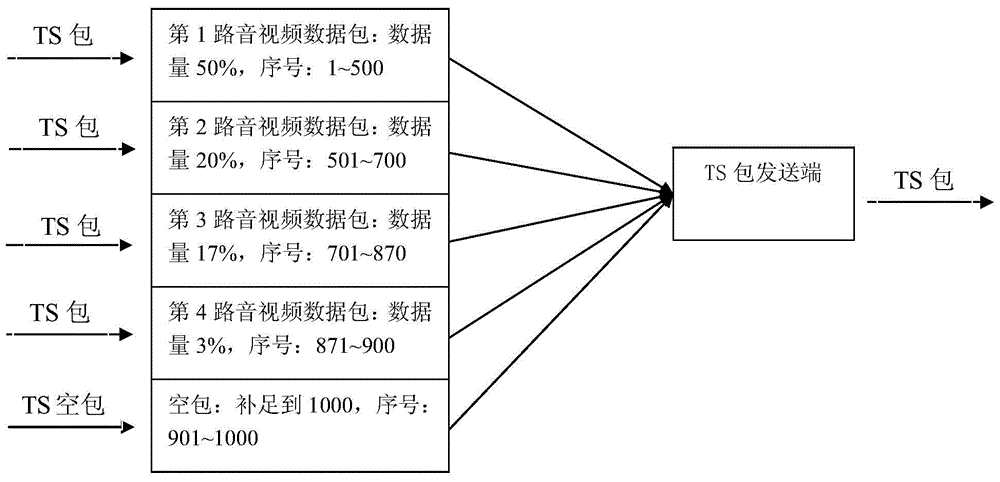

A method for evenly distributing code streams of each program when constructing multi-program TS streams

Active | CN104185035B | Reduce cache pressure | Increased cache pressure | Selective content distribution | Network packet | Broadcasting

The invention relates to a method for achieving uniform distribution of all paths of program code streams in the construction process of multi-program TS streams. Each time TS packets are transmitted, N memory blocks are allocated to the N paths of audio/video data packets that need to be transmitted, and list information and an integral number of TS packets are stored in each memory block. At the transmitting moment, the total number of TS packets in the N memory blocks is counted, the percentage of the total held by each memory block is calculated, and a range of consecutive serial numbers is allocated to each memory block according to the corresponding percentage. A uniformly distributed random number smaller than the total number of TS packets is generated, and a TS packet is read from the memory block whose serial number range contains the generated random number and is transmitted. Uniformly distributed random numbers are generated repeatedly until all the TS packets are transmitted. Thus, sudden mass data transmission in a certain path of program is avoided, the stability of broadcasting is improved, and the audio/video data caching pressure at the receiving end of a cable television is relieved.

Owner:XI AN JIAOTONG UNIV
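
The selection step described in the abstract above can be sketched in Python as follows. This is only an illustration under stated assumptions, not the patented implementation: the function name `interleave_ts_packets`, the use of in-memory queues to stand in for the memory blocks, and the choice to recount the remaining packets before every draw are all hypothetical.

```python
import random
from collections import deque


def interleave_ts_packets(blocks, rng=None):
    """Interleave packets from N blocks by proportional random selection.

    `blocks` is a list of lists; each inner list holds the TS packets of one
    program in transmission order. Returns a list of (block_index, packet).
    """
    rng = rng or random.Random()
    queues = [deque(block) for block in blocks]
    remaining = sum(len(q) for q in queues)
    sent = []
    while remaining > 0:
        # Draw a uniform integer below the number of packets still queued.
        r = rng.randrange(remaining)
        # Each block owns a consecutive range of serial numbers whose width
        # equals its current packet count; find the range that contains r.
        upper = 0
        for index, queue in enumerate(queues):
            upper += len(queue)
            if r < upper:
                sent.append((index, queue.popleft()))
                break
        remaining -= 1
    return sent
```

Because a block's range is as wide as its packet count, a program with more queued packets is picked proportionally more often, while the order of packets within each program is preserved.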

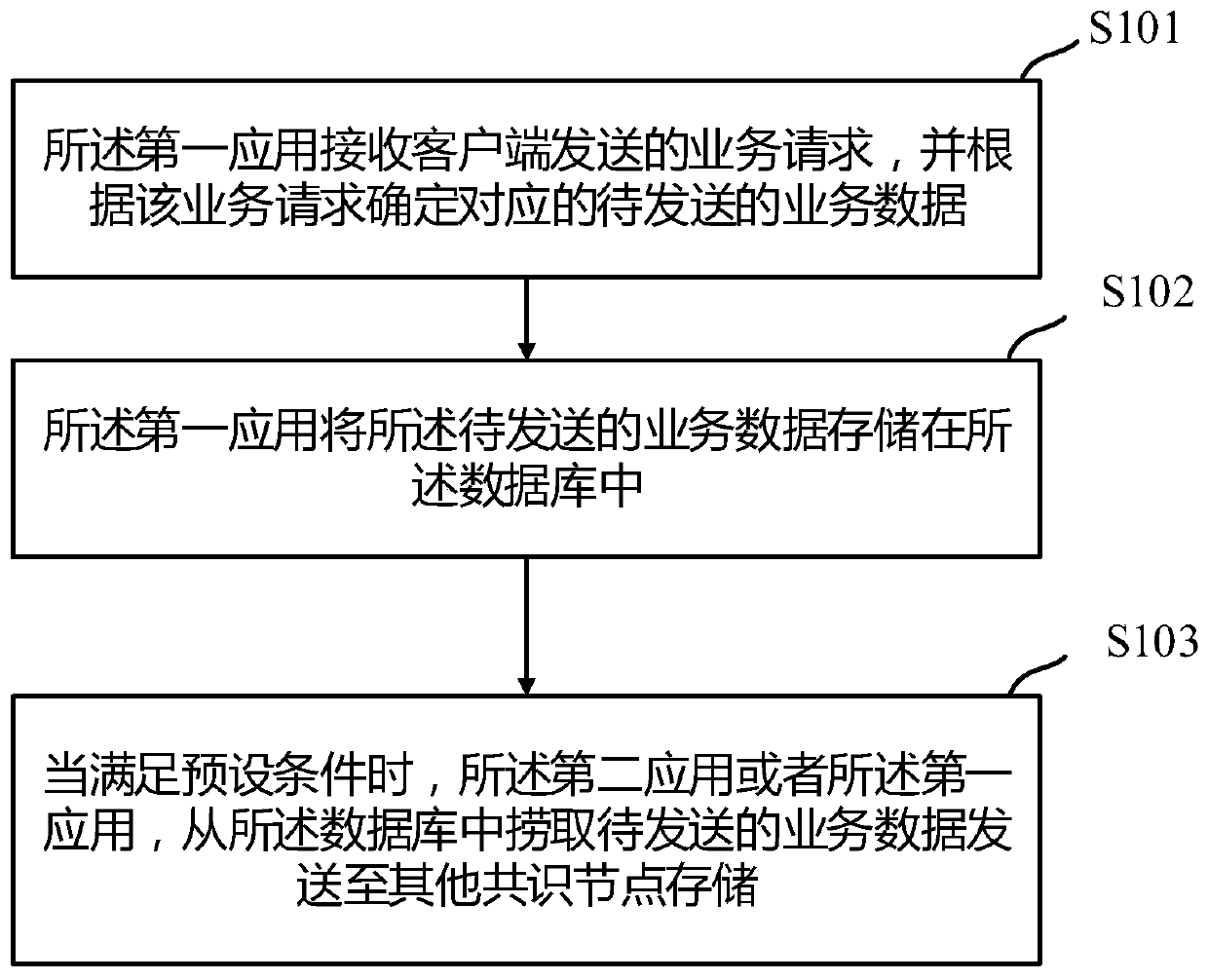

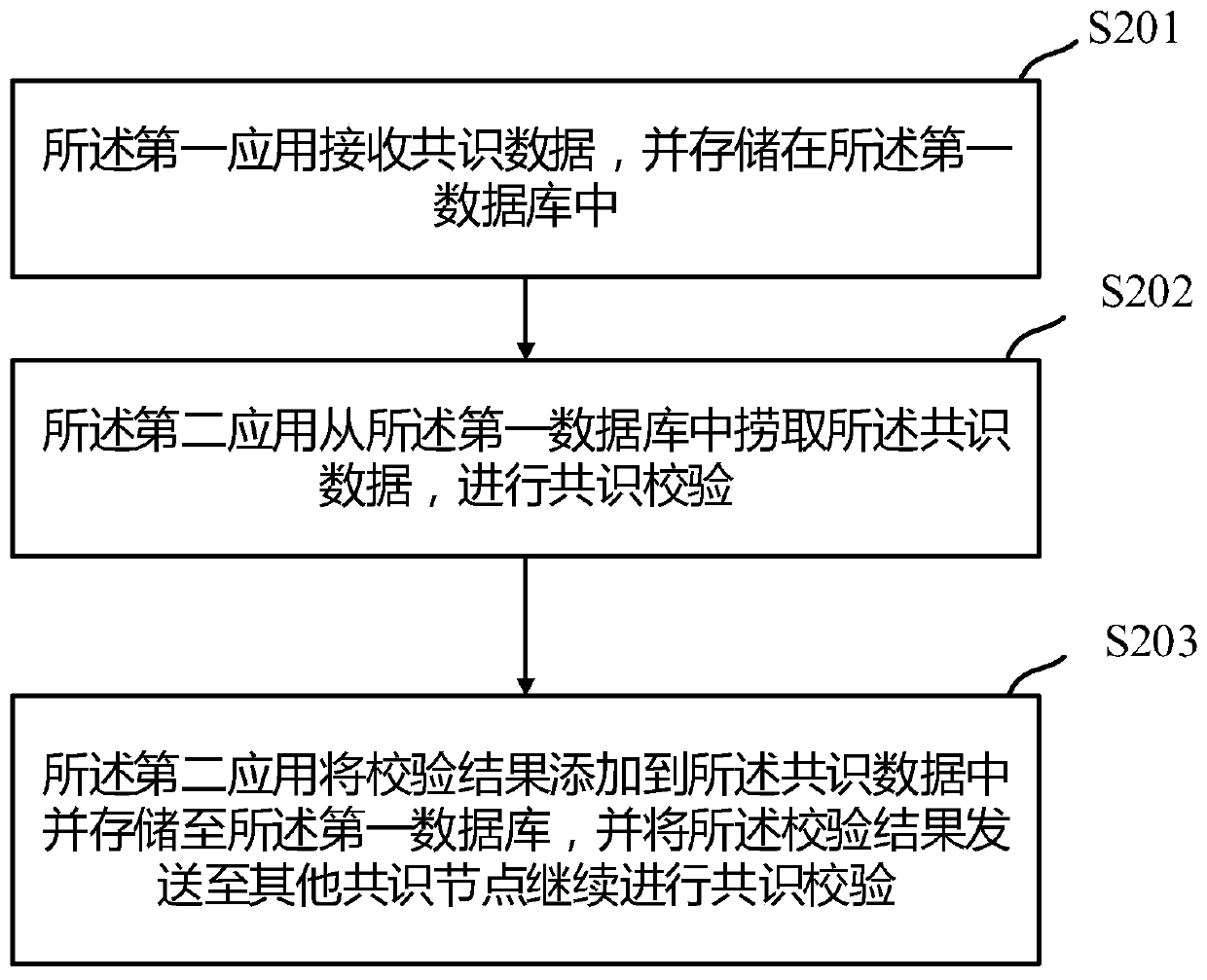

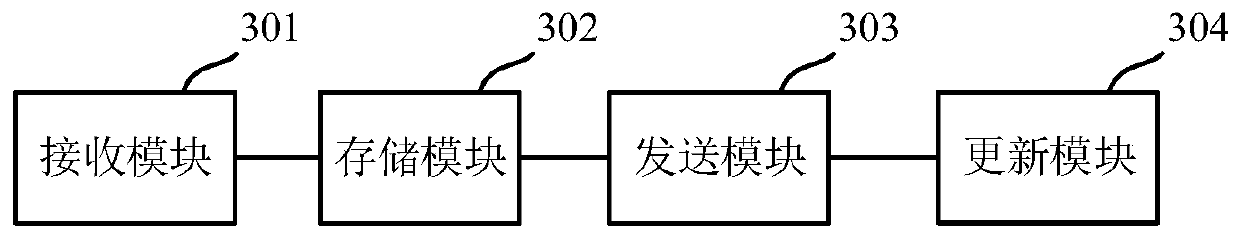

A blockchain business acceptance and business consensus method and device

ActiveCN107395665BReduce cache pressureReduce occupancySpecial data processing applicationsSecuring communicationBusiness dataConsensus

This application discloses a blockchain business acceptance and business consensus method and device. The consensus node includes a first application, a second application, and a database; the database stores the business data processed by the first application and the second application in order to reduce the cache pressure of the consensus node. With the method provided by the embodiments of this application, once the consensus node determines the business data corresponding to a business request, it can store the business data in the database to await sending to other consensus nodes. The cache occupancy of the consensus node is therefore lower, which increases the number of business requests the node can process per unit time and improves the efficiency with which consensus nodes perform consensus verification and process business requests.

Owner:ADVANCED NEW TECH CO LTD
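
The idea of parking accepted business data in a database instead of holding it in the node's cache might look roughly like the Python sketch below. Everything in it is an assumption made for illustration: the class name `ConsensusNode`, the table layout, the split of duties between `accept` (standing in for the first application) and `take_unsent` (standing in for the second), and the use of an in-memory SQLite database in place of whatever database the application actually uses.

```python
import sqlite3


class ConsensusNode:
    """Toy consensus node that stores accepted business data in a database."""

    def __init__(self):
        # ":memory:" keeps the sketch self-contained; a real node would
        # presumably use a persistent database.
        self.db = sqlite3.connect(":memory:")
        self.db.execute(
            "CREATE TABLE business ("
            "id INTEGER PRIMARY KEY, data TEXT NOT NULL, sent INTEGER DEFAULT 0)"
        )

    def accept(self, request: str) -> int:
        """Determine the business data for a request and store it at once."""
        data = request.strip()  # hypothetical stand-in for real parsing
        cur = self.db.execute("INSERT INTO business (data) VALUES (?)", (data,))
        self.db.commit()
        return cur.lastrowid

    def take_unsent(self, limit: int = 100):
        """Fetch stored business data that still has to go to other nodes."""
        rows = self.db.execute(
            "SELECT id, data FROM business WHERE sent = 0 ORDER BY id LIMIT ?",
            (limit,),
        ).fetchall()
        self.db.executemany(
            "UPDATE business SET sent = 1 WHERE id = ?", [(row[0],) for row in rows]
        )
        self.db.commit()
        return [row[1] for row in rows]
```

The node keeps nothing in process memory between `accept` and `take_unsent`, which is the property the abstract credits with lowering cache occupancy.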

Air interface signal alignment processing method, device, equipment and storage medium

ActiveCN110213797BReduce cache pressureReduce resource usageSynchronisation arrangementNetwork traffic/resource managementAir interfaceRadio frequency

The present application provides a processing method, device, equipment and storage medium for air interface signal alignment. The method comprises: the baseband unit receives the processing delay amount and the first maximum delay compensation amount of the radio frequency unit, both reported by the radio frequency unit; the baseband unit determines a target delay compensation amount according to the processing delay amount; and the baseband unit performs delay compensation processing jointly with the radio frequency unit according to the target delay compensation amount and the first maximum delay compensation amount. Because the baseband unit and the radio frequency unit share the delay compensation, the buffer resource occupation of the radio frequency unit is reduced, which lowers its buffer pressure.

Owner:TD TECH COMM TECH LTD

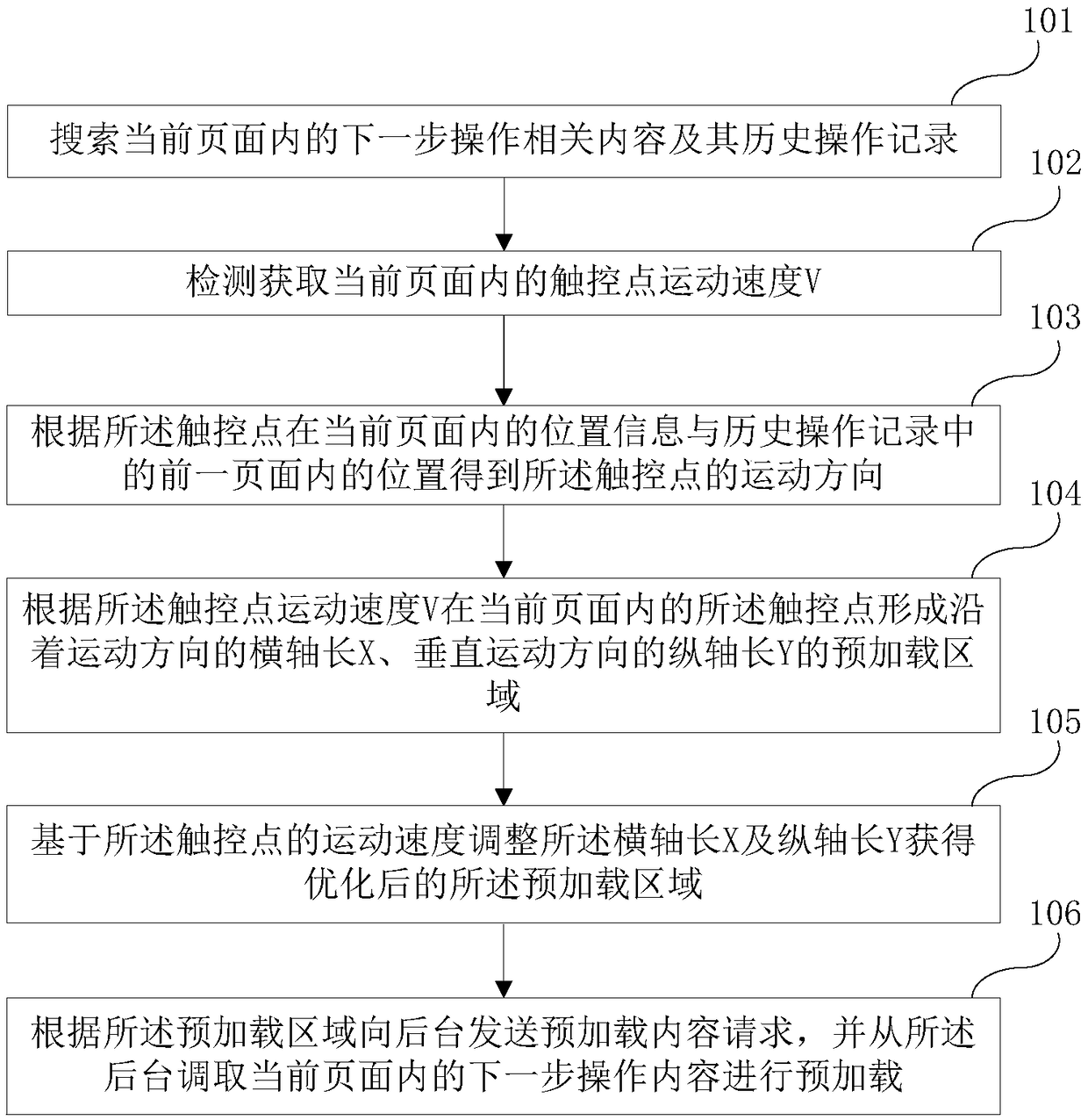

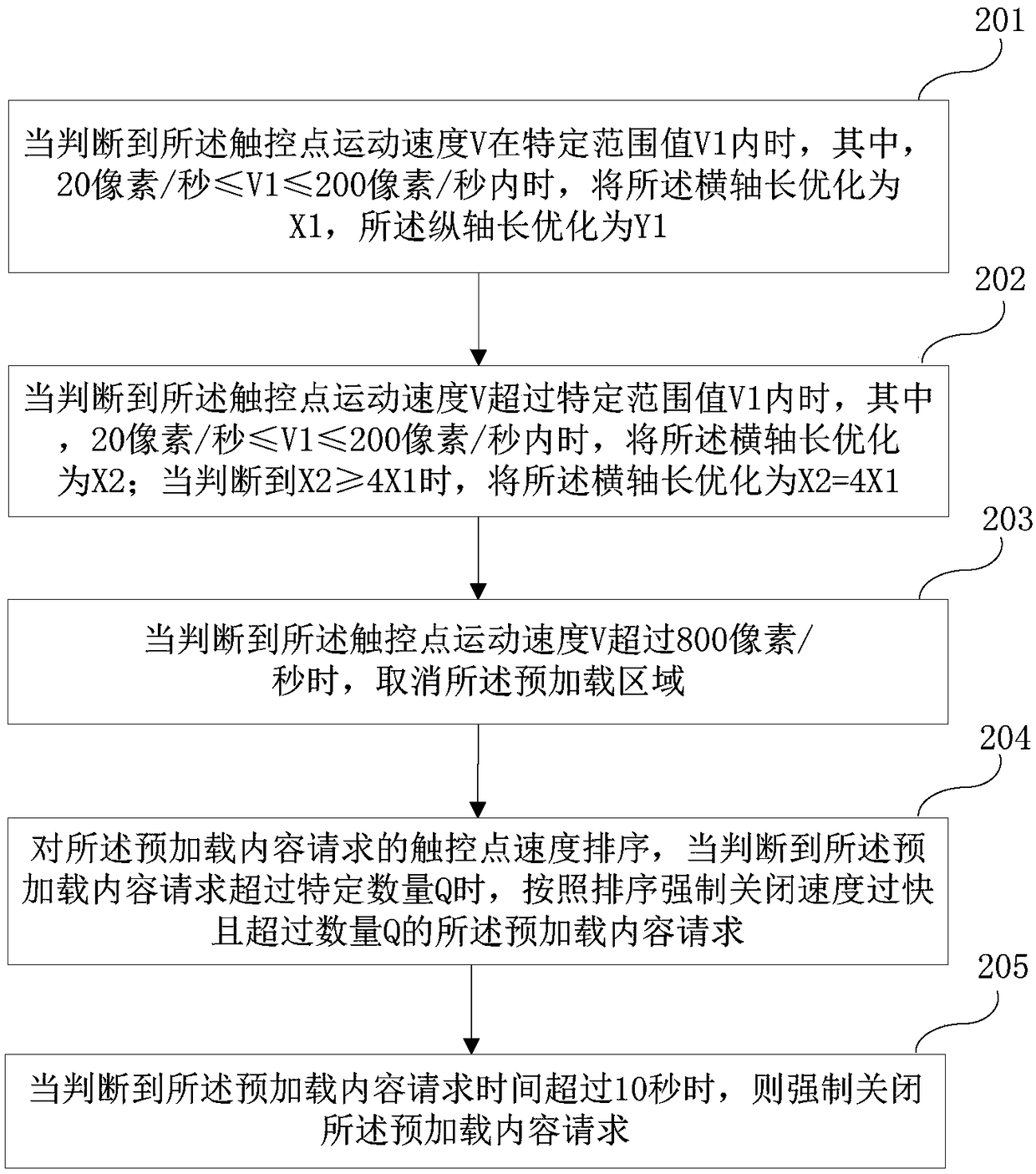

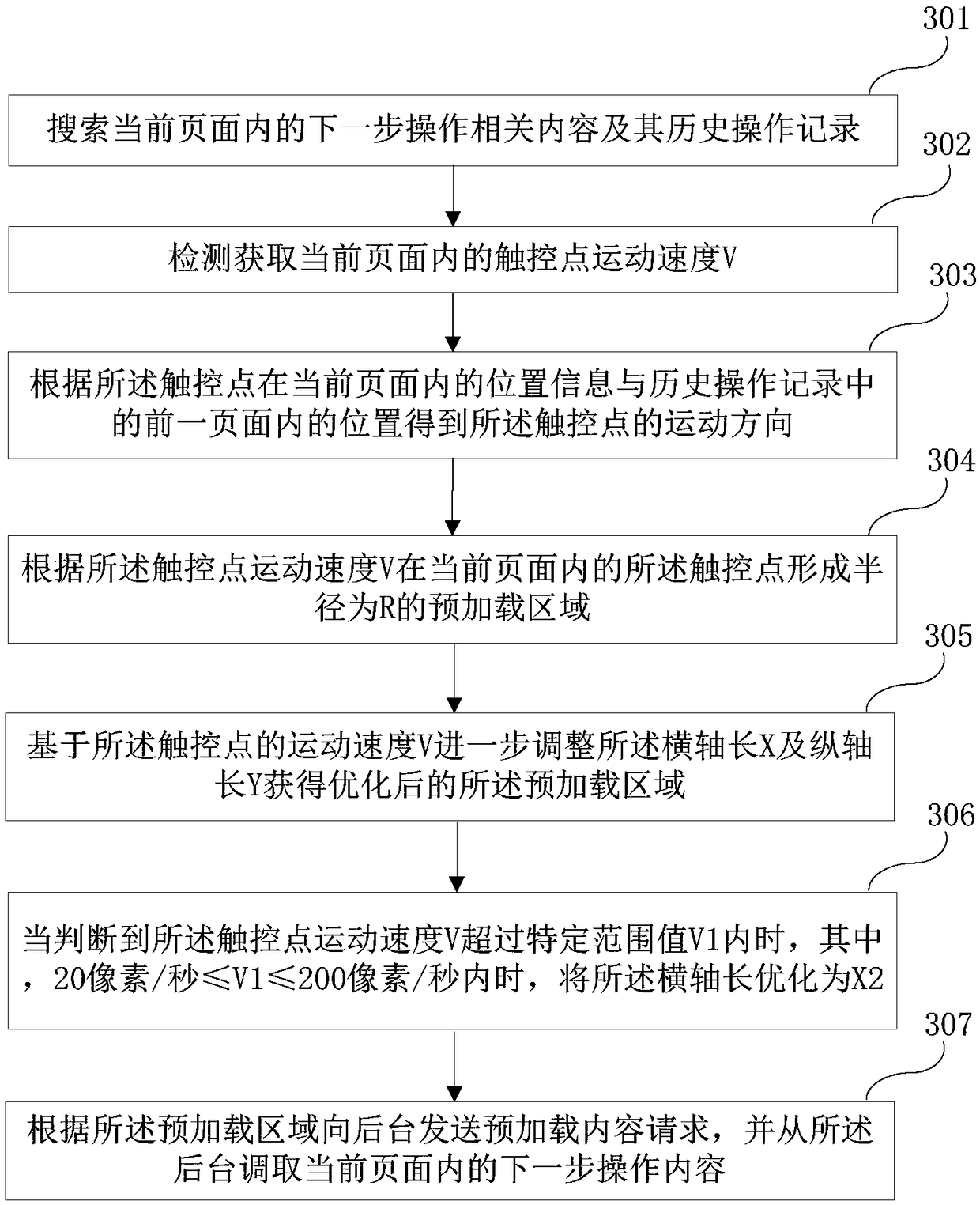

Method and system for preloading page information

ActiveCN105677327BEliminate waiting timeImprove the efficiency of preloadingExecution for user interfacesSpecial data processing applicationsHorizontal axisComputer science

The application discloses a page information preloading method and system. The method comprises: searching for content related to the next operation within the current webpage and its historical operation record; detecting and acquiring the moving velocity of a touch point within the current page; obtaining the moving direction of the touch point based on the position of the touch point within the current page and its position within the previous page in the historical operation record; based on the moving velocity of the touch point, forming at the touch point a preloading area with a horizontal axis length along the moving direction and a longitudinal axis length perpendicular to the moving direction; based on the moving velocity of the touch point, further adjusting the preloading area by optimizing the horizontal axis length and the longitudinal axis length; and, based on the preloading area, sending a preloading content request to the backend and invoking the next operation content from the backend for preloading. The method and system search the backend for information data within the trend range and preload it to the page, which eliminates the waiting time for loading information data when users perform the next page operation.

Owner:ALIBABA (CHINA) CO LTD
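
As a rough illustration of how a velocity-dependent preloading area could be derived from two touch positions, consider the Python sketch below. The abstract above does not give formulas, so the linear scaling, the default constants, and the names `PreloadArea` and `preload_area` are all assumptions.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass
class PreloadArea:
    origin: Point      # current touch point
    direction: Point   # unit vector of the moving direction
    along: float       # axis length along the moving direction
    across: float      # axis length perpendicular to the moving direction


def preload_area(prev: Point, curr: Point, speed: float,
                 base_along: float = 200.0, base_across: float = 100.0,
                 gain_along: float = 0.5, gain_across: float = 0.1
                 ) -> Optional[PreloadArea]:
    """Build a preloading area that grows with the touch point's speed.

    Returns None when the touch point has not moved, because the moving
    direction is then undefined.
    """
    dx, dy = curr[0] - prev[0], curr[1] - prev[1]
    distance = math.hypot(dx, dy)
    if distance == 0:
        return None
    direction = (dx / distance, dy / distance)
    # Assumed linear scaling: faster movement preloads further ahead and
    # slightly wider to either side.
    along = base_along + gain_along * speed
    across = base_across + gain_across * speed
    return PreloadArea(curr, direction, along, across)
```

A caller would then translate the resulting area into a content request for the backend; that step depends on the page layout and is left out here.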

A file upload method and device

The embodiment of the present invention discloses a file uploading method and device. The file uploading method includes: receiving a file uploading request sent by a client, the file uploading request including the complete file content; parsing the file uploading request to obtain data stream fragments and storing them in the cache; and, when the size of the data stream fragments stored in the cache reaches a preset threshold, scheduling a micro-thread in the process to write the data stream fragments in the cache into the storage module, repeating this step until all data stream fragments of the complete file content are written into the storage module. The embodiments of the present invention can reduce cache pressure and improve upload performance.

Owner:TENCENT TECH (SHENZHEN) CO LTD
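
The threshold-triggered flush described in the abstract above can be sketched in Python as follows. This is a simplified, synchronous illustration: the micro-thread scheduling is omitted, and the function name `upload_stream`, the default threshold, and the file-like `storage` object are assumptions.

```python
import io


def upload_stream(fragments, storage, threshold=64 * 1024):
    """Buffer parsed fragments and flush them once the threshold is reached.

    `fragments` is an iterable of bytes objects, `storage` is any object with
    a write(bytes) method. Returns the number of writes made to storage.
    """
    cache = bytearray()
    writes = 0
    for fragment in fragments:
        cache.extend(fragment)
        if len(cache) >= threshold:
            # The cache never grows far beyond the threshold, regardless of
            # how large the uploaded file is.
            storage.write(bytes(cache))
            cache.clear()
            writes += 1
    if cache:
        # Flush whatever is left after the last fragment.
        storage.write(bytes(cache))
        writes += 1
    return writes
```

For example, `upload_stream([b"ab", b"cd", b"e"], io.BytesIO(), threshold=4)` performs one write when the cache reaches four bytes and a second write for the trailing byte.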

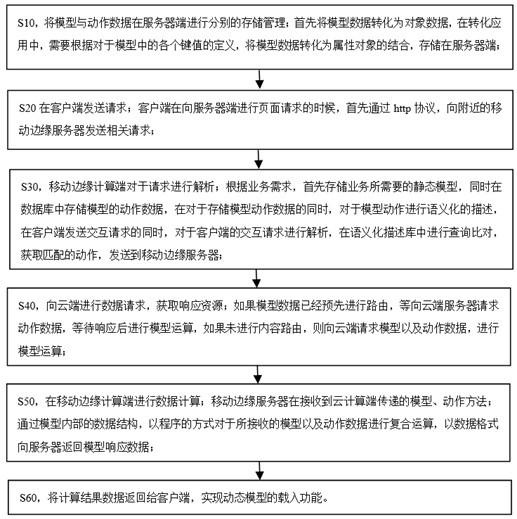

A web AR data presentation method based on attribute separation

ActiveCN109617960BImprove operational efficiencyFlexible serviceDigital data information retrievalTransmissionDynamic modelsMobile edge computing

The invention discloses a method for presenting web AR data based on attribute separation, which belongs to the technical field of computer algorithm processing and includes the following steps: S10, the model data and the action data are stored and managed separately at the server end, and the client sends a request; S20, the mobile edge computing terminal parses the request and requests data from the cloud to obtain response resources; S30, data calculation is performed on the mobile edge computing terminal and the calculation result data are returned to the client, realizing the dynamic model loading function. The method reduces the pressure on cloud computing and improves performance by applying web augmented reality invocation and rendering calculation to the model, providing the web augmented reality model service through an interface, and improving the interface operation with mobile edge computing and other technologies.

Owner:ZHEJIANG UNIVERSITY OF MEDIA AND COMMUNICATIONS

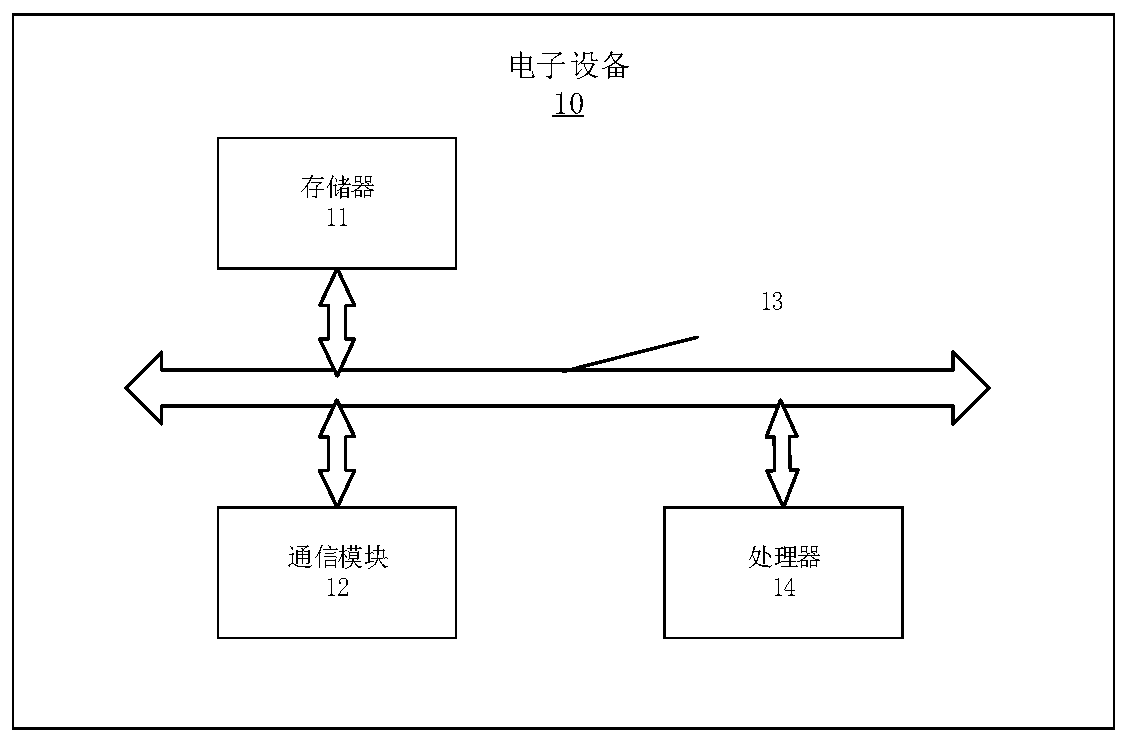

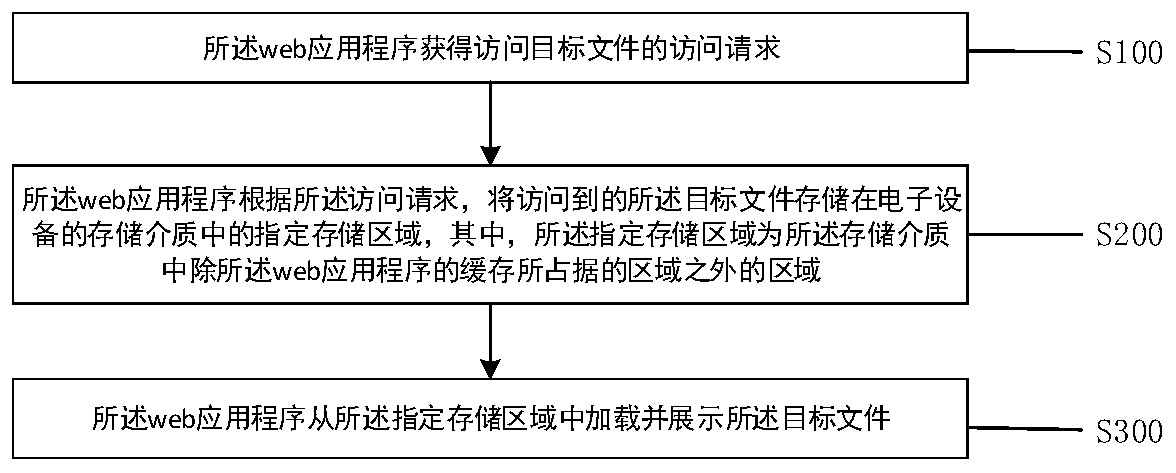

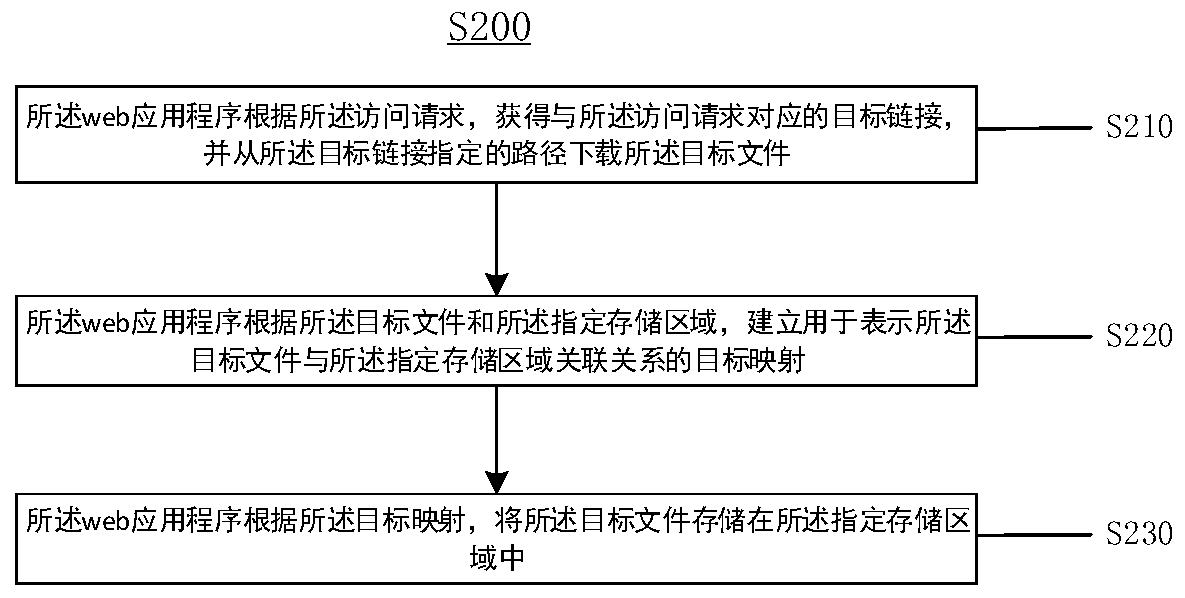

Data processing method and device

InactiveCN109710867AReduce cache pressureImprove fluencyWeb data browsing optimisationWeb applicationObject file

The invention provides a data processing method and device applied to a web application program. The method comprises the steps that the web application program obtains an access request for accessing a target file; the web application program stores the accessed target file, according to the access request, in a designated storage area in a storage medium of the electronic equipment, the designated storage area being an area of the storage medium other than the area occupied by the cache of the web application program; and the web application program loads and displays the target file from the designated storage area. Because the target file is stored outside the area occupied by the web application program's cache, the caching pressure of the web application program during operation is reduced. When a file accessed by the web application program already exists on the local disk, it can be loaded directly from the local disk, which reduces file downloads from the network, improves the operation fluency of the web application program, and improves the user experience.

Owner:GUIYANG LONGMASTER INFORMATION & TECHNOLOGY CO LTD
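
The store-then-load flow in the abstract above resembles the following Python sketch. It is not the patented implementation: the function name `load_target_file`, the `download` callback, and the use of an ordinary directory as the designated storage area are assumptions made for illustration.

```python
from pathlib import Path


def load_target_file(name, storage_dir, download):
    """Load a file from the designated storage area, downloading it if absent.

    `storage_dir` stands in for a storage area outside the application's own
    cache; `download` is a callable that takes the file name and returns bytes.
    Returns (content, downloaded), where `downloaded` says whether the network
    was used.
    """
    path = Path(storage_dir) / name
    if path.exists():
        # The file is already on the local disk, so no download is needed.
        return path.read_bytes(), False
    data = download(name)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_bytes(data)
    # Load from the designated storage area rather than from memory.
    return path.read_bytes(), True
```

Only the first access to a given file reaches the network; later accesses read the stored copy, which is where the abstract's reduction in downloads comes from.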
