Three-dimensional visual information acquisition method based on two-dimensional and three-dimensional video camera fusion

A 3D visual information acquisition method, applied in image data processing, graphics and image conversion, instruments, and related fields. It addresses problems such as missing information, limits on the accuracy and quality of two-dimensional image matching and mapping, and limits on the accuracy and reliability of three-dimensional visual information.

Examples

Embodiment 1

[0054] Based on computer vision technology, the present invention proposes a method for fusing and matching a three-dimensional camera with a two-dimensional camera, which provides high-quality two-dimensional images of a spatial scene together with the corresponding three-dimensional information.

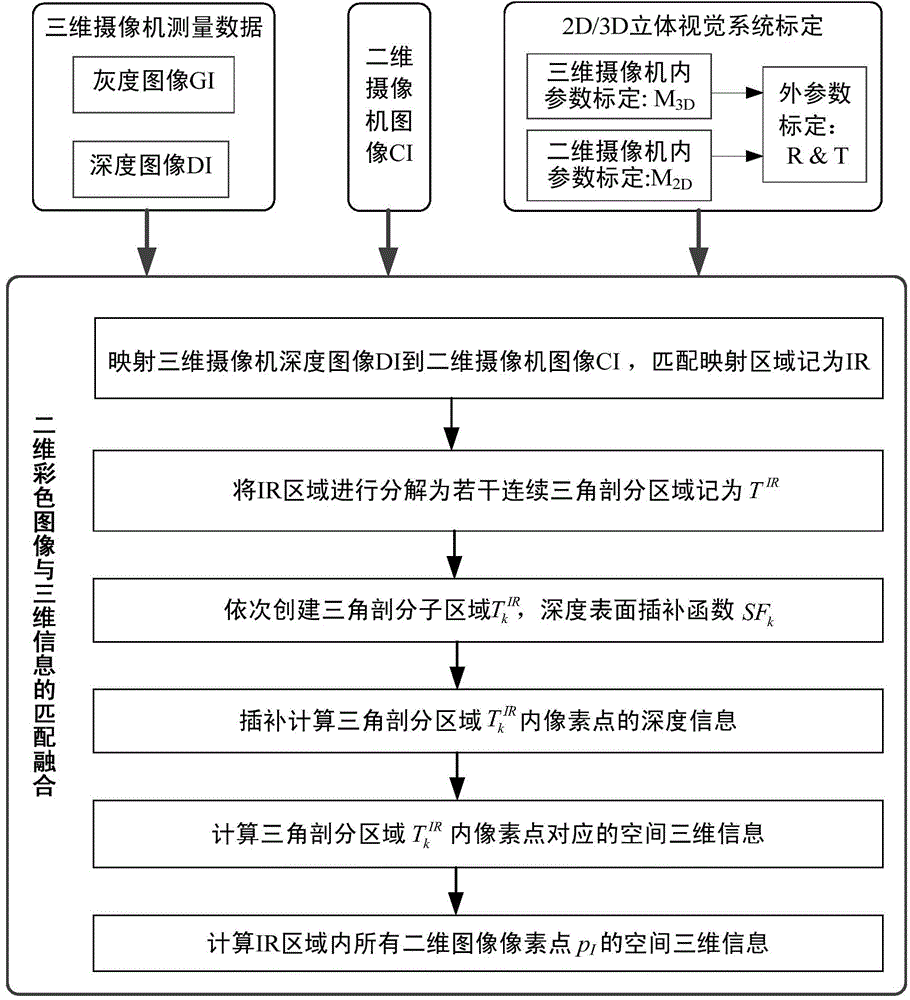

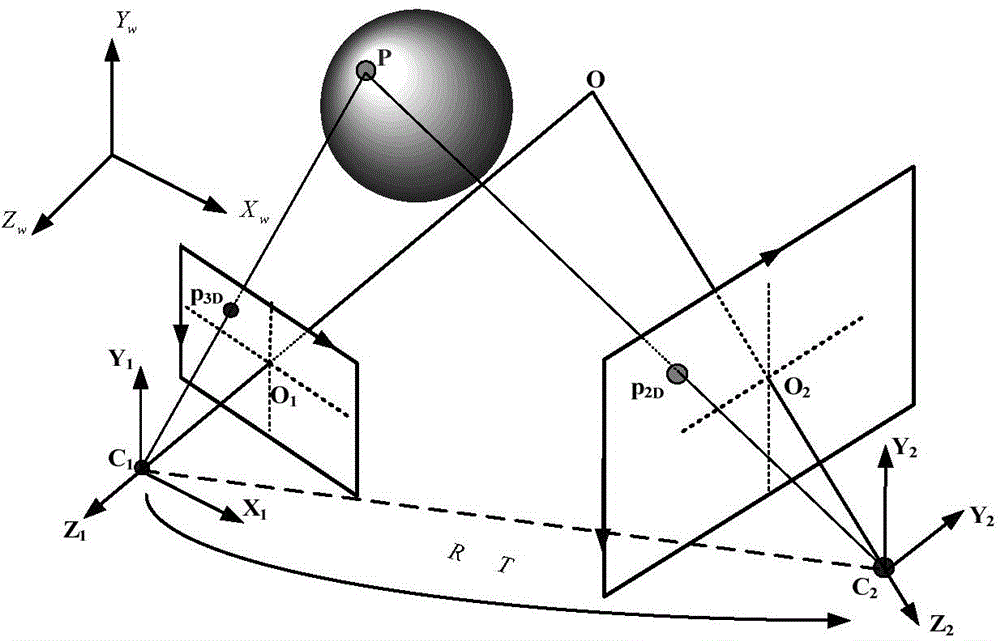

[0055] As shown in Figure 2, the present invention discloses a method for acquiring 3D visual information based on the fusion of 2D and 3D cameras. The basic flow of the method includes: 1) a composite camera built from a 2D camera and a 3D camera images the scene synchronously, and a matching mapping model between the 3D camera depth image and the 2D camera image is established, so that the pixels of the 3D camera depth image are mapped onto the 2D camera image area; 2) the mapped area of the 2D camera image is decomposed into several triangular interpolation areas with the mapping points as vertices; 3) Depth information based on vertices and adjacent vertices of each triangu...
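The flow in [0055] can be illustrated with a minimal sketch. It rests on assumptions that the visible text does not confirm: the matching mapping model is taken to be a plain pinhole back-projection followed by a rigid transform, and the depth inside a triangular interpolation area is taken to be a barycentric (linear) blend of the three vertex depths. Step 3 is truncated in the source, so the interpolation rule here is a stand-in and not necessarily the patent's rule. The names `map_depth_pixel` and `interpolate_depth` and the `(fx, fy, cx, cy)` intrinsics layout are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Intrinsics = Tuple[float, float, float, float]  # (fx, fy, cx, cy), assumed layout
Point2 = Tuple[float, float]


def map_depth_pixel(
    u: float, v: float, depth: float,
    k_depth: Intrinsics,
    rotation: Sequence[Sequence[float]],
    translation: Sequence[float],
    k_color: Intrinsics,
) -> Tuple[float, float, float]:
    """Map one depth-image pixel into the 2D camera image (assumed pinhole model).

    Returns (u', v', z'): the pixel position in the 2D camera image and the
    depth expressed in the 2D camera's coordinate frame.
    """
    fx_d, fy_d, cx_d, cy_d = k_depth
    # Back-project the depth pixel into the 3D camera's coordinate frame.
    p = ((u - cx_d) * depth / fx_d, (v - cy_d) * depth / fy_d, depth)
    # Rigid transform from the 3D camera frame into the 2D camera frame.
    xc, yc, zc = (
        sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    # Project onto the 2D camera image plane.
    fx_c, fy_c, cx_c, cy_c = k_color
    return (fx_c * xc / zc + cx_c, fy_c * yc / zc + cy_c, zc)


def interpolate_depth(
    p: Point2,
    triangle: Tuple[Point2, Point2, Point2],
    depths: Tuple[float, float, float],
) -> Optional[float]:
    """Barycentric depth at pixel p inside a triangle of mapped points.

    Returns None if the triangle is degenerate or p lies outside it.
    This linear blend is an assumption, not the rule stated in the source.
    """
    (x1, y1), (x2, y2), (x3, y3) = triangle
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    if det == 0:
        return None  # collinear vertices: no interpolation area
    w1 = ((y2 - y3) * (p[0] - x3) + (x3 - x2) * (p[1] - y3)) / det
    w2 = ((y3 - y1) * (p[0] - x3) + (x1 - x3) * (p[1] - y3)) / det
    w3 = 1.0 - w1 - w2
    if min(w1, w2, w3) < -1e-9:
        return None  # p is outside the triangle
    return w1 * depths[0] + w2 * depths[1] + w3 * depths[2]
```

In such a sketch, each mapped depth pixel becomes a triangle vertex in the 2D image, and every 2D pixel that falls inside a triangle receives a depth from its three vertices. For a triangle with vertices (0, 0), (4, 0), (0, 4) and vertex depths 1, 2, 3, the pixel (1, 1) receives depth 1.75. How the triangles are constructed from the mapped points (for example, by a Delaunay triangulation) is left open here because the source does not specify it in the visible text.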
