Vision-based autonomous landing guidance device and method for unmanned aerial vehicles

An autonomous landing guidance device, applicable in the fields of measurement devices, navigation, and surveying and mapping. It addresses two problems: detecting weak, small infrared targets at long range, and the inability to obtain the UAV's attitude, speed and acceleration.


Embodiment Construction

[0038] The present invention is further described below with reference to the embodiments and the accompanying drawings:

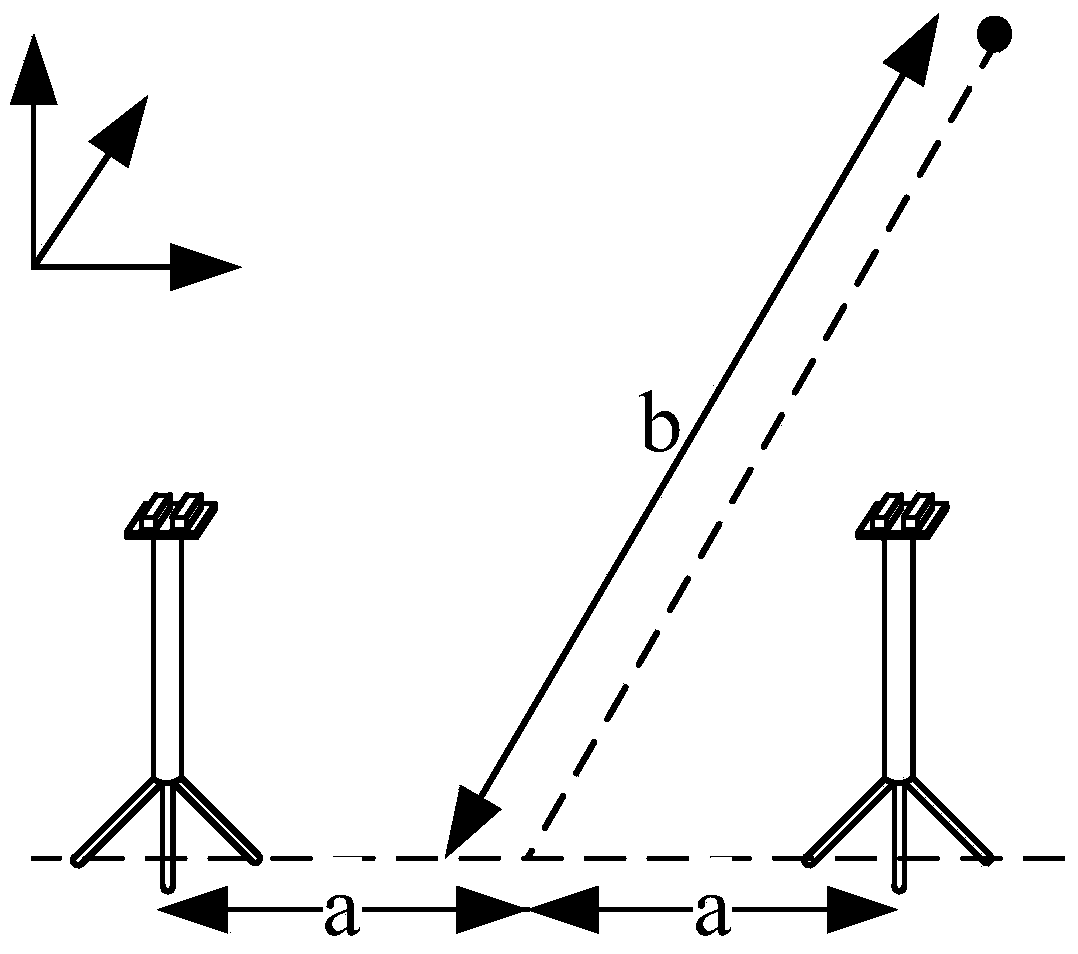

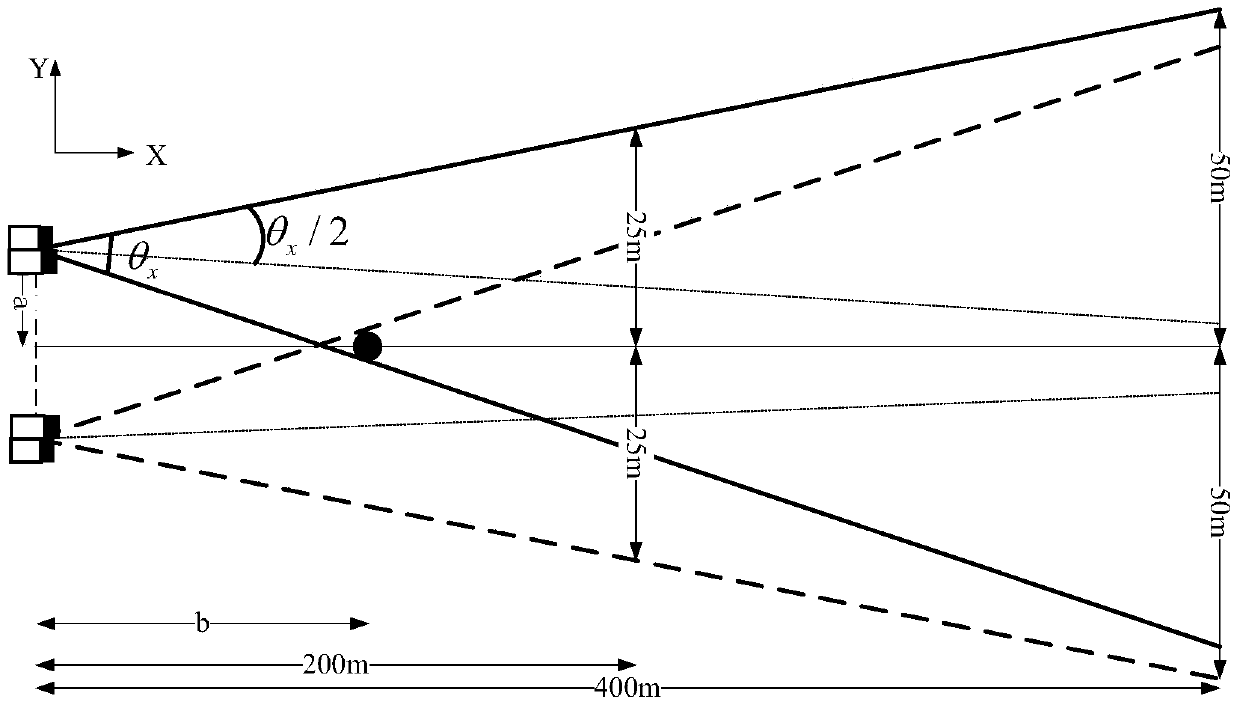

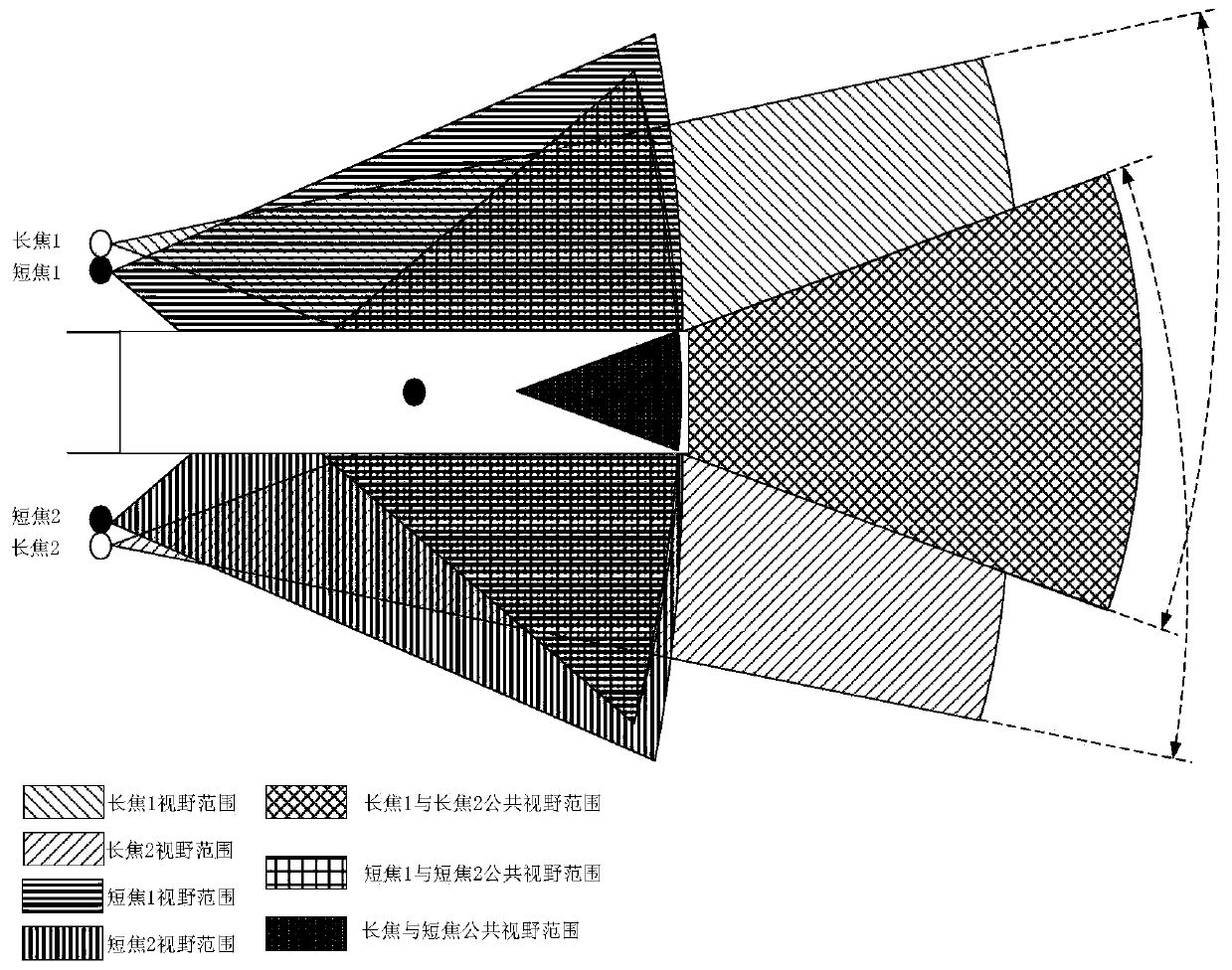

[0039] A vision-based autonomous landing guidance device for unmanned aerial vehicles (UAVs) comprises measurement cameras, a visible-light flashlight, a total station, a cooperative marker light, a tripod, a prism and a computer. There are four measurement cameras, each a PointGrey Flea3-FW-03S1C/M-C high-frame-rate measurement camera with a 1/4-inch CCD sensor, a frame rate of up to 120 Hz and a resolution of 640×480; the camera body measures 3 cm × 3 cm × 6 cm and its base 1 cm × 9 cm × 11 cm. The cameras are installed as shown in Figures 1-2. Two of the measurement cameras are fitted with 12 mm telephoto lenses for long-range detection and positioning of the airborne UAV, and the other two are fitted with 8 mm short-focus lenses for precise positioning during taxiing once the UAV has entered the runway area. The four measurement cameras are divided into two groups, each group including a long-focus measu...
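The choice between 12 mm and 8 mm lenses trades field of view against angular resolution. The sketch below works through that trade-off with a simple pinhole camera model. It assumes a nominal 1/4-inch sensor active area of 3.2 mm × 2.4 mm (a 5 µm pixel pitch at 640×480), which is a typical figure for the format rather than a value stated in the patent; the 2 m wingspan and 1 km range are hypothetical. The helper names are illustrative only. The result shows why a distant UAV covers only a few pixels, consistent with the small-target detection problem noted in the summary.

```python
import math

# Assumption: nominal 1/4-inch sensor area of 3.2 mm x 2.4 mm over 640 x 480
# pixels. Check the camera datasheet for the exact sensor dimensions.
SENSOR_W_MM = 3.2
SENSOR_H_MM = 2.4
RES_W, RES_H = 640, 480
PIXEL_PITCH_MM = SENSOR_W_MM / RES_W  # about 0.005 mm (5 um)


def field_of_view_deg(sensor_mm: float, focal_mm: float) -> float:
    """Full angular field of view of a pinhole camera along one sensor axis."""
    return math.degrees(2.0 * math.atan(sensor_mm / (2.0 * focal_mm)))


def ground_footprint_m(range_m: float, focal_mm: float) -> float:
    """Size in metres covered by one pixel at the given range (pinhole model)."""
    return range_m * PIXEL_PITCH_MM / focal_mm


def target_pixels(target_size_m: float, range_m: float, focal_mm: float) -> float:
    """Approximate number of pixels spanned by a target of the given size."""
    return target_size_m / ground_footprint_m(range_m, focal_mm)


if __name__ == "__main__":
    # Telephoto (12 mm) and short-focus (8 mm) lenses from the description.
    for focal in (12.0, 8.0):
        h = field_of_view_deg(SENSOR_W_MM, focal)
        v = field_of_view_deg(SENSOR_H_MM, focal)
        print(f"f = {focal:4.1f} mm: FOV {h:.1f} x {v:.1f} deg")
    # Hypothetical case: a 2 m wingspan UAV seen at 1 km through the 12 mm lens.
    print(f"{target_pixels(2.0, 1000.0, 12.0):.1f} pixels across")
```

Under these assumptions the 12 mm lens gives roughly a 15.2° × 11.4° field of view and the 8 mm lens roughly 22.6° × 17.1°. A 2 m target at 1 km spans only about 4.8 pixels with the 12 mm lens, which is why the long-focus cameras handle distant detection while the wider 8 mm cameras cover the runway area.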
