Data prefetching method based on location awareness in on-chip cache network

A data prefetching technique for on-chip Cache networks, applied to memory access in many-core processors. It addresses problems such as increased programming difficulty, dependence, and increased compiler complexity, with the effect of reducing conflicts, improving accuracy, and prefetching data efficiently.

Embodiment Construction

[0029] The following further describes the present invention with reference to the accompanying drawings of the specification and specific preferred embodiments, but the protection scope of the present invention is not limited thereby.

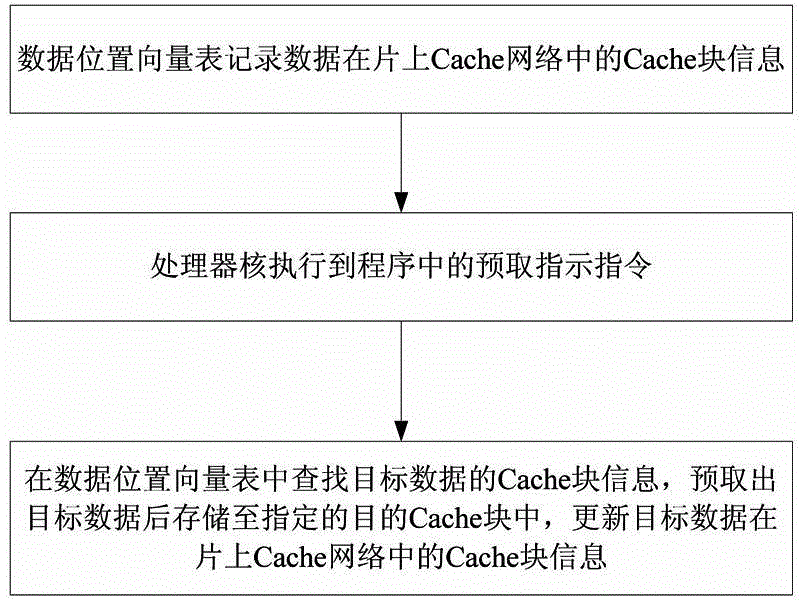

[0030] As shown in Figure 2, the location-aware data prefetching method in the on-chip Cache network in this embodiment includes the following steps:

[0031] 1) After the processor starts, a data location vector table records, for each piece of data fetched into the processor's on-chip Cache network, the Cache block information of that data in the on-chip Cache network;

[0032] 2) When a processor core executes a prefetch instruction embedded in the program, proceed to step 3);

[0033] 3) Look up, in the data location vector table, the Cache block information of the prefetch instruction's target data in the on-chip Cache network. When the target data is prefetched from the memory, it is stored in the destination Cache block specified b...
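The recording in step 1) and the lookup in step 3) can be illustrated with a minimal software sketch. It is not the patented hardware design: the class names, the `node_id` and `block_index` fields, the 64-byte block size, and the dictionary-based table are all assumptions made for illustration, and the sketch stops at the lookup because the remainder of step 3) is not available in this excerpt.

```python
from dataclasses import dataclass
from typing import Dict, Optional

BLOCK_SIZE = 64  # assumed Cache block size in bytes (not stated in the source)


@dataclass(frozen=True)
class CacheBlockInfo:
    """Hypothetical Cache block information kept per data block."""
    node_id: int      # assumed: which node of the on-chip Cache network holds the block
    block_index: int  # assumed: which Cache block inside that node


class DataLocationVectorTable:
    """Illustrative stand-in for the data location vector table."""

    def __init__(self) -> None:
        # Keyed by block number (address divided by the block size).
        self._entries: Dict[int, CacheBlockInfo] = {}

    def record(self, address: int, info: CacheBlockInfo) -> None:
        # Step 1): note where data fetched into the on-chip Cache network resides.
        self._entries[address // BLOCK_SIZE] = info

    def lookup(self, address: int) -> Optional[CacheBlockInfo]:
        # Step 3): find the Cache block information for a prefetch target, if any.
        return self._entries.get(address // BLOCK_SIZE)


table = DataLocationVectorTable()
table.record(0x1000, CacheBlockInfo(node_id=3, block_index=17))

hit = table.lookup(0x1020)   # same 64-byte block as 0x1000
miss = table.lookup(0x2000)  # never recorded
```

Here `hit` is the entry recorded for the block containing `0x1000`, while `miss` is `None` because nothing was recorded for that address.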
