Method for dynamic allocation of Map/Reduce data processing platform memory resources based on prediction

A memory resource dynamic allocation technology, applied in the field of distributed computing. It addresses problems such as tasks over-applying for memory resources, fluctuating memory resource usage, and failure to actively release memory resources, and improves both execution efficiency and memory usage efficiency.


Problems solved by technology

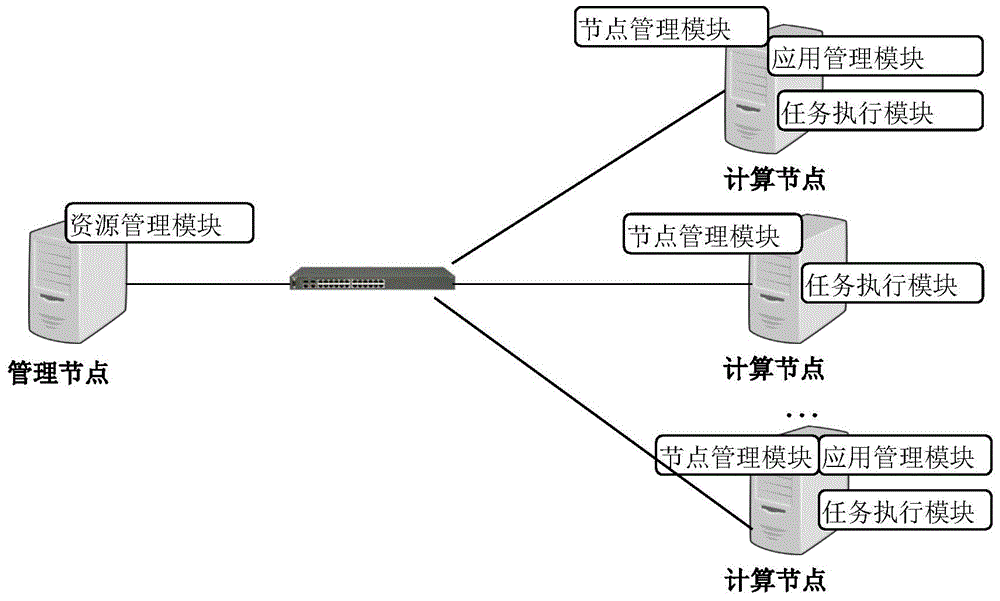

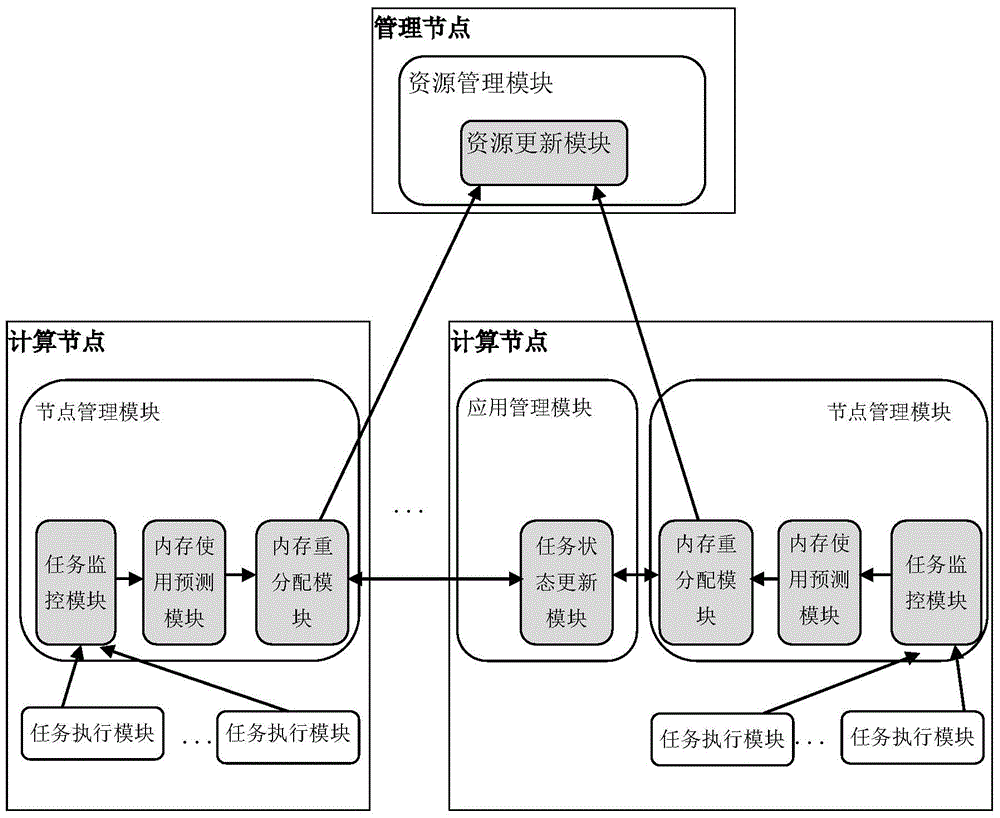

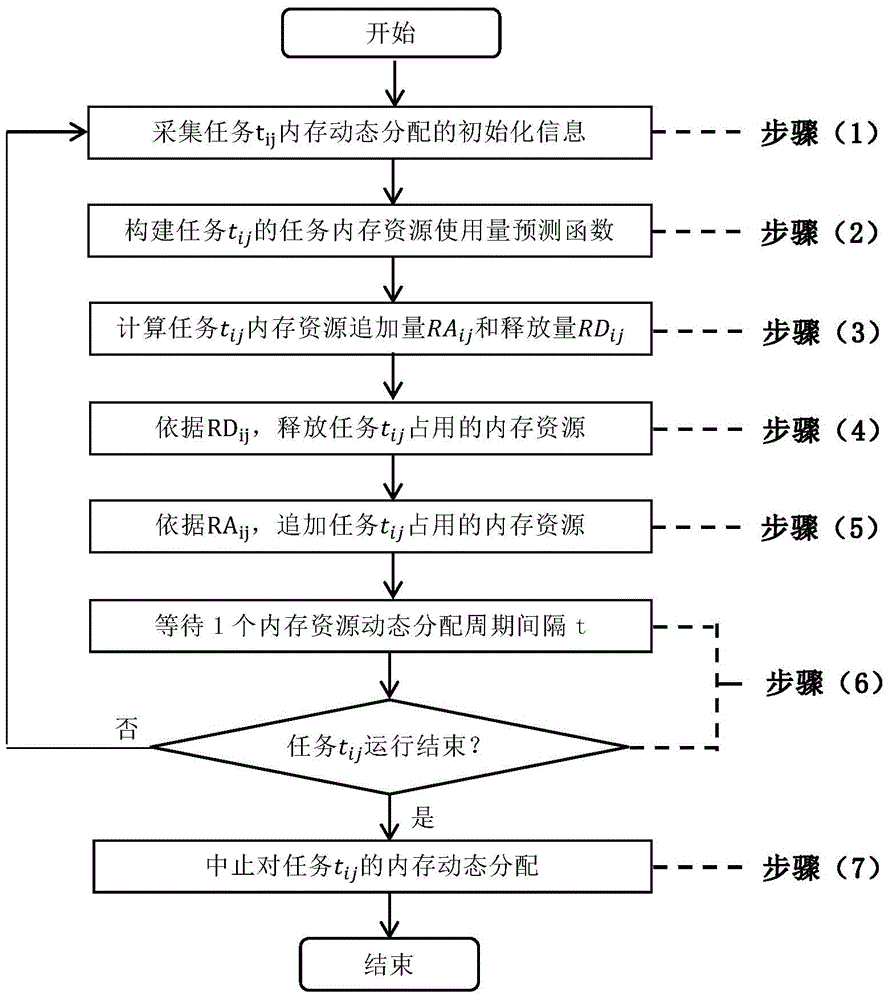


Embodiment approach

[0077] Each record in the task memory resource usage history record set contains a recording time and memory usage information. To implement the method of the present invention, the MemCollector on each computing node periodically collects the memory resource usage and running progress of the tasks running on that node, and appends the collected information to the corresponding list unit of the shared variable RunTasklist, from which the memory usage prediction module MemPredictor obtains it. The specific implementation is as follows:
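The collection step in [0077] can be sketched as a per-node collector that periodically appends a (recording time, memory usage, progress) record to each task's list unit in a shared RunTasklist. This is a minimal sketch under stated assumptions, not the patented implementation: the names `UsageRecord`, `ListUnit`, `collect_once`, `run`, `snapshot` and the injected `sampler` callable are hypothetical, progress is assumed to be a fraction between 0.0 and 1.0, and how memory is actually measured on the node is left to the sampler.

```python
import threading
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class UsageRecord:
    # One entry of a task's usage history: recording time, memory used,
    # and running progress (assumed to be a fraction in [0.0, 1.0]).
    record_time: float
    memory_bytes: int
    progress: float


@dataclass
class ListUnit:
    # Hypothetical per-task unit of the shared RunTasklist.
    task_id: str
    history: List[UsageRecord] = field(default_factory=list)


class MemCollector:
    """Sketch of a per-node collector; `sampler` is an assumed hook that
    returns (memory_bytes, progress) for a given task id."""

    def __init__(self, sampler: Callable[[str], Tuple[int, float]]) -> None:
        self.run_tasklist: Dict[str, ListUnit] = {}
        # RunTasklist is shared with the predictor, so guard it with a lock.
        self._lock = threading.Lock()
        self._sampler = sampler

    def collect_once(self, now: Optional[float] = None) -> None:
        # Sample every registered task once and append a record to its history.
        now = time.time() if now is None else now
        with self._lock:
            for unit in self.run_tasklist.values():
                mem, progress = self._sampler(unit.task_id)
                unit.history.append(UsageRecord(now, mem, progress))

    def run(self, interval_s: float, stop: threading.Event) -> None:
        # Collect every interval_s seconds until `stop` is set.
        while not stop.wait(interval_s):
            self.collect_once()

    def snapshot(self, task_id: str) -> List[UsageRecord]:
        # Copy of a task's history for a consumer such as MemPredictor.
        with self._lock:
            return list(self.run_tasklist[task_id].history)
```

In use, `run` would typically execute on a background thread while a predictor thread calls `snapshot`; the lock keeps a reader from seeing a half-updated list. Returning a copy rather than the live list is a design choice so the predictor can work on a stable history without holding the lock.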

[0078] 1) Task registration: after the TaskContainer corresponding to task $t_{ij}$ is started, it registers the task with the MemCollector of its node through an RPC call. MemCollector creates a new list unit in the shared variable RunTasklist from the registration information sent by the TaskContainer, including the task number, job number, task process number, and the IP address of the TaskUpdator corresponding to the ...
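The registration step in [0078] can be sketched as a handler on the collector that creates a new list unit keyed by task number. This is a hypothetical sketch: the class and field names are illustrative, the RPC transport is omitted (`register_task` stands in for the remote procedure a TaskContainer would call), and because the source text is truncated after the TaskUpdator IP address, the registration record may carry further fields not shown here.

```python
import threading
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class TaskRegistration:
    # Fields named in [0078]; names are hypothetical and the list may be
    # incomplete because the source text is truncated.
    task_id: str      # task number
    job_id: str       # job number
    pid: int          # task process number
    updator_ip: str   # IP address of the corresponding TaskUpdator


@dataclass
class ListUnit:
    # Hypothetical per-task unit of the shared RunTasklist; the usage
    # history starts empty and is filled by later periodic collection.
    registration: TaskRegistration
    history: List[tuple] = field(default_factory=list)


class MemCollector:
    def __init__(self) -> None:
        self.run_tasklist: Dict[str, ListUnit] = {}
        self._lock = threading.Lock()

    def register_task(self, reg: TaskRegistration) -> bool:
        # Stand-in for the RPC handler a started TaskContainer invokes.
        # Returns False (and changes nothing) if the task is already registered.
        with self._lock:
            if reg.task_id in self.run_tasklist:
                return False
            self.run_tasklist[reg.task_id] = ListUnit(reg)
            return True
```

Rejecting duplicate registrations keeps a retried RPC from wiping out a task's accumulated history; whether the original design does this is not stated in the source.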
