Target positioning and classification algorithm based on deep learning

A target positioning and classification algorithm, applied in the field of deep learning. It addresses the problems that existing approaches increase the difficulty of designing a real system, fail to achieve practical value, and cannot share different features. Its effects are good economic benefits, more efficient detection, and improved information processing.

Embodiment

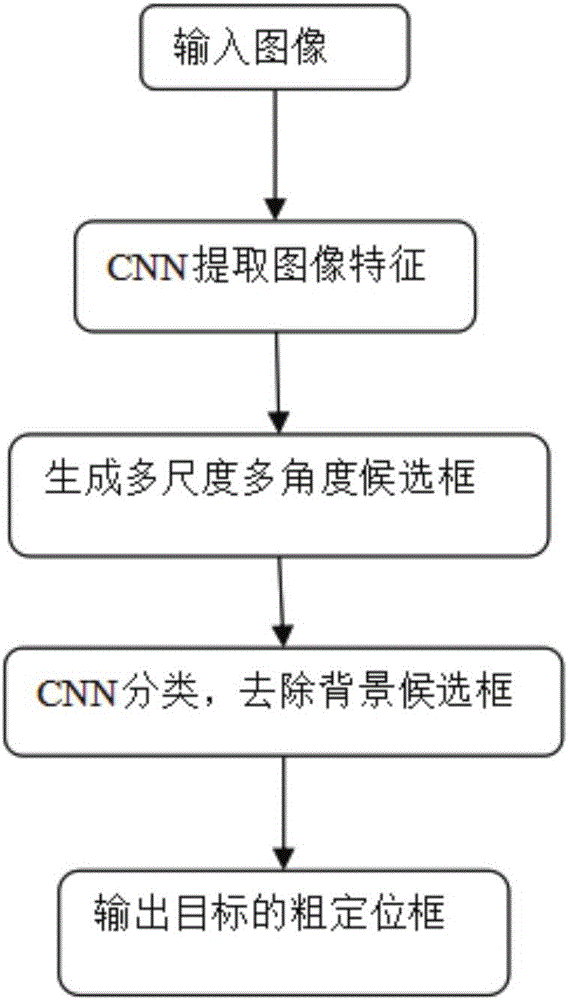

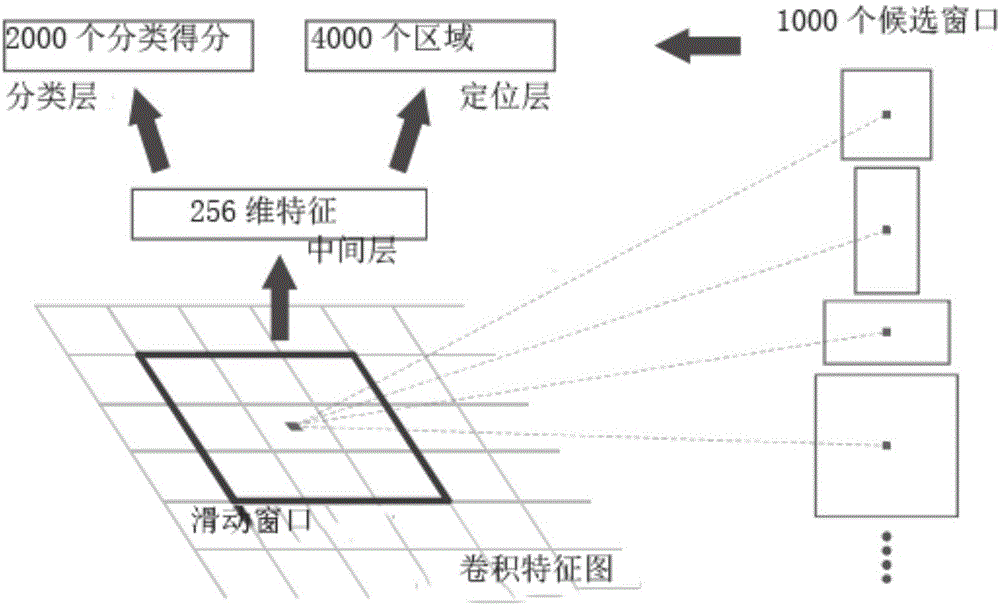

[0023] Referring to Figures 1-4, this embodiment proposes a target positioning and classification algorithm based on deep learning, comprising the following steps:

[0024] S1: Input a picture to the first network, which outputs a series of target positioning boxes and scores;

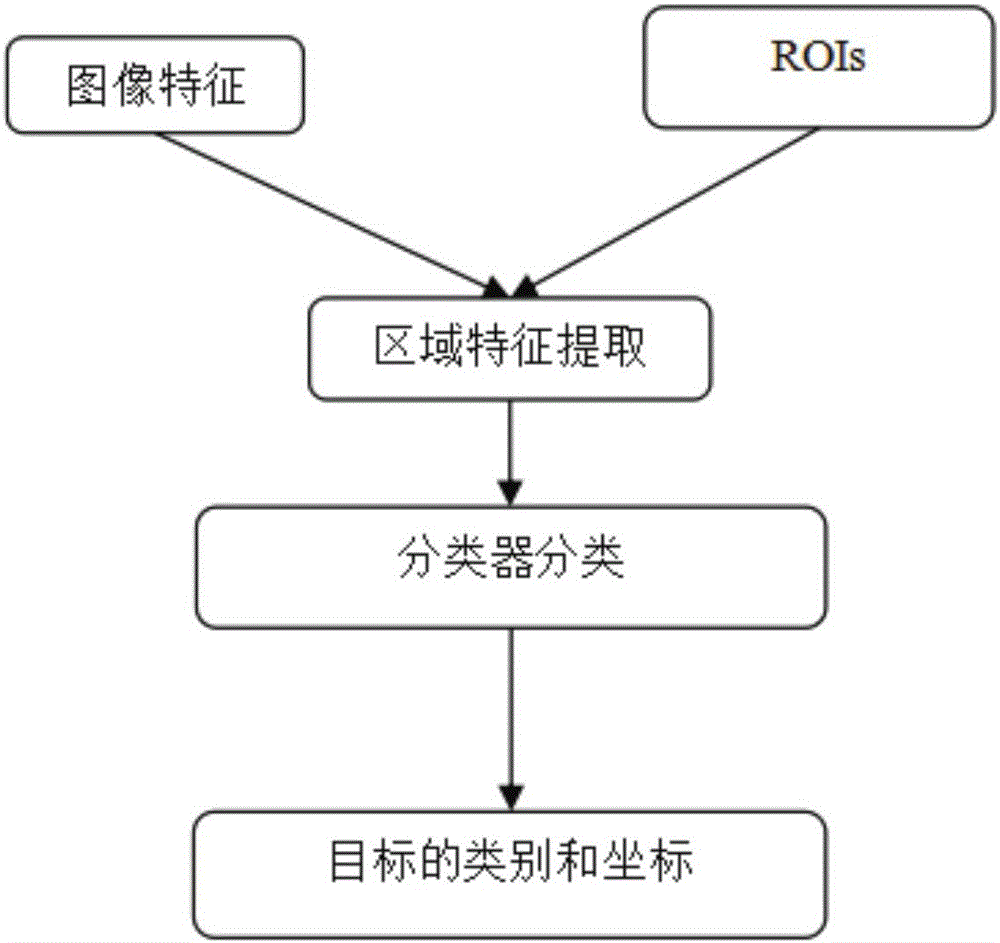

[0025] S2: Input the picture and a series of sub-windows to the second network;

[0026] S3: Run a forward pass through the network up to the last convolutional layer to generate a feature map;

[0027] S4: Use the scaling factor to transform the coordinates of each sub-window, so that the coordinates are mapped onto the feature map;

[0028] S5: Use the scaled sub-window to extract features from the feature map, and down-sample them to a fixed size;

[0029] S6: Classify the down-sampled features to obtain the classification result and score of each region;

[0030] S7: Input the target positioning boxes and the region classification results into the classifier for classification, and the output is the category and coo...
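Steps S4 and S5 can be illustrated with a minimal NumPy sketch. It is an illustration under stated assumptions, not the implementation of this embodiment: the function names `map_box_to_feature_map` and `roi_max_pool` are hypothetical, the scaling factor is assumed to be the reciprocal of the network's total stride, and max pooling is assumed as the down-sampling operation, since the text only says the features are down-sampled to a fixed size.

```python
import numpy as np


def map_box_to_feature_map(box, scale):
    """S4 (sketch): map an image-space box (x1, y1, x2, y2) onto the feature map.

    `scale` is assumed to be 1 / total stride of the convolutional layers.
    """
    x1, y1, x2, y2 = box
    # Round outward so the mapped window fully covers the original region.
    return (
        int(np.floor(x1 * scale)),
        int(np.floor(y1 * scale)),
        int(np.ceil(x2 * scale)),
        int(np.ceil(y2 * scale)),
    )


def roi_max_pool(feature_map, box, scale, out_size=(2, 2)):
    """S5 (sketch): crop the mapped window and max-pool it to a fixed size.

    feature_map has shape (channels, height, width). Max pooling is an
    assumption; the source only requires a fixed-size down-sampled output.
    """
    c, h, w = feature_map.shape
    fx1, fy1, fx2, fy2 = map_box_to_feature_map(box, scale)

    # Clip to the feature map and keep the window at least one cell wide.
    fx1 = min(max(fx1, 0), w - 1)
    fy1 = min(max(fy1, 0), h - 1)
    fx2 = min(max(fx2, fx1 + 1), w)
    fy2 = min(max(fy2, fy1 + 1), h)

    out_h, out_w = out_size
    # Bin edges that split the window into an out_h x out_w grid.
    ys = np.linspace(fy1, fy2, out_h + 1)
    xs = np.linspace(fx1, fx2, out_w + 1)

    pooled = np.empty((c, out_h, out_w), dtype=feature_map.dtype)
    for i in range(out_h):
        y0 = int(np.floor(ys[i]))
        y1 = max(int(np.ceil(ys[i + 1])), y0 + 1)
        for j in range(out_w):
            x0 = int(np.floor(xs[j]))
            x1 = max(int(np.ceil(xs[j + 1])), x0 + 1)
            # Take the maximum of each channel inside the bin.
            pooled[:, i, j] = feature_map[:, y0:y1, x0:x1].max(axis=(1, 2))
    return pooled


# Usage: a 16x16 image with an assumed stride of 4 gives a 4x4 feature map.
feature_map = np.arange(16, dtype=np.float32).reshape(1, 4, 4)
pooled = roi_max_pool(feature_map, box=(0, 0, 16, 16), scale=0.25)
print(pooled.shape)  # (1, 2, 2)
```

Because every sub-window is reduced to the same output size, the later classification steps can use layers with fixed input dimensions regardless of how large the original sub-window was.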
