Scene Recognition Method Based on Deconvolutional Deep Network Learning with Weights

A scene recognition method based on weighted deconvolutional deep network learning, applied in the field of scene recognition. It addresses limitations that hinder classification accuracy in existing approaches, such as the inability to distinguish runway scenes from road scenes.

Embodiment Construction

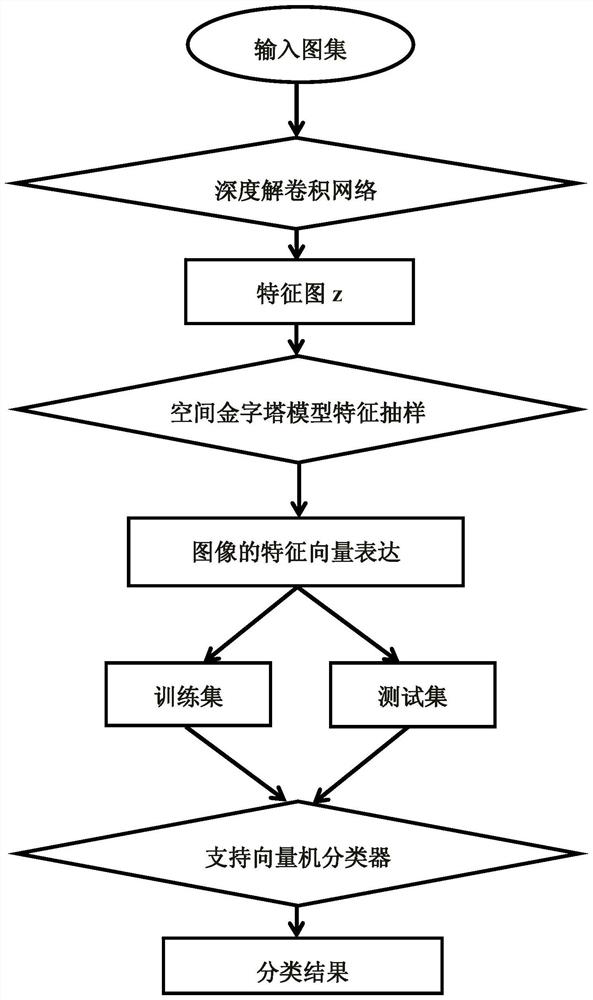

[0062] Referring to Figure 1, the present invention provides a scene recognition method based on weighted deconvolutional deep network learning, comprising the following steps:

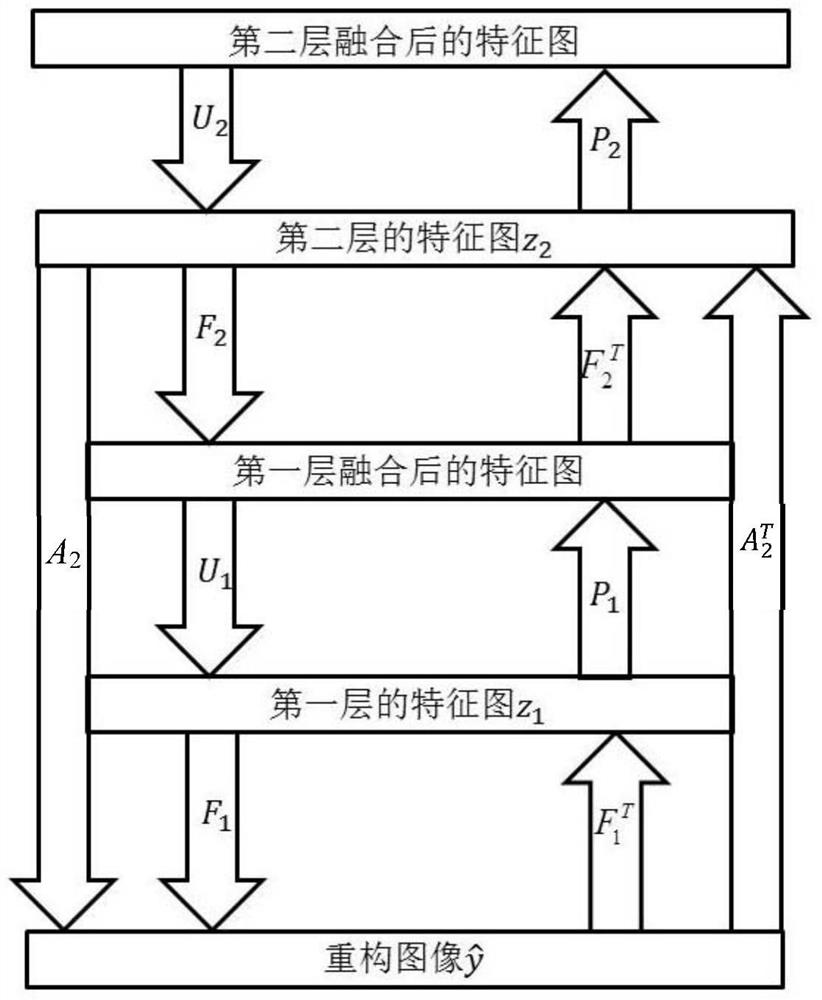

[0063] 1) Construct a weighted deconvolutional deep network model and use it to learn from the original input images, obtaining feature maps at different scales for each image;

[0064] The weighted deconvolutional deep network model is constructed as follows:

[0065]

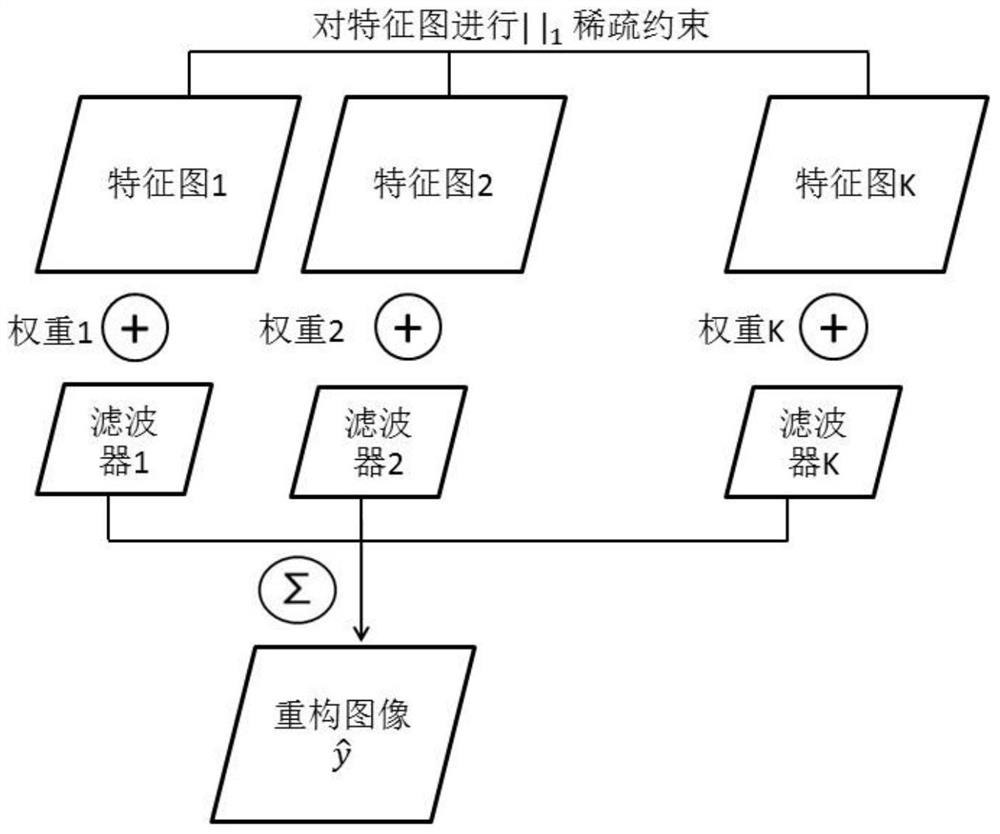

[0066] where C(l) is the objective function of the weighted deconvolutional deep network model, l is the layer index of the weighted deconvolutional deep network structure, λ_l is the regularization parameter, y is the original input image, ŷ_l is the image obtained by reconstructing downward from the feature maps of layer l, z_{k,l} is the k-th feature map of layer l, K_l is the total number of feature maps in layer l, and |·|_1 is the sparsity constraint on the feature maps;
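The objective itself appears only as an image in this text, so its exact form is not reproduced here. As a minimal sketch, assuming it follows the usual deconvolutional network cost, C(l) = (λ_l / 2) · ||ŷ_l − y||² + Σ_k |z_{k,l}|_1 with k running from 1 to K_l, the symbols defined above could be combined as shown below. The function names, the list-of-lists image representation, and the absence of any additional weighting term are all assumptions made for illustration; the patent's weighted formulation may differ.

```python
from typing import List

# Hypothetical representation: a 2-D image or feature map as nested lists.
Image = List[List[float]]


def l1_norm(feature_map: Image) -> float:
    """Sum of absolute values, i.e. the sparsity term |z|_1 for one map."""
    return sum(abs(v) for row in feature_map for v in row)


def squared_l2_distance(a: Image, b: Image) -> float:
    """Squared Euclidean distance between two images of equal shape."""
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def layer_objective(y: Image, y_hat_l: Image,
                    feature_maps_l: List[Image], lambda_l: float) -> float:
    """Assumed form of C(l): reconstruction error plus L1 sparsity.

    y              -- original input image
    y_hat_l        -- reconstruction of y from the layer-l feature maps
    feature_maps_l -- the K_l feature maps z_{k,l} of layer l
    lambda_l       -- regularization parameter for layer l
    """
    reconstruction = 0.5 * lambda_l * squared_l2_distance(y_hat_l, y)
    sparsity = sum(l1_norm(z) for z in feature_maps_l)
    return reconstruction + sparsity
```

In this sketch a larger λ_l puts more emphasis on reconstructing y faithfully, while a smaller λ_l favors sparser feature maps.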

[0067] As shown in Figure 2, for the ...
