Food image segmentation method and system based on dynamic transformer

An image segmentation and food technology, applied in the fields of computer vision and food computing, that addresses problems such as the long-tailed and unbalanced distribution of food datasets. It aims to improve segmentation precision and accuracy, alleviate untargetedness, and improve richness and overall effect.

Examples

Embodiment 1

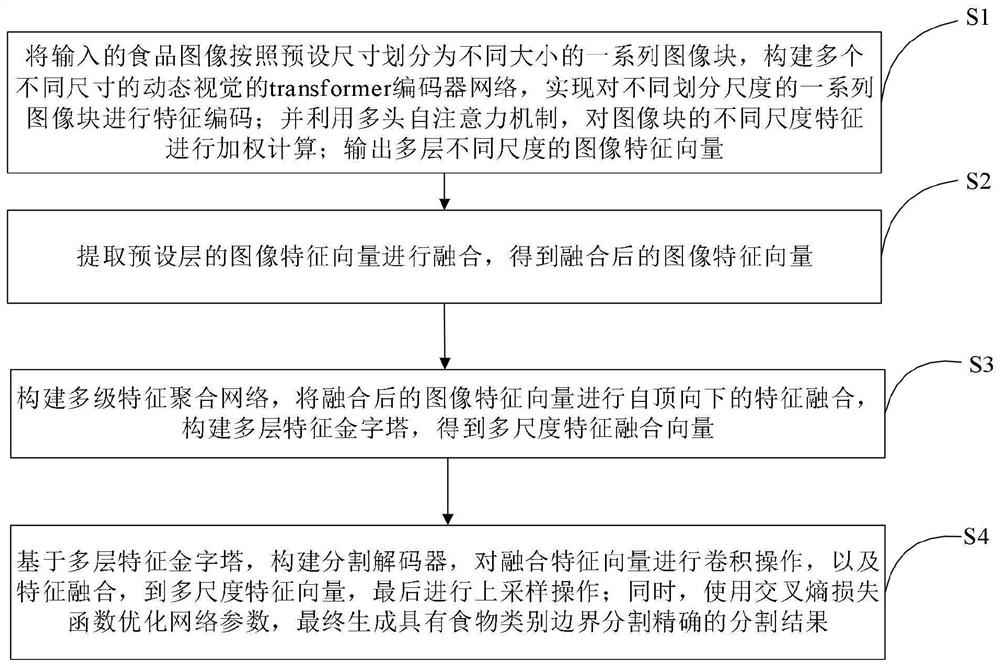

[0020] As shown in Figure 1, a dynamic transformer-based food image segmentation method provided by an embodiment of the present invention includes the following steps:

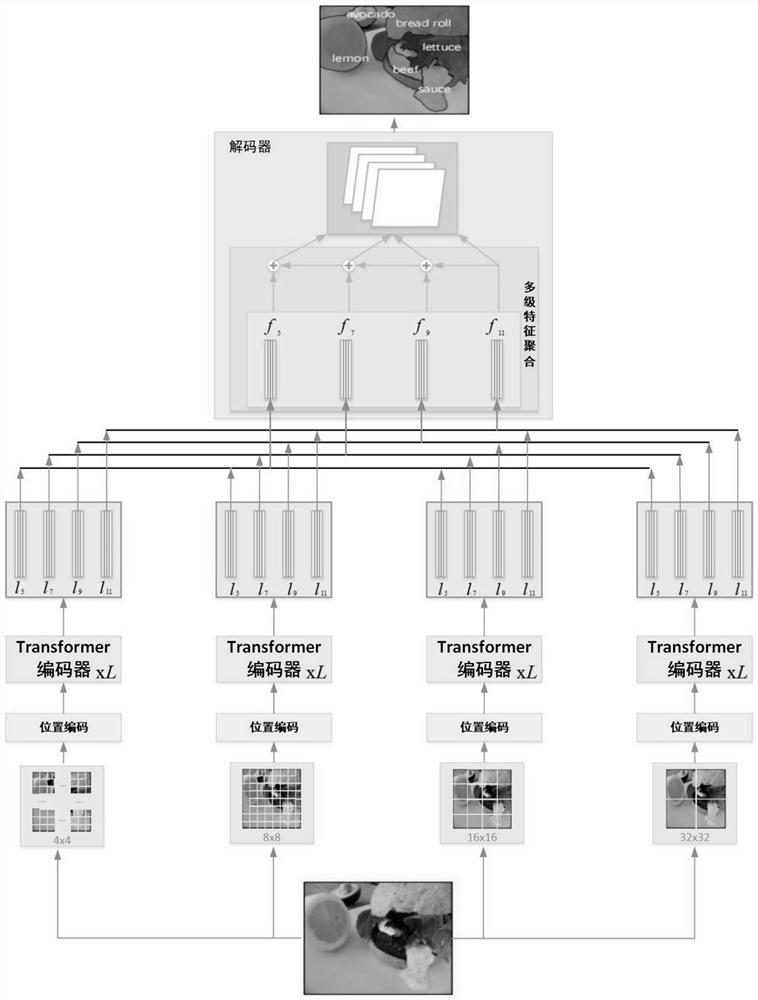

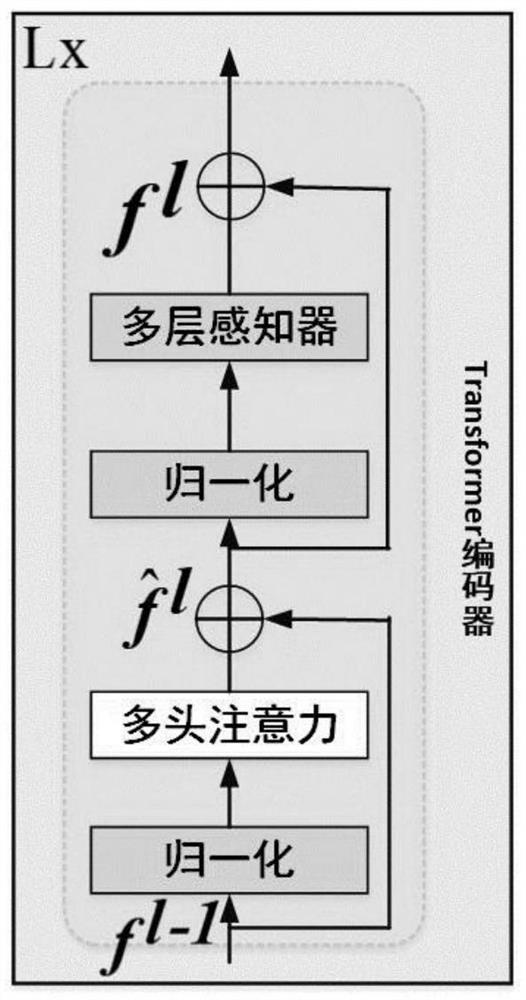

[0021] Step S1: Divide the input food image into a series of image blocks of different sizes according to preset sizes; construct a plurality of dynamic vision transformer encoder networks of different sizes to encode features for the image blocks at each division scale; use the multi-head self-attention mechanism to weight the features of the image blocks at different scales; and output multi-layer image feature vectors of different scales;

[0022] Step S2: Extract the image feature vectors of the preset layers and fuse them to obtain a fused image feature vector;

[0023] Step S3: Construct a multi-level feature aggregation network, perform top-down feature fusion on the fused image feature vector, construct a multi-layer feature pyramid, and obtain a multi-scale featu...
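The block division and attention weighting in Step S1 can be illustrated with a small, dependency-free sketch. This is not the patented encoder: the patch sizes, the toy 8 x 8 image, the function names, and the use of a single attention head with identity projections (instead of learned multi-head projections) are all assumptions made for illustration.

```python
import math

def split_into_patches(image, patch_size):
    """Split an H x W image (list of rows) into non-overlapping square patches.

    Each patch is flattened to a list of patch_size * patch_size values.
    Rows and columns that do not fill a whole patch are dropped (assumption).
    """
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height - patch_size + 1, patch_size):
        for left in range(0, width - patch_size + 1, patch_size):
            patches.append([image[top + dy][left + dx]
                            for dy in range(patch_size)
                            for dx in range(patch_size)])
    return patches

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head scaled dot-product self-attention.

    Simplification: queries, keys, and values are the tokens themselves
    (identity projections); a real encoder would learn these projections
    and use several heads.
    """
    dim = len(tokens[0])
    scale = math.sqrt(dim)
    outputs = []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / scale
                  for key in tokens]
        weights = softmax(scores)
        outputs.append([sum(w * value[i] for w, value in zip(weights, tokens))
                        for i in range(dim)])
    return outputs

# Toy single-channel 8 x 8 "image" (hypothetical data).
image = [[(r * 8 + c) / 64.0 for c in range(8)] for r in range(8)]

# Hypothetical preset patch sizes, one token sequence per scale.
patch_sizes = (2, 4)
features_by_scale = {
    size: self_attention(split_into_patches(image, size))
    for size in patch_sizes
}
```

Each entry of `features_by_scale` holds one attention-weighted token per image block, so smaller patch sizes give more, shorter tokens and larger patch sizes give fewer, longer ones.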

Embodiment 2

[0050] As shown in Figure 4, an embodiment of the present invention provides a dynamic transformer-based food image segmentation system, including the following modules:

[0051] The image feature vector acquisition module 51 is used to divide the input food image into a series of image blocks of different sizes according to preset sizes; construct a plurality of dynamic vision transformer encoder networks of different sizes to encode features for the image blocks at each division scale; use the multi-head self-attention mechanism to weight the features of the image blocks at different scales; and output multi-layer image feature vectors of different scales;

[0052] The image feature vector fusion module 52 is used to extract the image feature vectors of the preset layers and fuse them to obtain the fused image feature vector;

[0053] The multi-layer feature pyramid construction module 53 is used to construct a multi-level feature ag...
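As a rough illustration of what modules 52 and 53 are described as doing, the following sketch fuses a chosen set of layer outputs and then builds a small top-down pyramid from the fused map. The source does not specify the fusion operator or how the pyramid levels are produced, so the element-wise mean, 2 x 2 average pooling, nearest-neighbour upsampling, and all function names here are assumptions.

```python
def fuse_layers(layer_maps, preset_layers):
    """Fuse selected layers by element-wise mean (assumed operator)."""
    chosen = [layer_maps[i] for i in preset_layers]
    rows, cols = len(chosen[0]), len(chosen[0][0])
    return [[sum(m[r][c] for m in chosen) / len(chosen) for c in range(cols)]
            for r in range(rows)]

def downsample2x(fmap):
    """2 x 2 average pooling; assumes even height and width."""
    return [[(fmap[r][c] + fmap[r][c + 1]
              + fmap[r + 1][c] + fmap[r + 1][c + 1]) / 4.0
             for c in range(0, len(fmap[0]), 2)]
            for r in range(0, len(fmap), 2)]

def upsample2x(fmap):
    """Nearest-neighbour upsampling by a factor of 2."""
    return [[fmap[r // 2][c // 2] for c in range(2 * len(fmap[0]))]
            for r in range(2 * len(fmap))]

def top_down_pyramid(fused, levels):
    """Build a pyramid, then merge it top-down (coarse to fine).

    Returns the merged levels ordered from finest to coarsest.
    """
    # Progressively coarser copies of the fused map.
    bottom_up = [fused]
    for _ in range(levels - 1):
        bottom_up.append(downsample2x(bottom_up[-1]))

    # Start from the coarsest level and add its upsampled output
    # to each finer level in turn.
    pyramid = [bottom_up[-1]]
    for finer in reversed(bottom_up[:-1]):
        up = upsample2x(pyramid[0])
        merged = [[a + b for a, b in zip(row_f, row_u)]
                  for row_f, row_u in zip(finer, up)]
        pyramid.insert(0, merged)
    return pyramid

# Hypothetical encoder outputs: four 4 x 4 maps with constant values 0..3.
layer_maps = [[[float(i)] * 4 for _ in range(4)] for i in range(4)]
preset_layers = (1, 3)  # hypothetical choice of layers to fuse

fused = fuse_layers(layer_maps, preset_layers)
pyramid = top_down_pyramid(fused, levels=3)
```

With these toy inputs the pyramid has three levels of size 4 x 4, 2 x 2, and 1 x 1, and each finer level carries information added from every coarser level above it.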
