Method and device for interaction between VR/AR system and user

A method and device for interaction between a VR/AR system and a user, applied in the field of VR/AR system and user interaction. It addresses the problems of low interaction efficiency, long interaction time, and cumbersome interaction steps, with the effects of reducing costs, reducing interaction steps and data, and improving interaction efficiency.

Examples

Embodiment 1

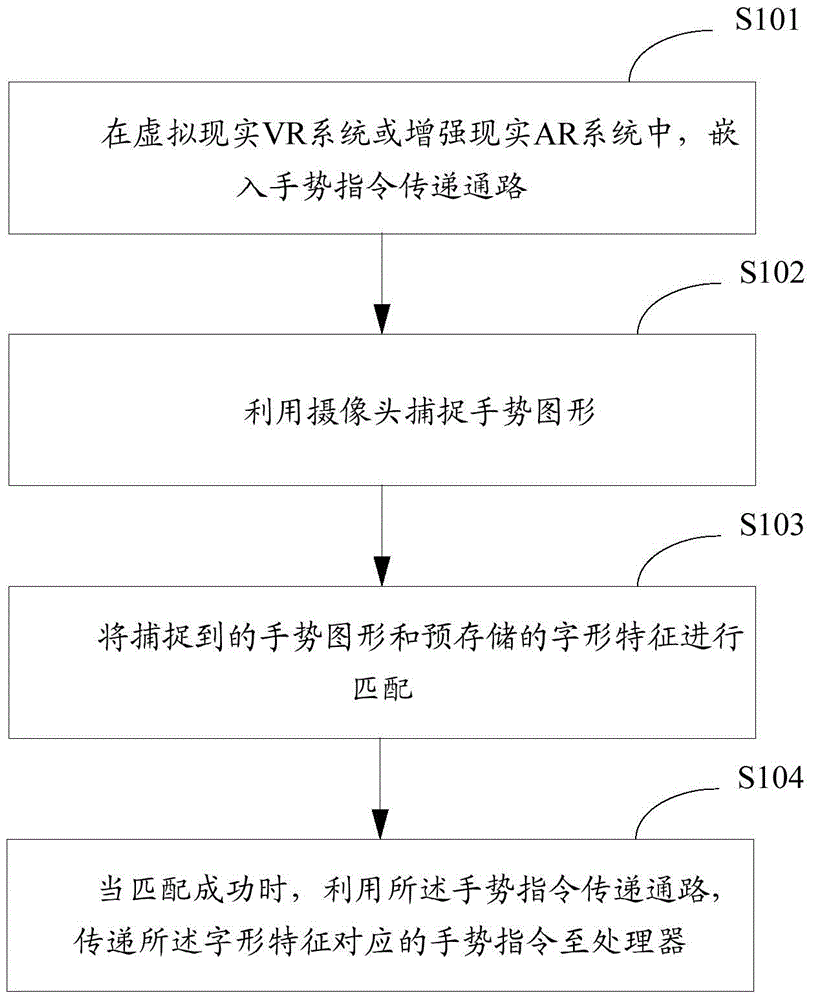

[0027] Figure 1 is an implementation flowchart of a method for interaction between a VR/AR system and a user provided by an embodiment of the present invention, described in detail as follows:

[0028] In step S101, a gesture command transmission channel is embedded in a virtual reality (VR) system or an augmented reality (AR) system;

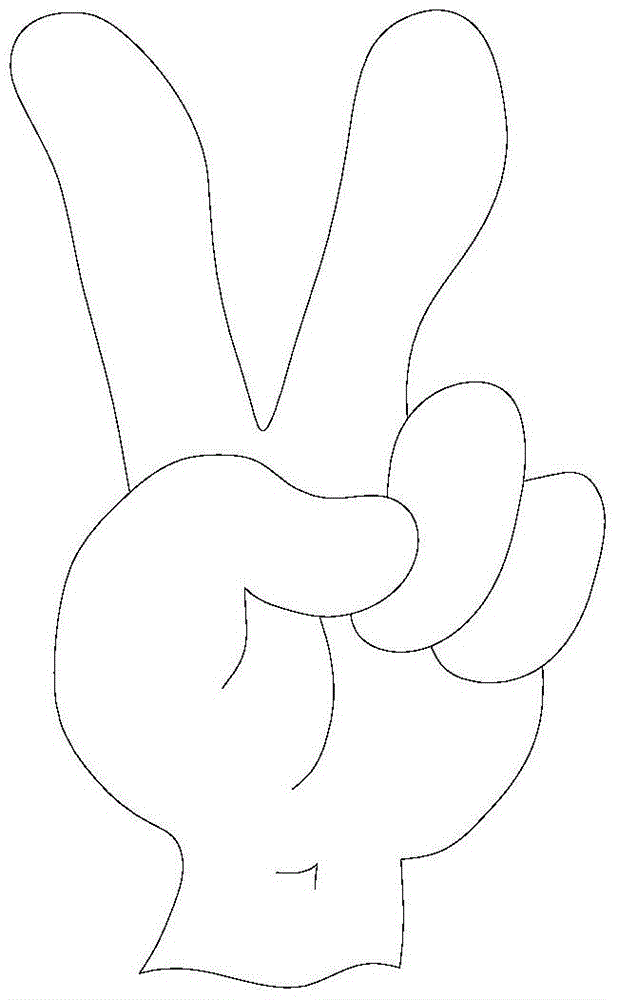

[0029] In step S102, a camera is used to capture a gesture graphic;

[0030] In step S103, the captured gesture graphic is matched against pre-stored glyph features;

[0031] Step S103 specifically comprises:

[0032] analyzing the captured gesture graphic to obtain gesture features; and

[0033] matching the gesture features against the pre-stored glyph features.
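The two sub-steps of S103 can be illustrated with a minimal Python sketch. The source does not say how gesture features are computed or compared, so everything here is an assumption: the gesture graphic is taken to be a 2D fingertip trajectory (a list of points), features are obtained by resampling the stroke to a fixed number of evenly spaced points and normalizing position and scale (a simplified form of template-matching recognizers such as the $1 unistroke recognizer, without rotation handling), and a match is the nearest template whose mean point distance falls under a threshold. The names `extract_features`, `match`, `TEMPLATES`, `N_POINTS` and `THRESHOLD` are hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]

N_POINTS = 32      # hypothetical resampling resolution
THRESHOLD = 0.15   # hypothetical maximum mean distance for a successful match

# Hypothetical pre-stored templates (screen coordinates, y grows downward).
TEMPLATES: Dict[str, List[Point]] = {
    "V": [(0, 0), (1, 2), (2, 0)],
    "INVERTED_V": [(0, 2), (1, 0), (2, 2)],
}


def path_length(points: List[Point]) -> float:
    return sum(math.dist(points[i - 1], points[i]) for i in range(1, len(points)))


def resample(points: List[Point], n: int = N_POINTS) -> List[Point]:
    """Resample a stroke to n points spaced evenly along its length."""
    interval = path_length(points) / (n - 1)
    pts = list(points)
    out = [pts[0]]
    acc = 0.0
    i = 1
    while i < len(pts):
        d = math.dist(pts[i - 1], pts[i])
        if d > 0 and acc + d >= interval:
            t = (interval - acc) / d
            q = (pts[i - 1][0] + t * (pts[i][0] - pts[i - 1][0]),
                 pts[i - 1][1] + t * (pts[i][1] - pts[i - 1][1]))
            out.append(q)
            pts.insert(i, q)  # q becomes the start of the next segment
            acc = 0.0
        else:
            acc += d
        i += 1
    while len(out) < n:  # floating-point rounding can leave the last point missing
        out.append(pts[-1])
    return out[:n]


def normalize(points: List[Point]) -> List[Point]:
    """Move the centroid to the origin and scale so the larger bounding-box side is 1."""
    cx = sum(p[0] for p in points) / len(points)
    cy = sum(p[1] for p in points) / len(points)
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    size = max(max(xs) - min(xs), max(ys) - min(ys)) or 1.0  # uniform scale keeps a "1" stroke valid
    return [((x - cx) / size, (y - cy) / size) for x, y in points]


def extract_features(stroke: List[Point]) -> List[Point]:
    """Sub-step [0032]: turn a raw gesture trajectory into a comparable feature sequence."""
    return normalize(resample(stroke))


def mean_distance(a: List[Point], b: List[Point]) -> float:
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def match(stroke: List[Point], templates: Dict[str, List[Point]],
          threshold: float = THRESHOLD) -> Optional[str]:
    """Sub-step [0033]: return the closest template name, or None if none is close enough."""
    features = extract_features(stroke)
    best_name, best_dist = None, float("inf")
    for name, template in templates.items():
        d = mean_distance(features, extract_features(template))
        if d < best_dist:
            best_name, best_dist = name, d
    return best_name if best_dist <= threshold else None
```

For example, `match([(10, 10), (15, 20), (20, 10)], TEMPLATES)` returns `"V"` because the stroke is a scaled and shifted copy of the V template, while a straight vertical stroke matches neither template and returns `None`.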

[0034] In step S104, when the matching is successful, the gesture command corresponding to the matched glyph feature is transmitted to the processor through the gesture command transmission channel.
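Step S104 can be sketched as below, assuming (this is not stated in the source) that the embedded gesture command transmission channel behaves like a thread-safe FIFO queue between the recognizer and the processor. The glyph names, the command strings in `GESTURE_COMMANDS`, the class `GestureCommandChannel` and the functions `on_match` and `run_processor` are hypothetical; the source does not list which command each glyph triggers.

```python
import queue
from typing import Dict, List, Optional

# Hypothetical glyph -> command table; the actual commands are not given in the source.
GESTURE_COMMANDS: Dict[str, str] = {
    "V": "confirm",
    "INVERTED_V": "cancel",
    "C": "return",
}


class GestureCommandChannel:
    """Dedicated path from the gesture recognizer to the processor (assumed FIFO)."""

    def __init__(self) -> None:
        self._queue: "queue.Queue[str]" = queue.Queue()

    def send(self, command: str) -> None:
        self._queue.put(command)

    def receive(self, timeout: Optional[float] = None) -> str:
        # Raises queue.Empty if no command arrives before the timeout.
        return self._queue.get(timeout=timeout)


def on_match(glyph: Optional[str], channel: GestureCommandChannel) -> bool:
    """Forward the command only when matching succeeded and a command is mapped."""
    if glyph is None or glyph not in GESTURE_COMMANDS:
        return False
    channel.send(GESTURE_COMMANDS[glyph])
    return True


def run_processor(channel: GestureCommandChannel, count: int) -> List[str]:
    """Hypothetical processor side: consume `count` commands in arrival order."""
    return [channel.receive(timeout=1.0) for _ in range(count)]
```

Using `queue.Queue` lets the recognizer and the processor run on different threads without extra locking; a failed match (`None`) or an unmapped glyph simply sends nothing.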

[0035] Step S104 specifically comprises:

[0036] When the matching is successful, acquire t...

Embodiment 2

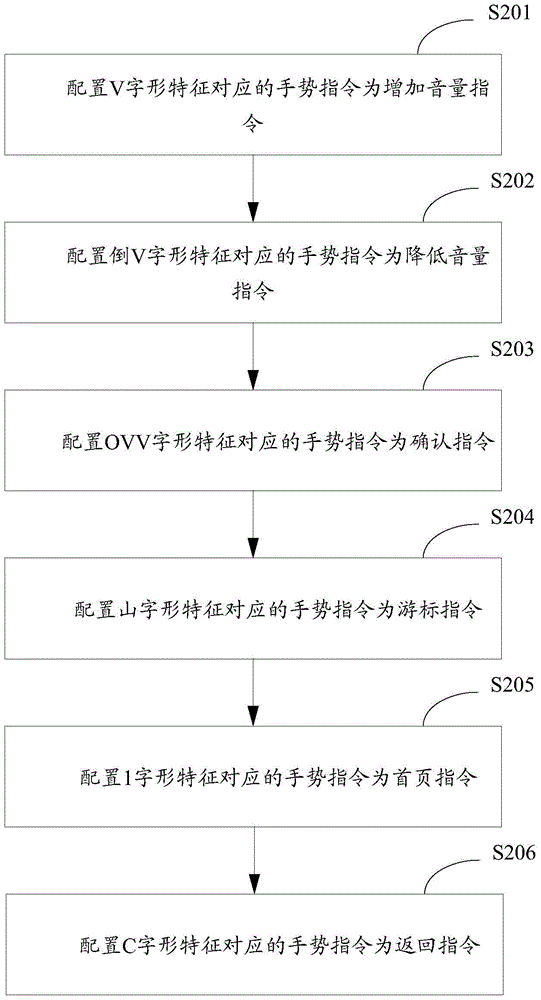

[0040] This embodiment of the present invention describes the types of glyph features in detail as follows:

[0041] The glyph features include at least one of: a V-shaped feature, an inverted-V-shaped feature, an OVV-shaped feature, a mountain-shaped feature, a 1-shaped feature, and a C-shaped feature.
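The six glyph types in [0041] map naturally onto an enumeration, with one stored stroke template per type. The Python sketch below is illustrative only: `Glyph`, `TEMPLATES`, `stored_glyphs` and all coordinates are assumptions, and templates are given only for the V-shaped, inverted-V-shaped, 1-shaped and C-shaped features because the source does not describe the stroke geometry of the OVV-shaped and mountain-shaped features.

```python
from enum import Enum
from typing import Dict, List, Tuple

Point = Tuple[float, float]


class Glyph(Enum):
    """The six glyph feature types listed in [0041]."""
    V = "V-shaped"
    INVERTED_V = "inverted V-shaped"
    OVV = "OVV-shaped"
    MOUNTAIN = "mountain-shaped"
    ONE = "1-shaped"
    C = "C-shaped"


# Hypothetical stroke templates in screen coordinates (y grows downward).
# OVV and MOUNTAIN are deliberately left out: their shapes are not specified.
TEMPLATES: Dict[Glyph, List[Point]] = {
    Glyph.V: [(0, 0), (1, 2), (2, 0)],
    Glyph.INVERTED_V: [(0, 2), (1, 0), (2, 2)],
    Glyph.ONE: [(0, 0), (0, 2)],
    Glyph.C: [(2, 0), (0.6, 0.3), (0, 1), (0.6, 1.7), (2, 2)],  # rough open arc
}


def stored_glyphs() -> List[Glyph]:
    """Glyph types that currently have a template, in declaration order."""
    return [g for g in Glyph if g in TEMPLATES]
```

Keying templates by an enum rather than by free-form strings makes a missing or misspelled glyph type fail early, and adding a new glyph type means adding one enum member and one template.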

[0042] In this embodiment of the present invention, multiple types of glyph features are defined so that multiple different gesture commands can subsequently be matched.

Embodiment 3

[0044] The implementation flow of step S103 of the method for interaction between the VR/AR system and the user provided by this embodiment of the present invention is described in detail as follows:

[0045] matching the captured gesture graphic against the pre-stored V-shaped feature; or,

[0046] matching the captured gesture graphic against the pre-stored inverted-V-shaped feature; or,

[0047] matching the captured gesture graphic against the pre-stored OVV-shaped feature; or,

[0048] matching the captured gesture graphic against the pre-stored mountain-shaped feature; or,

[0049] matching the captured gesture graphic against the pre-stored 1-shaped feature; or,

[0050] matching the captured gesture graphic against the pre-stored C-shaped feature.

[0051] In this embodiment of the present invention, the captured gesture graphic is matched against the pre-stored glyph features in a preset matching order, so as to meet the interaction requirements between the VR/AR sy...
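Matching in a preset order can be sketched as a first-match scan. The sketch assumes that the captured gesture and each pre-stored glyph have already been reduced to fixed-length feature vectors of equal length; `PRESET_ORDER` follows the listing order of paragraphs [0045] to [0050], while the threshold, the vector format and the name `match_in_order` are hypothetical.

```python
import math
from typing import Mapping, Optional, Sequence

FeatureVector = Sequence[float]

# Hypothetical preset order, taken from the listing order in [0045]-[0050].
PRESET_ORDER = ("V", "INVERTED_V", "OVV", "MOUNTAIN", "ONE", "C")


def match_in_order(
    features: FeatureVector,
    templates: Mapping[str, FeatureVector],
    order: Sequence[str] = PRESET_ORDER,
    threshold: float = 0.5,  # hypothetical acceptance distance
) -> Optional[str]:
    """Return the first glyph, in preset order, whose template lies within `threshold`."""
    for name in order:
        template = templates.get(name)
        if template is None:
            continue  # no template stored for this glyph type
        # math.dist raises ValueError if the two vectors differ in length
        if math.dist(features, template) <= threshold:
            return name  # stop at the first acceptable match
    return None  # matching failed; nothing is sent to the processor
```

When two templates both fall within the threshold, the scan returns whichever comes first in the order, so the order itself acts as a priority; a best-score search would instead compare every template and return the closest one.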
