Image description generation method based on deep LSTM network

An image description method and network technology, applied in the field of image understanding, that addresses problems such as too few levels of multimodal information transformation, weak semantic information in the generated sentences, and difficulty in improving overall performance, with the effects of improving semantic expression ability, preventing over-fitting, and achieving high accuracy.
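The "deep LSTM" in the title, and the concern about too few levels of multimodal transformation, refer to stacking several LSTM layers so that the hidden state of one layer becomes the input of the next. The excerpt does not give the number of layers, the hidden size, or how image features are fed in, so the following is only a minimal pure-Python sketch of a generic stacked LSTM forward pass, assuming the standard input/forget/output-gate formulation. The names `LSTMLayer` and `DeepLSTM` are hypothetical, and the weights are random for illustration; this is not the patent's trained model.

```python
import math
import random
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
GATES = "ifog"  # input, forget, output gates and candidate cell value g


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(w: Matrix, v: Vector) -> Vector:
    """Dense matrix-vector product on plain lists."""
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


class LSTMLayer:
    """One LSTM layer in the standard i/f/o/g formulation (hypothetical helper)."""

    def __init__(self, input_size: int, hidden_size: int, rng: random.Random) -> None:
        self.hidden_size = hidden_size
        concat = input_size + hidden_size
        # One weight matrix and bias per gate, applied to [x_t; h_{t-1}].
        self.w: Dict[str, Matrix] = {
            gate: [[rng.uniform(-0.1, 0.1) for _ in range(concat)] for _ in range(hidden_size)]
            for gate in GATES
        }
        self.b: Dict[str, Vector] = {gate: [0.0] * hidden_size for gate in GATES}

    def step(self, x: Vector, h: Vector, c: Vector) -> Tuple[Vector, Vector]:
        z = x + h  # list concatenation [x_t; h_{t-1}]
        pre = {
            gate: [a + b for a, b in zip(matvec(self.w[gate], z), self.b[gate])]
            for gate in GATES
        }
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        o = [sigmoid(v) for v in pre["o"]]
        g = [math.tanh(v) for v in pre["g"]]
        c_new = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
        h_new = [ov * math.tanh(cv) for ov, cv in zip(o, c_new)]
        return h_new, c_new


class DeepLSTM:
    """Stack of LSTM layers: the hidden state of layer k is the input of layer k+1."""

    def __init__(self, input_size: int, hidden_size: int, num_layers: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.layers = [
            LSTMLayer(input_size if k == 0 else hidden_size, hidden_size, rng)
            for k in range(num_layers)
        ]

    def forward(self, xs: List[Vector]) -> List[Vector]:
        hs = [[0.0] * layer.hidden_size for layer in self.layers]
        cs = [[0.0] * layer.hidden_size for layer in self.layers]
        outputs: List[Vector] = []
        for x in xs:  # one time step per input vector (e.g. a word embedding)
            inp = x
            for k, layer in enumerate(self.layers):
                hs[k], cs[k] = layer.step(inp, hs[k], cs[k])
                inp = hs[k]  # feed this layer's output to the next layer
            outputs.append(inp)  # top-layer hidden state at this step
        return outputs
```

For example, `DeepLSTM(input_size=4, hidden_size=3, num_layers=2).forward([[0.1, 0.2, 0.3, 0.4]] * 5)` returns five 3-dimensional top-layer states. Adding layers deepens the chain of nonlinear transformations between input and output, which is the sense in which a deeper LSTM adds levels of transformation.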


Embodiment

[0057] The present invention is described in detail below with reference to the accompanying drawings and specific embodiments. This embodiment is implemented on the basis of the technical solution of the present invention, and a detailed implementation and specific operating procedure are given, but the protection scope of the present invention is not limited to the following embodiments.

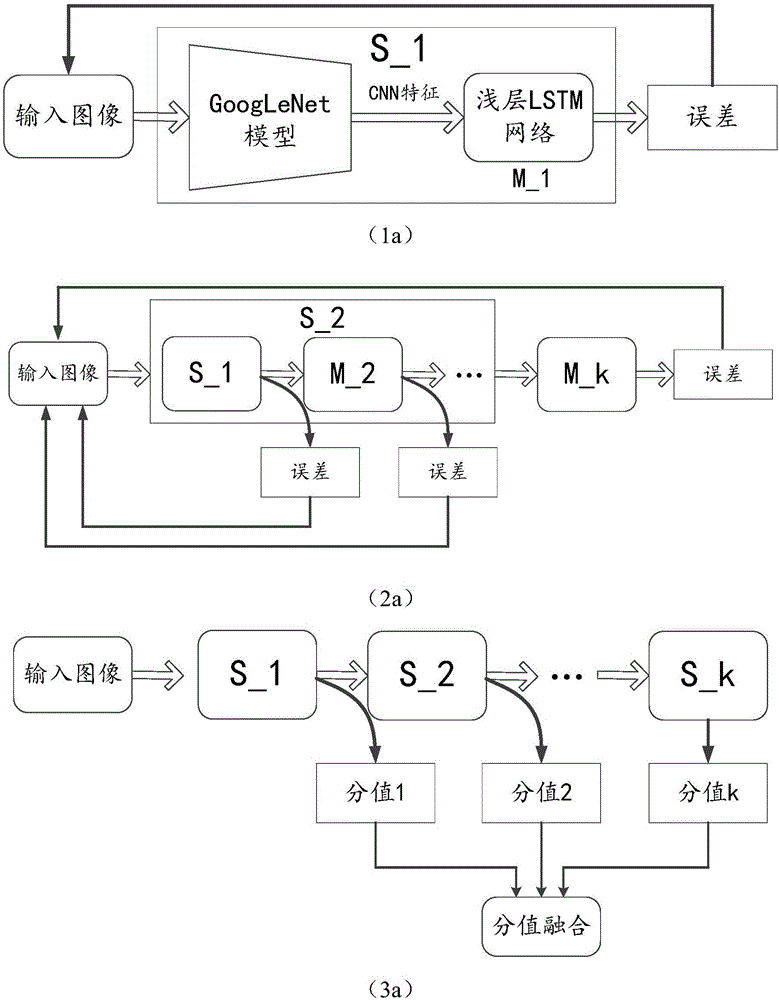

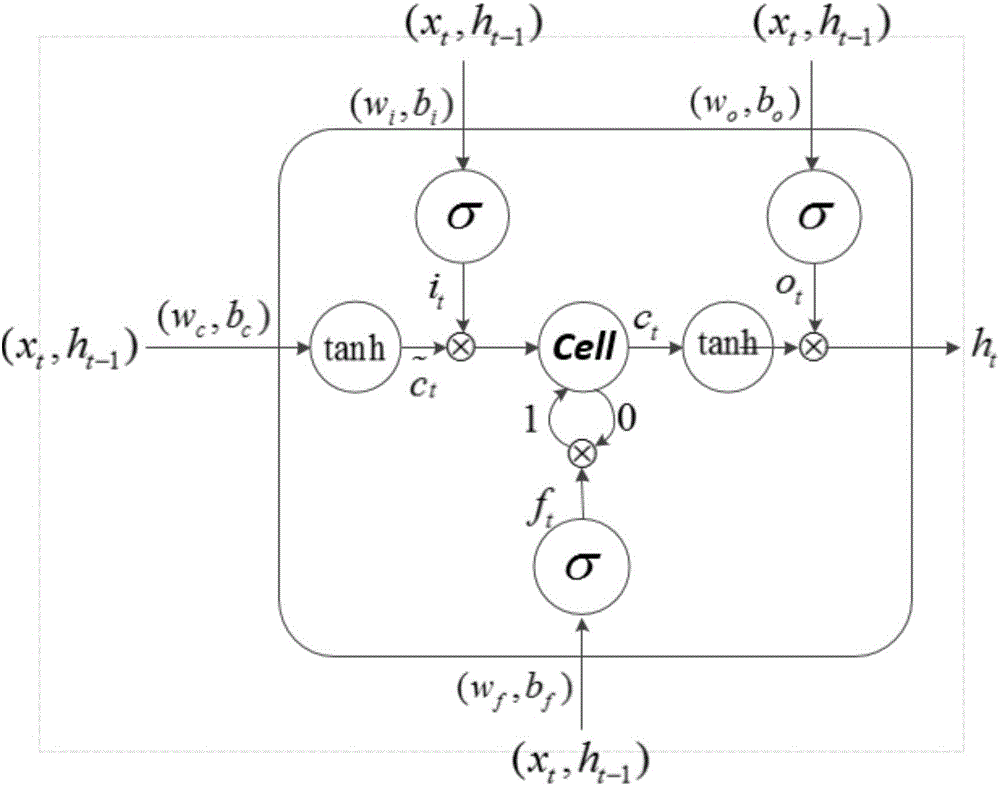

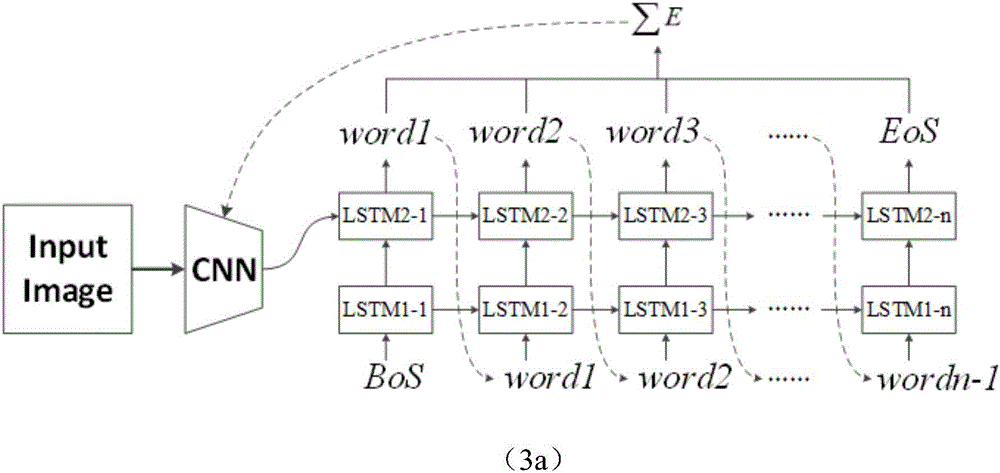

[0058] A method of image description generation based on a deep LSTM network, as shown in image 3, Figure 4 and Figure 5, includes the following steps:

[0059] 1) Build a training set, a validation set and a test set, and use the GoogLeNet model to extract the CNN features of each image. The specific process includes:

[0060] 11) Convert the training, validation and test sets into HDF5 format. Each image corresponds to multiple labels, and each label is a word in the reference sentence corresponding to the image;

[0061] 12) Read the image, scale it to a size of 256×256, t...
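Steps 11) and 12) describe data preparation without specifying the vocabulary scheme or the resampling method, and step 12) is truncated in this excerpt. The following pure-Python sketch shows one possible reading: every word of each reference sentence is mapped to an integer label, so one image carries several label sequences, and the image is scaled to 256×256. The function names, the reserved `<pad>`/`<unk>` ids, and the use of nearest-neighbour scaling are all hypothetical choices, not the patent's method. Writing the arrays to HDF5 (for example with the h5py library) and the GoogLeNet feature extraction are not reproduced here.

```python
from typing import Dict, List, Sequence

Image = List[List[int]]  # grayscale image as rows of pixel values (simplification)


def build_vocab(sentences: Sequence[str]) -> Dict[str, int]:
    """Assign an integer id to every word; ids 0 and 1 are reserved (hypothetical convention)."""
    words = {w for s in sentences for w in s.lower().split()}
    vocab = {"<pad>": 0, "<unk>": 1}
    for w in sorted(words):  # sorted for a deterministic id assignment
        vocab[w] = len(vocab)
    return vocab


def sentence_to_labels(sentence: str, vocab: Dict[str, int]) -> List[int]:
    """Each label is one word of the reference sentence, encoded as its id."""
    return [vocab.get(w, vocab["<unk>"]) for w in sentence.lower().split()]


def build_label_records(
    refs: Dict[str, List[str]], vocab: Dict[str, int]
) -> Dict[str, List[List[int]]]:
    """One image id -> several label sequences, one per reference sentence."""
    return {img: [sentence_to_labels(s, vocab) for s in sents] for img, sents in refs.items()}


def resize_nearest(image: Image, out_h: int = 256, out_w: int = 256) -> Image:
    """Nearest-neighbour scaling to out_h x out_w (stand-in for a real image library)."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]
```

For instance, with `refs = {"img_001": ["A dog runs", "a brown dog runs on grass"]}`, the vocabulary built from both sentences gives `build_label_records(refs, vocab)["img_001"] == [[2, 4, 7], [2, 3, 4, 7, 6, 5]]`. A production pipeline would use a proper image library with interpolation rather than this list-based resampler.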
