Video interaction method

A video interaction method, applied in the field of video interaction, that addresses the problems of overly dense interactive content that degrades the viewing experience and of poor-quality video interactive content, and achieves accurate positioning.

Examples

Embodiment 1

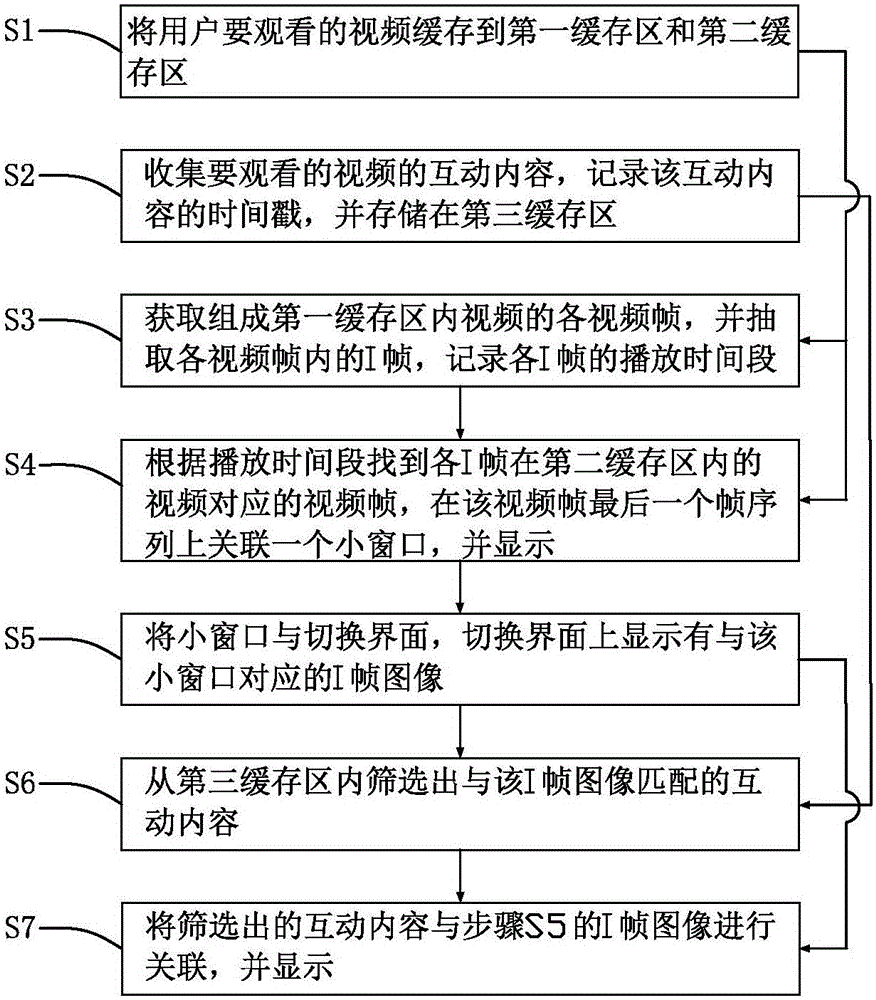

[0064] The present invention provides a video interaction method. As shown in Figure 1, the interaction method includes:

[0065] S1: Cache the video that the user wants to watch into the first buffer area and the second buffer area;

[0066] S2: Collect the interactive content of the video to be watched, record the timestamp of each item of interactive content, and store it in the third buffer area. The interactive content comprises the existing interactive content stored by the interactive content server and new comments collected in real time;

[0067] S3: Obtain the video frames that make up the video in the first buffer area, extract the I frames from those video frames, and record the playing time period of each I frame;

[0068] S4: According to the playing time period, find in the second buffer area the video frames corresponding to each I frame, associate a small window with the last frame in the sequence of those video frames, and display it;

[0069] S5...
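Steps S3 and S4 can be illustrated with a minimal Python sketch. The `Frame` class and both function names are hypothetical and do not come from the patent. The sketch also assumes that the "playing time period" of an I frame runs from that I frame's start time to the start of the next I frame (or to the end of the video for the last one), which the text above does not state explicitly.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Frame:
    """Hypothetical frame record; not a structure defined by the patent."""
    index: int       # position of the frame in the video
    kind: str        # "I", "P", or "B"
    start: float     # presentation time in seconds
    duration: float  # display duration in seconds


def i_frame_periods(frames: List[Frame]) -> List[Tuple[int, float, float]]:
    """S3 (sketch): return (i_frame_index, period_start, period_end) per I frame.

    Assumption: a period ends where the next I frame starts.
    Frames are expected in playback order.
    """
    positions = [k for k, f in enumerate(frames) if f.kind == "I"]
    periods = []
    for n, pos in enumerate(positions):
        start = frames[pos].start
        if n + 1 < len(positions):
            end = frames[positions[n + 1]].start
        else:
            end = frames[-1].start + frames[-1].duration
        periods.append((frames[pos].index, start, end))
    return periods


def window_anchor(second_buffer: List[Frame],
                  period: Tuple[int, float, float]) -> Optional[int]:
    """S4 (sketch): index of the last buffered frame inside the period.

    The small window would be associated with this frame.
    Returns None if no buffered frame falls inside the period.
    """
    _, start, end = period
    in_period = [f for f in second_buffer if start <= f.start < end]
    if not in_period:
        return None
    return max(in_period, key=lambda f: f.start).index
```

For a five-frame sequence of kinds I, P, P, I, P, the first I frame's period covers the first three frames, so the window would be anchored on the third frame (index 2).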

Embodiment 2

[0074] The video interaction method provided by Embodiment 2 of the present invention differs from Embodiment 1 in that the small window described in step S4 shows either the I-frame image corresponding to that position or the keywords recorded in the I frame.

[0075] The specific method of step S4 is:

[0076] S41: Determine whether the reduction in the number of video data packets in the second buffer area during a preset time period is greater than a preset reduction threshold. If it is, the small window displays the I-frame image corresponding to that position; otherwise, the small window displays the keywords recorded in that I frame.
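The decision in S41 can be sketched as follows. The function name, the string return values, and the way the reduction is measured (first sample minus last sample of the buffer's packet count within the preset period) are assumptions made for illustration; the patent text does not specify them.

```python
from typing import Sequence


def choose_window_content(packet_counts: Sequence[int],
                          reduction_threshold: int) -> str:
    """S41 (sketch): pick what the small window displays.

    packet_counts holds samples of the number of video data packets in the
    second buffer area during the preset time period, oldest first.
    Assumption: the reduction is the first sample minus the last sample.
    """
    if not packet_counts:
        raise ValueError("at least one packet-count sample is required")
    reduction = packet_counts[0] - packet_counts[-1]
    # Strictly greater than the threshold selects the I-frame image,
    # anything else selects the keywords recorded in the I frame.
    return "image" if reduction > reduction_threshold else "keyword"
```

A reduction exactly equal to the threshold selects the keywords, because S41 requires the reduction to be greater than the threshold.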

[0077] The small window provided by the present invention can be an I-frame image or a text note. Which one is displayed is chosen mainly so as not to cause stuttering or network delay during video playback, so the present invention monitors and plays in real time The ne...

Embodiment 3

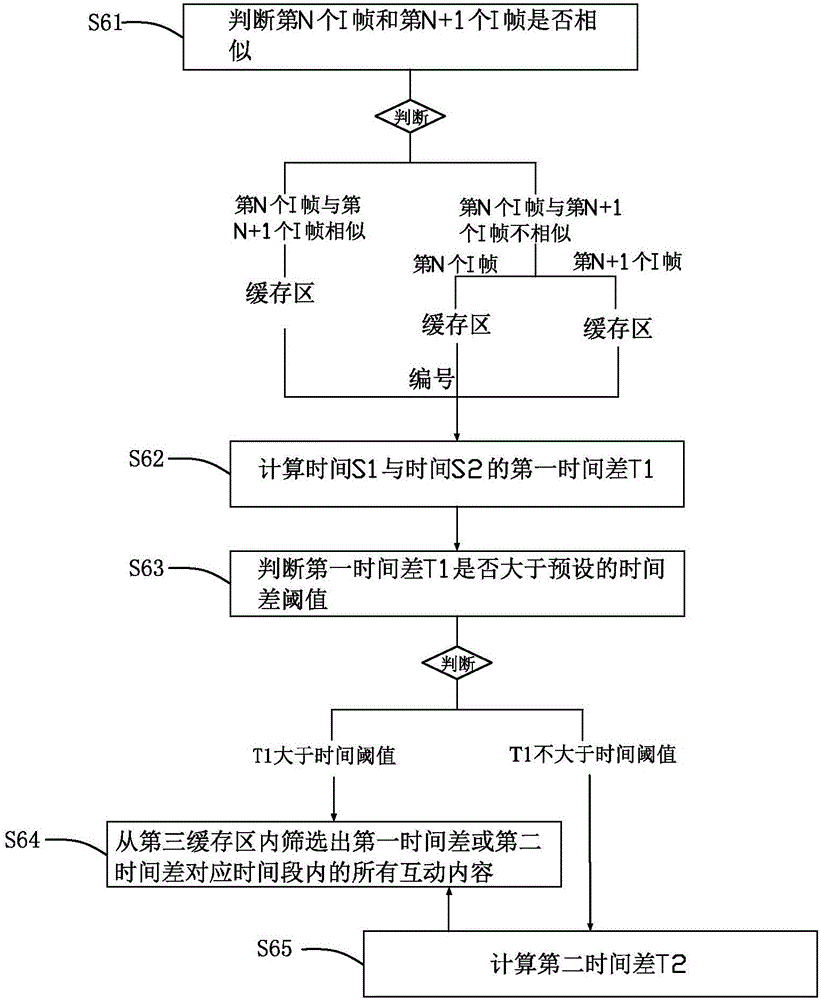

[0079] The video interaction method provided by Embodiment 3 of the present invention differs from Embodiment 1 in that, as shown in Figure 2, step S6 specifically includes the following steps:

[0080] S61: Determine whether the Nth I frame and the (N+1)th I frame are similar, where N≥1. If they are similar, put the Nth I frame and the (N+1)th I frame into the same storage area; otherwise, put them into different storage areas. Number each storage area;

[0081] S62: For each storage area, calculate the first time difference T1 between the time S1 of the first frame in the sequence of video frames corresponding to the first I frame and the time S2 of the last frame in the sequence of video frames corresponding to the last I frame;

[0082] S63: Determine whether the first time difference T1 is greater than a preset time difference threshold. If so, go to step S64; otherwise, go to step S65;

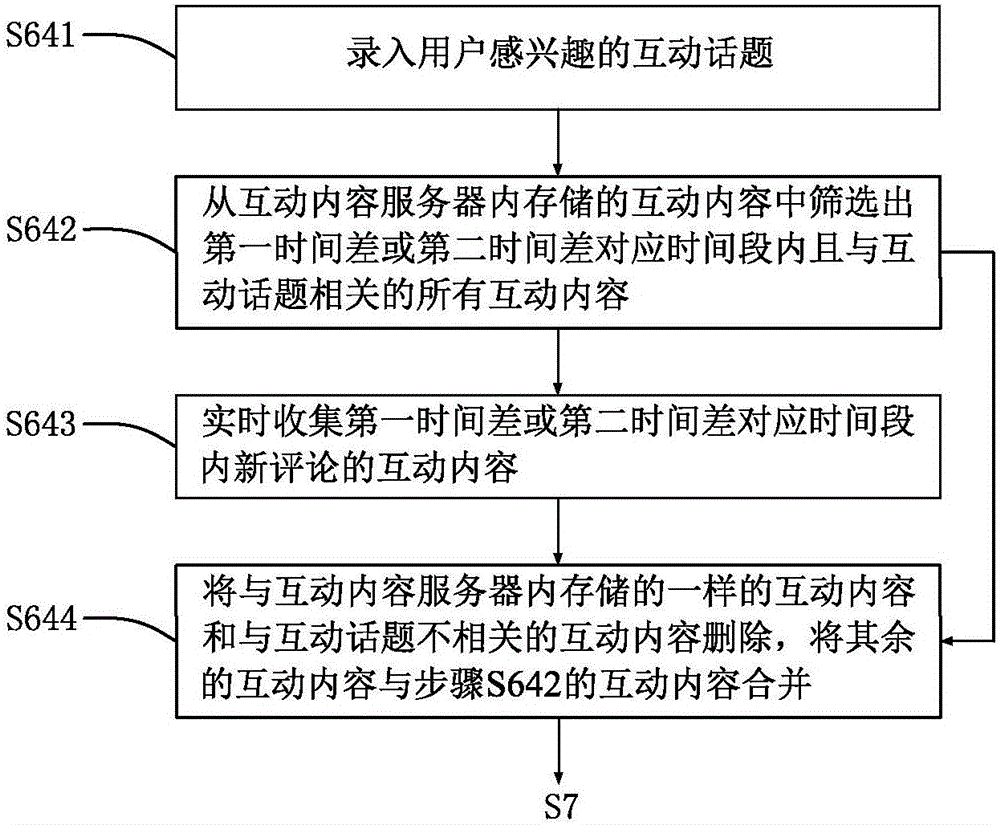

[0083] S64: Fi...
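Steps S61 to S63 can be sketched in Python as below. The tuple layout, the function names, and the `similar` callback are hypothetical: the text above does not say how similarity between I frames is measured, so the sketch takes it as a parameter. The contents of steps S64 and S65 are not available here, so the sketch only reports which of the two would be taken.

```python
from typing import Callable, List, Tuple

# Hypothetical I-frame record: (descriptor, period_start, period_end), where
# period_start is the time of the first frame and period_end the time of the
# last frame in the sequence of video frames tied to this I frame.
IFrame = Tuple[str, float, float]


def group_similar(i_frames: List[IFrame],
                  similar: Callable[[IFrame, IFrame], bool]) -> List[List[IFrame]]:
    """S61 (sketch): consecutive similar I frames share a storage area.

    Storage area number k corresponds to list position k - 1 in the result.
    """
    areas: List[List[IFrame]] = []
    for frame in i_frames:
        if areas and similar(areas[-1][-1], frame):
            areas[-1].append(frame)
        else:
            areas.append([frame])
    return areas


def first_time_difference(area: List[IFrame]) -> float:
    """S62 (sketch): T1 = S2 - S1 for one storage area."""
    s1 = area[0][1]   # time of the first frame for the first I frame
    s2 = area[-1][2]  # time of the last frame for the last I frame
    return s2 - s1


def next_step(area: List[IFrame], threshold: float) -> str:
    """S63 (sketch): name the step that follows for this storage area."""
    return "S64" if first_time_difference(area) > threshold else "S65"
```

As a usage example, `group_similar(frames, lambda a, b: a[0] == b[0])` treats two I frames as similar when their descriptors match, which stands in for whatever similarity measure the method actually uses.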
