Hand positioning method and device in three-dimensional space, and intelligent equipment

A hand positioning technology for three-dimensional space, applied in image data processing, instruments, calculation, etc., that addresses problems such as poor robustness, susceptibility to environmental interference, and a large amount of calculation.


AI Technical Summary

Problems solved by technology

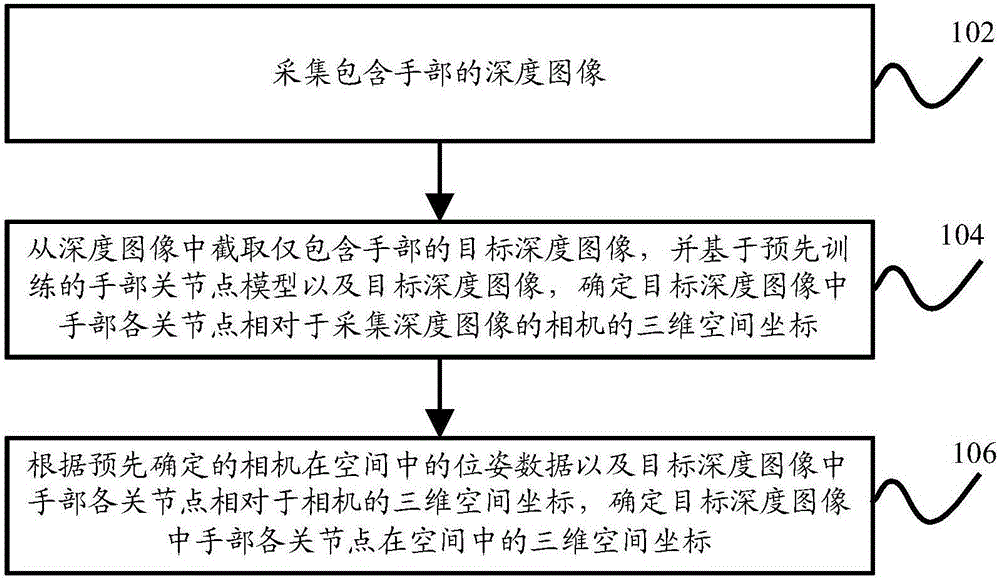

Method used


Examples

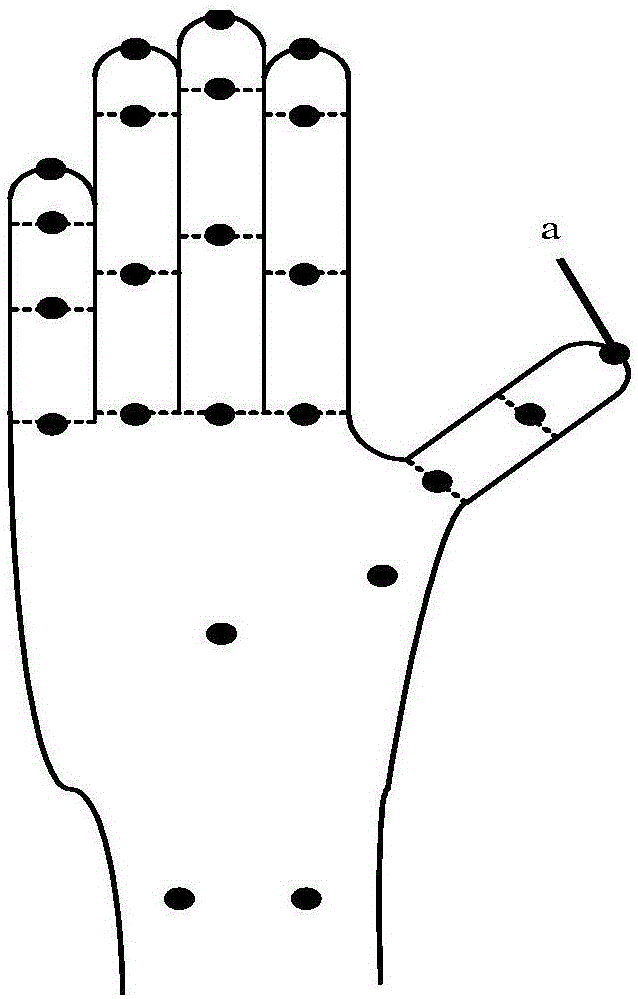

Embodiment approach

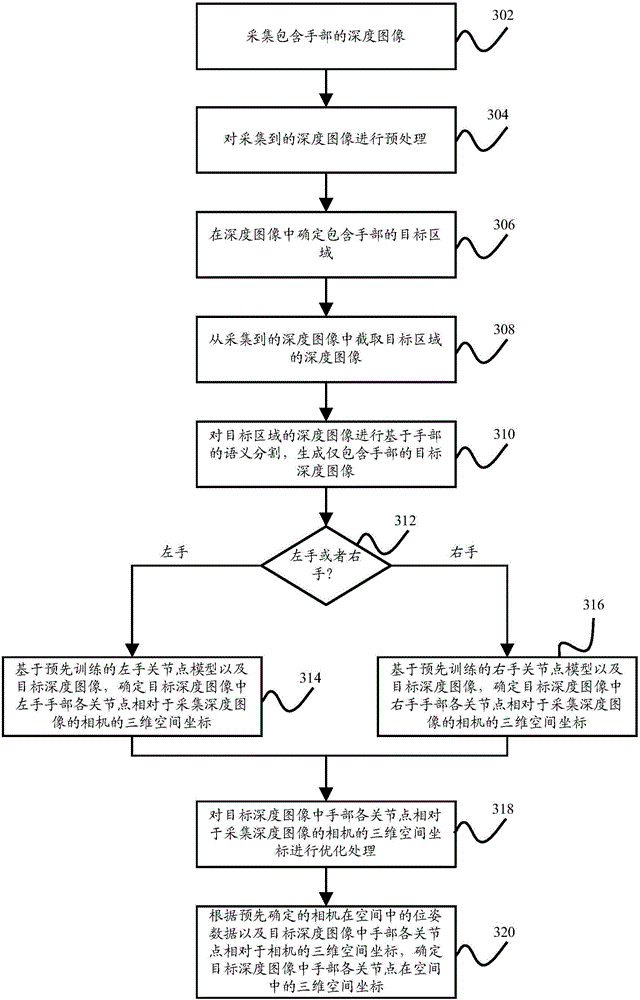

[0049] Determine the target area containing the hand in the depth image that contains the hand. The target area can be slightly larger than the hand. Either of the following two embodiments can be used:

Embodiment approach 1

[0050] Embodiment 1: Under the condition that the multi-frame depth images collected before the current frame all contain a hand, determine the target area containing the hand in the current frame depth image according to the movement track of the target area containing the hand across those earlier frames.

[0051] More preferably, the multi-frame depth images collected before the current frame in this embodiment are collected continuously with the current frame depth image; that is, they are consecutive frames, and the last of them is the frame immediately preceding the current frame.

[0052] As a more specific embodiment, the two frames of depth images collected before the current frame are depth image A and depth image B. Under the condition that both depth image A and depth image B co...
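The idea of Embodiment 1 can be illustrated with a minimal sketch. The source text is truncated at this point, so the extrapolation rule used here (constant velocity between depth image A and depth image B) is an assumption, not the patent's stated method. The `Box` type, the `predict_box` function, and the `margin` parameter are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned target area in pixel coordinates (hypothetical type)."""
    x: float  # left edge
    y: float  # top edge
    w: float  # width
    h: float  # height

    @property
    def center(self):
        return (self.x + self.w / 2, self.y + self.h / 2)


def predict_box(box_a: Box, box_b: Box, width: int, height: int,
                margin: float = 0.1) -> Box:
    """Extrapolate the current-frame target area from frames A (older) and B.

    Assumption: the center keeps moving by the same offset it moved
    between A and B (constant velocity).
    """
    (ax, ay), (bx, by) = box_a.center, box_b.center
    cx, cy = bx + (bx - ax), by + (by - ay)

    # Pad the size so the area stays slightly larger than the hand.
    w, h = box_b.w * (1 + margin), box_b.h * (1 + margin)

    # Clip the predicted area to the image bounds.
    x0, y0 = max(0.0, cx - w / 2), max(0.0, cy - h / 2)
    x1, y1 = min(float(width), cx + w / 2), min(float(height), cy + h / 2)
    return Box(x0, y0, max(0.0, x1 - x0), max(0.0, y1 - y0))
```

For example, `predict_box(Box(100, 100, 50, 50), Box(110, 105, 50, 50), 640, 480)` shifts the area by the same (10, 5) offset observed between A and B and enlarges it by the margin. A longer track could be fitted with more frames, but two frames are enough to show the principle.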

Embodiment approach 2

[0056] Embodiment 2: Under the condition that the depth image of the frame preceding the current frame does not contain a hand, or that among the multi-frame depth images collected before the current frame only the immediately preceding frame contains a hand, or that a new hand appears in the current frame depth image, determine the target area containing the hand in the current frame depth image based on the pre-trained hand detection model and the current frame depth image.

[0057] In a specific implementation, even if the previous frame depth image does not contain a hand, the current frame depth image may contain one. Therefore, the pre-trained hand detection model is used to determine whether the current frame depth image contains a hand and, if it does, to determine the target area containing the hand in the current frame depth image.
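The choice between the two embodiments can be sketched as a small dispatch routine. This is an illustrative reading of the conditions in [0050] and [0056], not the patent's implementation. The names `needs_detection` and `locate_target_area`, the `detector` and `tracker` callables, and the treatment of an empty history as "no hand in the previous frame" are all assumptions.

```python
from typing import Callable, Optional, Sequence, Tuple

BoxT = Tuple[int, int, int, int]  # (x, y, w, h), hypothetical box format


def needs_detection(prev_has_hand: Sequence[bool],
                    new_hand_appeared: bool = False) -> bool:
    """Return True when the hand detection model should be used.

    prev_has_hand holds one flag per frame collected before the current
    frame, oldest first; the last entry is the immediately preceding frame.
    """
    # Condition 1: the previous frame contains no hand
    # (assumption: an empty history is treated the same way).
    if not prev_has_hand or not prev_has_hand[-1]:
        return True
    # Condition 2: only the previous frame contains a hand, so there is
    # no movement track to extrapolate from.
    if not any(prev_has_hand[:-1]):
        return True
    # Condition 3: a new hand appears in the current frame.
    return new_hand_appeared


def locate_target_area(depth_image,
                       prev_has_hand: Sequence[bool],
                       new_hand_appeared: bool,
                       detector: Callable[[object], Optional[BoxT]],
                       tracker: Callable[[], BoxT]) -> Optional[BoxT]:
    """Dispatch to the detector (Embodiment 2) or the tracker (Embodiment 1)."""
    if needs_detection(prev_has_hand, new_hand_appeared):
        # The detector returns None when the current frame has no hand.
        return detector(depth_image)
    return tracker()
```

Running the detector only when no usable track exists keeps the per-frame cost low in the common case where the hand is already being followed.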

[0058] Since th...
