RGB-D video based robot target recognition and localization method and system

A target recognition and localization technique for robots, applied in the field of robot target recognition and positioning. It addresses three problems: the lack of a systematic method for target recognition and precise positioning in RGB-Depth video, the complexity of the robot's working scene, and high computational complexity. The stated effects are enhanced sensitivity to spatial levels, guaranteed identity and relevance, and high recognition and positioning accuracy.



Detailed Description of Embodiments

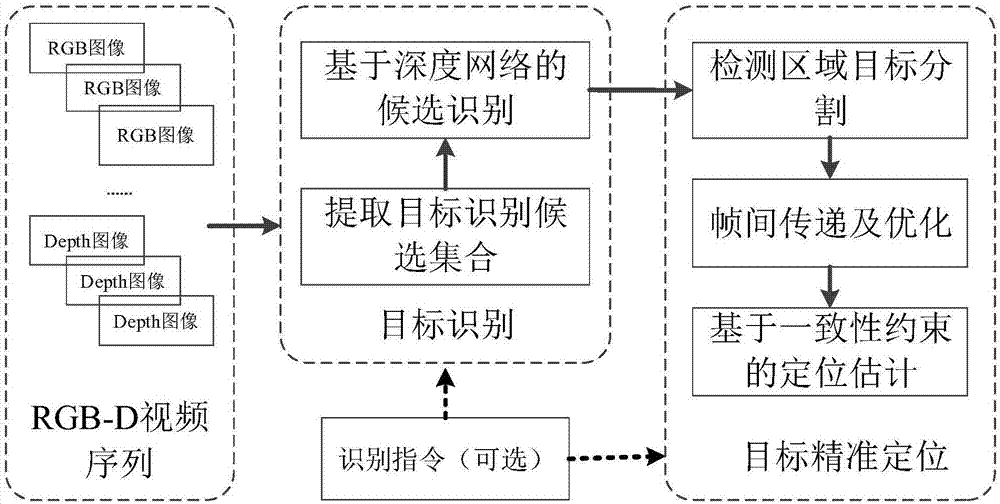

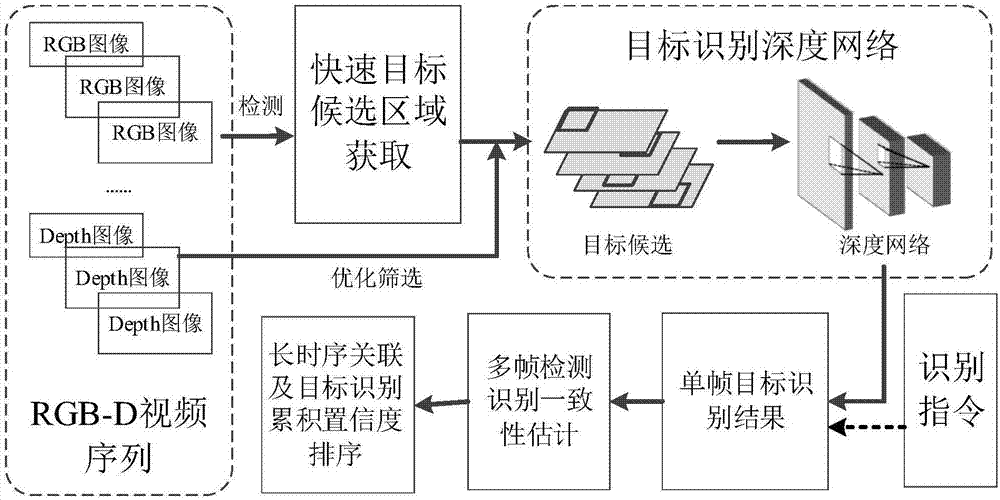

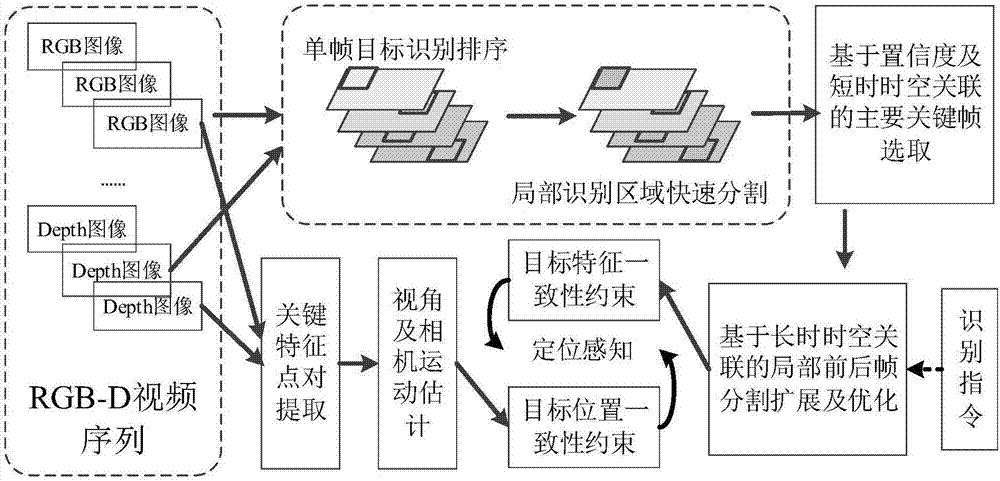

[0042] Figure 1 shows the overall flow of the method according to an embodiment of the present invention. As Figure 1 shows, the method comprises two steps, target recognition and precise target positioning, and target recognition is a prerequisite for precise target positioning. The specific implementation is as follows:

[0043] (1) Acquire the RGB-D video frame sequence of the scene containing the target to be recognized and positioned;

[0044] Preferably, in one embodiment of the present invention, the RGB-D video sequence of the scene containing the target to be recognized and positioned can be captured by a depth vision sensor such as Kinect. Alternatively, RGB image pairs can be captured by binocular imaging equipment, and the scene depth estimated from the computed parallax is used as the depth channel to synthesize the RGB-D video that serves as input.
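The binocular alternative in paragraph [0044] can be illustrated with a minimal sketch. It assumes a rectified stereo pair, for which depth follows the standard relation Z = f · B / d (focal length f in pixels, baseline B in metres, parallax d in pixels). The function names `disparity_to_depth` and `make_rgbd_frame`, the nested-list frame layout, and the numeric values are hypothetical and are not taken from the patent; the parallax map itself is assumed to have been computed already by some stereo matching step.

```python
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]
RGBD = Tuple[int, int, int, Optional[float]]


def disparity_to_depth(disparity_px: float, focal_px: float,
                       baseline_m: float) -> Optional[float]:
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        return None  # no valid stereo match, so depth is unknown
    return focal_px * baseline_m / disparity_px


def make_rgbd_frame(rgb: List[List[RGB]], disparity: List[List[float]],
                    focal_px: float, baseline_m: float) -> List[List[RGBD]]:
    """Attach an estimated depth channel to every pixel of one RGB frame."""
    if len(rgb) != len(disparity):
        raise ValueError("RGB frame and parallax map differ in height")
    frame: List[List[RGBD]] = []
    for rgb_row, disp_row in zip(rgb, disparity):
        if len(rgb_row) != len(disp_row):
            raise ValueError("RGB frame and parallax map differ in width")
        frame.append([
            (r, g, b, disparity_to_depth(d, focal_px, baseline_m))
            for (r, g, b), d in zip(rgb_row, disp_row)
        ])
    return frame


if __name__ == "__main__":
    # Hypothetical 1x2 frame; the second pixel has no valid parallax.
    rgb_frame = [[(10, 20, 30), (40, 50, 60)]]
    parallax = [[35.0, 0.0]]
    print(make_rgbd_frame(rgb_frame, parallax, focal_px=700.0, baseline_m=0.1))
```

Pixels without a valid parallax value are given a depth of `None` rather than a guessed value, so later steps can decide how to treat them.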

[0045] (2) Extract the key video frames from the RGB-D video frame sequence, and extract the target...
