Batch streaming computing system performance guarantee method based on queue modeling

A queue-modeling technique for batch stream computing systems, applied in the field of distributed computing. It addresses three problems: performance cannot be evaluated, performance guarantees are inefficient, and performance dependencies are not considered comprehensively.


Embodiment Construction

[0081] The present invention will be described below in conjunction with the accompanying drawings and specific embodiments.

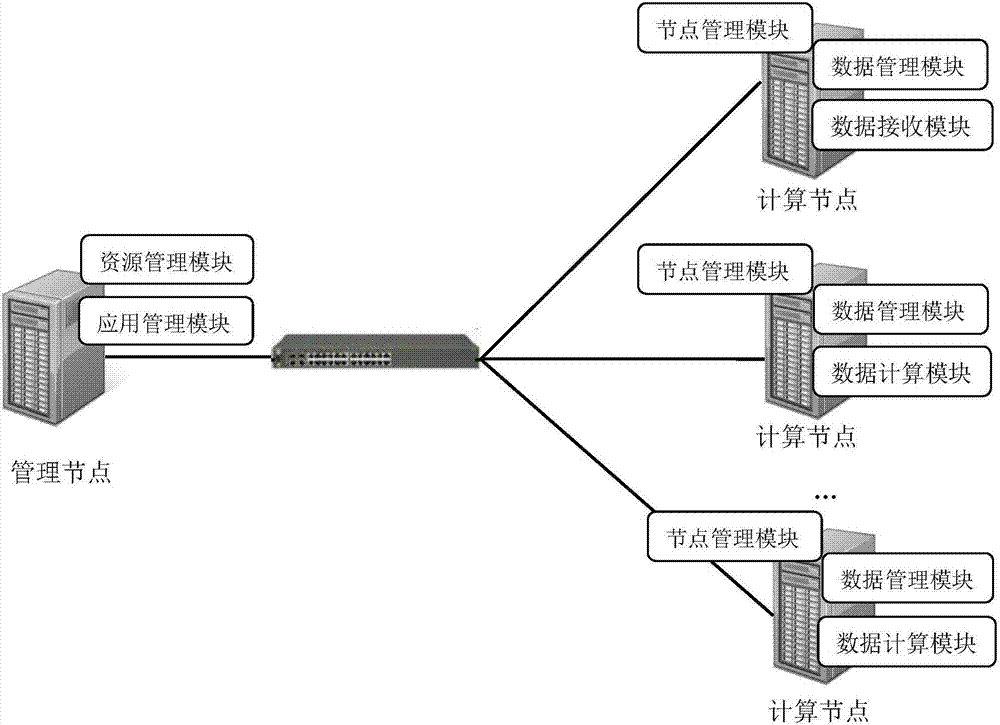

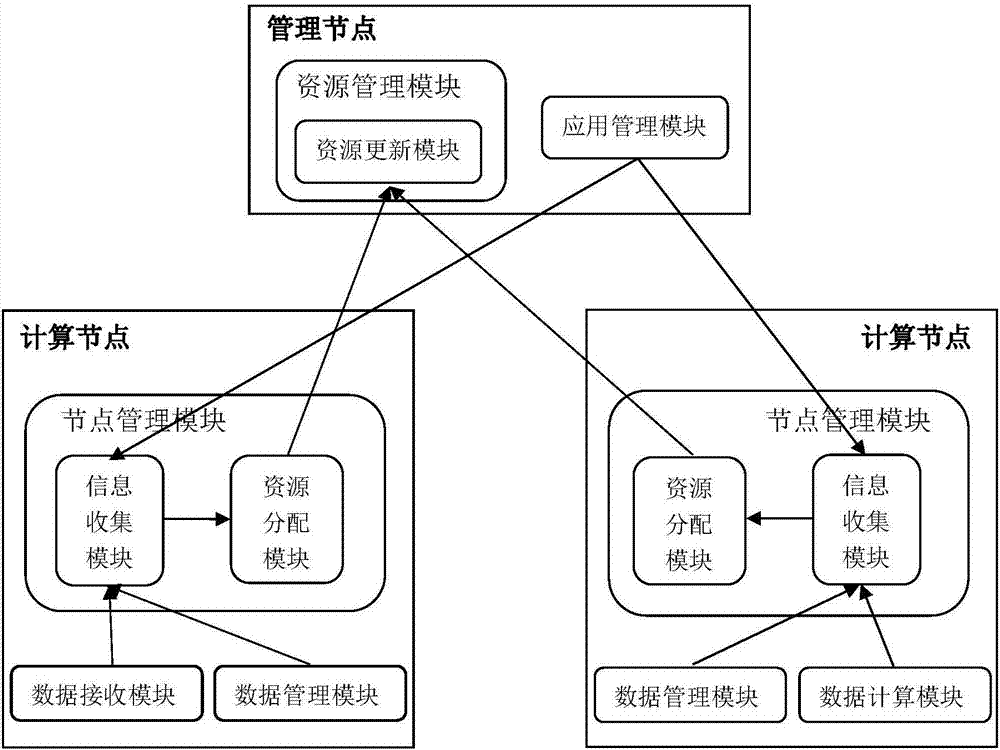

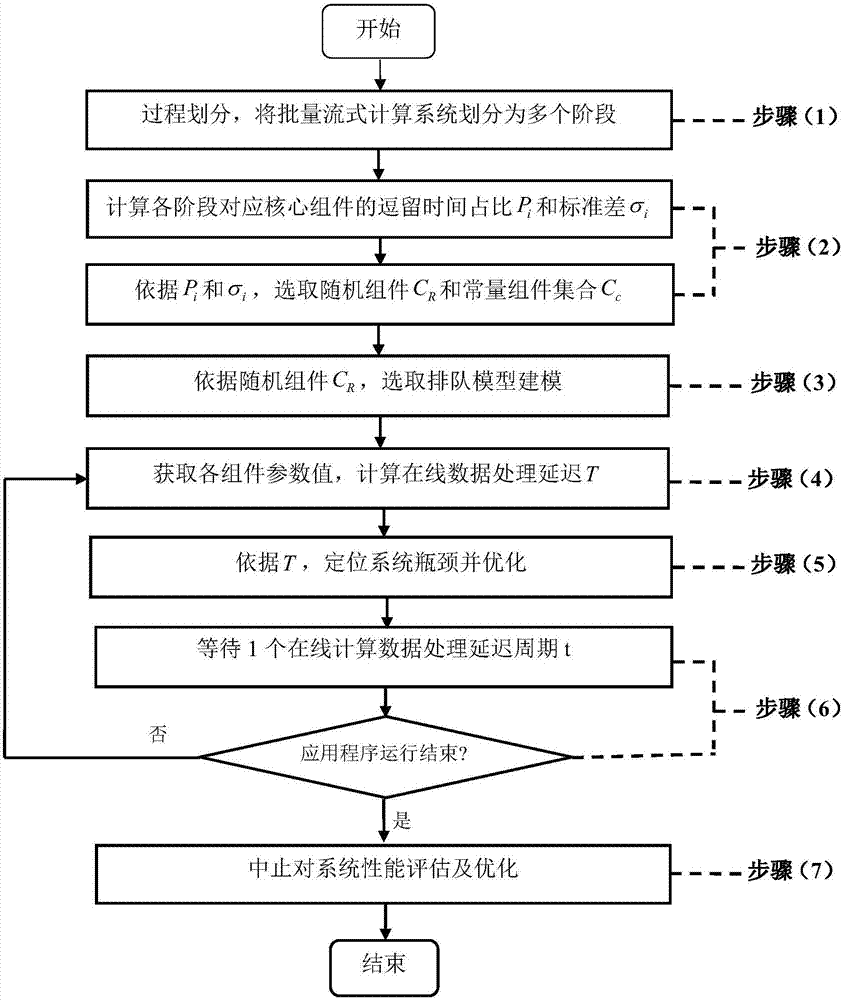

[0082] The specific implementation of the proposed performance guarantee method is described in combination with Spark Streaming, a batch stream computing system in wide use today. Figure 1 is a deployment diagram of the batch stream computing platform that hosts this method. The platform consists of multiple computer servers (platform nodes) connected through a network. Platform nodes fall into two categories: one management node (Master) and multiple computing nodes (Slave). The platform includes the following core software modules: a resource management module, a node management module, an application management module, a data receiving module, a data management module and a data calculation module. Among them, the resource manage...
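The deployment described above can be sketched as a small data model. This is only an illustrative sketch, not the patent's implementation: the names `NodeRole`, `PlatformNode`, `Platform`, and the `hostname` field are hypothetical, and the check for "at least one Slave" is an assumption drawn from the phrase "multiple computing nodes". The visible text does not say which node hosts which module, so the modules are listed by name only.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class NodeRole(Enum):
    MASTER = "Master"  # management node
    SLAVE = "Slave"    # computing node


# Core software modules named in the description. Their placement on
# Master or Slave nodes is not given in the visible text, so it is not modeled.
CORE_MODULES = (
    "resource management",
    "node management",
    "application management",
    "data receiving",
    "data management",
    "data calculation",
)


@dataclass(frozen=True)
class PlatformNode:
    hostname: str  # hypothetical identifier for a server
    role: NodeRole


@dataclass
class Platform:
    nodes: list[PlatformNode] = field(default_factory=list)

    def masters(self) -> list[PlatformNode]:
        return [n for n in self.nodes if n.role is NodeRole.MASTER]

    def slaves(self) -> list[PlatformNode]:
        return [n for n in self.nodes if n.role is NodeRole.SLAVE]

    def validate(self) -> None:
        # Assumption: exactly one Master and at least one Slave.
        if len(self.masters()) != 1:
            raise ValueError("expected exactly one management node (Master)")
        if not self.slaves():
            raise ValueError("expected at least one computing node (Slave)")


platform = Platform(
    [
        PlatformNode("master-0", NodeRole.MASTER),
        PlatformNode("slave-0", NodeRole.SLAVE),
        PlatformNode("slave-1", NodeRole.SLAVE),
    ]
)
platform.validate()  # raises ValueError if the topology is malformed
```

Keeping the role as an enum rather than a free-form string makes the two node categories explicit and lets `validate` reject a topology with no Master or no Slave.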
