VR video live broadcasting interaction method and device based on eye tracking technology

An eye-tracking and live video broadcasting technology, applied to color TV components, TV system components, televisions, and similar fields. It addresses the high latency, large data volume, and high bandwidth demand of VR video, so as to ensure quality while reducing bandwidth requirements and the effects of distortion and chromatic aberration.

Embodiment Construction

[0047] All the features disclosed in this specification, except mutually exclusive features and/or steps, can be combined in any way.

[0048] The present invention will be described in detail below in conjunction with the accompanying drawings.

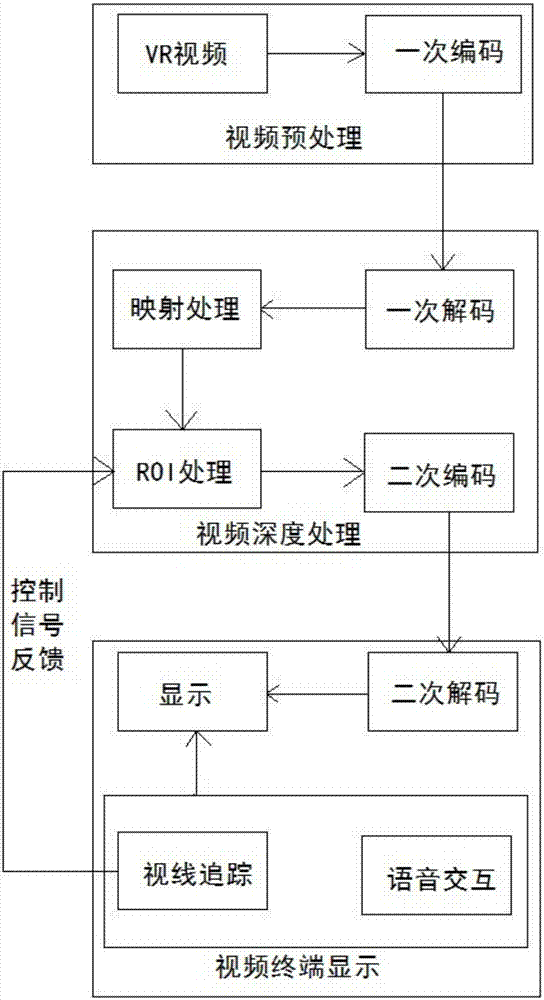

[0049] A VR video live broadcast interaction method based on eye-tracking technology, as shown in Figure 1, includes the following steps:

[0050] S1: VR video preprocessing

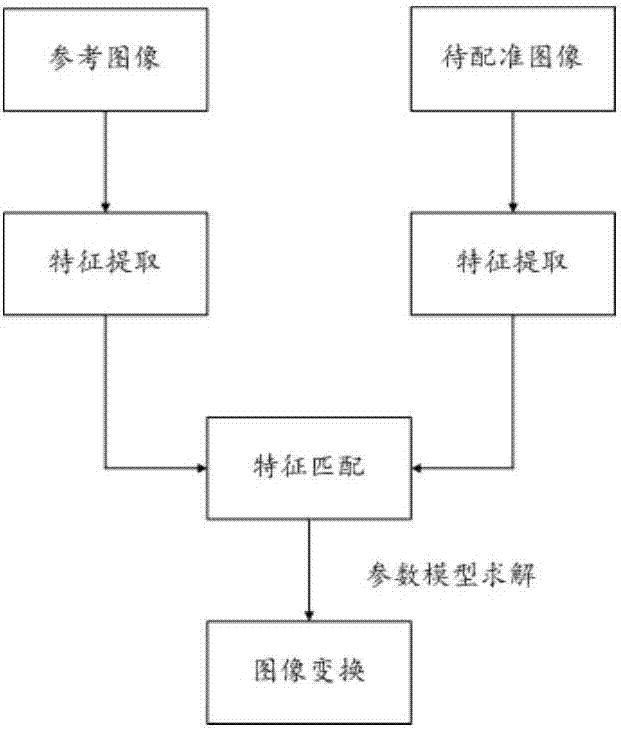

[0051] S11: Use multiple cameras to obtain multiple video sources, and stitch the multiple video sources to obtain a fused spherical VR video. The video stitching uses an invariant-feature-matching method which, as shown in Figure 2, comprises the following steps:

[0052] S11: Feature extraction, including establishment of scale space, detection of extreme points, precise positioning of extreme points, and generation of feature vectors;
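The scale-space and extreme-point stages of the feature extraction step can be illustrated with a difference-of-Gaussians (DoG) detector, as used in SIFT-style pipelines. The excerpt does not name the exact detector, so this is only a minimal sketch under that assumption: the function names, the sigma schedule, and the contrast threshold are all hypothetical, and precise sub-pixel positioning and feature-vector generation are omitted.

```python
import math

def gaussian_kernel(sigma):
    """1-D Gaussian kernel truncated at about 3*sigma, normalised to sum to 1."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur(image, sigma):
    """Separable Gaussian blur (rows, then columns) with edge clamping."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    h, w = len(image), len(image[0])
    clamp = lambda v, size: min(max(v, 0), size - 1)
    rows = [[sum(kernel[k + radius] * image[y][clamp(x + k, w)]
                 for k in range(-radius, radius + 1))
             for x in range(w)] for y in range(h)]
    return [[sum(kernel[k + radius] * rows[clamp(y + k, h)][x]
                 for k in range(-radius, radius + 1))
             for x in range(w)] for y in range(h)]

def dog_extrema(image, sigmas, threshold=0.03):
    """Return (row, col, sigma) for strict extrema of the DoG stack.

    A pixel is kept when its DoG value is above or below all 26 neighbours
    in space and scale, and its magnitude exceeds the (assumed) threshold.
    """
    h, w = len(image), len(image[0])
    blurred = [blur(image, s) for s in sigmas]
    dog = [[[b[y][x] - a[y][x] for x in range(w)] for y in range(h)]
           for a, b in zip(blurred, blurred[1:])]
    offsets = [(ds, dy, dx) for ds in (-1, 0, 1) for dy in (-1, 0, 1)
               for dx in (-1, 0, 1) if (ds, dy, dx) != (0, 0, 0)]
    keypoints = []
    for s in range(1, len(dog) - 1):
        for y in range(1, h - 1):
            for x in range(1, w - 1):
                value = dog[s][y][x]
                if abs(value) < threshold:
                    continue
                neighbours = [dog[s + ds][y + dy][x + dx] for ds, dy, dx in offsets]
                if value > max(neighbours) or value < min(neighbours):
                    keypoints.append((y, x, sigmas[s]))
    return keypoints

# Usage: a synthetic 31x31 image holding one Gaussian blob centred at (15, 15).
SIZE, CENTRE, SPREAD = 31, 15, 1.7
blob = [[math.exp(-((x - CENTRE) ** 2 + (y - CENTRE) ** 2) / (2 * SPREAD ** 2))
         for x in range(SIZE)] for y in range(SIZE)]
sigmas = [2 ** (k / 2) for k in range(4)]  # 1, sqrt(2), 2, 2*sqrt(2)
keypoints = dog_extrema(blob, sigmas)
```

The blob width is chosen so that its DoG response peaks at the middle scale of the stack, which is why the blob centre should be reported as a keypoint. A full pipeline would typically add several octaves, sub-pixel refinement, and edge-response rejection before building descriptors.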

[0053] S12: Feature matching, through a certain search strategy, based on the BBF algorithm and the R...
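The BBF (Best Bin First) algorithm named above is an approximate nearest-neighbour search over a k-d tree: unexplored branches are kept in a priority queue ordered by their distance from the query, and the search stops after a fixed number of node checks. The following is a minimal sketch of that idea only. The function names, the squared Euclidean distance, and the `max_checks` budget are assumptions for illustration, and the remainder of the matching strategy, which is truncated in this excerpt, is not covered.

```python
import heapq

def build_kdtree(points, indices=None, depth=0):
    """Build a k-d tree of dict nodes over the given descriptor indices."""
    if indices is None:
        indices = list(range(len(points)))
    if not indices:
        return None
    axis = depth % len(points[0])
    indices = sorted(indices, key=lambda i: points[i][axis])
    mid = len(indices) // 2
    return {
        "index": indices[mid],
        "axis": axis,
        "left": build_kdtree(points, indices[:mid], depth + 1),
        "right": build_kdtree(points, indices[mid + 1:], depth + 1),
    }

def squared_distance(a, b):
    return sum((u - v) ** 2 for u, v in zip(a, b))

def bbf_nearest(tree, points, query, max_checks=50):
    """Return (squared_distance, index) of the best match found within
    max_checks node examinations, or None for an empty tree."""
    best = None
    # Heap entries: (lower bound on distance to the branch, tiebreak, node).
    heap = [(0.0, 0, tree)] if tree is not None else []
    tiebreak = 1
    checks = 0
    while heap and checks < max_checks:
        bound, _, node = heapq.heappop(heap)
        if best is not None and bound >= best[0]:
            break  # no remaining branch can hold a closer point
        while node is not None and checks < max_checks:
            index = node["index"]
            candidate = (squared_distance(points[index], query), index)
            if best is None or candidate < best:
                best = candidate
            checks += 1
            diff = query[node["axis"]] - points[index][node["axis"]]
            near, far = ((node["left"], node["right"]) if diff < 0
                         else (node["right"], node["left"]))
            if far is not None:
                # Queue the far side, keyed by distance to the splitting plane.
                heapq.heappush(heap, (diff * diff, tiebreak, far))
                tiebreak += 1
            node = near
    return best

def match_descriptors(reference, queries, max_checks=50):
    """Map each query descriptor to the index of its best reference match."""
    tree = build_kdtree(reference)
    return [bbf_nearest(tree, reference, q, max_checks)[1] for q in queries]
```

With `max_checks` at least as large as the number of reference descriptors the search is exhaustive and returns the exact nearest neighbour; smaller budgets trade accuracy for speed, which is the point of BBF for high-dimensional descriptors.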
