Object grabbing method of robot

A robot and robot movement technology, applied in the fields of manipulators, manufacturing tools, etc. It addresses two problems of existing approaches, namely that they are unsuitable for dark environments and have low positioning accuracy, and it achieves accurate object grasping, improved accuracy, and a wider range of application scenarios.

Examples

Embodiment 1

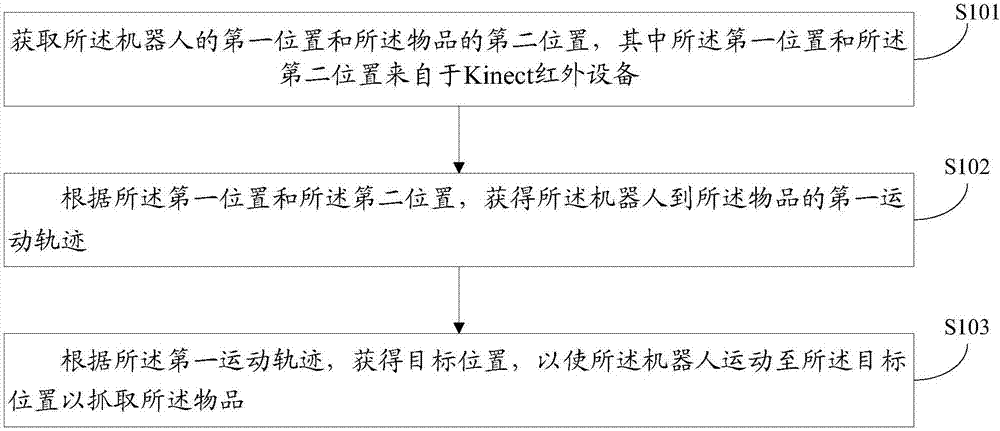

[0046] This embodiment provides a method for a robot to grab an item, the method comprising:

[0047] Step S101: Obtain the first position of the robot and the second position of the item, both of which are acquired by a Kinect infrared device;

[0048] Step S102: According to the first position and the second position, obtain a first movement trajectory from the robot to the object;

[0049] Step S103: Obtain a target position according to the first movement trajectory, so that the robot moves to the target position to grab the object.
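Steps S101 to S103 can be sketched as follows. The excerpt does not say how the trajectory is planned or how the target position is chosen, so this minimal sketch assumes a straight-line trajectory in the floor plane and takes the target as the point on that line at a hypothetical gripper reach `reach` short of the item. The names `first_trajectory`, `target_position`, and `reach` are illustrative only.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point2D:
    x: float
    y: float


def first_trajectory(robot: Point2D, item: Point2D, num_points: int = 10) -> list[Point2D]:
    """Assumed straight-line trajectory: evenly spaced waypoints from robot to item."""
    return [
        Point2D(robot.x + (item.x - robot.x) * i / num_points,
                robot.y + (item.y - robot.y) * i / num_points)
        for i in range(num_points + 1)
    ]


def target_position(trajectory: list[Point2D], reach: float) -> Point2D:
    """Point on the trajectory that lies `reach` short of the item (hypothetical rule).

    The first waypoint is the robot and the last is the item. If the robot is
    already within reach, it stays where it is.
    """
    start, item = trajectory[0], trajectory[-1]
    total = math.dist((start.x, start.y), (item.x, item.y))
    if total <= reach:
        return start
    t = (total - reach) / total  # fraction of the path to travel
    return Point2D(start.x + (item.x - start.x) * t,
                   start.y + (item.y - start.y) * t)


if __name__ == "__main__":
    traj = first_trajectory(Point2D(0.0, 0.0), Point2D(3.0, 4.0), num_points=5)
    print(target_position(traj, reach=1.0))  # approximately (2.4, 3.2)
```

A real planner would also account for obstacles and the robot's kinematics; the straight line only shows how a target position can be derived from the first movement trajectory.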

[0050] In the above method, the Kinect infrared device obtains the first position of the robot and the second position of the article and sends both to the robot. A data connection is established between the Kinect infrared device and the robot so that the two can interact. Because the Kinect infrared device can acquire accurate position information, which can...

Embodiment 2

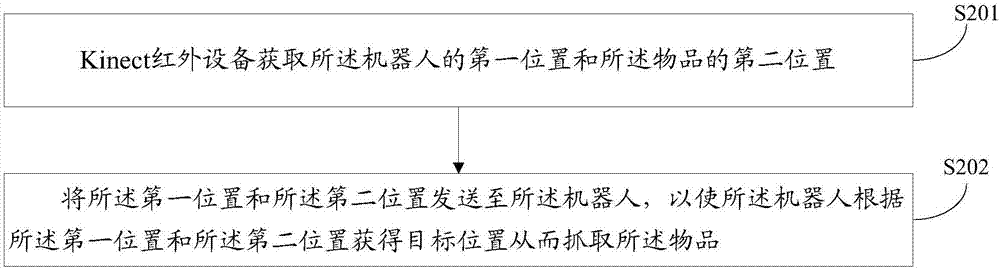

[0077] Based on the same inventive concept as in Embodiment 1, Embodiment 2 of the present invention also provides a method for a robot to grab an item from the perspective of a Kinect infrared device, the method comprising:

[0078] Step S201: the Kinect infrared device acquires the first position of the robot and the second position of the item;

[0079] Step S202: Sending the first position and the second position to the robot, so that the robot obtains a target position according to the first position and the second position so as to grab the object.
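Step S202 implies a message from the Kinect side to the robot carrying both positions. The excerpt names neither a transport (socket, serial link, etc.) nor a message format, so the sketch below assumes a hypothetical JSON schema with keys `first_position` and `second_position` and shows only the encode/decode pair; the transport is left out.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Position:
    x: float
    y: float
    z: float


def encode_positions(robot_pos: Position, item_pos: Position) -> bytes:
    """Kinect side: serialize the first (robot) and second (item) positions.

    The key names are a hypothetical schema, not taken from the patent.
    """
    msg = {"first_position": asdict(robot_pos), "second_position": asdict(item_pos)}
    return json.dumps(msg).encode("utf-8")


def decode_positions(payload: bytes) -> tuple[Position, Position]:
    """Robot side: recover both positions before computing the target position."""
    msg = json.loads(payload.decode("utf-8"))
    return Position(**msg["first_position"]), Position(**msg["second_position"])


if __name__ == "__main__":
    payload = encode_positions(Position(0.0, 0.0, 0.0), Position(1.2, 0.5, 0.8))
    robot, item = decode_positions(payload)
    print(robot, item)
```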

[0080] Specifically, in the method for grabbing an item provided in an embodiment of the present invention, the step in which the Kinect infrared device acquires the first position of the robot and the second position of the item includes:

[0081] Obtain a spatial depth image;

[0082] Obtain pixel coordinates and depth coordinates according to the spatial depth image;

[0083] Obtain the first position of the robot and the second positi...
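Going from pixel coordinates and depth to a position is commonly done with pinhole-camera back-projection, which is one plausible reading of the steps above. The excerpt is truncated before it says how positions are computed, so the sketch below is an assumption: the intrinsics `fx`, `fy`, `cx`, `cy` are placeholder calibration values, the pixel of the robot or item is assumed to be known already (the detection step is not described), and the result is in the camera frame rather than a world or robot frame.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    fx: float  # focal length in pixels, x (placeholder, from calibration)
    fy: float  # focal length in pixels, y
    cx: float  # principal point, x
    cy: float  # principal point, y


def pixel_to_camera(u: float, v: float, depth: float, k: Intrinsics) -> tuple[float, float, float]:
    """Back-project pixel (u, v) with depth along the optical axis into camera coordinates."""
    x = (u - k.cx) * depth / k.fx
    y = (v - k.cy) * depth / k.fy
    return (x, y, depth)


def locate(depth_image: list[list[float]], pixel: tuple[int, int], k: Intrinsics) -> tuple[float, float, float]:
    """Look up the depth at a pixel and return its 3D camera-frame position.

    The image is row-major, so row index = v and column index = u.
    A depth of 0 is treated as "no reading", as depth sensors often report.
    """
    u, v = pixel
    d = depth_image[v][u]
    if d <= 0:
        raise ValueError(f"no valid depth at pixel {pixel}")
    return pixel_to_camera(u, v, d, k)


if __name__ == "__main__":
    k = Intrinsics(fx=500.0, fy=500.0, cx=2.0, cy=1.0)
    depth = [[0.0, 0.0, 0.0, 0.0],
             [0.0, 0.0, 1.0, 2.0],
             [0.0, 0.0, 0.0, 0.0]]
    print(locate(depth, (3, 1), k))  # approximately (0.004, 0.0, 2.0)
```

Mapping these camera-frame points into a common frame for the robot would additionally need the camera's extrinsic pose, which the excerpt does not cover.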
