Network text named entity recognition method based on neural network probability disambiguation

A named entity recognition and neural network technology, applied in the field of network text named entity recognition based on neural network probability disambiguation. It can address problems such as frequent typos, neural network training, and irregular grammatical structure, and it achieves good practicability and high accuracy.


AI Technical Summary

Problems solved by technology

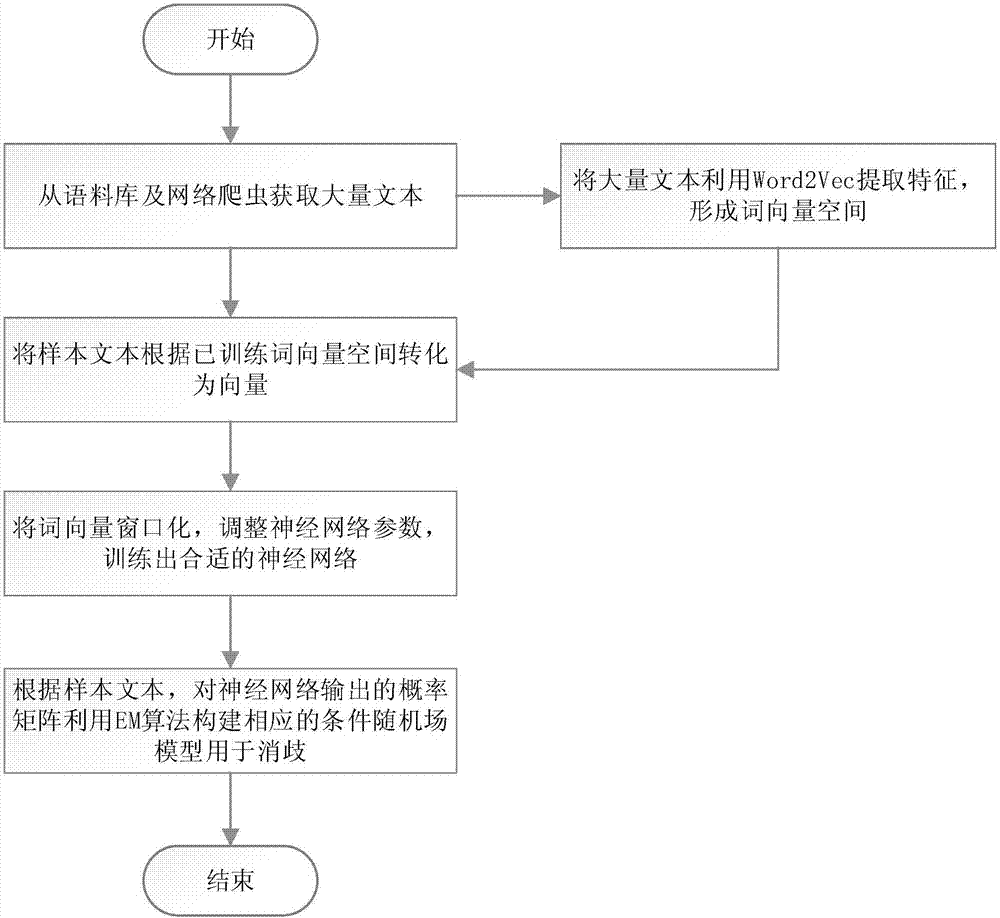

Method used


Examples


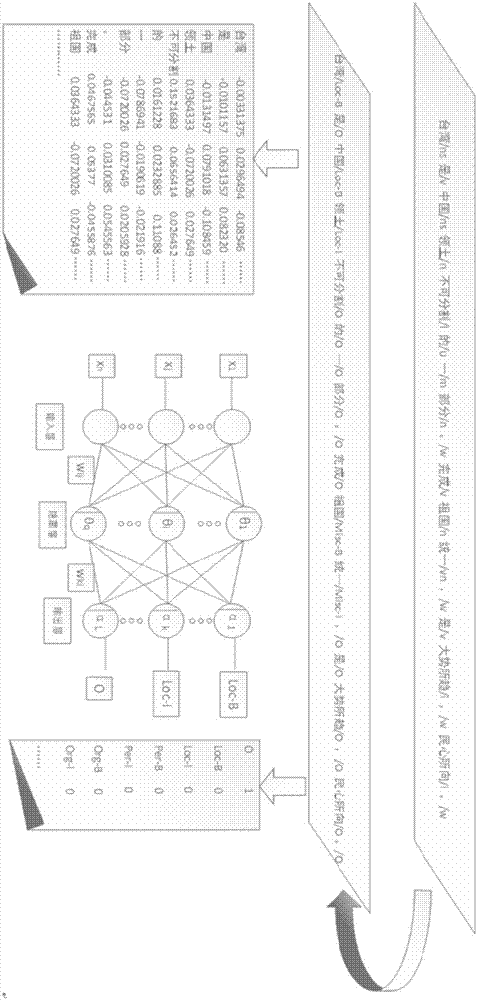

[0040] A named entity corpus is downloaded from the Sogou news website as the sample corpus, and network text is crawled. A natural language processing tool is used to segment the crawled network text into words, and the Word2Vec model from the gensim package in Python is trained on the segmented corpus together with the sample corpus to build the word vector space. The parameters are: word vector length 200, 25 iterations, initial step size 0.025, minimum step size 0.0001, and the CBOW model.
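The training settings in [0040] can be sketched as gensim keyword arguments. This is a minimal sketch, not the patent's code: it assumes gensim 4.x, where the vector length and iteration count are called `vector_size` and `epochs` (gensim 3.x used `size` and `iter`). The helper name `train_word2vec` is hypothetical, and `min_count=1` is an assumption not stated in the patent (gensim's default is 5). Input sentences are assumed to be already segmented into token lists.

```python
"""Sketch: the Word2Vec settings from [0040] expressed as gensim 4.x arguments."""

# Values come from [0040]; keyword names assume gensim >= 4.0.
WORD2VEC_PARAMS = {
    "vector_size": 200,   # length of the word vector
    "epochs": 25,         # number of iterations over the corpus
    "alpha": 0.025,       # initial step size (learning rate)
    "min_alpha": 0.0001,  # minimum step size the learning rate decays to
    "sg": 0,              # 0 selects CBOW (1 would select skip-gram)
}


def train_word2vec(segmented_sentences, min_count=1):
    """Train Word2Vec on pre-segmented sentences (each a list of tokens).

    Hypothetical helper; min_count=1 is an assumption, not from the patent.
    """
    # Imported lazily so the parameter table can be inspected without gensim installed.
    from gensim.models import Word2Vec

    return Word2Vec(sentences=segmented_sentences, min_count=min_count, **WORD2VEC_PARAMS)
```

For example, `model = train_word2vec(segmented_crawled_text + sample_sentences)`, where both inputs are hypothetical lists of token lists. Keeping the parameters in one dictionary makes it easy to check them against the values listed in [0040].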

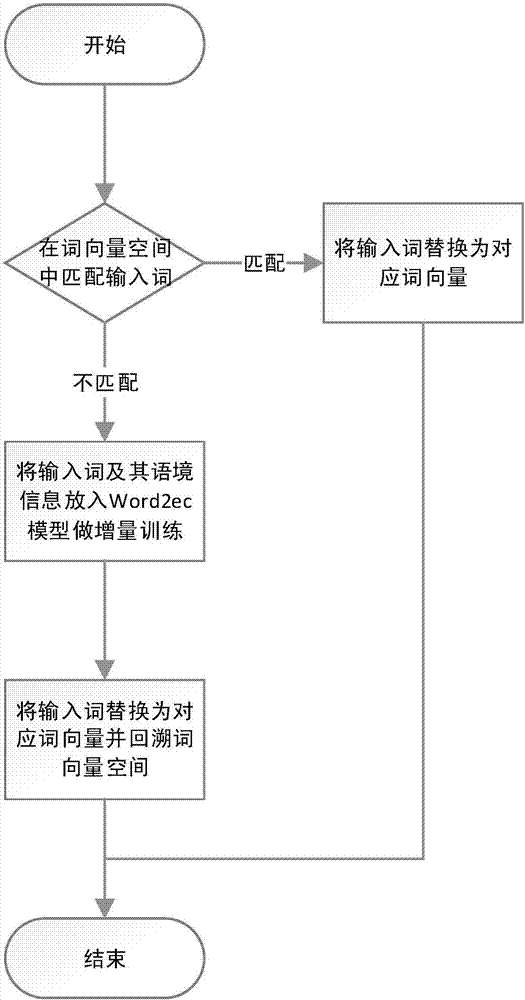

[0041] Using the trained Word2Vec model, the text of the sample corpus is converted into word vectors that represent word features. If the Word2Vec model does not contain a word from the corpus, that word is converted into a word vector by incremental learning: its word vector is obtained and the word vector space is backtracked (updated). The resulting word vector serves as the feature of each word. Convert "/o", "/n", "/p" and other tags in the...
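The out-of-vocabulary handling in [0041] can be sketched as follows. This is a minimal sketch assuming a gensim 4.x `Word2Vec` model. The patent does not say which incremental-learning mechanism it uses, so gensim's `build_vocab(..., update=True)` followed by `train(...)` is swapped in here as one plausible realization. The helper names `find_oov`, `update_for_oov`, and `sentences_to_features` are hypothetical. New words are only kept if they meet the model's `min_count`.

```python
from typing import List, Sequence


def find_oov(sentences: Sequence[Sequence[str]], wv) -> List[str]:
    """Return words with no vector in `wv`, in first-seen order, without duplicates."""
    seen = set()
    oov = []
    for sentence in sentences:
        for word in sentence:
            if word not in wv and word not in seen:
                seen.add(word)
                oov.append(word)
    return oov


def update_for_oov(model, sentences: Sequence[Sequence[str]]) -> None:
    """Incremental learning (assumed gensim 4.x API): extend the vocabulary, then keep training."""
    model.build_vocab(sentences, update=True)
    model.train(sentences, total_examples=len(sentences), epochs=model.epochs)


def sentences_to_features(sentences, model):
    """Map every word to its vector, updating the model first if some words are missing."""
    oov = set(find_oov(sentences, model.wv))
    if oov:
        # Only sentences that contain unseen words are fed to the incremental update.
        new_sentences = [list(s) for s in sentences if any(w in oov for w in s)]
        update_for_oov(model, new_sentences)
    return [[model.wv[word] for word in sentence] for sentence in sentences]
```

The functions only rely on `model.wv` supporting `in` and indexing and on the model exposing `build_vocab`, `train`, and `epochs`. A gensim model provides all of these, and a stand-in object can be used for testing.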
