Attention mechanism-based video classification method

An attention mechanism-based video classification technique, applied in the field of optical communication, that addresses problems such as poor video content recognition and the loss of temporal information in video features, thereby improving the accuracy rate.

Embodiment

[0041] For convenience of description, the technical terms that appear in the specific embodiment are explained first:

[0042] CNN: Convolutional Neural Network;

[0043] LSTM: Long Short-Term Memory network;

[0044] BPTT: Back Propagation Through Time, the backpropagation through time algorithm;

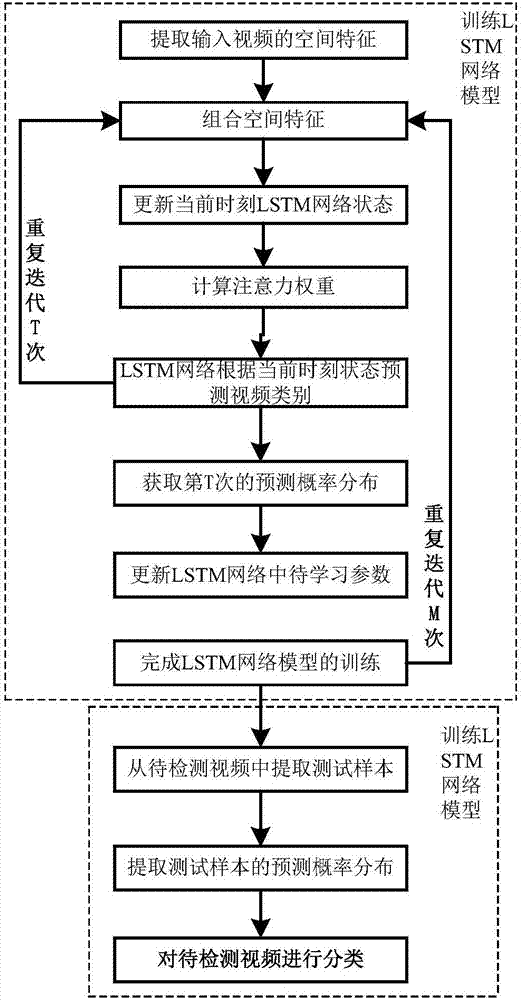

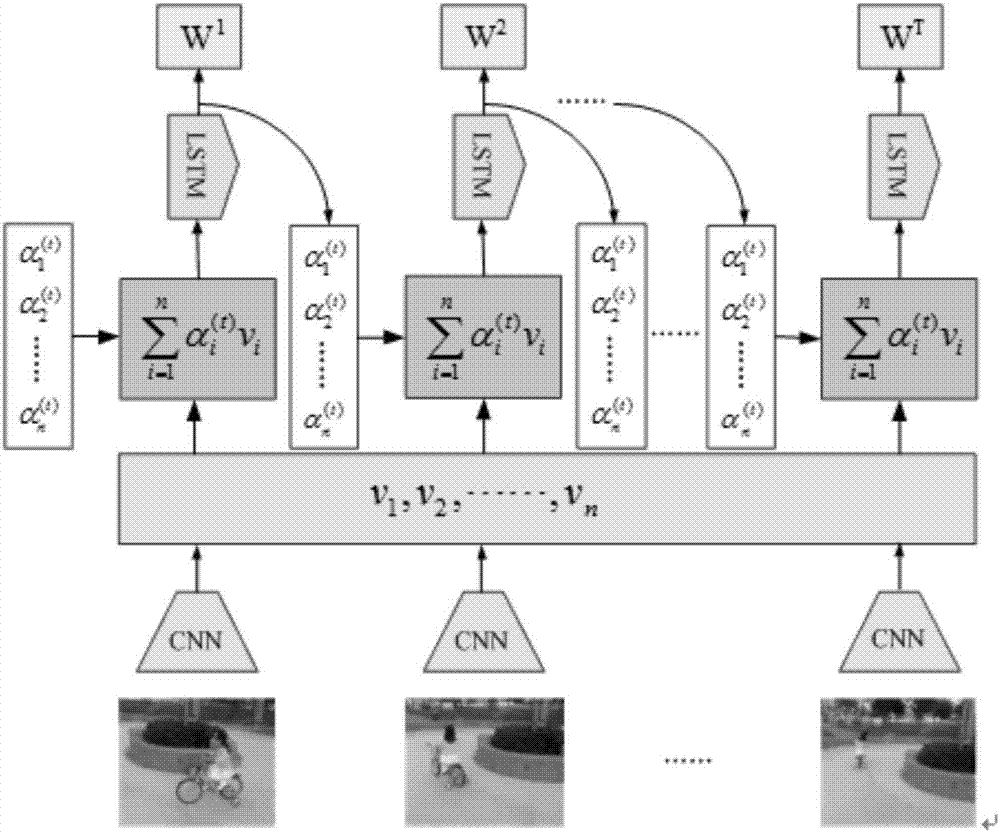

[0045] Figure 1 is a flow chart of the attention mechanism-based video classification method of the present invention.

[0046] In this embodiment, the UCF-101 dataset is downloaded from the CRCV official website and used as the sample videos for training. The UCF-101 dataset contains videos in C = 101 categories, such as ApplyEyeMakeup, ApplyLipstick, ..., YoYo. Each category corresponds to a video ID; as shown in Table 1, the IDs are assigned with the categories arranged in alphabetical order. For example, the ID of ApplyEyeMakeup is (1,0,0...0), the ID of ApplyLipstick is (0,1,0...0), and the ID of YoYo is (0,0,0...1); each ID is a C-dimensional vector...
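The ID scheme described above amounts to a one-hot encoding over the alphabetically sorted category names. The following is a minimal sketch of that idea, not the patent's own implementation: the function name `build_one_hot_ids` and the three-name sample list are hypothetical, chosen only for illustration, and the full dataset would supply all C = 101 names.

```python
def build_one_hot_ids(category_names):
    """Map each category name to a one-hot tuple.

    Categories are sorted alphabetically, and the category at position i
    receives a vector with a 1 at index i and 0 elsewhere.
    (Hypothetical helper, not taken from the patent text.)
    """
    ordered = sorted(category_names)
    num_classes = len(ordered)  # C in the description
    ids = {}
    for index, name in enumerate(ordered):
        vector = [0] * num_classes
        vector[index] = 1
        ids[name] = tuple(vector)
    return ids


# Illustrative subset only; the real UCF-101 list has C = 101 names.
sample_categories = ["YoYo", "ApplyLipstick", "ApplyEyeMakeup"]
ids = build_one_hot_ids(sample_categories)

for name in sorted(ids):
    print(name, ids[name])
```

With the full category list, each tuple would have length 101, so ApplyEyeMakeup would carry the 1 in the first position and YoYo in the last, matching the examples in the paragraph above.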
