Method for computer-based deep learning of image features and quantification of perception

A deep learning and image feature technology, applied in computer components, computing, instruments, and related fields. It addresses problems such as poor overall performance, limitations, and long training time, and achieves a simple and convenient operating method, stable and reliable performance, and shortened training time.


Examples

Embodiment 1

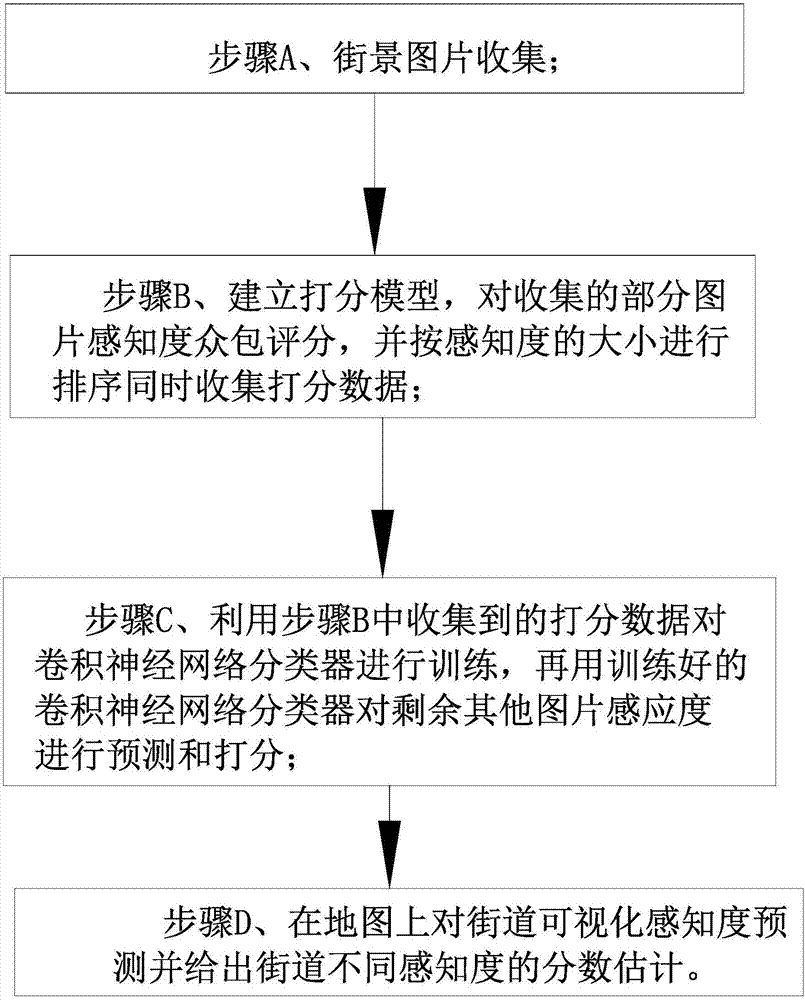

[0021] Figure 1 shows a specific embodiment of the invention; it is a structural flow diagram of the present invention.

[0022] As shown in Figure 1, a method for computer deep learning of image features and quantification of perception comprises the following steps:

[0023] Step A: collect street view images;

[0024] Step B: establish a scoring model, crowdsource perception scores for a subset of the collected images, rank the images by perception score, and collect the scoring data at the same time;

[0025] Step C: use the scoring data collected in Step B to train a convolutional neural network classifier, then use the trained classifier to predict perception scores for the remaining images;

[0026] Step D: predict the visual perception of each street on the map and give estimated scores for the different perceptions of the street.
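The data flow of Steps B to D can be illustrated with a minimal Python sketch. It is not the patented implementation: the function names, the `(image_id, score)` rating format, the use of a simple mean to combine crowdsourced ratings, and the image-to-street mapping are all assumptions made for illustration. The trained convolutional neural network classifier of Step C is represented only by a hypothetical `predictor` callable, so no network is actually trained here.

```python
from collections import defaultdict
from statistics import mean


def aggregate_crowd_scores(ratings):
    """Step B (assumed form): combine crowdsourced (image_id, score) pairs
    into one mean perception score per image."""
    by_image = defaultdict(list)
    for image_id, score in ratings:
        by_image[image_id].append(score)
    return {image_id: mean(scores) for image_id, scores in by_image.items()}


def rank_images(image_scores):
    """Step B: order image ids by perception score, highest first."""
    return sorted(image_scores, key=image_scores.get, reverse=True)


def predict_remaining(unlabelled_ids, predictor):
    """Step C (stand-in): score the images that were not crowdsourced.
    `predictor` is a hypothetical callable standing in for the trained
    convolutional neural network classifier."""
    return {image_id: predictor(image_id) for image_id in unlabelled_ids}


def street_estimates(image_scores, image_to_street):
    """Step D (assumed form): average image scores per street."""
    per_street = defaultdict(list)
    for image_id, score in image_scores.items():
        per_street[image_to_street[image_id]].append(score)
    return {street: mean(scores) for street, scores in per_street.items()}


# Illustrative data only; none of these values come from the patent.
ratings = [("img1", 4), ("img1", 2), ("img2", 5), ("img3", 1), ("img3", 3)]
labelled = aggregate_crowd_scores(ratings)
ranking = rank_images(labelled)

# A constant predictor stands in for the trained classifier.
predicted = predict_remaining(["img4"], predictor=lambda image_id: 4.0)

image_to_street = {"img1": "A", "img2": "A", "img3": "B", "img4": "B"}
streets = street_estimates({**labelled, **predicted}, image_to_street)
```

In this sketch the crowdsourced and predicted scores are merged into one dictionary before the per-street aggregation, so Step D treats both kinds of score uniformly; a real system might weight them differently.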

[0027] In step A, first divide the city a...

