Video action segmentation method based on hybrid temporal convolution and recurrent network

A technology that combines temporal convolution with a recurrent network, applied in biological neural network models, character and pattern recognition, instruments, etc., which can solve problems such as extraction

Embodiment Construction

[0034] It should be noted that, where no conflict arises, the embodiments in the present application and the features in those embodiments can be combined with each other. The present invention is described in further detail below with reference to the drawings and specific embodiments.

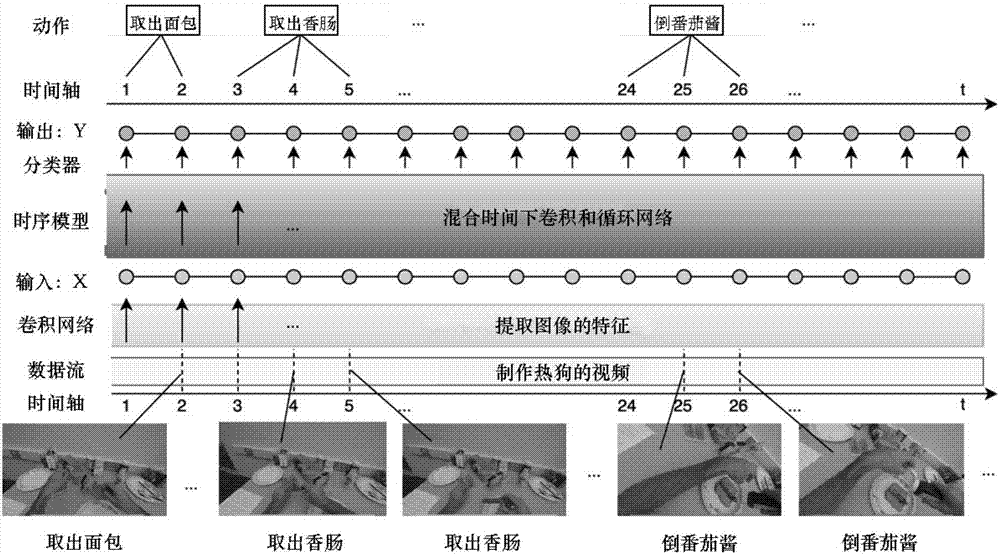

[0035] Figure 1 is a system flowchart of the video action segmentation method based on a hybrid temporal convolution and recurrent network in the present invention. The method mainly includes data input, model structure, model migration and variation, and model parameter setting.

[0036] The model structure includes the network architecture and action classification.

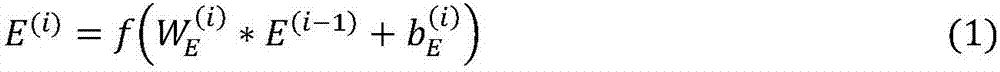

[0037] The network architecture is composed of an input layer, an encoder $L_E$, a middle layer $L_{mid}$, a decoder $L_D$, and a classifier. The input layer receives the raw video frame data stream and outputs an intermediate signal after it has been processed by the module composed of the convolution layer and ...
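The data flow through the encoder, middle layer, decoder, and classifier can be illustrated with a minimal sketch. It is not the patented implementation: the text above is truncated, so the layer sizes, kernel width, ReLU and tanh activations, max pooling, nearest-neighbour upsampling, the placement of the recurrence in the middle layer, the random untrained weights, and all function names (`temporal_conv`, `recurrent`, `segment`, and so on) are assumptions chosen only to show how per-frame features could pass through such a pipeline and yield one action label per frame.

```python
import math
import random

def rand(*shape, rng):
    """Nested lists of random weights (hypothetical, untrained)."""
    if len(shape) == 1:
        return [rng.uniform(-0.5, 0.5) for _ in range(shape[0])]
    return [rand(*shape[1:], rng=rng) for _ in range(shape[0])]

def temporal_conv(seq, weights, bias):
    """1D convolution over time with zero padding, followed by ReLU.
    seq: T frames of C_in features; weights: [C_out][K][C_in]."""
    T, k, c_in = len(seq), len(weights[0]), len(seq[0])
    pad = k // 2
    out = []
    for t in range(T):
        frame = []
        for o, w in enumerate(weights):
            s = bias[o]
            for j in range(k):
                idx = t + j - pad
                if 0 <= idx < T:  # frames outside the clip count as zeros
                    s += sum(w[j][c] * seq[idx][c] for c in range(c_in))
            frame.append(max(0.0, s))
        out.append(frame)
    return out

def max_pool(seq, stride=2):
    """Temporal max pooling: keeps the per-channel maximum of each window."""
    return [[max(col) for col in zip(*seq[i:i + stride])]
            for i in range(0, len(seq), stride)]

def upsample(seq, factor=2):
    """Nearest-neighbour upsampling along the time axis."""
    return [list(f) for f in seq for _ in range(factor)]

def recurrent(seq, w_in, w_rec):
    """Simple recurrence: h_t = tanh(W_in x_t + W_rec h_{t-1})."""
    h = [0.0] * len(w_rec)
    out = []
    for x in seq:
        h = [math.tanh(sum(a * b for a, b in zip(w_in[o], x)) +
                       sum(a * b for a, b in zip(w_rec[o], h)))
             for o in range(len(w_rec))]
        out.append(h)
    return out

def classify(seq, w):
    """Per-frame linear scores; the label is the index of the top score."""
    labels = []
    for x in seq:
        scores = [sum(a * b for a, b in zip(row, x)) for row in w]
        labels.append(scores.index(max(scores)))
    return labels

def segment(frames, num_classes=3, hidden=4, kernel=3, seed=0):
    """Encoder -> middle layer -> decoder -> classifier on one clip."""
    rng = random.Random(seed)
    c_in = len(frames[0])
    # Encoder (assumed): temporal convolution, then pooling over time.
    enc = max_pool(temporal_conv(frames, rand(hidden, kernel, c_in, rng=rng),
                                 [0.0] * hidden))
    # Middle layer (assumed): recurrence over the shortened sequence.
    mid = recurrent(enc, rand(hidden, hidden, rng=rng),
                    rand(hidden, hidden, rng=rng))
    # Decoder (assumed): upsample back to the clip length, then convolve.
    dec = temporal_conv(upsample(mid)[:len(frames)],
                        rand(hidden, kernel, hidden, rng=rng), [0.0] * hidden)
    # Classifier: one action label per frame.
    return classify(dec, rand(num_classes, hidden, rng=rng))
```

Calling `segment(frames)` on a list of per-frame feature vectors returns a list of integer labels with the same length as the clip. Because the weights are random, the labels carry no meaning; the sketch only shows the shape of the computation.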
