360-degree panoramic video coding method based on motion attention model

The invention relates to attention models and panoramic video technology. It applies to 360-degree panoramic video coding based on a motion attention model and addresses the problem of reduced compression rate in 360-degree panoramic video.


Embodiment Construction

[0040] The present invention is further described below with reference to the accompanying drawings.

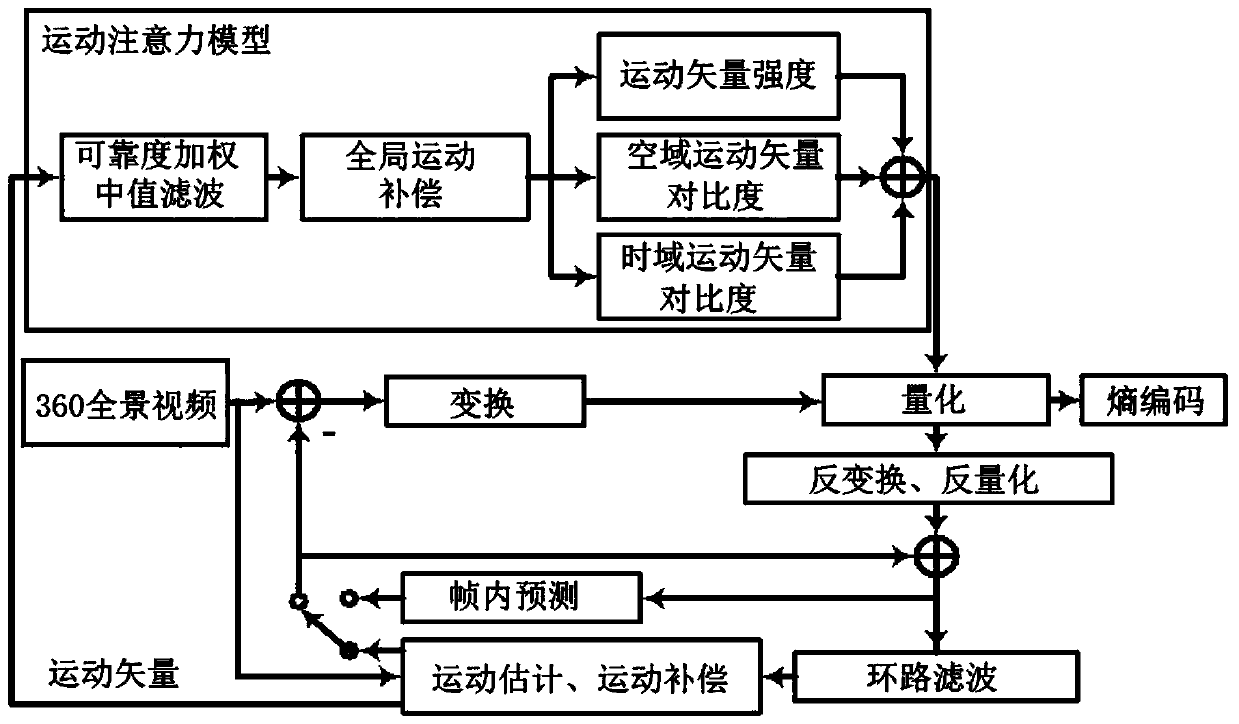

[0041] Referring to Figure 1, Figure 1 shows the HEVC coding framework of an embodiment of the present invention. This embodiment provides a 360-degree panoramic video coding method based on a motion attention model, which adds the attention model to the framework. The method mainly includes the following steps:

[0042] Step 1: Extract the motion vectors to obtain the motion vector field, and calculate the reliability of each motion vector.

[0043] The motion vectors are extracted from HM16.0, the HEVC reference encoder used in this embodiment, which yields a motion vector field. Referring to Figure 2, each single arrow shown in Figure 2 is a motion vector. A motion vector is the displacement of a coding block relative to the reference frame within a given search range. The motion vectors densely distributed across the video frame together constitute the motion vector field.
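The section is truncated before it defines how the reliability of a motion vector is computed. The following is therefore only a minimal illustrative sketch under stated assumptions, not the patented method. It assumes block motion vectors have already been read out of the encoder into a regular grid of fixed-size blocks. Real HEVC prediction units vary in size, so this grid layout is a simplification. The sketch expands that grid into a dense per-pixel motion vector field. As a stand-in reliability score, it uses `1 / (1 + mean deviation from the 8-neighbouring block vectors)`, so a vector that agrees with its neighbours scores close to 1 and an isolated outlier scores low. The functions `dense_mv_field` and `mv_reliability`, the grid layout, and this reliability formula are all hypothetical.

```python
import math
from typing import List, Tuple

# (dx, dy) displacement in pixels; hypothetical representation of one block MV.
MV = Tuple[float, float]


def dense_mv_field(block_mvs: List[List[MV]], block_size: int,
                   width: int, height: int) -> List[List[MV]]:
    """Expand a row-major grid of block MVs into a per-pixel MV field.

    Assumes fixed-size square blocks (a simplification of HEVC PUs) and a
    grid large enough to cover width x height pixels.
    """
    return [[block_mvs[y // block_size][x // block_size] for x in range(width)]
            for y in range(height)]


def mv_reliability(block_mvs: List[List[MV]]) -> List[List[float]]:
    """Hypothetical reliability: 1 / (1 + mean Euclidean deviation of a block's
    MV from its in-bounds 8-neighbours). Not the patent's definition."""
    rows, cols = len(block_mvs), len(block_mvs[0])
    rel = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            dx, dy = block_mvs[r][c]
            devs = []
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    if dr == 0 and dc == 0:
                        continue  # skip the block itself
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        nx, ny = block_mvs[rr][cc]
                        devs.append(math.hypot(dx - nx, dy - ny))
            mean_dev = sum(devs) / len(devs) if devs else 0.0
            rel[r][c] = 1.0 / (1.0 + mean_dev)
    return rel
```

For example, in the 2x2 grid `[[(1, 0), (1, 0)], [(1, 0), (9, 0)]]` the outlier block at row 1, column 1 deviates by 8 pixels from each neighbour and gets a reliability of 1/9. The uniform blocks get higher scores. A motion attention model could then down-weight such low-reliability vectors, but how the patent actually uses reliability is not stated in this excerpt.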

[0044] S...
