Sensor fusion using inertial and image sensors

A technology using image sensors and inertial sensors, applied in image enhancement, image analysis, image data processing, etc., that can solve problems such as inaccuracy, which is unfavorable to the functioning of unmanned aerial vehicles.

Embodiment Construction

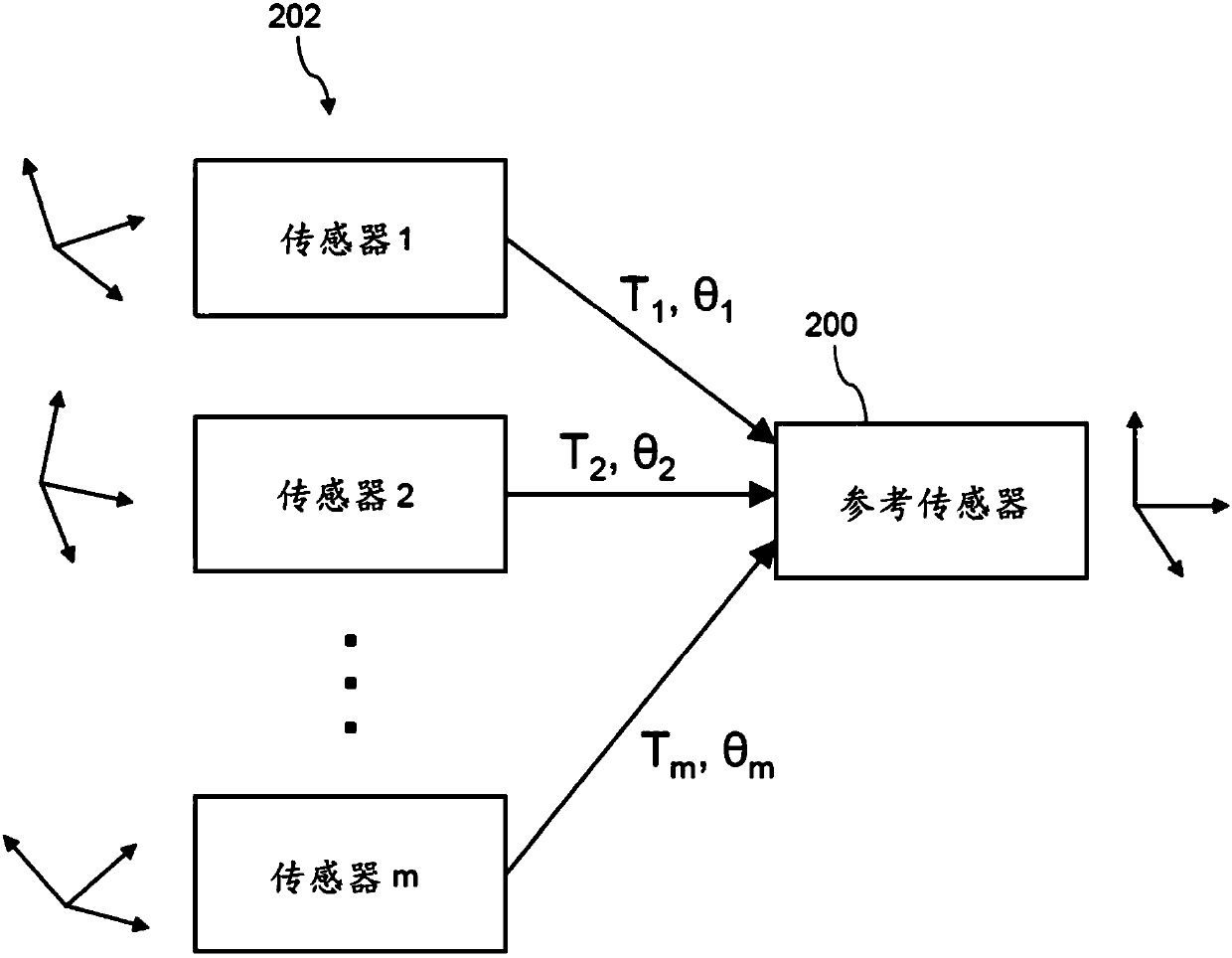

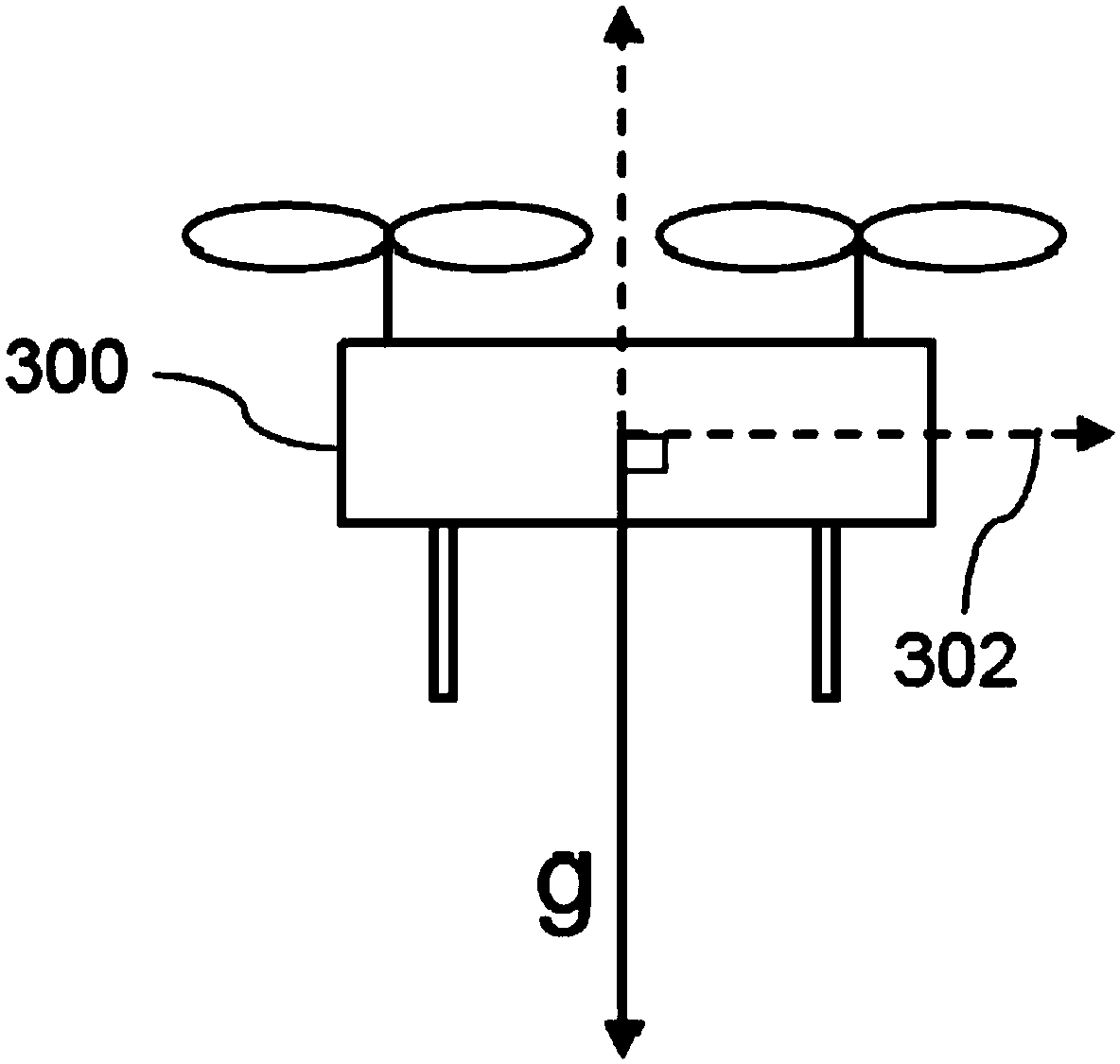

[0083] The systems, methods, and apparatus of the present disclosure support determining information related to the operation of movable objects, such as unmanned aerial vehicles (UAVs). In some implementations, the present disclosure uses sensor fusion techniques to combine data from different sensor types in order to determine various types of information useful for UAV operation, such as information for state estimation, initialization, error recovery, and/or parameter calibration. For example, a UAV may include at least one inertial sensor and at least two image sensors. Data from the different sensor types can be combined using various methods, such as iterative optimization algorithms. In some implementations, an iterative optimization algorithm involves iteratively linearizing and solving a nonlinear function (e.g., a nonlinear objective function). The sensor fusion techniques presented herein can be used to improve the accuracy...
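The paragraph above does not name a specific solver. As one hedged illustration of "iteratively linearizing and solving a nonlinear objective function", the sketch below applies the Gauss-Newton method to a toy problem that is an assumption, not taken from the disclosure: estimating a 2D position from range measurements to known landmarks. The function `gauss_newton_position` and its parameters are hypothetical. In the sensor-fusion setting described above, the residuals would presumably come from inertial and image measurements and the state would be larger; that mapping is not shown here.

```python
import math


def gauss_newton_position(landmarks, ranges, x0, iters=20, tol=1e-10):
    """Hypothetical toy example: estimate a 2D position from ranges to known landmarks.

    Each iteration linearizes the residuals r_i = ||p - l_i|| - z_i about the
    current estimate p and solves the 2x2 normal equations
    (J^T J) dp = -J^T r for the update dp (the Gauss-Newton step).
    """
    x, y = x0
    for _ in range(iters):
        # Accumulate the symmetric matrix J^T J and the vector J^T r.
        a11 = a12 = a22 = 0.0
        b1 = b2 = 0.0
        for (lx, ly), z in zip(landmarks, ranges):
            dx, dy = x - lx, y - ly
            d = math.hypot(dx, dy)
            if d == 0.0:
                continue  # Jacobian is undefined at the landmark itself; skip this term
            r = d - z  # residual: predicted range minus measured range
            jx, jy = dx / d, dy / d  # partial derivatives of ||p - l|| w.r.t. x and y
            a11 += jx * jx
            a12 += jx * jy
            a22 += jy * jy
            b1 += jx * r
            b2 += jy * r
        det = a11 * a22 - a12 * a12
        if abs(det) < 1e-12:
            break  # normal equations are singular (degenerate landmark geometry)
        # Solve [[a11, a12], [a12, a22]] @ [sx, sy] = [-b1, -b2] by Cramer's rule.
        sx = (-b1 * a22 + a12 * b2) / det
        sy = (-a11 * b2 + a12 * b1) / det
        x += sx
        y += sy
        if math.hypot(sx, sy) < tol:
            break  # update is negligible; treat as converged
    return x, y


if __name__ == "__main__":
    landmarks = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    true_p = (3.0, 4.0)
    ranges = [math.hypot(true_p[0] - lx, true_p[1] - ly) for lx, ly in landmarks]
    print(gauss_newton_position(landmarks, ranges, (1.0, 1.0)))
```

With noise-free ranges and three non-collinear landmarks, starting from (1, 1) the iterations should recover approximately (3, 4). Gauss-Newton is chosen here only because it is the simplest method that matches the "linearize, solve, repeat" description; a damped variant such as Levenberg-Marquardt is a common alternative when the initial guess is poor.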
