A Fingertip Detection Method Based on Kinect Depth Information

A fingertip detection method based on depth information, applied in the field of human-computer interaction. It addresses the cumbersome steps, complex algorithms, and poor real-time performance of existing approaches, and achieves the effect of accurate acquisition.

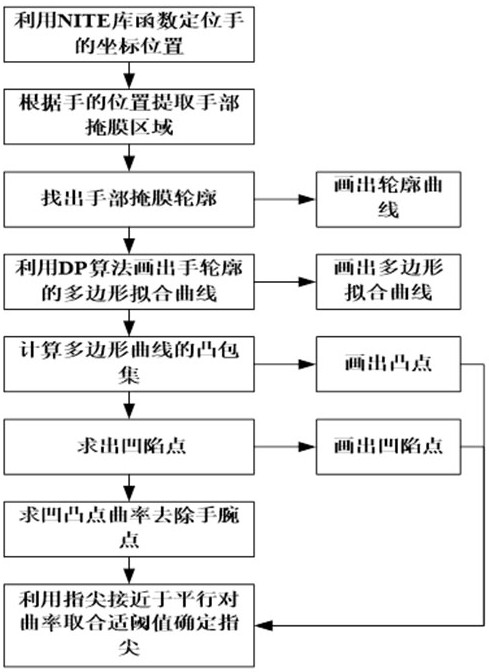

Embodiment Construction

[0028] The implementation of the present invention will be described in detail below in conjunction with the drawings and specific examples.

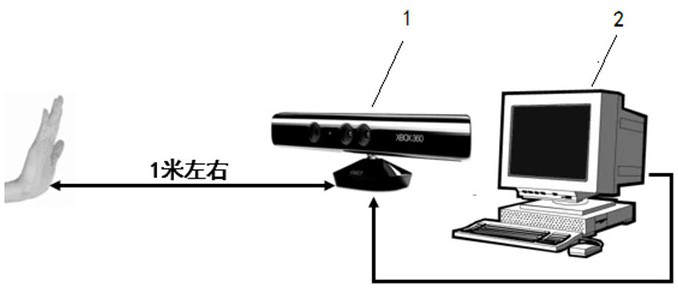

[0029] Step S11, the Kinect is placed on a desktop. The palm is held perpendicular to the desktop, facing the Kinect at a distance of about one meter, as shown in Figure 1. Push the palm forward and then retract it to trigger the gesture tracking function of the NITE function library.

[0030] Step S12, use the NITE function library to obtain the coordinates of the palm, and then estimate the approximate depth range of the hand from the depth of the palm point.
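The depth range estimate in step S12 can be illustrated with a minimal sketch. The text does not say how wide the range is, so the function name `hand_depth_range`, the millimetre units, and the symmetric `margin_mm` of 100 are assumptions made only for illustration. The palm depth itself is assumed to come from the tracker and is passed in as a plain number.

```python
def hand_depth_range(palm_depth_mm, margin_mm=100):
    """Return (near, far) depth bounds in millimetres around the palm point.

    margin_mm is a hypothetical half-width; the source does not give a value.
    """
    if palm_depth_mm <= 0:
        # Depth sensors commonly report 0 for "no reading"; reject it here.
        raise ValueError("palm depth must be positive")
    near = max(palm_depth_mm - margin_mm, 1)  # clamp so the bound stays positive
    far = palm_depth_mm + margin_mm
    return near, far
```

With the palm about one meter from the sensor, `hand_depth_range(1000)` gives bounds of 900 mm and 1100 mm under these assumed values.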

[0031] Step S13, use the depth range obtained in S12 to set the search area and the depth threshold. A binary depth mask in the form of an n*n matrix is then applied: its elements, n rows by n columns, are multiplied with the palm area to separate the hand image from the background.
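One possible reading of step S13 is sketched below with NumPy: an n*n search window is centred on the palm point, a binary mask marks the pixels whose depth lies inside the range, and the mask is multiplied element-wise with the window. The function name `segment_hand`, the element-wise interpretation of the multiplication, the window being centred on the palm, and the default `n=5` are all assumptions, not details confirmed by the text.

```python
import numpy as np

def segment_hand(depth, palm_rc, near, far, n=5):
    """Keep only pixels inside an n*n window whose depth lies in [near, far].

    depth   : 2D array of depth values (assumed millimetres)
    palm_rc : (row, col) of the palm point in the depth image
    n       : side length of the search window (hypothetical default)
    """
    half = n // 2
    r, c = palm_rc
    # Clip the search window to the image borders.
    r0, r1 = max(r - half, 0), min(r + half + 1, depth.shape[0])
    c0, c1 = max(c - half, 0), min(c + half + 1, depth.shape[1])
    window = depth[r0:r1, c0:c1]
    # Binary mask: 1 where the depth is within the hand range, 0 elsewhere.
    mask = ((window >= near) & (window <= far)).astype(depth.dtype)
    hand = np.zeros_like(depth)
    # Element-wise product zeroes out background pixels inside the window.
    hand[r0:r1, c0:c1] = window * mask
    return hand
```

Pixels outside the window are left at zero, so the result has the same shape as the input depth image and contains only the candidate hand region.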

[0032] Step S21, first collect the depth image and the color image of t...
