Visual tracking method for following robot

A visual tracking technology for following robots, applied in instruments, image data processing, computing, and related fields. It addresses low tracking accuracy and heavy computational load, and it enhances tracking stability while smoothing changes.


Embodiment Construction

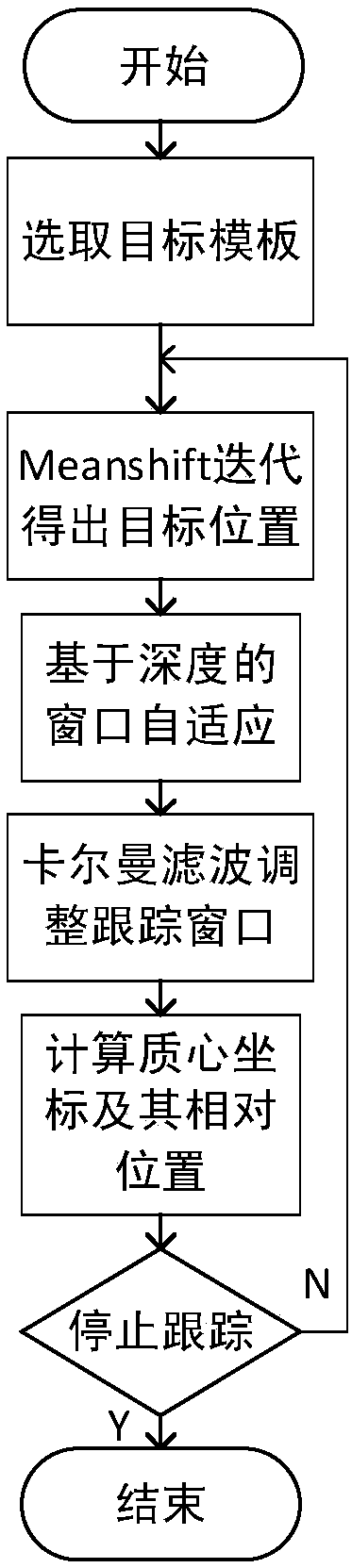

[0027] The present invention will be further described below in conjunction with the accompanying drawings.

[0028] Referring to Figures 1 to 5, a visual tracking method for a following robot comprises the following steps:

[0029] Step 1) Fuse the image information and depth information of the first frame and calculate the user's centroid; compare the depth at the centroid position with the depth values of the surrounding pixels to determine the region of the depth map that belongs to the user. This region is the target user template;
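Step 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented procedure. The text does not say how image and depth information are fused to locate the user, so the sketch assumes a hypothetical set of seed pixels already known to lie on the user (for example, from a person detector run on the color frame). It takes their centroid, reads the depth there, and grows a region of 4-connected pixels whose depth stays within a hypothetical tolerance `depth_tol` of that centroid depth. Region growing is an assumed way of "comparing the centroid with the depth values of surrounding pixels"; the function names are likewise hypothetical.

```python
from collections import deque


def centroid(points):
    """Mean (row, col) of a non-empty list of pixel coordinates."""
    n = len(points)
    return sum(r for r, _ in points) / n, sum(c for _, c in points) / n


def segment_user_region(depth, seed_points, depth_tol=0.15):
    """Return the set of (row, col) pixels judged to belong to the user.

    depth:       2-D list of depth values in meters (0 marks an invalid pixel).
    seed_points: hypothetical (row, col) pixels assumed to lie on the user.
    depth_tol:   hypothetical maximum |depth - centroid depth|, in meters.
    """
    rows, cols = len(depth), len(depth[0])
    cr, cc = centroid(seed_points)
    start = (int(round(cr)), int(round(cc)))
    ref = depth[start[0]][start[1]]  # depth at the user's centroid
    if ref <= 0:
        return set()  # no valid depth at the centroid
    region, queue = {start}, deque([start])
    while queue:  # breadth-first region growing over 4-connected neighbors
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in region:
                d = depth[nr][nc]
                if d > 0 and abs(d - ref) <= depth_tol:
                    region.add((nr, nc))
                    queue.append((nr, nc))
    return region
```

Pixels at a clearly different depth (such as the background behind the user) fail the tolerance test and are excluded, which is the intuition behind using depth to delimit the template. The bounding box of the returned pixel set could then serve as the template window for Step 2.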

[0030] Step 2) Establish color probability statistical models of the target user template and of the candidate user template in the next frame, measure their similarity with a similarity function, and iterate the meanshift algorithm; the region with the highest similarity coefficient is the best candidate user template in that frame;
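Step 2 resembles the classic kernel-based mean-shift tracker, and the sketch below follows that general scheme under assumptions the text does not state: an Epanechnikov kernel, a 64-bin RGB histogram (4 bins per channel) as the color probability model, and the Bhattacharyya coefficient as the similarity function. All function names and parameters (`color_model`, `mean_shift`, `eps`, `max_iter`) are hypothetical. Each iteration weights the pixels in the candidate window by sqrt(q_u / p_u), where q is the target model and p is the candidate model, and moves the window center to the weighted mean of the pixel positions.

```python
import math


def color_bin(pixel, bins_per_channel=4):
    """Quantize an (r, g, b) pixel with 0-255 channels into one histogram bin."""
    step = 256 // bins_per_channel
    r, g, b = (ch // step for ch in pixel)
    return (r * bins_per_channel + g) * bins_per_channel + b


def window_pixels(img, cy, cx, hh, hw):
    """Yield (y, x, kernel_weight) for pixels inside an Epanechnikov kernel."""
    rows, cols = len(img), len(img[0])
    for y in range(math.floor(cy - hh), math.ceil(cy + hh) + 1):
        for x in range(math.floor(cx - hw), math.ceil(cx + hw) + 1):
            if 0 <= y < rows and 0 <= x < cols:
                d2 = ((y - cy) / hh) ** 2 + ((x - cx) / hw) ** 2
                if d2 < 1.0:
                    yield y, x, 1.0 - d2


def color_model(img, cy, cx, hh, hw):
    """Kernel-weighted, normalized color histogram (the probability model)."""
    hist = [0.0] * 4 ** 3
    for y, x, k in window_pixels(img, cy, cx, hh, hw):
        hist[color_bin(img[y][x])] += k
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist


def bhattacharyya(p, q):
    """Similarity coefficient in [0, 1]; 1 means identical distributions."""
    return sum(math.sqrt(a * b) for a, b in zip(p, q))


def mean_shift(img, target, cy, cx, hh, hw, max_iter=20, eps=0.1):
    """Shift the window toward the region most similar to `target`.

    Returns the final center (cy, cx) and its similarity coefficient.
    """
    for _ in range(max_iter):
        cand = color_model(img, cy, cx, hh, hw)
        num_y = num_x = den = 0.0
        for y, x, _k in window_pixels(img, cy, cx, hh, hw):
            u = color_bin(img[y][x])
            if cand[u] > 0:
                w = math.sqrt(target[u] / cand[u])  # per-pixel mean-shift weight
                num_y += w * y
                num_x += w * x
                den += w
        if den == 0:
            break  # no overlap with the target colors
        ny, nx = num_y / den, num_x / den
        shift = math.hypot(ny - cy, nx - cx)
        cy, cx = ny, nx
        if shift < eps:
            break  # converged
    return cy, cx, bhattacharyya(target, color_model(img, cy, cx, hh, hw))
```

Because the Epanechnikov profile has a constant derivative inside its support, the mean-shift update reduces to this plain weighted average; other kernels would add a per-pixel kernel-derivative factor to each weight.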

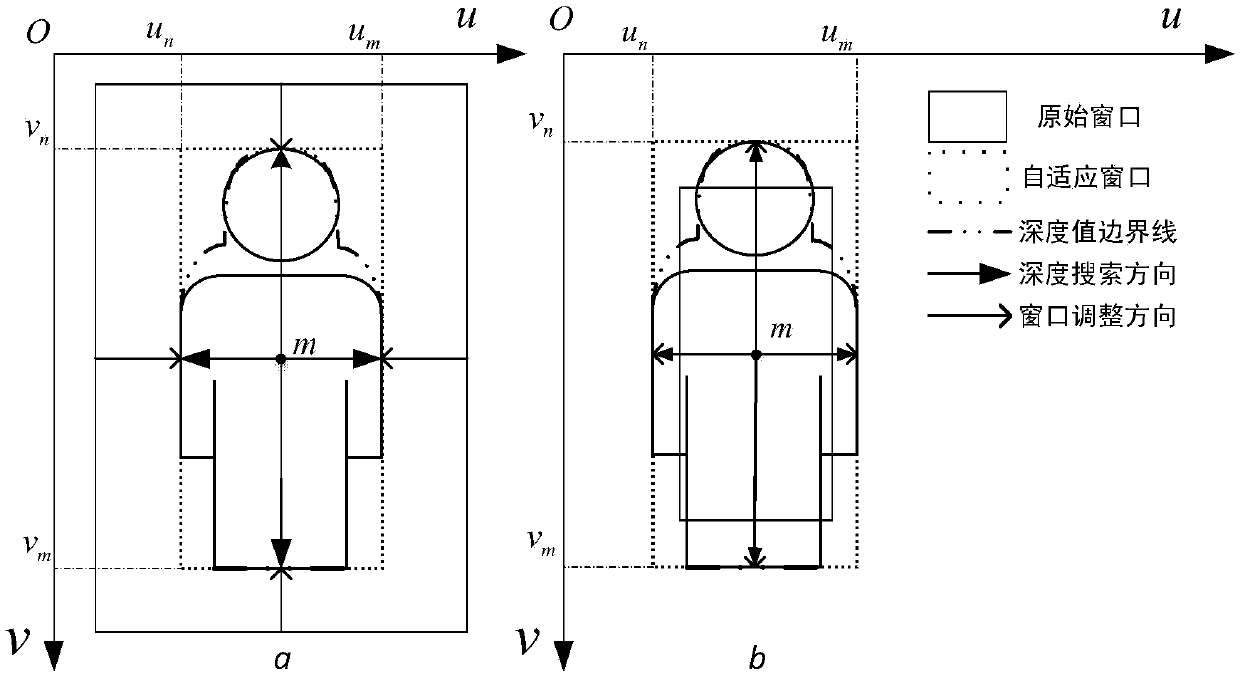

[0031] Step 3) By comparing the depth values of the center point of the best candi...
