Method and system for generating natural languages for describing image contents

A technique relating image content to natural language, applied in the field of image processing, that addresses problems such as objects going unidentified or missing from the description (for example, skis) and image recognition errors.


Embodiment Construction

[0060] The present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

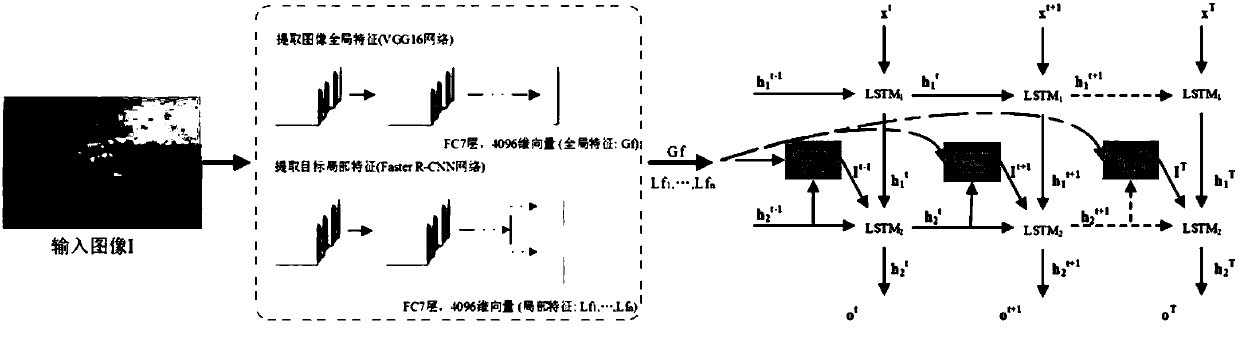

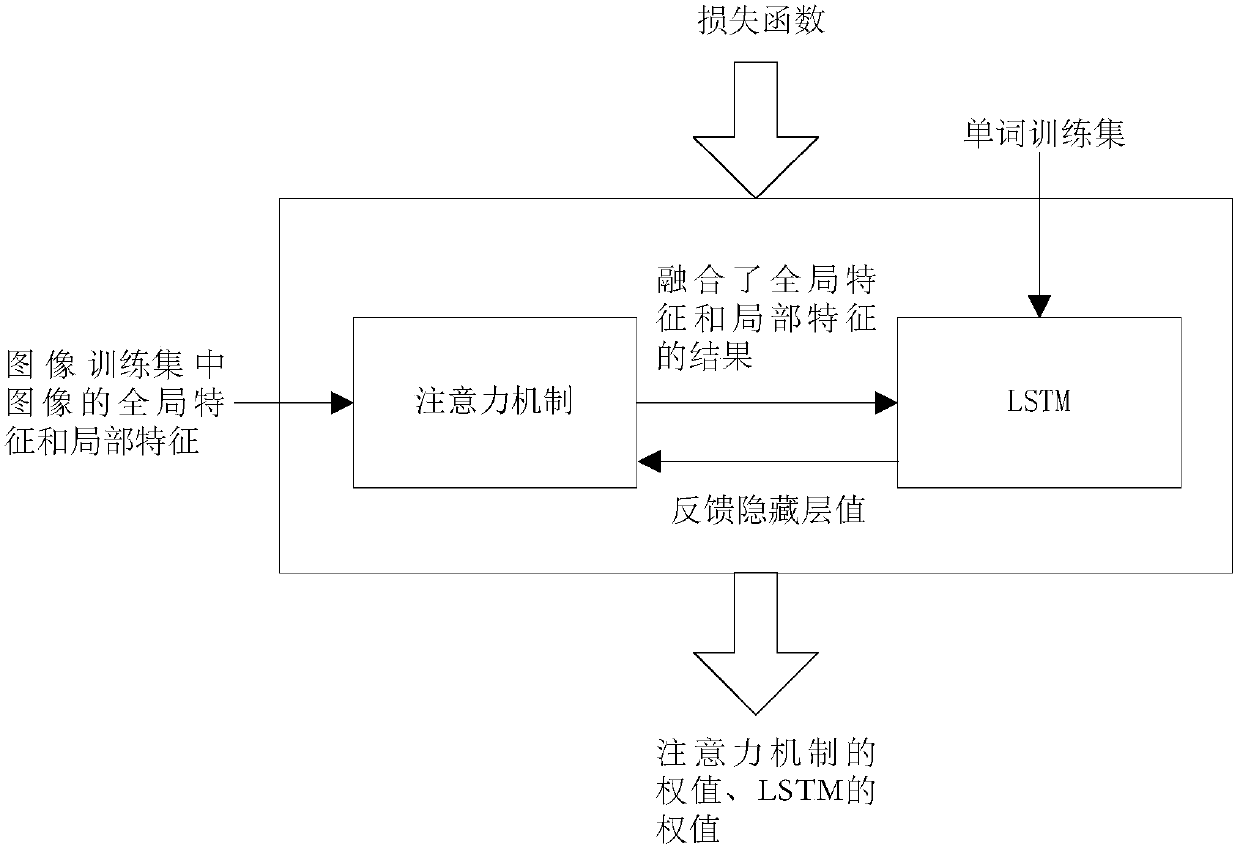

[0061] To capture the features of the image to be processed comprehensively, this application uses two concepts: "global features" and "local features". A global feature is an image feature that describes the context information that contains the image objects; a local feature, in contrast, is an image feature that describes the detailed information of an image object. Both global and local features are important when representing an image.

[0062] For example, in Figure 1(i), "crowd", "snow", and "slope" are global features, while "skis worn under people's feet", "hats on people's heads", "windows on houses", and so on are local features. Similarly, in Figure 1(ii), "person" and "soccer field" are global features, while "kite placed on the football field" ...
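
The distinction between global and local features can be illustrated with a minimal sketch. The excerpt above does not say how either kind of feature is extracted, so everything here is an assumption made for illustration: a plain intensity histogram stands in for a real feature extractor, the region boxes are supplied by hand rather than by a detector, and the names `global_feature`, `local_features`, and `describe` are hypothetical.

```python
import numpy as np


def _histogram(pixels: np.ndarray, bins: int) -> np.ndarray:
    """Normalized intensity histogram of 8-bit pixels (assumes a non-empty array)."""
    hist, _ = np.histogram(pixels, bins=bins, range=(0, 256))
    return hist / hist.sum()


def global_feature(image: np.ndarray, bins: int = 8) -> np.ndarray:
    """Stand-in for a global feature: one descriptor computed over the whole image."""
    return _histogram(image, bins)


def local_features(image: np.ndarray, boxes, bins: int = 8) -> np.ndarray:
    """Stand-in for local features: one descriptor per region of interest.

    Each box is (top, left, bottom, right) in pixel coordinates and is assumed
    to cover at least one pixel.
    """
    feats = [_histogram(image[t:b, l:r], bins) for (t, l, b, r) in boxes]
    return np.stack(feats)


def describe(image: np.ndarray, boxes, bins: int = 8) -> np.ndarray:
    """Concatenate the global descriptor with all local descriptors."""
    g = global_feature(image, bins)
    loc = local_features(image, boxes, bins)
    return np.concatenate([g, loc.ravel()])


# Usage with a synthetic grayscale image and two hand-picked regions.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(32, 32), dtype=np.uint8)
boxes = [(0, 0, 8, 8), (16, 16, 32, 32)]
vector = describe(image, boxes)
```

In this sketch the global descriptor summarizes the scene as a whole (the role played by "snow" or "slope" in the example), while each local descriptor summarizes one region (the role played by "skis" or "hats"). Concatenating them is only one simple way to keep both kinds of information in a single representation.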
