Object classification in image data using machine learning models

Machine learning models are applied to image data, in fields such as machine learning, computing models, and instruments, to address problems such as inaccurate object localization and identification.


Embodiment Construction

[0028] The current subject matter is directed to enhanced techniques for locating (i.e., detecting) objects within multidimensional image data. Such multidimensional image data can, for example, be generated by optical sensors that capture both color and depth information. In some cases, the multidimensional image data is RGB-D data, while in other cases other types of multidimensional image data can be utilized, including but not limited to point cloud data. Although described below primarily in connection with RGB-D image data, it should be understood that, unless otherwise stated, the present subject matter is applicable to other types of multidimensional image data (i.e., data that combines color and depth information). Such types of multidimensional image data include video streams from depth sensors or cameras, which can be represented as a series of RGB-D images.
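To make the data types named above concrete, the following is a minimal sketch of how an RGB-D frame, a video stream of such frames, and a colored point cloud might be represented. The `RGBDImage` class, the `to_point_cloud` helper, and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical names introduced for illustration only; the back-projection uses a standard pinhole camera model, which is an assumption and is not described in the source.

```python
from dataclasses import dataclass
from typing import List, Tuple

RGB = Tuple[int, int, int]                      # (R, G, B), each 0-255
ColoredPoint = Tuple[float, float, float, RGB]  # (x, y, z, color)


@dataclass
class RGBDImage:
    """One RGB-D frame: per-pixel color plus per-pixel depth (hypothetical layout)."""
    color: List[List[RGB]]    # color[row][col] -> (R, G, B)
    depth: List[List[float]]  # depth[row][col] -> distance in meters; 0.0 = no reading

    def __post_init__(self) -> None:
        # Color and depth must describe the same pixel grid.
        if len(self.color) != len(self.depth):
            raise ValueError("color and depth must have the same number of rows")
        for c_row, d_row in zip(self.color, self.depth):
            if len(c_row) != len(d_row):
                raise ValueError("color and depth rows must have the same width")

    @property
    def shape(self) -> Tuple[int, int]:
        height = len(self.depth)
        width = len(self.depth[0]) if height else 0
        return height, width


def to_point_cloud(image: RGBDImage, fx: float, fy: float,
                   cx: float, cy: float) -> List[ColoredPoint]:
    """Back-project pixels with valid depth into 3D (assumed pinhole camera model)."""
    points: List[ColoredPoint] = []
    for v, (c_row, d_row) in enumerate(zip(image.color, image.depth)):
        for u, (rgb, z) in enumerate(zip(c_row, d_row)):
            if z <= 0.0:
                continue  # skip pixels without a depth reading
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z, rgb))
    return points


# A tiny 2x2 frame; one pixel has no depth reading.
frame = RGBDImage(
    color=[[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]],
    depth=[[1.0, 2.0], [0.0, 4.0]],
)

# A video stream from a depth camera, represented as a series of RGB-D images.
video_stream: List[RGBDImage] = [frame, frame]

# Illustrative intrinsics only; real values come from camera calibration.
cloud = to_point_cloud(frame, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
```

In this sketch the point cloud keeps the color of each source pixel, so both representations carry the same combination of color and depth information.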

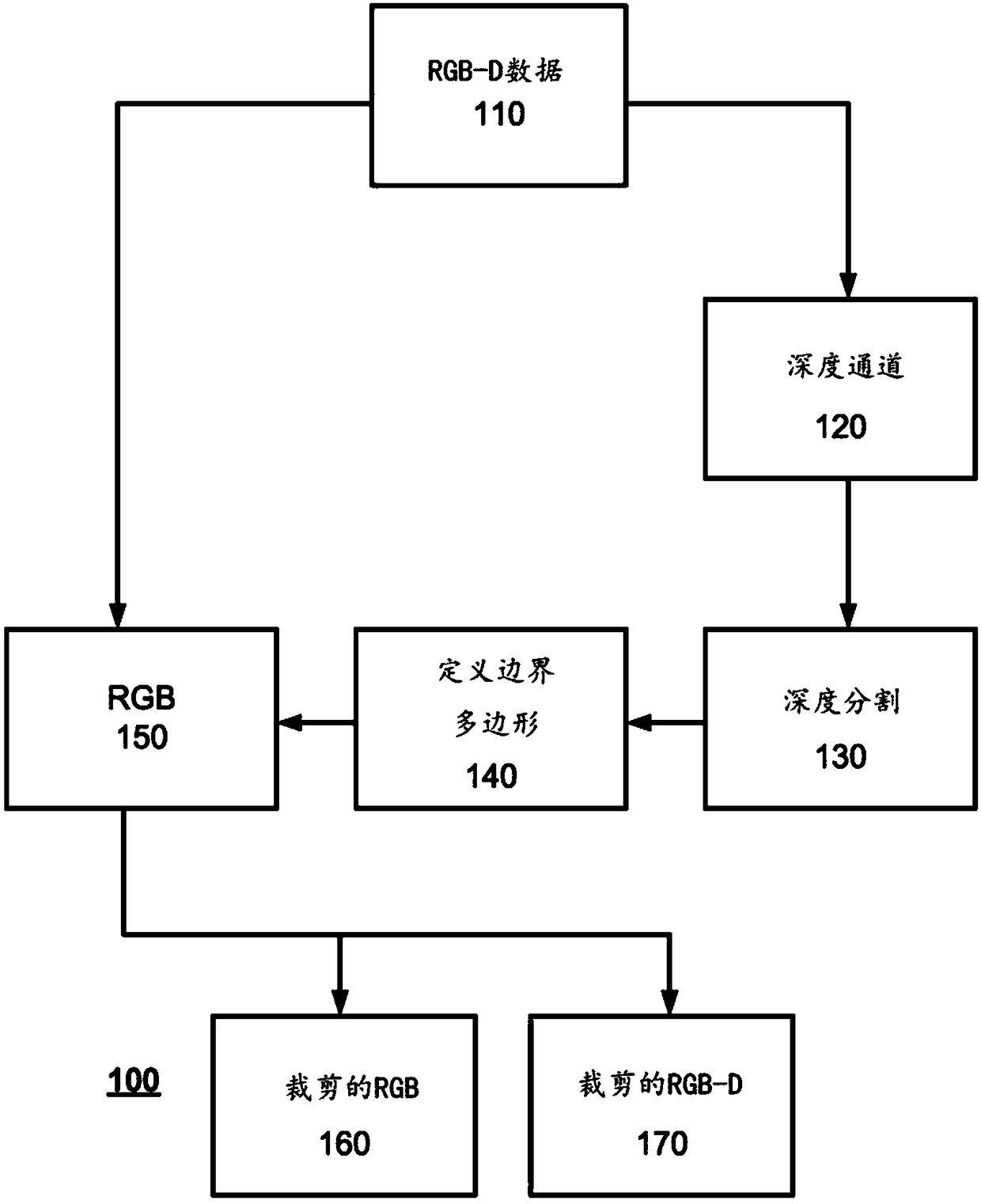

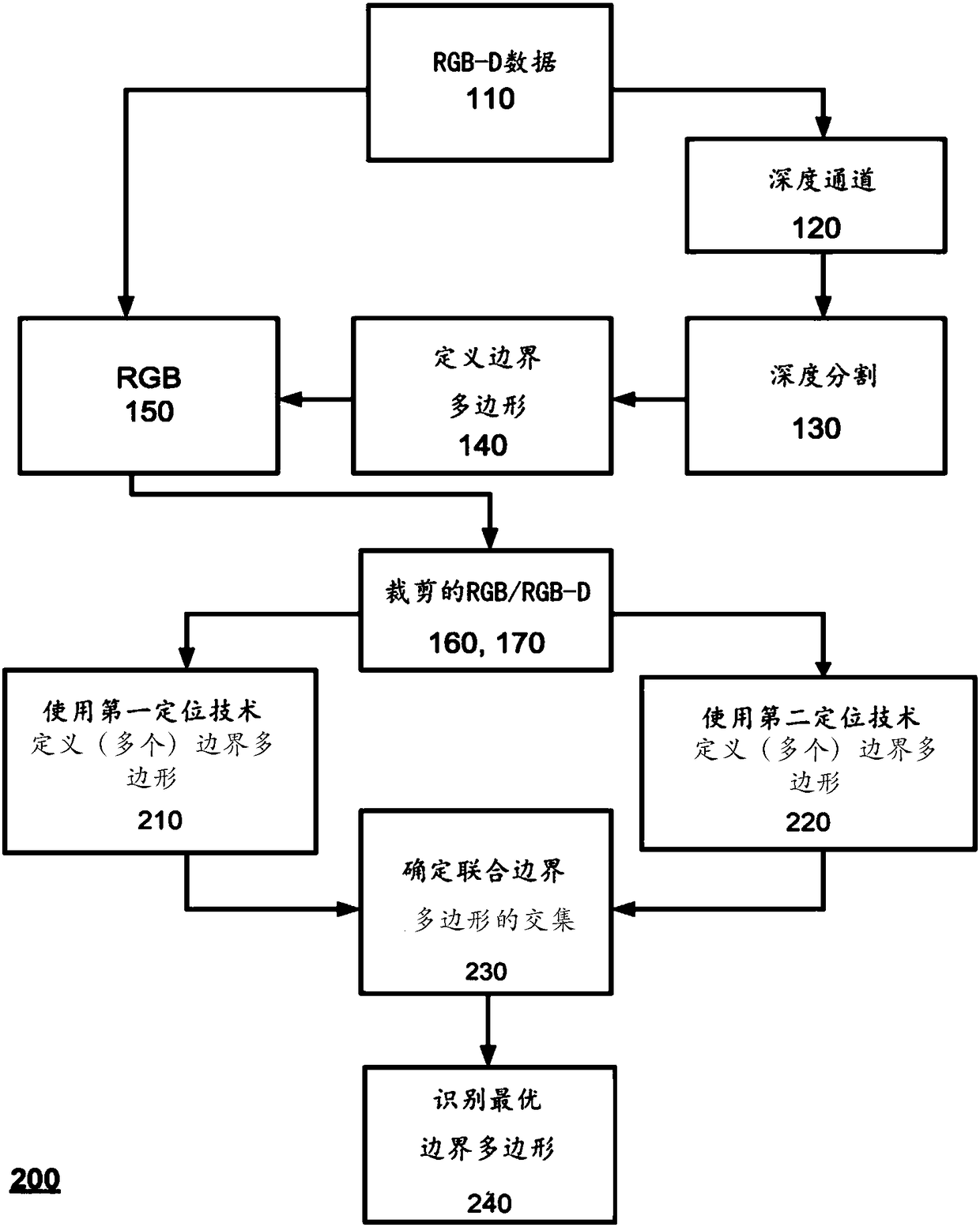

[0029] FIG. 1 is a process flow diagram 100 illustrating the generation of a bounding box u...
