Spatial scalable fast coding method

A spatially scalable fast coding method, applied in the field of spatially scalable video coding, that shortens coding time, reduces computational complexity, and improves real-time coding performance.

AI Technical Summary

Problems solved by technology

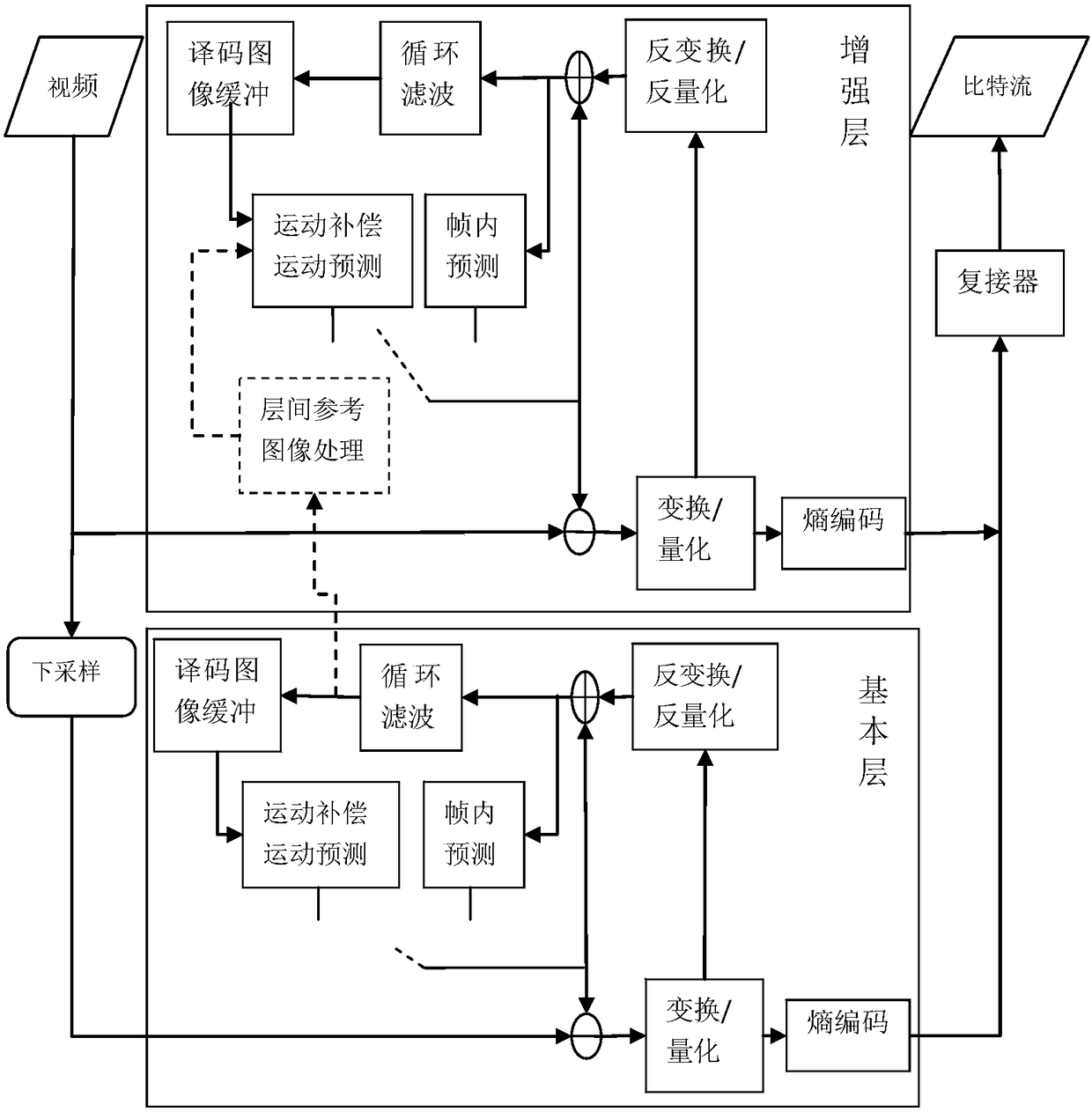

Method used

Examples

Embodiment 1

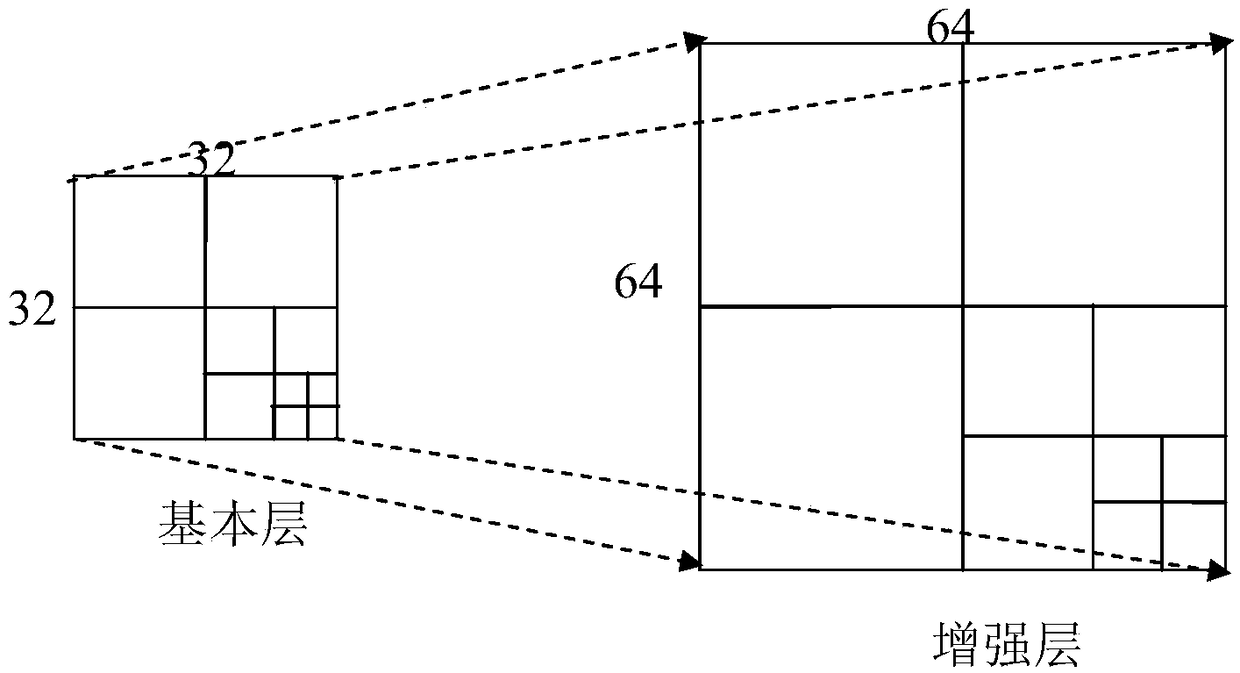

[0046] The enhancement-layer video sequence is down-sampled at a ratio of 2:1 to obtain the base-layer video sequence, and the base layer is then encoded;

[0047] Because the base-layer video sequence in spatially scalable video coding is obtained by down-sampling the enhancement-layer sequence at a ratio of 2:1, Figure 3 shows that a coding tree unit (CTU) of size 64×64 in the enhancement layer corresponds to a coding tree unit of size 32×32 in the base layer.
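The 2:1 relationship between the two layers can be illustrated with a minimal Python sketch. It is not the encoder's actual resampling process: plain decimation stands in for whatever down-sampling filter is used, and the helper names `downsample_2to1` and `base_layer_block` are hypothetical, introduced only for this illustration.

```python
def downsample_2to1(frame):
    """Keep every second sample in each dimension.

    Assumption: plain decimation without an anti-alias filter, used here
    only to show the 2:1 size relationship between the layers.
    """
    return [row[::2] for row in frame[::2]]


def base_layer_block(x, y, size, ratio=2):
    """Map an enhancement-layer block (top-left x, y, and size) to the
    co-located block in the base layer."""
    return x // ratio, y // ratio, size // ratio


# A synthetic 128x128 "enhancement layer" frame of luma-like samples.
frame = [[(r * 128 + c) % 256 for c in range(128)] for r in range(128)]
base = downsample_2to1(frame)

print(len(base), len(base[0]))       # 64 64
print(base_layer_block(64, 64, 64))  # (32, 32, 32)
```

The second call shows the mapping described above: a 64×64 coding tree unit in the enhancement layer covers the same picture area as a 32×32 coding tree unit in the base layer.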

[0048] A coding unit (CU) is obtained by splitting a coding tree unit, so a coding unit can be no larger than the coding tree unit. Moreover, the present invention targets intra-frame prediction in spatially scalable video coding, for which there are only two coding-unit partition modes: 2N×2N and N×N.
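As a rough illustration of the two partition modes, the sketch below computes the coding-unit size at each quadtree depth and the prediction blocks each mode produces. It assumes a 64×64 coding tree unit with depths 0 to 3, where each depth halves the side length; the function names `cu_size` and `prediction_blocks` are hypothetical.

```python
def cu_size(ctu_size, depth):
    """Side length of a coding unit at a given quadtree depth
    (each split halves the side length)."""
    return ctu_size >> depth


def prediction_blocks(size, mode):
    """Return (x, y, size) prediction blocks inside one coding unit
    for the two intra partition modes named in the text."""
    if mode == "2Nx2N":
        # The coding unit is predicted as a single block.
        return [(0, 0, size)]
    if mode == "NxN":
        # The coding unit is split into four equal square blocks.
        half = size // 2
        return [(0, 0, half), (half, 0, half), (0, half, half), (half, half, half)]
    raise ValueError(f"unsupported intra partition mode: {mode}")


print([cu_size(64, d) for d in range(4)])  # [64, 32, 16, 8]
print(prediction_blocks(8, "2Nx2N"))       # [(0, 0, 8)]
print(prediction_blocks(8, "NxN"))         # [(0, 0, 4), (4, 0, 4), (0, 4, 4), (4, 4, 4)]
```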

[0049] Further, the spatially scalable coding method adop...

Embodiment 2

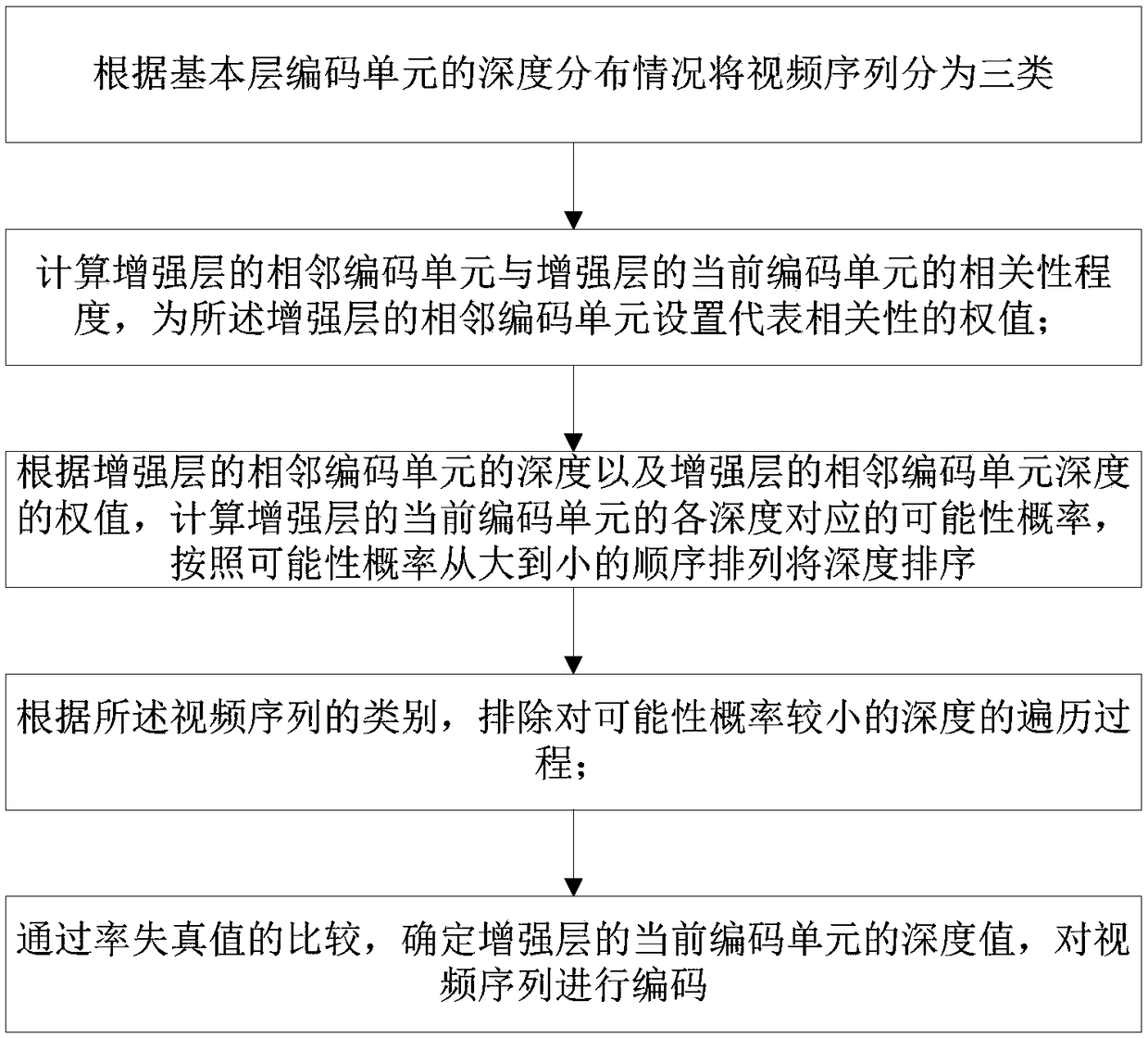

[0073] Figure 6 is a flowchart of a preferred embodiment of the present invention, showing the process of predicting the depth of the current enhancement-layer coding unit from its adjacent coding units.

[0074] Another possible method for predicting the depth of the current enhancement-layer coding unit is as follows:

[0075] In the first step, the original data is generated according to the international standard technology; the depth of the base-layer coding units in the original data is computed, the ratio of that depth to 3 is calculated, and the video sequence is classified accordingly;

[0076] As an alternative, when the ratio of depth to 3 is greater than the upper threshold, the video sequence is classified as the first sequence (a sequence with a complex background); when the ratio of depth to 3 is smaller than the lower threshold, the video sequence is classified as the second sequence (a sequence with a simple background); otherwi...
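The threshold test in this step can be sketched as follows. Several points are assumptions rather than details from the text: the "depth" is taken to be the mean depth of the base-layer coding units, the threshold values 0.3 and 0.7 are placeholders, and the function name `classify_sequence` is hypothetical. The third case is cut off in the text, so the sketch returns `None` for it instead of guessing.

```python
from statistics import mean

MAX_DEPTH = 3  # the text divides the depth by 3


def classify_sequence(base_layer_depths, lower, upper):
    """Classify a video sequence from its base-layer coding-unit depths.

    Assumption: the ratio is the mean depth divided by MAX_DEPTH.
    """
    ratio = mean(base_layer_depths) / MAX_DEPTH
    if ratio > upper:
        return "first"   # sequence with a complex background
    if ratio < lower:
        return "second"  # sequence with a simple background
    return None          # remaining case is truncated in the source text


# Placeholder thresholds, chosen only for illustration.
LOWER, UPPER = 0.3, 0.7

print(classify_sequence([3, 3, 2, 3], LOWER, UPPER))  # first
print(classify_sequence([0, 0, 1, 0], LOWER, UPPER))  # second
print(classify_sequence([1, 2, 1, 2], LOWER, UPPER))  # None
```

Normalizing by the maximum depth keeps the ratio between 0 and 1, so one pair of thresholds can be applied regardless of how many coding units a sequence contains.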
