Method and system for automatic treebank conversion based on tree-shaped recurrent neural network

This recurrent neural network and tree technology, applied in neural learning methods, biological neural network models, neural architectures, and related areas, addresses problems such as mediocre conversion quality, insufficient use of the source treebank, and a lack of double-tree aligned data.


Examples

Embodiment 1

[0071] This embodiment provides an automatic treebank conversion method based on a tree-shaped recurrent neural network, comprising:

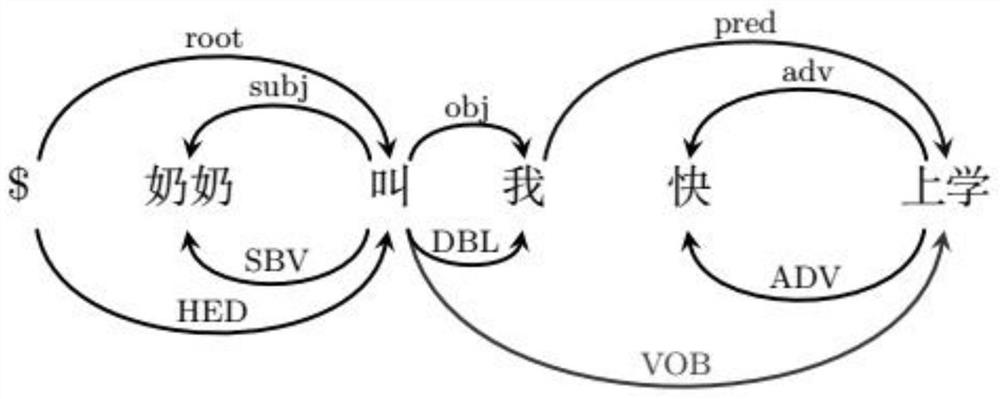

[0072] Obtaining a double-tree aligned database that stores sentences annotated under two different annotation specifications;

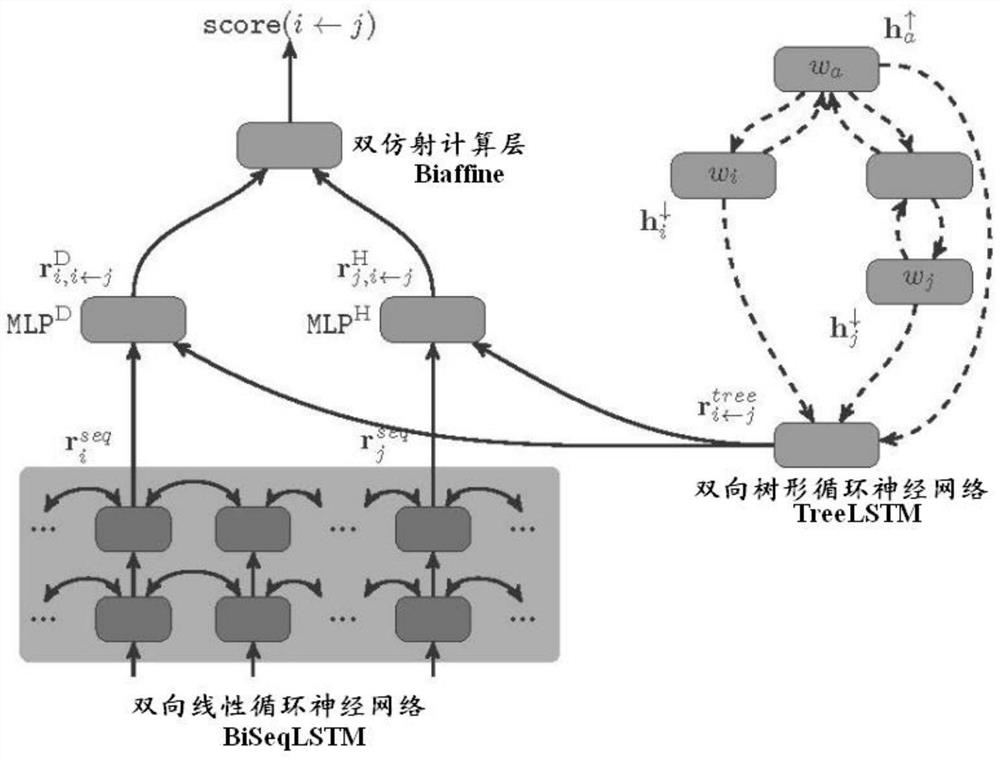

[0073] For each sentence, calculating the dependency arc score of every pair of words in the target-side tree, where the two words are denoted $w_i$ and $w_j$ and it is assumed that, in the target tree, $w_i$ is the modifier and $w_j$ is the head word. Calculating the score of the dependency arc between $w_i$ and $w_j$ in the target tree comprises:

[0074] Extracting the shortest path tree of words $w_i$ and $w_j$ in the source tree, and, based on a bidirectional tree-shaped recurrent neural network (TreeLSTM), obtaining for words $w_i$, $w_j$, and $w_a$ in the shortest path tree ($w_a$ being the lowest common ancestor of $w_i$ and $w_j$) the respective hidden layer output...
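The two steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the source tree is assumed to be stored as a 1-based head array (0 marks the root), the shortest path tree of $w_i$ and $w_j$ is taken to be the words on the tree path between them, which passes through their lowest common ancestor $w_a$, and the helper names `ancestors`, `shortest_path_tree`, and `all_candidate_arcs` are hypothetical. The arc scorer itself is omitted because its details are elided above.

```python
from itertools import permutations
from typing import Dict, List, Tuple

# Sketch under assumptions (not from the source): heads[k - 1] is the head of
# 1-based word k, and 0 marks the root. All helper names are hypothetical.


def ancestors(heads: List[int], k: int) -> List[int]:
    """Return [k, head(k), head(head(k)), ..., root] for 1-based word index k."""
    chain = [k]
    while heads[k - 1] != 0:
        k = heads[k - 1]
        chain.append(k)
    return chain


def shortest_path_tree(heads: List[int], i: int, j: int) -> Tuple[int, List[int]]:
    """Return (a, nodes): w_a is the lowest common ancestor of w_i and w_j in the
    source tree, and nodes lists every word on the path w_i ... w_a ... w_j."""
    up_i = ancestors(heads, i)
    up_j = ancestors(heads, j)
    on_j_chain = set(up_j)
    a = next(k for k in up_i if k in on_j_chain)  # first ancestor shared by both
    path_i = up_i[: up_i.index(a) + 1]            # w_i up to and including w_a
    path_j = up_j[: up_j.index(a)]                # w_j up to, but excluding, w_a
    return a, path_i + path_j[::-1]


def all_candidate_arcs(source_heads: List[int]) -> Dict[Tuple[int, int], Tuple[int, List[int]]]:
    """For every ordered pair (i, j), i != j, with w_i as the candidate modifier
    and w_j as the candidate head in the target tree, extract the source-side
    shortest path tree that a (hypothetical) arc scorer would consume."""
    n = len(source_heads)
    return {(i, j): shortest_path_tree(source_heads, i, j)
            for i, j in permutations(range(1, n + 1), 2)}


# Toy source tree shaped like Example 1: A=1, B=2, C=3, with C heading A and B.
source_heads = [3, 3, 0]
print(shortest_path_tree(source_heads, 1, 2))  # (3, [1, 3, 2])
print(len(all_candidate_arcs(source_heads)))   # 6
```

In this sketch each ordered pair gets its own entry, since swapping the roles of modifier and head gives a different candidate arc; a sentence of $n$ words therefore yields $n(n-1)$ shortest path trees to encode.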

Example 1

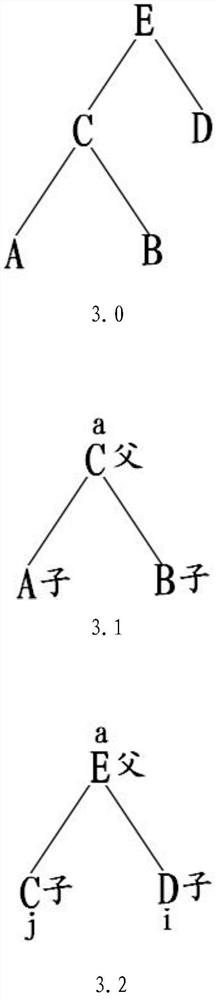

[0110] Example 1: see Figure 3.1, which shows the shortest path tree of words C, A, and B, where word A corresponds to $w_i$, word B to $w_j$, and word C to $w_a$.

[0111] The computation proceeds from the bottom up:

[0112] (1) Compute the hidden layer output vector of word A. One part of the LSTM node's input is the top-layer output vector corresponding to word A; the other part is a zero vector, because word A has no children in the shortest path tree.

[0113] (2) Compute the hidden layer output vector of word B. One part of the LSTM node's input is the top-layer output vector corresponding to word B; the other part is a zero vector, because word B has no children in the shortest path tree.

[0114] (3) Compute the hidden layer output vector of word C, the ancestor node. One part of the LSTM node's input is the top-layer output vector corresponding to word C; because word C has two children, the other part consists of the hidden layer output vectors of its child nodes, word A and word B. At this point, all calculations in Figure 3.1...
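Steps (1) to (3) amount to a post-order (bottom-up) pass of a tree-structured LSTM over the shortest path tree. The sketch below assumes the Child-Sum TreeLSTM cell, in which the children's hidden outputs are summed to form the second part of the node input (a zero vector for leaves); the source does not give the gate equations or say whether child outputs are summed or concatenated, so the cell, the helper names (`ChildSumTreeLSTMCell`, `bottom_up`), and the toy input vectors are all hypothetical. Only the bottom-up direction of the bidirectional TreeLSTM is shown.

```python
import math
import random
from typing import Dict, List, Tuple

Vec = List[float]
State = Tuple[Vec, Vec]  # (hidden output h, memory cell c)


def matvec(m: List[Vec], v: Vec) -> Vec:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def vadd(*vs: Vec) -> Vec:
    return [sum(xs) for xs in zip(*vs)]


def vmul(a: Vec, b: Vec) -> Vec:
    return [x * y for x, y in zip(a, b)]


def sigmoid(v: Vec) -> Vec:
    return [1.0 / (1.0 + math.exp(-x)) for x in v]


def vtanh(v: Vec) -> Vec:
    return [math.tanh(x) for x in v]


class ChildSumTreeLSTMCell:
    """Assumption: a Child-Sum TreeLSTM cell (the source does not specify the
    gate equations). Gates: input i, forget f (one per child), output o, and
    candidate u. Weights are small random values for illustration only."""

    def __init__(self, in_dim: int, hid_dim: int, seed: int = 0):
        rng = random.Random(seed)

        def rand_mat(rows: int, cols: int) -> List[Vec]:
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.hid_dim = hid_dim
        self.W = {g: rand_mat(hid_dim, in_dim) for g in "ifou"}
        self.U = {g: rand_mat(hid_dim, hid_dim) for g in "ifou"}
        self.b = {g: [0.0] * hid_dim for g in "ifou"}

    def _pre(self, g: str, x: Vec, h: Vec) -> Vec:
        return vadd(matvec(self.W[g], x), matvec(self.U[g], h), self.b[g])

    def __call__(self, x: Vec, children: List[State]) -> State:
        # Second input part: the sum of the children's hidden outputs,
        # which stays the zero vector for a leaf such as word A or word B.
        h_sum = [0.0] * self.hid_dim
        for h_k, _ in children:
            h_sum = vadd(h_sum, h_k)
        i = sigmoid(self._pre("i", x, h_sum))
        o = sigmoid(self._pre("o", x, h_sum))
        u = vtanh(self._pre("u", x, h_sum))
        c = vmul(i, u)
        for h_k, c_k in children:  # a separate forget gate for each child
            f_k = sigmoid(self._pre("f", x, h_k))
            c = vadd(c, vmul(f_k, c_k))
        return vmul(o, vtanh(c)), c


def bottom_up(cell: ChildSumTreeLSTMCell, root: str,
              kids: Dict[str, List[str]], x: Dict[str, Vec]) -> Dict[str, Vec]:
    """Encode children before parents (post-order); return each node's h."""
    states: Dict[str, State] = {}

    def visit(node: str) -> None:
        for child in kids.get(node, []):
            visit(child)
        states[node] = cell(x[node], [states[c] for c in kids.get(node, [])])

    visit(root)
    return {node: h for node, (h, _) in states.items()}


# Example 1: C is the ancestor with children A and B. The inputs are toy
# vectors standing in for each word's top-layer output vector (hypothetical).
cell = ChildSumTreeLSTMCell(in_dim=4, hid_dim=3)
x = {"A": [0.1, 0.2, 0.3, 0.4], "B": [0.4, 0.3, 0.2, 0.1], "C": [0.5, 0.5, 0.5, 0.5]}
hidden = bottom_up(cell, "C", {"C": ["A", "B"]}, x)
print({k: [round(v, 4) for v in h] for k, h in hidden.items()})
```

Summing the children keeps the cell independent of how many children a node has, which suits shortest path trees whose ancestor may have one or two children on the path.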

Example 2

[0119] Example 2: see Figure 3.2, which shows the shortest path tree of words E, C, and D, where word D corresponds to $w_i$, word C to $w_j$, and word E to $w_a$. Word E is the lowest common ancestor of words C and D; the calculation proceeds as in Example 1 and is not repeated here.

