Three-dimensional human body posture estimation method based on monocular camera

This technology, which combines a monocular camera with 3D pose estimation and is applied in the field of computer vision, addresses problems such as poor robustness, low computational efficiency, and dependence on 3D human body models, and achieves wide applicability, strong adaptability, and robustness.


Embodiment Construction

[0046] Specific embodiments of the present invention are described in detail below with reference to the accompanying drawings and preferred embodiments.

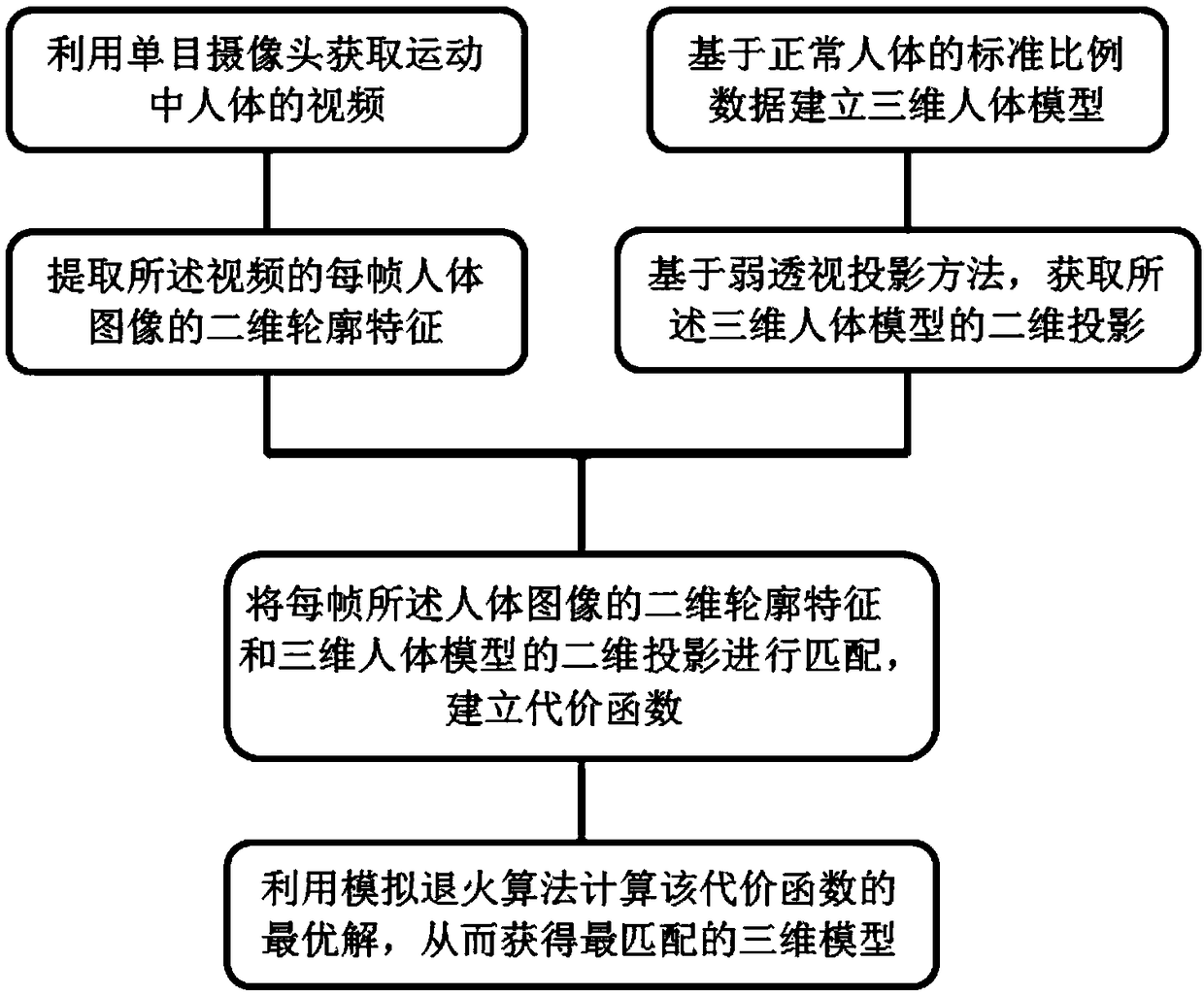

[0047] Referring to Figure 1, the present invention provides a method for estimating the three-dimensional pose of a human body based on a monocular camera, comprising the following steps:

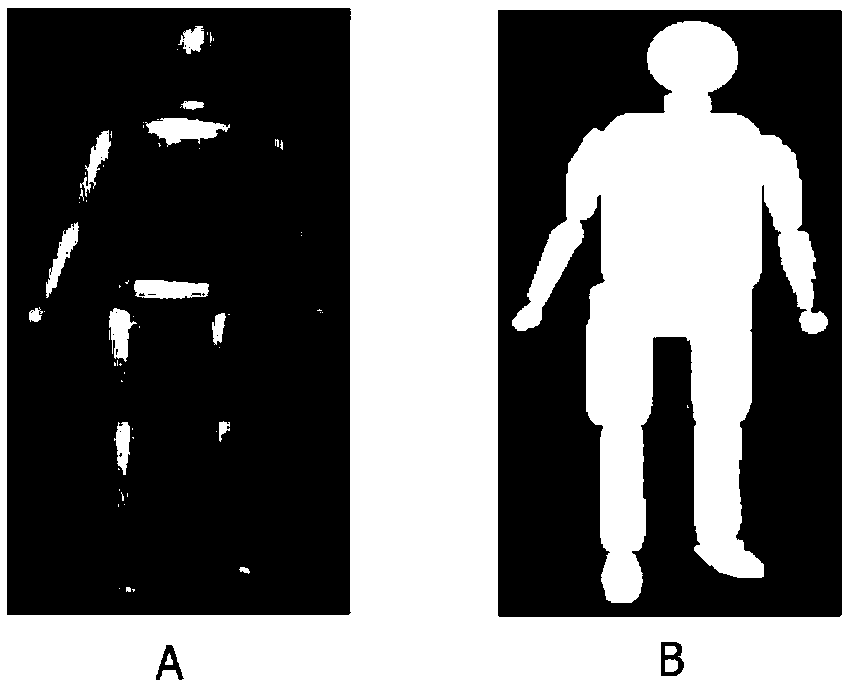

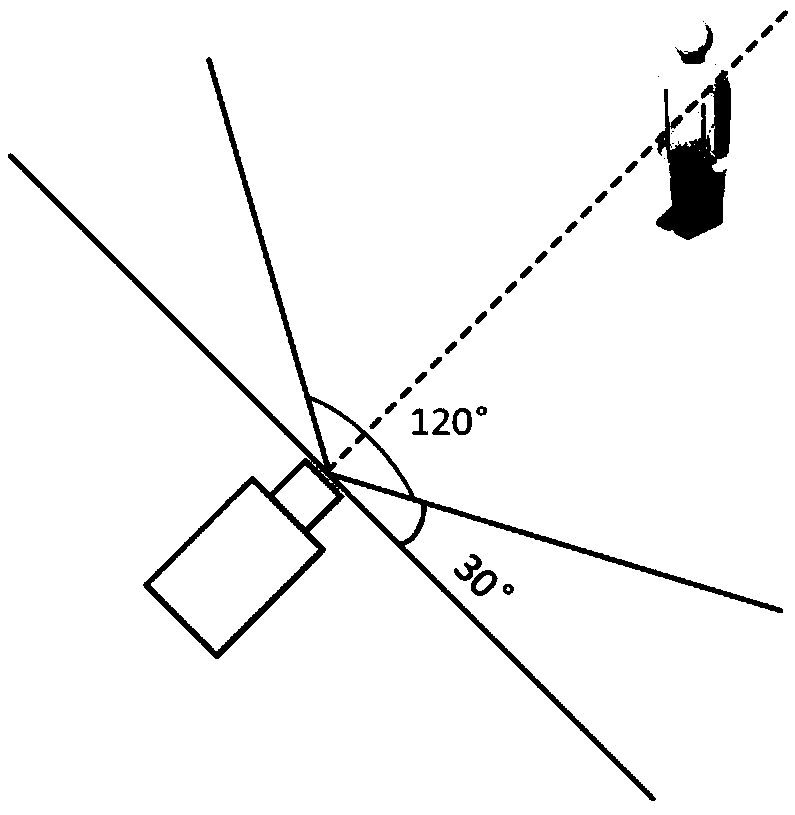

[0048] Step 1. Establish a 3D human body model based on standard proportion data for a normal human body, and obtain the 2D projection of the 3D human body model using the weak perspective projection method. As shown in Figure 2, the 3D human body model comprises at least six parts (the head, torso, left arm, left palm, right arm, and right palm) and has 40 degrees of freedom, of which 25 are used to adjust the overall scaling, rotation angle, and joint angles of the human body model, and 15 are used to adjust the length and width of ...
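Step 1 relies on a weak perspective projection of the 3D body model. The Python sketch below illustrates that idea under stated assumptions, not as the patented implementation: the 40 parameters are stored as one flat vector whose first 25 entries form the scaling/rotation/joint-angle group and whose last 15 form the segment-size group (this ordering is an assumption; the text only gives the counts), model joints are plain 3D points, and only a single rotation about the vertical axis is shown. The names `split_params`, `rotation_y`, `weak_perspective`, and `weak_perspective_scale` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]

# Counts taken from the text; the ordering inside the vector is an assumption.
N_POSE_DOF = 25   # overall scaling, rotation angle, and joint angles
N_SHAPE_DOF = 15  # segment length/width parameters


def split_params(params: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Split a hypothetical 40-element parameter vector into its two groups."""
    if len(params) != N_POSE_DOF + N_SHAPE_DOF:
        raise ValueError("expected 40 parameters")
    return list(params[:N_POSE_DOF]), list(params[N_POSE_DOF:])


def rotation_y(theta: float) -> List[List[float]]:
    """3x3 rotation matrix about the vertical (y) axis, theta in radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def weak_perspective_scale(focal_px: float, mean_depth: float) -> float:
    """A common choice of scale: focal length divided by the body's mean depth."""
    if mean_depth <= 0:
        raise ValueError("mean_depth must be positive")
    return focal_px / mean_depth


def weak_perspective(points: Sequence[Vec3], rot: List[List[float]],
                     scale: float, tx: float, ty: float) -> List[Vec2]:
    """Rotate each point, drop its depth (orthographic step), then apply one
    shared scale and a 2D translation. Sharing a single scale approximates
    full perspective when the body's depth extent is small relative to its
    distance from the camera. Image-axis sign conventions are assumed."""
    out: List[Vec2] = []
    for p in points:
        x = sum(rot[0][k] * p[k] for k in range(3))
        y = sum(rot[1][k] * p[k] for k in range(3))
        out.append((scale * x + tx, scale * y + ty))
    return out
```

For example, `weak_perspective([(0.0, 1.7, 0.0)], rotation_y(0.0), 100.0, 320.0, 240.0)` maps a joint 1.7 units above the model origin to approximately (320, 410). Because the projection is linear in the rotated model coordinates, it is convenient when fitting model parameters to 2D observations.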

