Automated steering systems and methods for a robotic endoscope

Endoscope and robotics technology, applied to the field of automated steering systems and methods for robotic endoscopes.

Examples

Embodiment

[0199] Embodiment - Soft Robotic Endoscope Including Automated Steering Control System

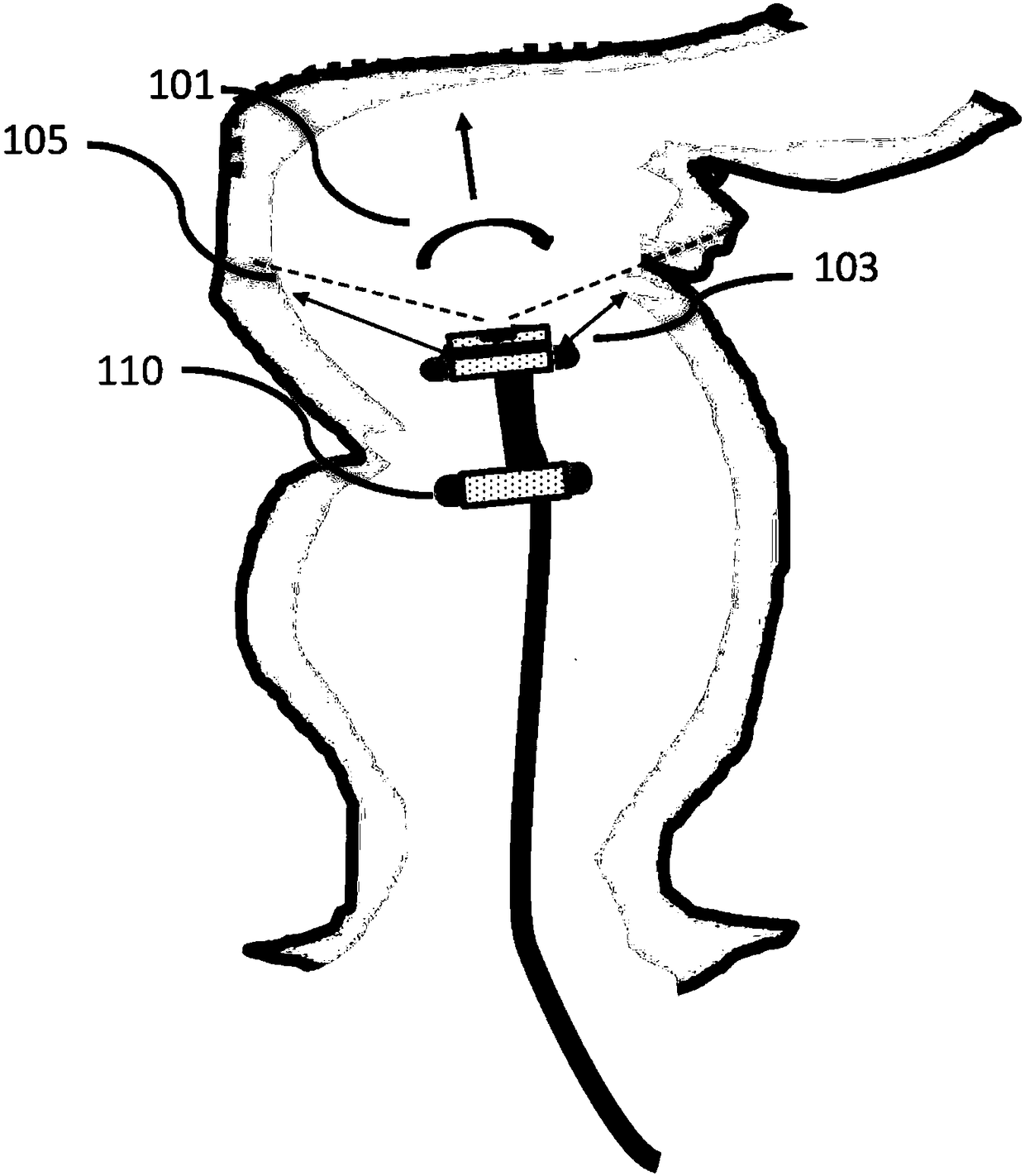

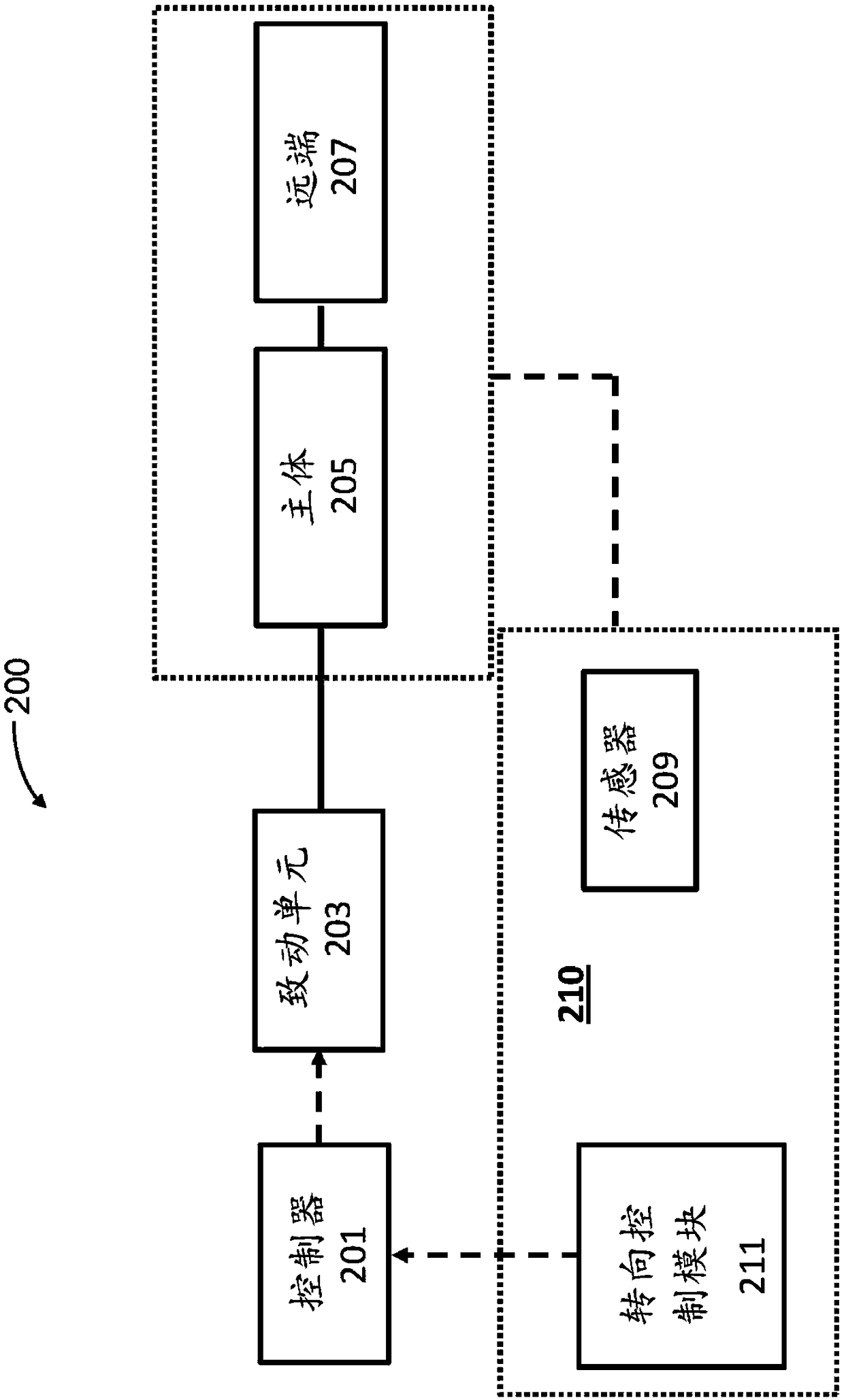

[0200] Figure 11 provides a block diagram illustrating a robotic colonoscope that includes the automated steering control system of the present disclosure. As shown in the figure, the robotic colonoscopy system includes a guide portion 1101 (i.e., a steerable bending section) to which a plurality of sensors can be affixed. In this particular embodiment, the plurality of sensors includes imaging sensors (e.g., cameras) and touch (or distance) sensors. The movement of the steerable bending section is controlled by the actuation unit 1103. In this embodiment, the degrees of motion controlled by the actuation unit include at least rotational motion about the pitch axis and the yaw axis. In some embodiments, the degrees of motion controlled by the actuation unit may also include, for example, rotation about the roll axis or translational movement (e.g., forward or backward). In some case...

Embodiment approach 1

[0244] Embodiment 1. A control system for providing an adaptive steering control output signal for steering a robotic endoscope, the control system comprising: a) a first image sensor configured to capture a first input data stream comprising a series of two or more images; and b) one or more processors that are individually or collectively configured to generate a steering control output signal based on an analysis, using a machine learning architecture, of data derived from said first input data stream, wherein the steering control output signal adapts in real time to changes in the data derived from the first input data stream.
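The data flow of Embodiment 1 (image stream in, one steering command out per frame) can be illustrated with a minimal Python sketch. Everything named here is an assumption made for illustration: the `Frame` format, the `SteeringCommand` type, the sign conventions, the `gain` parameter, and above all `darkest_pixel_target`, a simple heuristic that stands in for the machine learning architecture, which the source does not specify.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List, Tuple

Frame = List[List[float]]  # assumed format: grayscale image as rows of intensities


@dataclass
class SteeringCommand:
    pitch: float  # normalized to [-1, 1]; positive = toward the image top (assumed)
    yaw: float    # normalized to [-1, 1]; positive = toward the image right (assumed)


def darkest_pixel_target(frame: Frame) -> Tuple[float, float]:
    """Hypothetical stand-in for the learned model: (row, col) of the darkest pixel."""
    _, row, col = min(
        (value, r, c) for r, line in enumerate(frame) for c, value in enumerate(line)
    )
    return float(row), float(col)


def clamp(value: float, low: float = -1.0, high: float = 1.0) -> float:
    """Limit a command to the normalized actuator range."""
    return max(low, min(high, value))


def steering_stream(
    frames: Iterable[Frame],
    predict_target: Callable[[Frame], Tuple[float, float]] = darkest_pixel_target,
    gain: float = 1.0,
) -> Iterator[SteeringCommand]:
    """Yield one steering command per frame, so the output tracks the input stream."""
    for frame in frames:
        center_r = (len(frame) - 1) / 2
        center_c = (len(frame[0]) - 1) / 2
        target_r, target_c = predict_target(frame)
        # Offset of the predicted target from the image center, scaled to [-1, 1].
        up = (center_r - target_r) / max(center_r, 1.0)
        right = (target_c - center_c) / max(center_c, 1.0)
        yield SteeringCommand(pitch=clamp(gain * up), yaw=clamp(gain * right))


# Two synthetic 3x3 frames: the dark spot moves from the top right to the center.
frames = [
    [[0.9, 0.9, 0.1], [0.9, 0.9, 0.9], [0.9, 0.9, 0.9]],
    [[0.9, 0.9, 0.9], [0.9, 0.1, 0.9], [0.9, 0.9, 0.9]],
]
commands = list(steering_stream(frames))
```

Because the command is recomputed for every frame, the output changes as soon as the image content changes, which is one simple reading of "adapts in real time". A trained model could be passed in place of the heuristic through the `predict_target` argument.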

Embodiment approach 2

[0245] Embodiment 2. The control system of embodiment 1, further comprising at least a second image sensor configured to capture at least a second input data stream comprising a series of two or more images of the cavity, wherein the steering control output signal is generated based on an analysis, using a machine learning architecture, of data derived from the first input data stream and the at least second input data stream, and wherein the steering control output signal adapts in real time to changes in data derived from said first input data stream or said at least second input data stream.
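One possible way to combine two image streams into a single steering output is sketched below in Python. The source does not say how the streams are combined; the confidence-weighted average used here is a swapped-in technique chosen only for illustration, and the `Estimate` tuple layout and the `fuse_estimates` function are hypothetical names.

```python
from typing import Optional, Sequence, Tuple

# Assumed per-stream result: (pitch, yaw, confidence), with confidence >= 0.
Estimate = Tuple[float, float, float]


def fuse_estimates(estimates: Sequence[Optional[Estimate]]) -> Tuple[float, float]:
    """Confidence-weighted average of per-stream steering estimates.

    A stream that produced no usable estimate is passed as None and ignored.
    If no stream is usable, a neutral (0.0, 0.0) command is returned.
    """
    valid = [e for e in estimates if e is not None and e[2] > 0.0]
    if not valid:
        return 0.0, 0.0
    total = sum(conf for _, _, conf in valid)
    pitch = sum(p * conf for p, _, conf in valid) / total
    yaw = sum(y * conf for _, y, conf in valid) / total
    return pitch, yaw


# Both streams usable and equally trusted: the command is their mean.
both = fuse_estimates([(1.0, 0.0, 1.0), (0.0, 1.0, 1.0)])

# Second stream unavailable: the command follows the first stream alone.
first_only = fuse_estimates([(0.5, -0.25, 0.5), None])
```

Under this scheme a change in either stream shifts the fused command on the next call, which matches the requirement that the output adapt to changes in the first or the second input data stream.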
