A Human 3D Pose Estimation Method Combining Densely Connected Attention Pyramid Residual Networks and Isometric Constraints

Dense connection and attention technology, applied in character and pattern recognition, biological neural network models, computing, etc., addresses problems such as ill-posedness, gradient explosion, and vanishing gradients, improving recognition and increasing feature reuse.

Embodiment Construction

[0049] In order to describe the present invention more specifically, the technical solutions of the present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

[0050] The human 3D pose estimation method provided by the embodiment of the present invention obtains the 3D pose of a human body from an image, and can be applied to video surveillance, behavior recognition, human body interaction, virtual reality, game animation, and medical care.

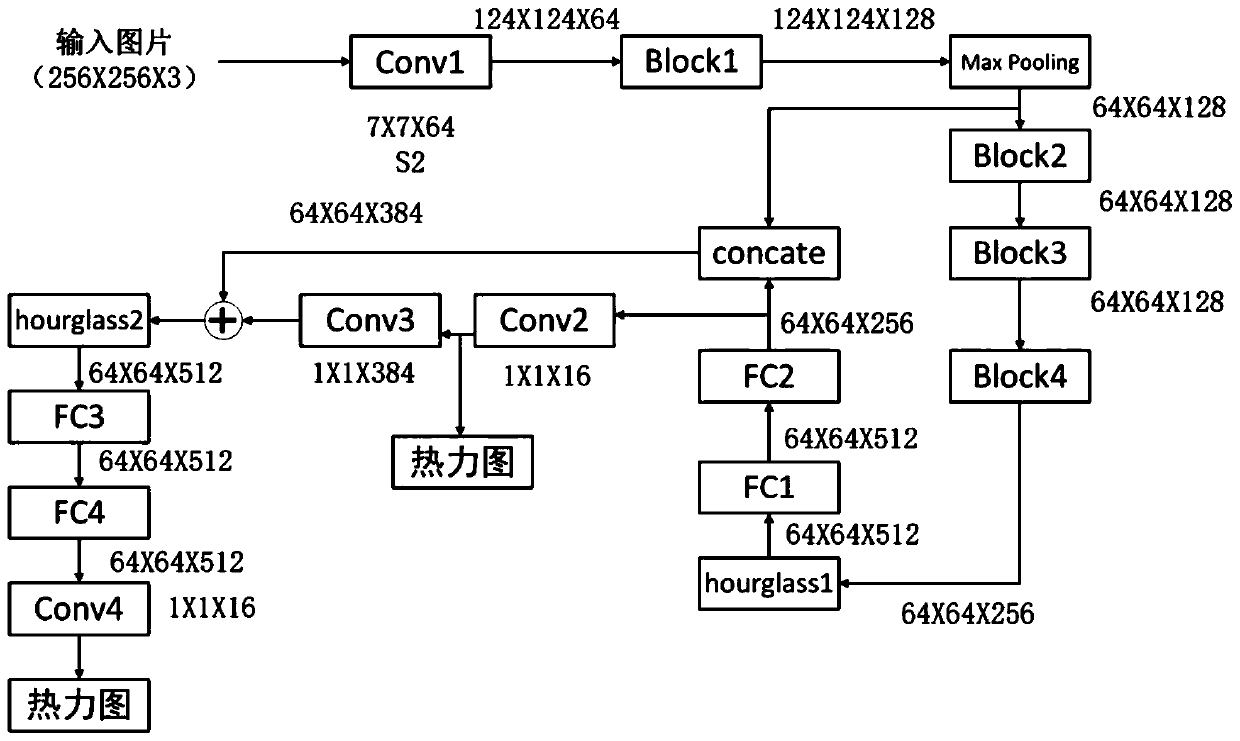

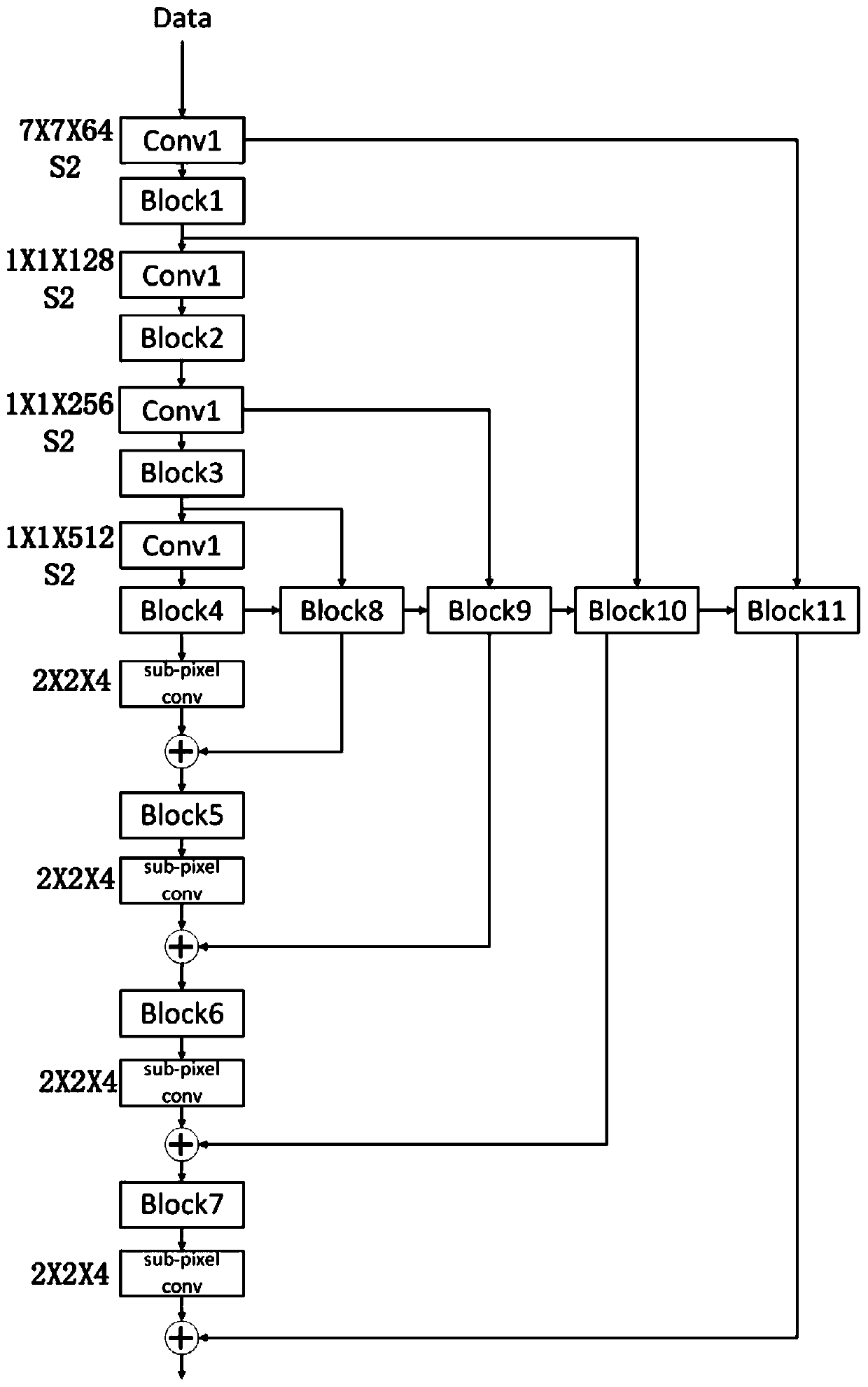

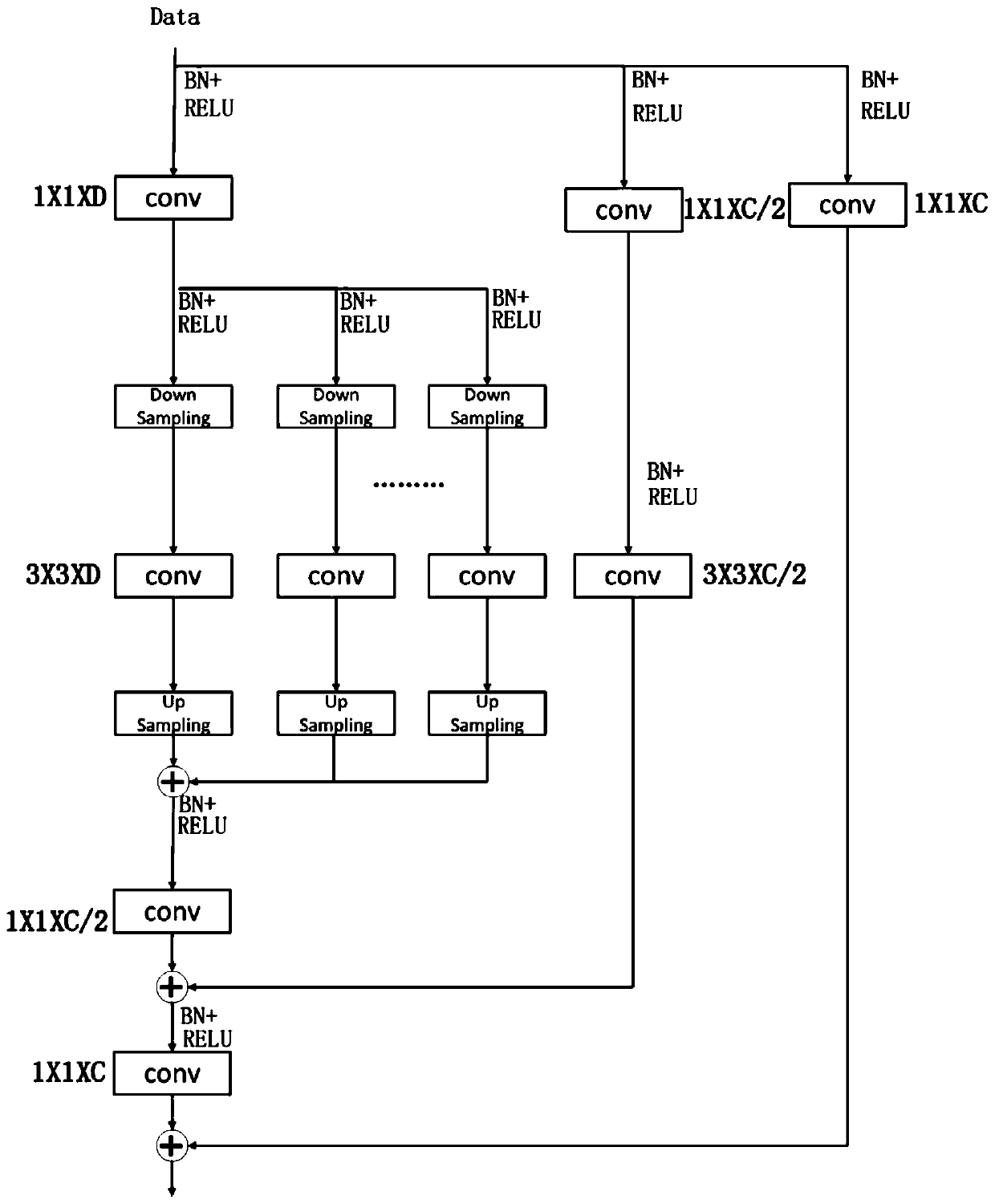

[0051] The method includes two parts: human 2D pose estimation and human 3D pose estimation. Before these two parts are explained, the human body 2D pose estimation model adopted in this embodiment is introduced.
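The two-part structure described in [0051] can be illustrated with a minimal sketch. It assumes that the 3D stage consumes the joints produced by the 2D stage, which this excerpt does not state explicitly. The names `estimate_3d_pose`, `estimate_2d`, `lift_to_3d`, and the placeholder stages are hypothetical and stand in for the models of the embodiment, which are not reproduced here.

```python
from typing import Callable, List, Tuple

Joint2D = Tuple[float, float]          # (x, y) image coordinates of one joint
Joint3D = Tuple[float, float, float]   # (x, y, z) coordinates of one joint


def estimate_3d_pose(
    image: object,
    estimate_2d: Callable[[object], List[Joint2D]],
    lift_to_3d: Callable[[List[Joint2D]], List[Joint3D]],
) -> List[Joint3D]:
    """Run the two stages in sequence (assumed data flow, not from the source)."""
    joints_2d = estimate_2d(image)       # part 1: human 2D pose estimation
    joints_3d = lift_to_3d(joints_2d)    # part 2: human 3D pose estimation
    if len(joints_3d) != len(joints_2d):
        raise ValueError("both stages must describe the same set of joints")
    return joints_3d


# Placeholder stages for illustration only; they are not the patented models.
def dummy_estimate_2d(image: object) -> List[Joint2D]:
    return [(0.0, 0.0), (1.0, 2.0)]


def dummy_lift_to_3d(joints: List[Joint2D]) -> List[Joint3D]:
    # Assign a depth of zero to every joint.
    return [(x, y, 0.0) for x, y in joints]


pose = estimate_3d_pose(None, dummy_estimate_2d, dummy_lift_to_3d)
```

Passing the stages as callables keeps the sketch independent of any particular network, so either stage could be replaced without changing the pipeline function.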

[0052] For a schematic diagram of the framework of the human body 2D pose estimation model provided by the embodiment of the present invention, see Figure 1. The human body 2D pose estimation model includes an attention pyrami...
