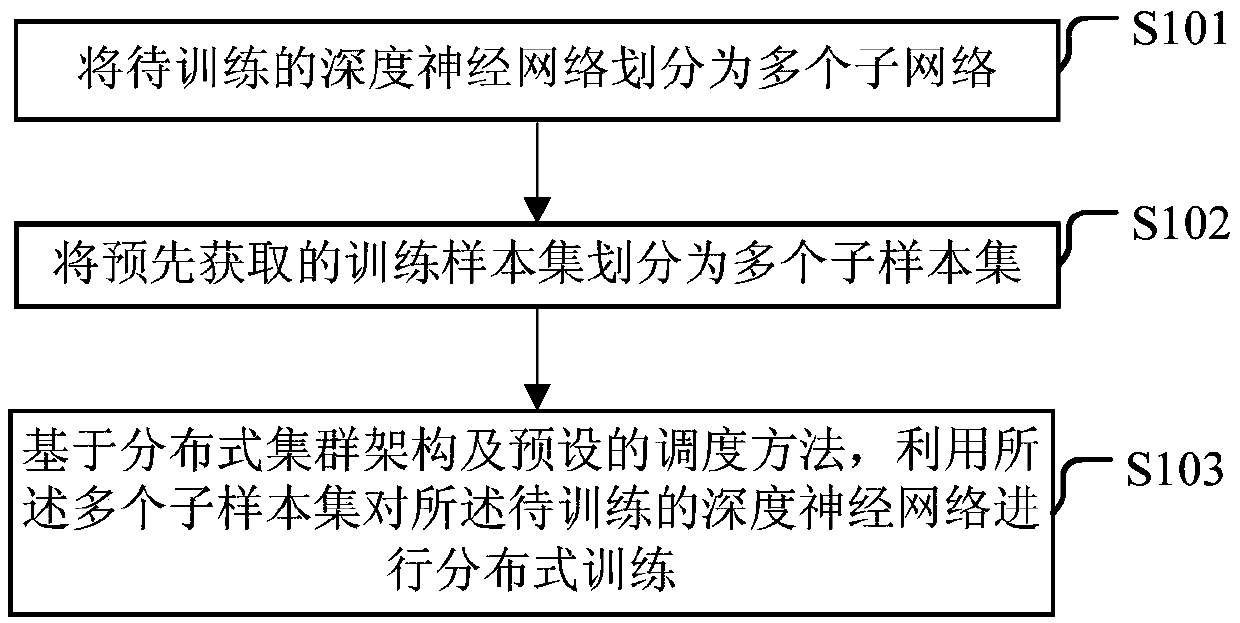

Training method and device for accelerating distributed deep neural networks

This invention concerns deep neural networks and distributed computing technology, with applications in neural learning methods, biological neural network models, and neural architectures. It addresses problems such as the long training time of deep neural networks.


Embodiment approach

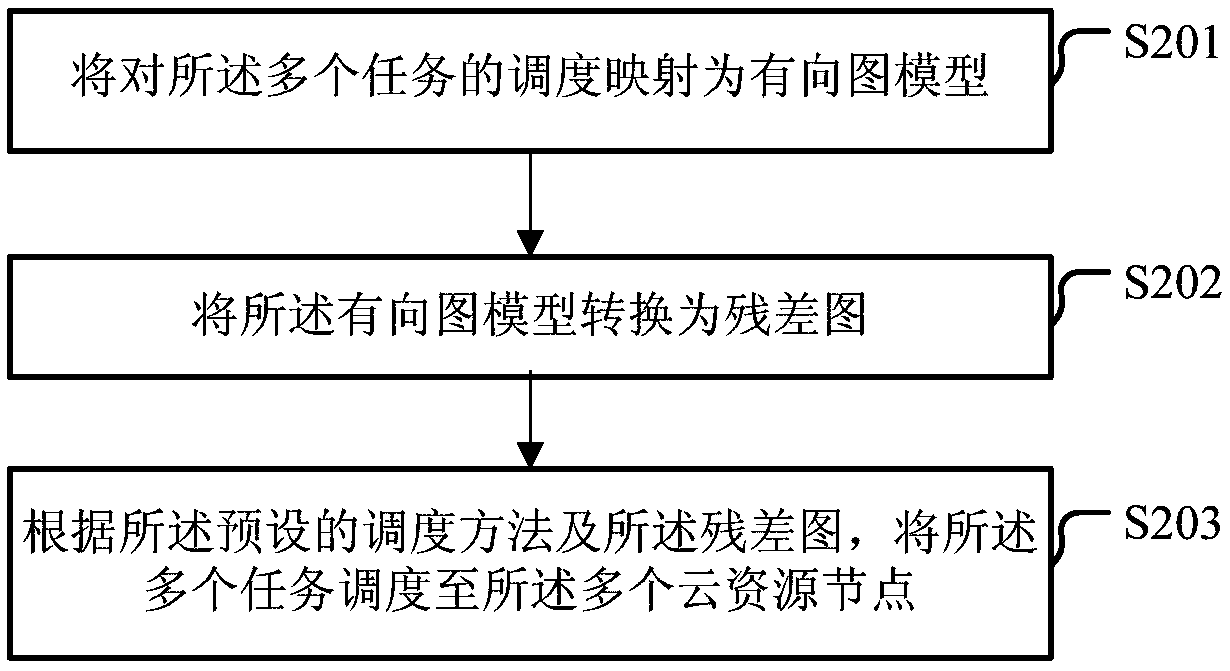

[0164] In one implementation of this embodiment of the present invention, the model mapping unit described above may include:

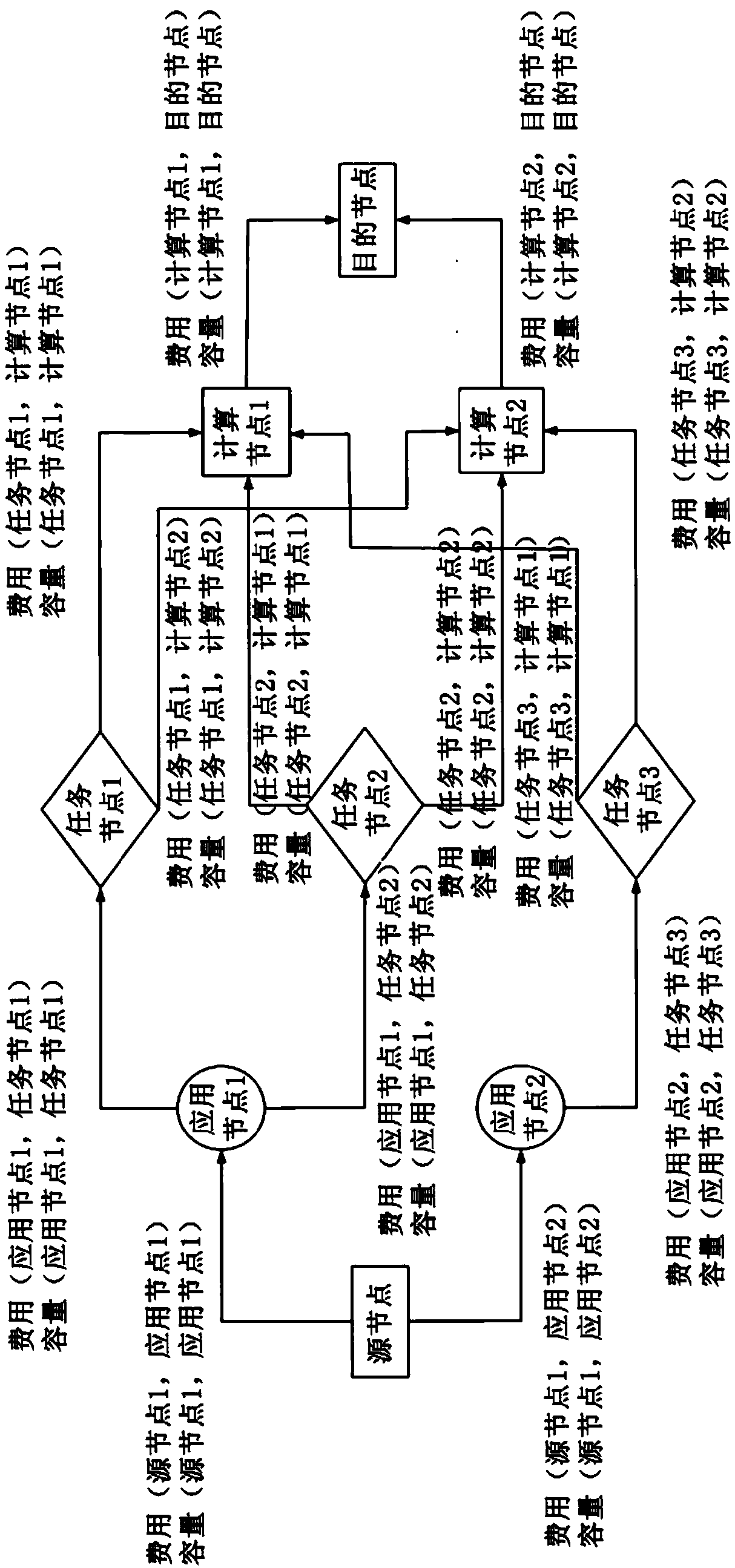

[0165] a model mapping subunit (not shown in Figure 5), configured to map the scheduling of the multiple tasks to the following directed graph model:

[0166] The directed graph model includes source nodes, application nodes, task nodes, computing nodes, and destination nodes, as well as directed edges from source nodes to application nodes, directed edges from application nodes to task nodes, directed edges from task nodes to computing nodes, and directed edges from computing nodes to destination nodes;
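The layered structure of [0166] can be illustrated as a plain edge list in which every edge runs from one layer to the next. This is a minimal sketch, not the patent's implementation: the input format (`apps` mapping each application to its tasks, `candidates` mapping each task to the computing nodes it may run on) and the names `build_scheduling_graph` and `edges_follow_layers` are hypothetical, since the excerpt does not say how task-to-computing-node edges are chosen.

```python
# Layer tags, in the order the directed edges of [0166] run.
LAYERS = ["source", "application", "task", "computing", "destination"]
SOURCE, DEST = ("source",), ("destination",)


def build_scheduling_graph(apps, candidates):
    """Build the layered directed graph as a list of (tail, head) edges.

    Nodes are tuples tagged with their layer, e.g. ("task", "t1"), so that
    names from different layers cannot collide.
    Hypothetical inputs: apps maps application -> list of task names;
    candidates maps task -> list of computing-node names it may run on.
    """
    edges = []
    computing = []  # computing nodes in first-seen order
    for app, tasks in apps.items():
        a = ("application", app)
        edges.append((SOURCE, a))          # source -> application
        for task in tasks:
            t = ("task", task)
            edges.append((a, t))           # application -> task
            for node in candidates[task]:
                c = ("computing", node)
                edges.append((t, c))       # task -> computing node
                if c not in computing:
                    computing.append(c)
    for c in computing:
        edges.append((c, DEST))            # computing node -> destination
    return edges


def edges_follow_layers(edges):
    """Return True if every edge goes from one layer to the next layer."""
    rank = {name: i for i, name in enumerate(LAYERS)}
    return all(rank[head[0]] == rank[tail[0]] + 1 for tail, head in edges)


apps = {"job1": ["t1", "t2"], "job2": ["t3"]}
candidates = {"t1": ["gpu0", "gpu1"], "t2": ["gpu1"], "t3": ["gpu0"]}
edges = build_scheduling_graph(apps, candidates)
print(len(edges), edges_follow_layers(edges))  # 11 True
```

Tagging each node with its layer keeps the edge-direction rule of [0166] checkable with a single rank comparison.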

[0167] Each node object has a potential value, where the node objects include the source node, the application nodes, the task nodes, the computing nodes, and the destination node. A positive potential value indicates that the node object has tasks that can be assigned, and the number of tasks that can be assigned is the potentia...
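One way to read a positive potential as a supply of assignable tasks is to push that supply through the layered graph as flow. The paragraph above is cut off, so how zero or negative potentials are treated is not shown here. This sketch assumes only that the source node's potential equals the total number of tasks, that each computing node accepts a hypothetical number of tasks given in `slots`, and it uses Edmonds-Karp maximum flow, a standard technique swapped in for illustration rather than the solver the patent necessarily uses. The name `max_flow_assign` is hypothetical.

```python
from collections import deque


def max_flow_assign(apps, candidates, slots):
    """Push the source's potential through the layered graph (Edmonds-Karp).

    Assumptions (not from the patent text): the source potential is the total
    number of tasks; slots maps computing node -> tasks it can accept.
    Returns (assignment, leftover): assignment maps task -> computing node,
    leftover is the part of the source potential that could not be assigned.
    """
    src, dst = ("source",), ("destination",)
    cap = {src: {}, dst: {}}  # residual capacities cap[u][v]

    def add_edge(u, v, c):
        cap.setdefault(u, {}).setdefault(v, 0)
        cap[u][v] += c
        cap.setdefault(v, {}).setdefault(u, 0)  # reverse residual edge

    potential = sum(len(tasks) for tasks in apps.values())
    for app, tasks in apps.items():
        add_edge(src, ("app", app), len(tasks))
        for task in tasks:
            add_edge(("app", app), ("task", task), 1)  # each task placed once
            for node in candidates[task]:
                add_edge(("task", task), ("node", node), 1)
    for node, free in slots.items():
        add_edge(("node", node), dst, free)

    flow = 0
    while True:
        # Breadth-first search for a shortest augmenting path.
        parent = {src: None}
        queue = deque([src])
        while queue and dst not in parent:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if dst not in parent:
            break
        # Find the bottleneck along the path, then augment.
        v, bottleneck = dst, float("inf")
        while parent[v] is not None:
            bottleneck = min(bottleneck, cap[parent[v]][v])
            v = parent[v]
        v = dst
        while parent[v] is not None:
            u = parent[v]
            cap[u][v] -= bottleneck
            cap[v][u] += bottleneck
            v = u
        flow += bottleneck

    assignment = {}
    for tasks in apps.values():
        for task in tasks:
            for node in candidates[task]:
                # A saturated task->node edge carries this task's unit of flow.
                if cap[("task", task)][("node", node)] == 0:
                    assignment[task] = node
    return assignment, potential - flow


apps = {"job1": ["t1", "t2"], "job2": ["t3"]}
candidates = {"t1": ["gpu0", "gpu1"], "t2": ["gpu1"], "t3": ["gpu0"]}
slots = {"gpu0": 1, "gpu1": 1}
print(max_flow_assign(apps, candidates, slots))
```

With three tasks but only two free slots, one unit of the source's potential remains unassigned, which is the situation a positive residual potential would signal under this reading.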

