Method for maintaining spatial consistency for multi-person augmented reality interaction

A spatial-consistency technique for augmented reality, applied in the field of computer vision. It addresses the problems of limited tracking range and distance, increased computation, and complicated circuitry, and it maintains spatial consistency with a low computational load.

Embodiment Construction

[0058] Specific embodiments of the present invention are described in further detail below with reference to the drawings and examples.

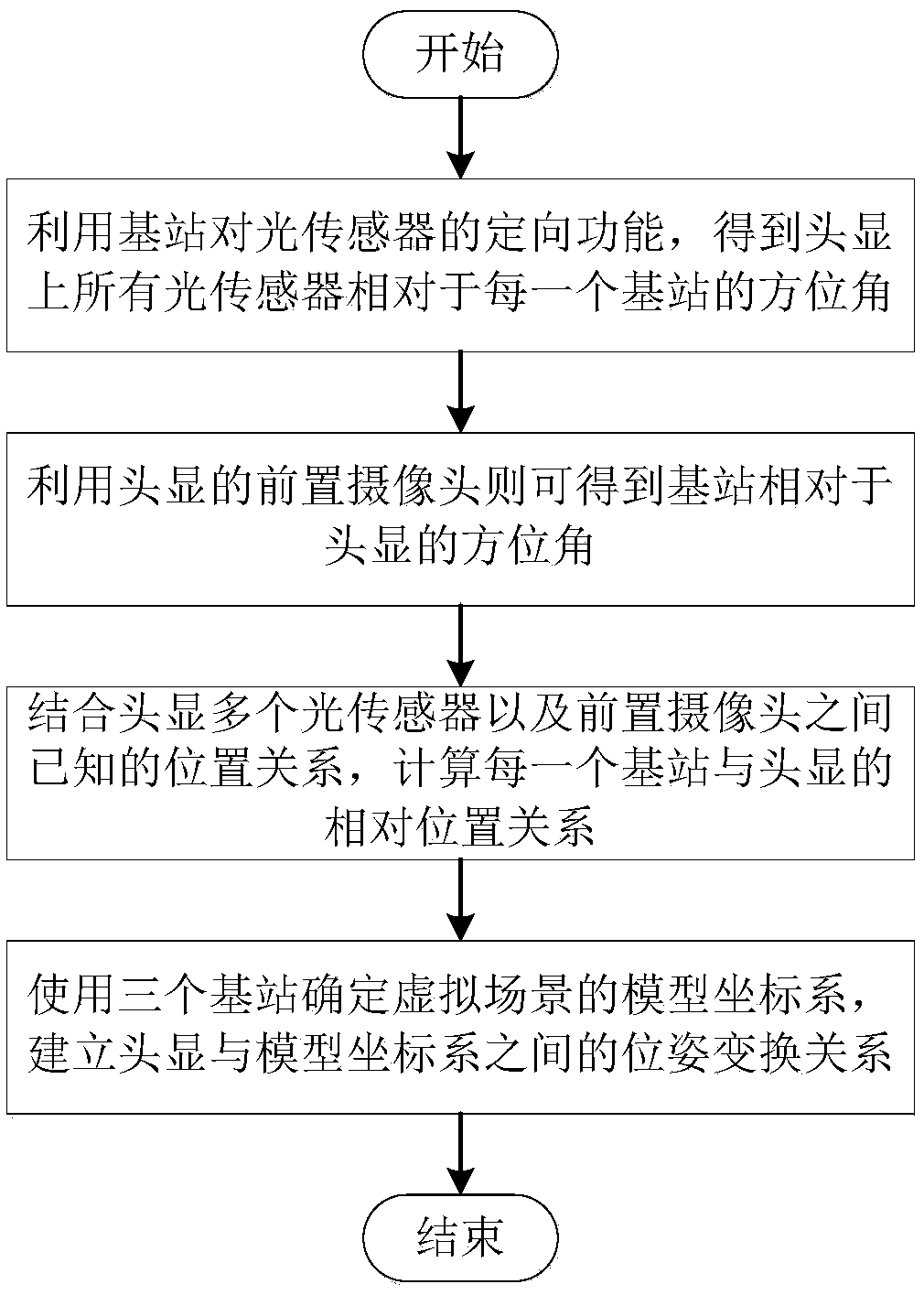

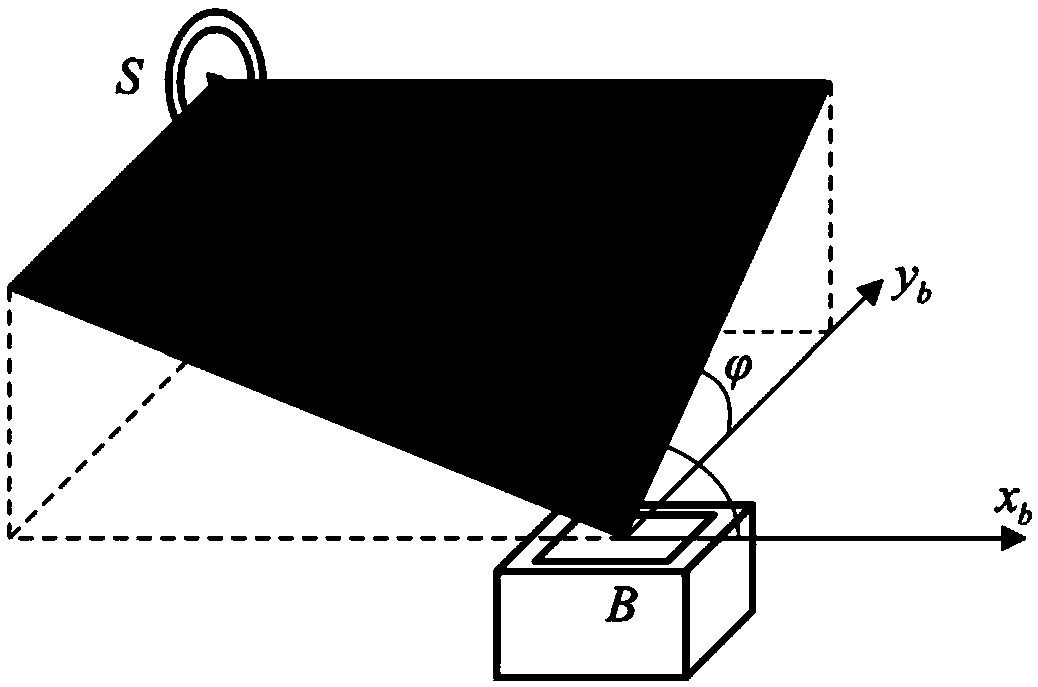

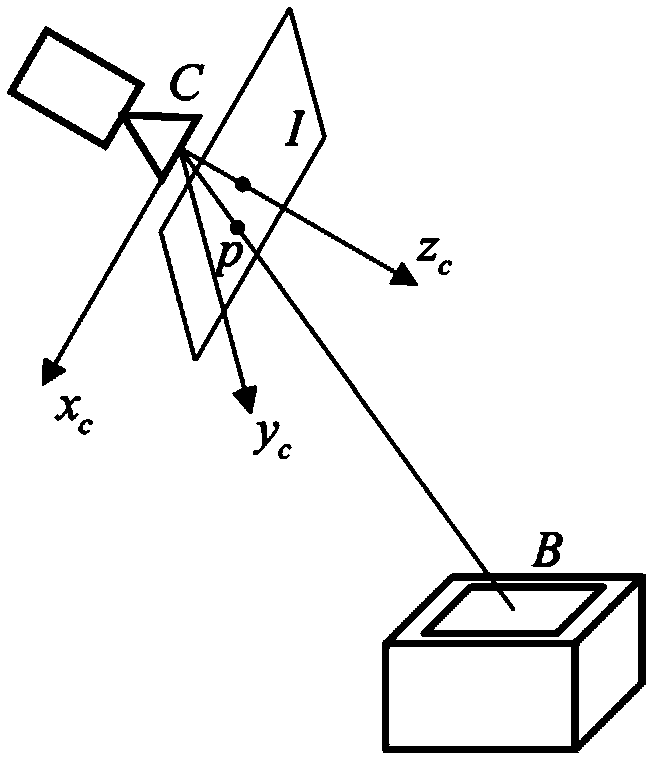

[0059] The method of the present invention proposes a perspective image stitching method based on spatial triangular patch fitting, which approximates the structure of the scene to be photographed with patches. First, using the Lighthouse base station's ability to determine the orientation of each light sensor, together with the head-mounted display's front camera imaging the scanning laser plane emitted by the base station, the method obtains the azimuth angles of all light sensors on the head-mounted display relative to each base station, as well as the relative distance and azimuth angle between the base station and the head-mounted display. Then, using the known positional relationship between the multiple light sensors in the head-mounted display and the front-facing camera, it calculates the relative positional relationship betwe...
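As a rough illustration of how a per-sensor azimuth angle relative to a base station might be obtained and used, the sketch below assumes a Lighthouse-style station that emits a sync pulse and then sweeps a laser plane at a constant rotation rate. The function names, the timing model, and the axis convention (z pointing out of the base station) are assumptions made for this example and are not taken from the patent text.

```python
import math


def sweep_angle(t_hit, t_sync, rotor_period):
    """Angle in radians swept by the laser plane between the sync pulse
    and the moment the plane hits a light sensor.

    Assumes a constant rotation rate with one full turn per rotor_period.
    """
    return 2.0 * math.pi * (t_hit - t_sync) / rotor_period


def bearing_from_sweeps(azimuth, elevation):
    """Unit direction vector from the base station toward a sensor.

    Assumed convention: z points forward out of the base station, and
    azimuth and elevation are the horizontal and vertical sweep angles
    measured from the z axis (both must lie strictly within +/- 90 degrees).
    """
    x = math.tan(azimuth)
    y = math.tan(elevation)
    norm = math.sqrt(x * x + y * y + 1.0)
    return (x / norm, y / norm, 1.0 / norm)


if __name__ == "__main__":
    # Hypothetical timing values, for illustration only.
    period = 1.0 / 60.0
    angle = sweep_angle(t_hit=period / 4.0, t_sync=0.0, rotor_period=period)
    print(angle)
    print(bearing_from_sweeps(math.radians(10.0), math.radians(-5.0)))
```

One such bearing per sensor, combined with the known sensor layout on the head-mounted display, is the kind of input a pose solver would need. The solver itself is outside the scope of this sketch.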
