Toolkit for three-dimensional interactive scenes

A technology for three-dimensional interaction and three-dimensional scenes, applied to the input/output of user/computer interaction, the processing of 3D images, and the input/output of data processing, among other areas.

Embodiment Construction

[0050] To clearly illustrate the technical features of the present invention, the solution is described in further detail below through specific embodiments.

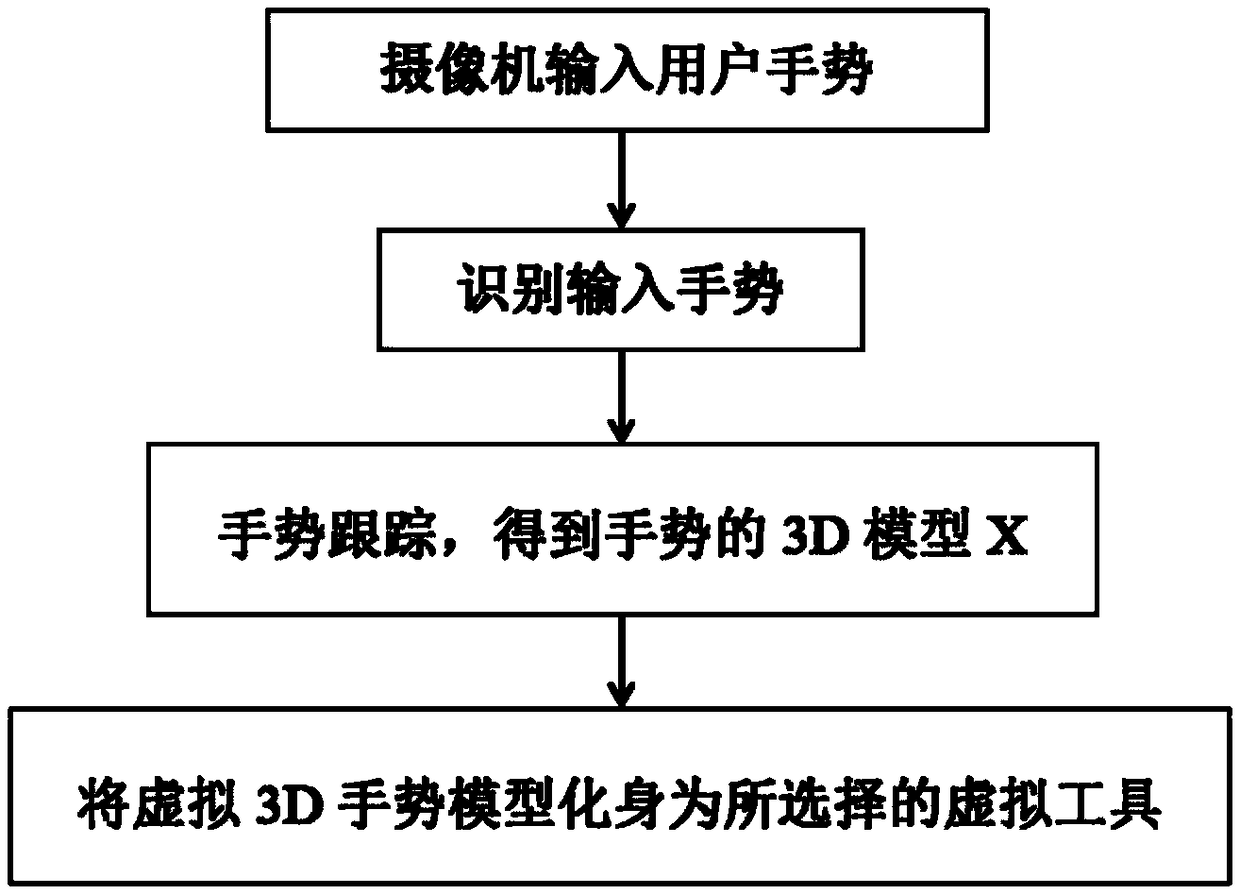

[0051] As shown in Figure 1, a toolkit for 3D interactive scenes is built through the following steps:

[0052] a. Build the tool set for 3D scene interaction: use software such as OpenGL to generate the tools used to interact with the 3D scene;

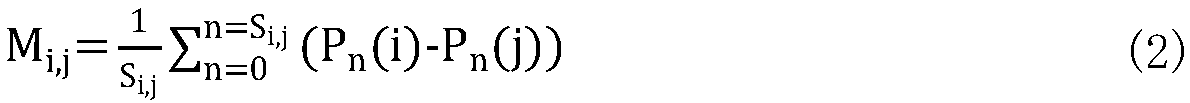

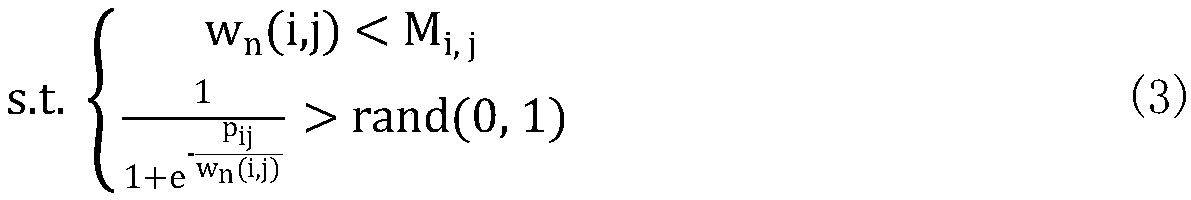

[0053] b. Gesture recognition. The gesture recognition method described in this embodiment is a CNN-SVM hybrid model gesture recognition method based on an error correction strategy. The method first preprocesses the collected gesture data, then automatically extracts features and performs predictive classification to obtain a classification result, and finally uses the error correction strategy to correct that classification result. The CNN-SVM hybrid model gesture recognition method based on the error correction strategy comprises the following steps: ...
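
The four stages named in step b (preprocess, extract features, classify, correct) can be sketched as a small pipeline. This is only an illustrative outline under stated assumptions, not the method of this embodiment: the detailed steps are not reproduced above, so the feature extractor and classifier are passed in as plain callables standing in for the CNN and the SVM, and the correction rule shown here (a majority vote over the most recent raw labels) is a swapped-in placeholder for the error correction strategy. The names `preprocess`, `GesturePipeline`, and `window` are hypothetical.

```python
from collections import Counter, deque
from typing import Callable, Deque, List, Sequence


def preprocess(sample: Sequence[float]) -> List[float]:
    """Min-max scale one raw gesture sample to [0, 1] (assumed preprocessing)."""
    lo, hi = min(sample), max(sample)
    span = hi - lo
    # A constant sample has no range to scale, so map it to zeros.
    return [(v - lo) / span if span else 0.0 for v in sample]


class GesturePipeline:
    """Preprocess -> extract features -> classify -> correct."""

    def __init__(
        self,
        extract: Callable[[List[float]], List[float]],  # stand-in for the CNN
        classify: Callable[[List[float]], str],         # stand-in for the SVM
        window: int = 5,
    ) -> None:
        self.extract = extract
        self.classify = classify
        # Raw (uncorrected) labels of the most recent samples.
        self.recent: Deque[str] = deque(maxlen=window)

    def predict(self, sample: Sequence[float]) -> str:
        raw_label = self.classify(self.extract(preprocess(sample)))
        self.recent.append(raw_label)
        # Assumed correction rule: return the most common recent raw label,
        # so a single outlier prediction is overridden by its neighbours.
        return Counter(self.recent).most_common(1)[0][0]


if __name__ == "__main__":
    # Toy stand-ins so the sketch runs without any trained model.
    pipeline = GesturePipeline(
        extract=lambda x: [sum(x) / len(x)],
        classify=lambda f: "open" if f[0] > 0.5 else "fist",
        window=3,
    )
    for sample in ([0, 10, 10, 10], [0, 10, 10, 10], [0, 0, 0, 10]):
        print(pipeline.predict(sample))
```

Keeping the extractor and classifier as injected callables means a trained CNN and SVM could replace the toy lambdas without changing the pipeline, and the correction step could be replaced by the actual strategy once its steps are known.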
