Speech emotion recognition method based on extracting deep spatial attention features from spectrograms

A speech emotion recognition technology based on deep feature extraction. It is applicable to speech recognition, speech analysis, and character and pattern recognition, and can address problems such as the neglect of correlations between latent properties.
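The method takes a spectrogram of the speech signal as its input. This excerpt does not give the spectrogram parameters, so the following is only a minimal sketch of a log-power spectrogram. The function name `log_power_spectrogram`, the Hann window, the 256-sample frame and the 128-sample hop are all assumptions for illustration, not settings from the patent. A direct DFT keeps the sketch self-contained; a practical implementation would use an FFT.

```python
import cmath
import math


def hann(n):
    # Periodic Hann window of length n (assumed window choice).
    return [0.5 - 0.5 * math.cos(2 * math.pi * k / n) for k in range(n)]


def log_power_spectrogram(signal, frame_len=256, hop=128, eps=1e-10):
    """Hypothetical helper: return a list of frames, each holding
    frame_len // 2 + 1 log-power values (one per frequency bin)."""
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        # Apply the window to the current frame.
        seg = [signal[start + k] * window[k] for k in range(frame_len)]
        row = []
        for f in range(frame_len // 2 + 1):
            # Direct DFT of bin f: O(N^2), fine for illustration only.
            acc = sum(
                seg[k] * cmath.exp(-2j * math.pi * f * k / frame_len)
                for k in range(frame_len)
            )
            # Log power; eps avoids log(0) for empty bins.
            row.append(math.log(abs(acc) ** 2 + eps))
        frames.append(row)
    return frames
```

For a 1 kHz tone sampled at 16 kHz, each frame's energy concentrates in bin 16 (1000 / 16000 × 256). The time-frequency matrix produced this way is the kind of input a convolutional front end would consume.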


Embodiment Construction

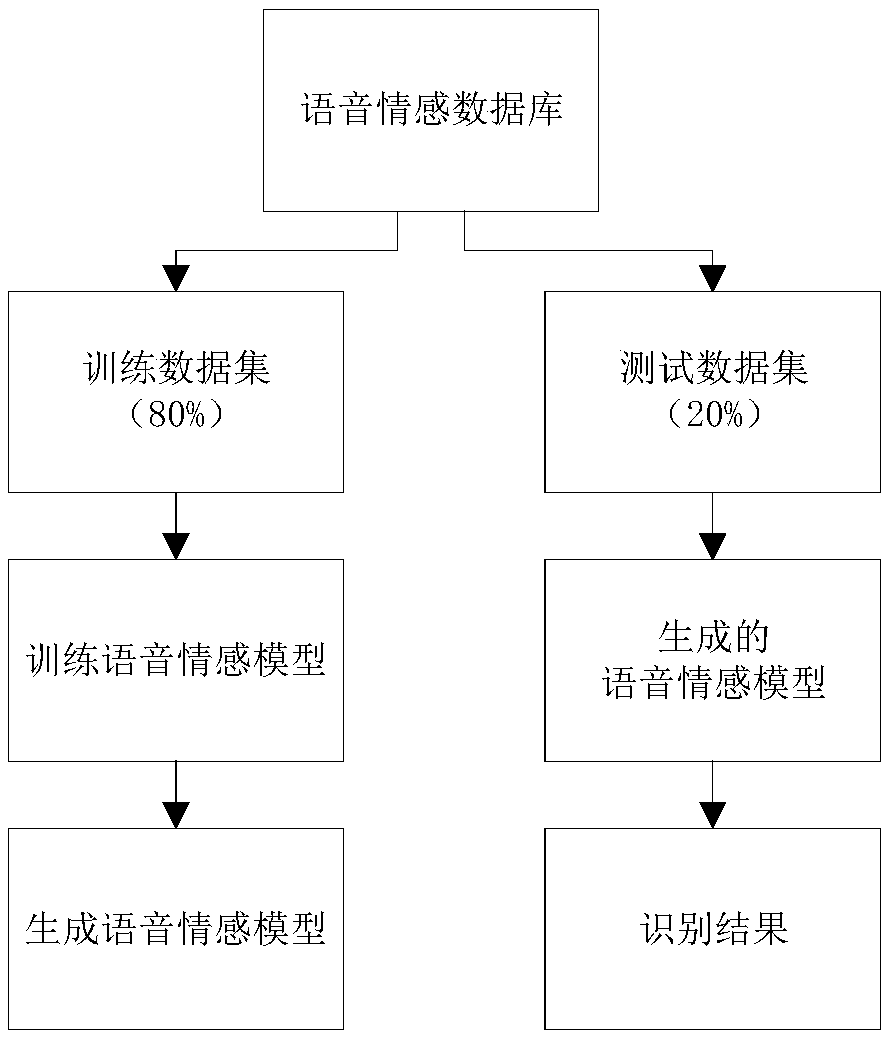

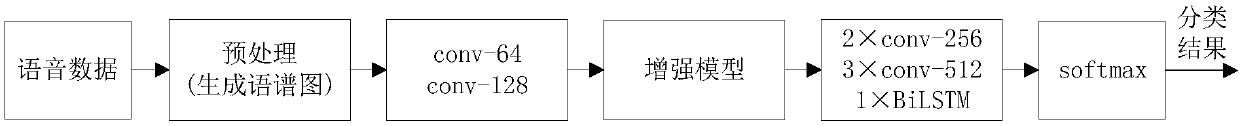

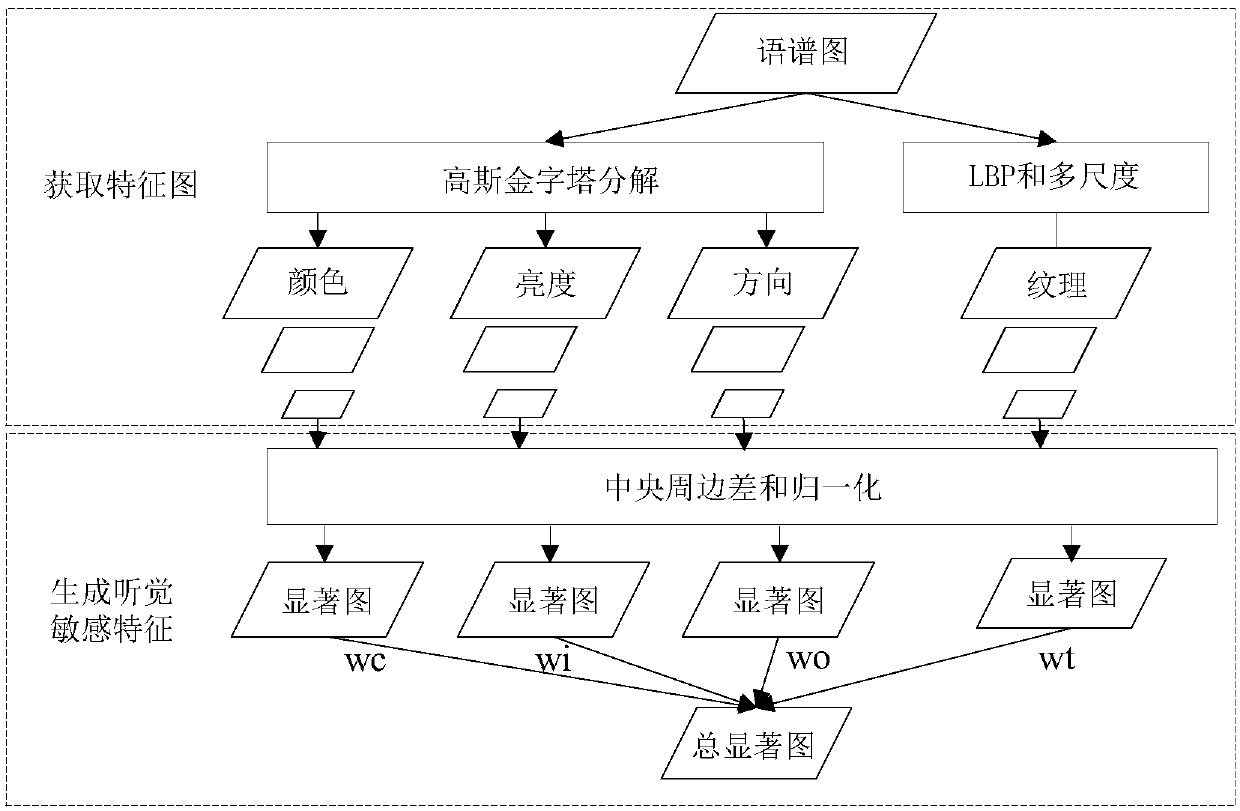

[0074] The embodiments of the present invention will be described in detail below in conjunction with specific embodiments and drawings.

[0075] Before describing the specific technical solution of the present invention, we first define some abbreviations and symbols and introduce the system model. The basic experimental settings are as follows: the learning rate l is 0.001, and the input batch B is 400 epochs. The number of network layers is the one that gives the best performance. The convolutional part is based on VGGNet, and its specific layer settings were determined through multiple experiments; see Table 1 for details. The F_CRNN network uses random initialization for the model weights and biases. For convenience, the hybrid neural network (CRNN) referred to below is the optimized network. All algorithms use supervised training, the category labels of the data are used only during training, and the experimental results are presented in t...
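This paragraph does not spell out how the spatial attention named in the title is computed. As a point of reference only, one common formulation scores each time-frequency position of a convolutional feature map, normalizes the scores with a softmax over all positions, and pools the features with those weights. The sketch assumes that formulation; `spatial_attention_pool`, the scoring vector `w` and the bias `b` are hypothetical, and the patented F_CRNN may compute its attention differently.

```python
import math


def spatial_attention_pool(feature_map, w, b=0.0):
    """Hypothetical attention-weighted pooling of a C x H x W feature map.

    feature_map: list of C channels, each an H x W list of lists.
    w: length-C scoring vector (a learned parameter in a real model).
    Returns (pooled, alpha): pooled is a length-C vector and
    alpha is the H x W attention map, which sums to 1.
    """
    C = len(feature_map)
    H = len(feature_map[0])
    W = len(feature_map[0][0])
    # Score each spatial position from its C-dimensional feature vector.
    scores = [
        [sum(w[c] * feature_map[c][h][x] for c in range(C)) + b for x in range(W)]
        for h in range(H)
    ]
    # Softmax over all H*W positions; subtract the max for numerical stability.
    m = max(max(row) for row in scores)
    exps = [[math.exp(s - m) for s in row] for row in scores]
    z = sum(sum(row) for row in exps)
    alpha = [[e / z for e in row] for row in exps]
    # Attention-weighted sum of the feature vectors over all positions.
    pooled = [
        sum(alpha[h][x] * feature_map[c][h][x] for h in range(H) for x in range(W))
        for c in range(C)
    ]
    return pooled, alpha
```

In this sketch, an all-zero `w` makes the weights uniform, so the result reduces to global average pooling; a learned `w` lets the network emphasize the time-frequency regions that carry emotional cues.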
