An image subtitle generation method based on MLL and ASCA-FR

A method based on ASCA-FR and subtitle technology, applied in character and pattern recognition, instruments, and computer components. It addresses subtitle descriptions that have low accuracy, poorly reflect the image content, and are thin. It produces subtitles that are accurate, smooth, and grammatical, with full training-process results.


Embodiment Construction

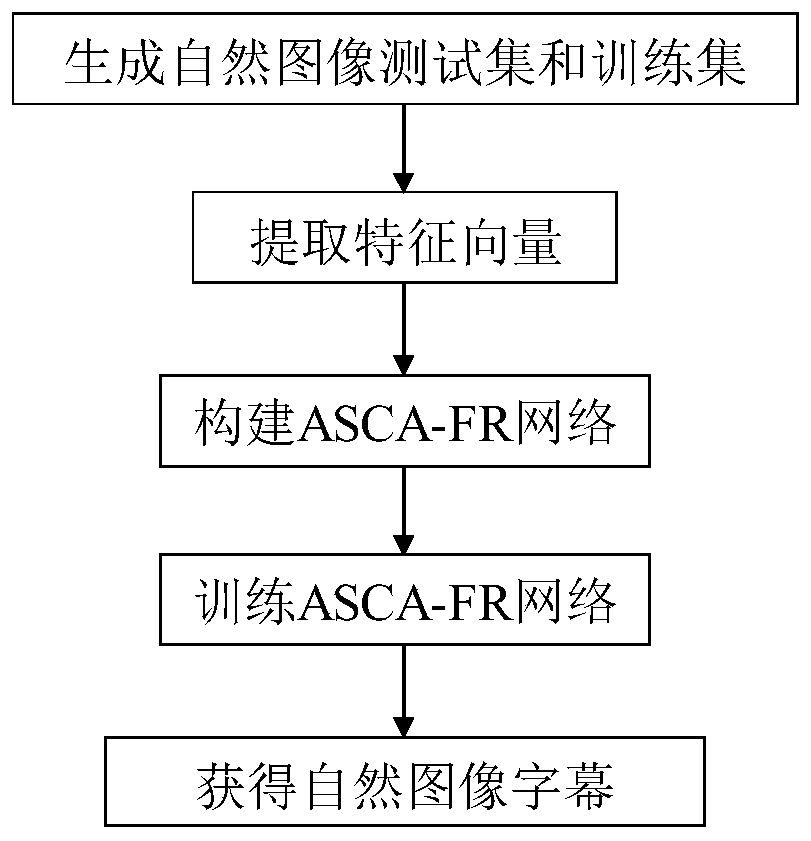

[0040] The present invention is described in further detail below with reference to Figure 1.

[0041] Referring to Figure 1, the implementation steps of the present invention are described in further detail.

[0042] Step 1: Generate the natural image test set and training set.

[0043] At least 10,000 natural images are randomly selected from the Internet or public image datasets to form a natural image collection.

[0044] No more than 5000 natural images are randomly selected from the natural image collection to form a natural image test set.
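The selection in [0043] and [0044] amounts to a random split of the collection. The following is a minimal sketch, assuming images are identified by file names and using Python's `random` module; the function name `split_collection` and its parameters are hypothetical, not taken from the patent.

```python
import random


def split_collection(images, test_size=5000, min_total=10000, seed=None):
    """Randomly split a natural image collection into a test set and the remainder.

    Hypothetical helper mirroring Step 1: the collection must hold at least
    `min_total` images, and the test set may hold no more than 5000 images.
    """
    if len(images) < min_total:
        raise ValueError(f"collection needs at least {min_total} images, got {len(images)}")
    if not 0 < test_size <= 5000:
        raise ValueError("test_size must be between 1 and 5000")
    rng = random.Random(seed)  # seeded RNG so the split is reproducible
    shuffled = list(images)    # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    test_set = shuffled[:test_size]
    remaining = shuffled[test_size:]  # these images are captioned in the next step
    return test_set, remaining


images = [f"img_{i:05d}.jpg" for i in range(12000)]  # hypothetical file names
test_set, remaining = split_collection(images, seed=0)
print(len(test_set), len(remaining))  # 5000 7000
```

Shuffling a copy and slicing guarantees that the test set and the remaining images are disjoint and together cover the whole collection.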

[0045] Assign English label subtitles to each remaining natural image in the collection, and delete every label subtitle longer than L words, where L is the preset maximum number of English words in a subtitle. The label subtitles that remain after deletion, together with their corresponding natural images, constitute the natural image training set.
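The length filter in [0045] can be sketched as follows. This assumes each image may carry several English captions and that words are counted by whitespace splitting; the function name `build_training_set` and the data layout are hypothetical.

```python
def build_training_set(captions_by_image, max_words):
    """Pair each image with its English label subtitles, dropping long ones.

    Hypothetical helper: captions_by_image maps an image identifier to a list
    of English captions. A caption is kept only if its whitespace-separated
    word count is <= max_words (the L of [0045]). Images whose captions are
    all removed do not appear in the result.
    """
    training_set = []
    for image, captions in captions_by_image.items():
        for caption in captions:
            if len(caption.split()) <= max_words:
                training_set.append((image, caption))
    return training_set


captions = {
    "img_a.jpg": [
        "a dog runs on the grass",
        "a brown dog is running across a wide green field in the park",
    ],
    "img_b.jpg": ["two people ride bikes"],
}
print(build_training_set(captions, max_words=8))
# [('img_a.jpg', 'a dog runs on the grass'), ('img_b.jpg', 'two people ride bikes')]
```

Here the 13-word caption exceeds L = 8 and is dropped, while the shorter captions and their images enter the training set.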

[0046] Set the English end character to .
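An end character typically lets a sequential caption decoder learn where a subtitle stops. The sketch below assumes the end character is appended to each tokenized caption. Because the specific character is not reproduced here, it is passed in as a parameter; the function name `tokenize_with_end` and the `"<END>"` value in the usage line are placeholders, not the patent's choices.

```python
def tokenize_with_end(caption, end_token):
    """Split an English caption into words and append the end character.

    end_token stands for whatever end character the method sets; it is
    passed in rather than hard-coded here.
    """
    return caption.split() + [end_token]


tokens = tokenize_with_end("two people ride bikes", "<END>")  # "<END>" is a placeholder
print(tokens)  # ['two', 'people', 'ride', 'bikes', '<END>']
```

With the length filter of [0045] applied first, each resulting token sequence holds at most L + 1 tokens.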

[0047] The Englis...
